Some HA Questions: Memory Error, Parallel Migrate, HA for all VMs

-

Hello Everyone,

After enabling HA, we saw that with two VMs, one with HA disabled and the other in restart mode, we got a "HOST_NOT_ENOUGH_FREE_MEMORY" error. After setting the disabled one to restart as well, the error no longer appeared.

We don't understand why we have to enable HA restart mode on each VM one by one. I mean, if we have configured HA, shouldn't all VMs be protected? I would expect that to be the default behaviour.

When soft-rebooting a host, I couldn't find where to adjust the number of parallel VM migrations; they migrate one by one.

It looks like balance mode does not act until the CPU and memory thresholds are reached, right?

Thanks

-

What does the memory utilization look like on all the hosts in the pool?

How much max RAM did you allocate to the VMs that have the restart option enabled? About the parallel migration, I think you are right: it will migrate the VMs one by one to another host in the pool. I haven't found any setting to change this.
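Both questions above can be answered from dom0 with the `xe` CLI. The following is a minimal sketch, assuming it is run on a pool member where `xe` is available; the function name `show_ha_memory_overview` is made up for illustration, and memory values are reported in bytes.

```shell
# Sketch only: the function name is hypothetical.
# Assumes the xe CLI is available (run in dom0 of a pool member).
show_ha_memory_overview() {
    # Free and total memory per host, in bytes.
    xe host-list params=name-label,memory-free,memory-total

    # HA restart priority and static maximum memory of every guest VM
    # (the control domain is filtered out).
    xe vm-list is-control-domain=false \
        params=name-label,ha-restart-priority,memory-static-max

    # Number of host failures the current HA configuration can tolerate.
    xe pool-ha-compute-max-host-failures-to-tolerate
}
```

Calling `show_ha_memory_overview` and comparing the `memory-static-max` of the VMs marked `restart` with each host's `memory-free` should give a rough idea of whether a restart plan can fit.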

-

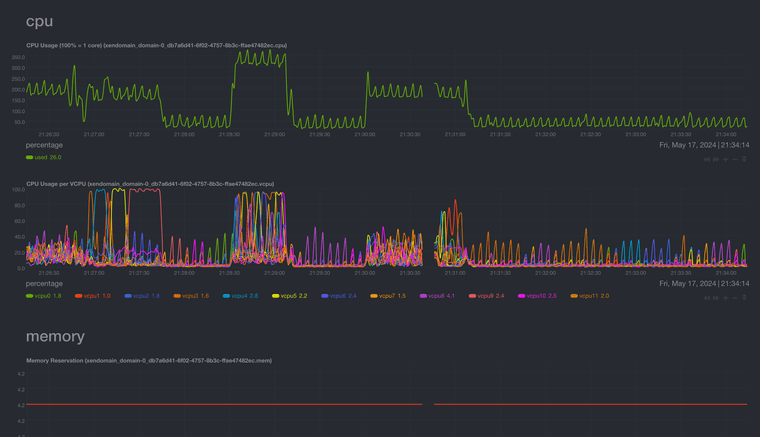

@vahric-0 VM migration takes a lot of resources; be sure to give dom0 plenty of VCPUs and RAM. You can run top or xentop from the CLI to see the impact during migration and watch for CPU and memory saturation as a sign that dom0 does not have adequate resources.

-

@nikade In XenServer at least, I thought the limit was three VMs being able to be migrated in parallel, according to this:

https://docs.xenserver.com/en-us/xencenter/current-release/vms-relocate.html

-

@tjkreidl Yeah, that seems to be correct. I would assume XCP-ng has the same baseline.

-

@nikade And if you queue up more than three migrations, in my experience they are processed so that no more than three run concurrently.

-

@tjkreidl Yeah, that's good to hear.

Any idea if there's a max number of migrations you can queue?

-

@nikade No, but I've done a lot, probably one or two dozen, when doing updates to help speed up the evacuation of hosts. You can check the queue with `xe task-list` to see what's being processed or queued.

-

@tjkreidl Cool, I rarely migrate them manually, so I wouldn't know.
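The `xe task-list` check mentioned above could be narrowed to unfinished tasks roughly like this. It is only a sketch: the function name `list_pending_tasks` is made up, and the `status=pending` filter and the `params=` field names assume the usual `xe *-list` filtering conventions apply to tasks.

```shell
# Sketch only: the function name is hypothetical.
# Lists xapi tasks that are still pending (running or queued),
# showing only a few fields per task.
list_pending_tasks() {
    xe task-list status=pending params=uuid,name-label,status,progress
}
```

Running `list_pending_tasks` repeatedly during a host evacuation should show the queued migrations draining.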

-

@nikade @tjkreidl

Increasing dom0 memory had no effect.

Increasing dom0 vCPUs had no effect (not all of its vCPUs were in use anyway, but I wanted to try).

I ran stress-ng to load the VMs' memory, but it had no effect.

No pinning or NUMA configuration is needed, because it is a single CPU with an L3 cache shared by all cores.

MTU size has no effect either; it works the same with MTU 1500 and 9000.

I also found and changed tcp_limit_output_bytes, but it did not help.

The only thing that makes a difference is changing the hardware:

My Intel servers are Intel(R) Xeon(R) CPU E5-2620 v3 @ 2.40GHz --> 0.9 Gbit/s per migration

My AMD servers are AMD EPYC 7502P 32-Core Processor --> 1.76 Gbit/s per migration

Do you have any advice?
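To put the per-migration throughput figures above in perspective, here is a small arithmetic sketch. The 16 GiB VM size is a hypothetical example, not a value from this thread, and the result is only a lower bound, since live migration also re-sends pages that are dirtied during the copy.

```shell
# Sketch only: estimate_migration_seconds is a hypothetical helper.
# Arguments: VM memory in GiB, observed throughput in Gbit/s.
# Prints a lower-bound copy time in seconds (one pass over memory).
estimate_migration_seconds() {
    awk -v gib="$1" -v gbps="$2" 'BEGIN {
        bits = gib * 1024 * 1024 * 1024 * 8      # GiB -> bits
        printf "%.1f\n", bits / (gbps * 1000000000)
    }'
}

# Hypothetical 16 GiB VM at the two rates reported above.
estimate_migration_seconds 16 0.9     # Intel E5-2620 v3 rate
estimate_migration_seconds 16 1.76    # AMD EPYC 7502P rate
```

At these rates the difference between the two platforms is roughly a factor of two in copy time for the same VM.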

-

@vahric-0 Are you using shared storage? Your numbers look like you are migrating storage as well. I'm seeing much higher numbers within our pools, and they have the same CPU (dual-socket Intel(R) Xeon(R) CPU E5-2620 v3 @ 2.40GHz).

-

@nikade No storage migration; they all sit on shared storage.

-

@vahric-0 That's strange. Are all the XCP-ng hosts on the same network as well? Not different VLANs or subnets?

-

@nikade Yes, the same; they are all in the same broadcast domain.

-

@vahric-0 Then I am out of suggestions, sorry.