Live Migrate fails with `Failed_to_suspend` error

-

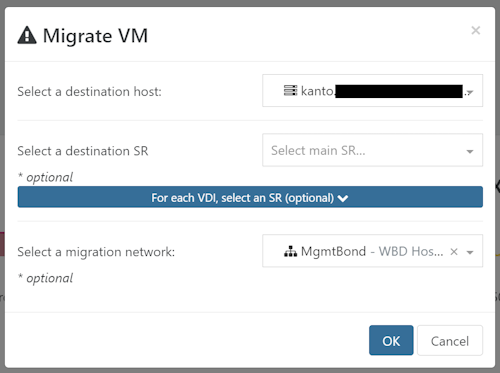

I've run into an error a few times now when attempting to live-migrate VMs within a pool.

The migrate process seems to start successfully and sends the Memory to the target Host, however the process gets stuck at around the 85% mark and never completes. Ultimately the process fails with the error:

Xenops_interface.Xenopsd_error([S(Failed_to_suspend);The hosts have shared storage so the disk is not being migrated.

The hosts are connected with a 10Gig management network.

The Control domain memory is set to 8GiB (and doesn't seem to be running out).

Everything is up to date, all patches were installed just yesterday (manually since we can't use the rolling pool upgrade due to this issue - and rebooted).

Running Xen Orchestra v5.97.

VMs are running Cloudlinux 8 or 9 with Management Agent 7.30.0-11 or 12.This has happened with multiple different VMs on multiple hosts (in this one pool). The only similarity that I can see is that they all have large disks (over 1TB) - but I can't see how this is relevant given that the disk contents are not being migrated. VMs with a smaller disk seem to work reliably.

My other theory is that something running on the VMs is preventing the suspend operation (ie. an active NFS connection or other running process). Is this possible? Can a running process prevent a suspend operation?

{ "id": "0m04goylw", "properties": { "method": "vm.migrate", "params": { "vm": "121d53f3-71e7-be03-f58d-8eacf7bb55d1", "migrationNetwork": "a189fcf5-2bc1-a8e6-52fe-c8a88b9c4a57", "targetHost": "349dbfa5-184b-416c-9fc7-2fe68cff9ac3" }, "name": "API call: vm.migrate", "userId": "b03288de-9b3b-4f5f-84f2-a8079a601de7", "type": "api.call" }, "start": 1724281513268, "status": "failure", "updatedAt": 1724282833944, "end": 1724282833944, "result": { "code": "INTERNAL_ERROR", "params": [ "Xenops_interface.Xenopsd_error([S(Failed_to_suspend);[S(121d53f3-71e7-be03-f58d-8eacf7bb55d1);F(1200)]])" ], "task": { "uuid": "3a3b04f8-2272-e1d5-45a4-f8b7aab31915", "name_label": "Async.VM.migrate_send", "name_description": "", "allowed_operations": [], "current_operations": {}, "created": "20240821T23:05:13Z", "finished": "20240821T23:27:13Z", "status": "failure", "resident_on": "OpaqueRef:06124cbc-db3e-454f-815b-55744f966d70", "progress": 1, "type": "<none/>", "result": "", "error_info": [ "INTERNAL_ERROR", "Xenops_interface.Xenopsd_error([S(Failed_to_suspend);[S(121d53f3-71e7-be03-f58d-8eacf7bb55d1);F(1200)]])" ], "other_config": {}, "subtask_of": "OpaqueRef:NULL", "subtasks": [], "backtrace": "(((process xenopsd-xc)(filename xc/xenops_server_xen.ml)(line 2545))((process xenopsd-xc)(filename xc/domain.ml)(line 1658))((process xenopsd-xc)(filename xc/emu_manager.ml)(line 239))((process xenopsd-xc)(filename xc/emu_manager.ml)(line 244))((process xenopsd-xc)(filename lib/xapi-stdext-pervasives/pervasiveext.ml)(line 24))((process xenopsd-xc)(filename lib/xapi-stdext-pervasives/pervasiveext.ml)(line 35))((process xenopsd-xc)(filename lib/xapi-stdext-pervasives/pervasiveext.ml)(line 24))((process xenopsd-xc)(filename lib/xapi-stdext-pervasives/pervasiveext.ml)(line 35))((process xenopsd-xc)(filename xc/domain.ml)(line 1770))((process xenopsd-xc)(filename xc/domain.ml)(line 1763))((process xenopsd-xc)(filename xc/xenops_server_xen.ml)(line 2536))((process xenopsd-xc)(filename lib/xenops_server.ml)(line 2140))((process xenopsd-xc)(filename list.ml)(line 121))((process xenopsd-xc)(filename lib/xenops_server.ml)(line 2133))((process xenopsd-xc)(filename lib/xenops_server.ml)(line 2458))((process xenopsd-xc)(filename lib/xenops_server.ml)(line 2470))((process xenopsd-xc)(filename lib/xapi-stdext-pervasives/pervasiveext.ml)(line 24))((process xenopsd-xc)(filename lib/xapi-stdext-pervasives/pervasiveext.ml)(line 35))((process xenopsd-xc)(filename lib/xapi-stdext-pervasives/pervasiveext.ml)(line 24))((process xenopsd-xc)(filename lib/xapi-stdext-pervasives/pervasiveext.ml)(line 35))((process xenopsd-xc)(filename stunnel/stunnel.ml)(line 349))((process xenopsd-xc)(filename lib/xenops_server.ml)(line 2393))((process xenopsd-xc)(filename lib/xenops_server.ml)(line 2834))((process xenopsd-xc)(filename lib/xenops_server.ml)(line 2844))((process xenopsd-xc)(filename lib/xenops_server.ml)(line 2864))((process xenopsd-xc)(filename lib/task_server.ml)(line 177))((process xapi)(filename ocaml/xapi/xapi_xenops.ml)(line 3302))((process xapi)(filename lib/xapi-stdext-pervasives/pervasiveext.ml)(line 24))((process xapi)(filename lib/xapi-stdext-pervasives/pervasiveext.ml)(line 35))((process xapi)(filename ocaml/xapi/xapi_xenops.ml)(line 3470))((process xapi)(filename ocaml/xapi/xapi_vm_migrate.ml)(line 219))((process xapi)(filename ocaml/xapi/xapi_vm_migrate.ml)(line 225))((process xapi)(filename ocaml/xapi/xapi_vm_migrate.ml)(line 249))((process xapi)(filename ocaml/xapi/xapi_vm_migrate.ml)(line 1433))((process xapi)(filename lib/xapi-stdext-pervasives/pervasiveext.ml)(line 24))((process xapi)(filename ocaml/xapi/xapi_vm_migrate.ml)(line 1573))((process xapi)(filename lib/xapi-stdext-pervasives/pervasiveext.ml)(line 24))((process xapi)(filename lib/xapi-stdext-pervasives/pervasiveext.ml)(line 35))((process xapi)(filename ocaml/xapi/message_forwarding.ml)(line 131))((process xapi)(filename lib/xapi-stdext-pervasives/pervasiveext.ml)(line 24))((process xapi)(filename lib/xapi-stdext-pervasives/pervasiveext.ml)(line 35))((process xapi)(filename lib/xapi-stdext-pervasives/pervasiveext.ml)(line 24))((process xapi)(filename ocaml/xapi/rbac.ml)(line 205))((process xapi)(filename ocaml/xapi/server_helpers.ml)(line 95)))" }, "message": "INTERNAL_ERROR(Xenops_interface.Xenopsd_error([S(Failed_to_suspend);[S(121d53f3-71e7-be03-f58d-8eacf7bb55d1);F(1200)]]))", "name": "XapiError", "stack": "XapiError: INTERNAL_ERROR(Xenops_interface.Xenopsd_error([S(Failed_to_suspend);[S(121d53f3-71e7-be03-f58d-8eacf7bb55d1);F(1200)]]))\n at Function.wrap (file:///opt/xo/xo-builds/xen-orchestra-202408051306/packages/xen-api/_XapiError.mjs:16:12)\n at default (file:///opt/xo/xo-builds/xen-orchestra-202408051306/packages/xen-api/_getTaskResult.mjs:13:29)\n at Xapi._addRecordToCache (file:///opt/xo/xo-builds/xen-orchestra-202408051306/packages/xen-api/index.mjs:1076:24)\n at file:///opt/xo/xo-builds/xen-orchestra-202408051306/packages/xen-api/index.mjs:1110:14\n at Array.forEach (<anonymous>)\n at Xapi._processEvents (file:///opt/xo/xo-builds/xen-orchestra-202408051306/packages/xen-api/index.mjs:1100:12)\n at Xapi._watchEvents (file:///opt/xo/xo-builds/xen-orchestra-202408051306/packages/xen-api/index.mjs:1273:14)" } }Edit: I've spent some time digging through the source code to try to work this one out. I haven't found anything conclusive, but here's my findings so far.

The error is triggered inxenopsd/xc/xenops_server_xen.mlat the following line:if not (wait_shutdown task vm Suspend 1200.) then raise (Xenopsd_error (Failed_to_suspend (vm.Vm.id, 1200.)))This calls the

wait_shutdownmethod with a 1200 timeout. That method looks like this:let wait_shutdown task vm reason timeout = event_wait internal_updates task timeout (function | Dynamic.Vm id when id = vm.Vm.id -> debug "EVENT on our VM: %s" id ; on_domain (fun xc xs _ vm di -> di.Xenctrl.shutdown) task vm | Dynamic.Vm id -> debug "EVENT on other VM: %s" id ; false | _ -> debug "OTHER EVENT" ; false )This is basically calling the

Xenctrl.shutdownmethod on the target VM.

So the issue is that the VM is failing to shutdown when instructed to. -

I think I may have worked this one out. The culprit could be High Availability.I found the following in the

/var/log/xensource.loglogs:Aug 22 11:19:54 johto xenopsd-xc: [debug||38 |Async.VM.migrate_send R:0a6a99c47056|xenops] EVENT on our VM: 121d53f3-71e7-be03-f58d-8eacf7bb55d1 Aug 22 11:19:54 johto xenopsd-xc: [debug||5 ||xenops_server] Received an event on managed VM 121d53f3-71e7-be03-f58d-8eacf7bb55d1 Aug 22 11:19:54 johto xenopsd-xc: [debug||5 |queue|xenops_server] Queue.push ["VM_check_state","121d53f3-71e7-be03-f58d-8eacf7bb55d1"] onto redirected 121d53f3-71e7-be03-f58d-8eacf7bb55d1:[ ["VM_check_state","121d53f3-71e7-be03-f58d-8eacf7bb55d1"] ] ... Aug 22 11:34:27 johto xenopsd-xc: [ info||38 |Async.VM.migrate_send R:0a6a99c47056|xenops_server] Caught Xenops_interface.Xenopsd_error([S(Failed_to_suspend);[S(121d53f3-71e7-be03-f58d-8eacf7bb55d1);F(1200)]]) executing ["VM_migrate",{"vmm_tmp_dest_id":"121d53f3-71e7-be03-f58d-000000000001","vmm_tmp_src_id":"121d53f3-71e7-be03-f58d-000000000000","vmm_url":"https://10.10.100.65/services/xenops?session_id=OpaqueRef%3a7a0016fc-4196-4bbc-bbf7-d3a6c49a67cb","vmm_vgpu_pci_map":{},"vmm_vif_map":{"0":["Local","xapi0"],"1":["Local","xapi4"]},"vmm_vdi_map":{"c5daed52-60ab-1530-d79f-da27465fc66d/bb2ae183-b461-44e7-ace0-a8590722799c":"c5daed52-60ab-1530-d79f-da27465fc66d/bb2ae183-b461-44e7-ace0-a8590722799c"},"vmm_id":"121d53f3-71e7-be03-f58d-8eacf7bb55d1"}]: triggering cleanup actions Aug 22 11:34:27 johto xenopsd-xc: [debug||38 |Async.VM.migrate_send R:0a6a99c47056|xenops_server] Task 5684 reference Async.VM.migrate_send R:0a6a99c47056: ["VM_check_state","121d53f3-71e7-be03-f58d-8eacf7bb55d1"] Aug 22 11:34:27 johto xenopsd-xc: [debug||38 |Async.VM.migrate_send R:0a6a99c47056|xenops_server] VM 121d53f3-71e7-be03-f58d-8eacf7bb55d1 is not requesting any attention Aug 22 11:34:27 johto xenopsd-xc: [debug||38 |Async.VM.migrate_send R:0a6a99c47056|xenops_server] VM_DB.signal 121d53f3-71e7-be03-f58d-8eacf7bb55d1 Aug 22 11:34:27 johto xenopsd-xc: [debug||38 |Async.VM.migrate_send R:0a6a99c47056|task_server] Task 5684 completed; duration = 0 Aug 22 11:34:27 johto xenopsd-xc: [debug||38 |Async.VM.migrate_send R:0a6a99c47056|xenops_server] Task 5685 reference Async.VM.migrate_send R:0a6a99c47056: ["VM_check_state","121d53f3-71e7-be03-f58d-000000000000"] Aug 22 11:34:27 johto xenopsd-xc: [ info||38 |Async.VM.migrate_send R:0a6a99c47056|xenops_server] Caught Xenops_interface.Xenopsd_error([S(Does_not_exist);[S(VM);S(121d53f3-71e7-be03-f58d-000000000000/config)]]) executing ["VM_check_state","121d53f3-71e7-be03-f58d-000000000000"]: triggering cleanup actions Aug 22 11:34:27 johto xenopsd-xc: [error||38 |Async.VM.migrate_send R:0a6a99c47056|task_server] Task 5685 failed; Xenops_interface.Xenopsd_error([S(Does_not_exist);[S(VM);S(121d53f3-71e7-be03-f58d-000000000000/config)]]) Aug 22 11:34:27 johto xenopsd-xc: [error||38 ||xenops_server] Triggering cleanup actions failed: Xenops_interface.Xenopsd_error([S(Does_not_exist);[S(VM);S(121d53f3-71e7-be03-f58d-000000000000/config)]]) Aug 22 11:34:27 johto xenopsd-xc: [error||38 ||task_server] Task 5593 failed; Xenops_interface.Xenopsd_error([S(Failed_to_suspend);[S(121d53f3-71e7-be03-f58d-8eacf7bb55d1);F(1200)]]) Aug 22 11:34:27 johto xenopsd-xc: [debug||38 ||xenops_server] TASK.signal 5593 = ["Failed",["Failed_to_suspend",["121d53f3-71e7-be03-f58d-8eacf7bb55d1",1200.0]]] Aug 22 11:34:27 johto xenopsd-xc: [debug||28 ||xenops_server] Queue.pop returned ["VM_check_state","121d53f3-71e7-be03-f58d-8eacf7bb55d1"] Aug 22 11:34:27 johto xenopsd-xc: [debug||28 |events|xenops_server] Task 5595 reference events: ["VM_check_state","121d53f3-71e7-be03-f58d-8eacf7bb55d1"] Aug 22 11:34:27 johto xapi: [debug||851 |org.xen.xapi.xenops.classic events D:6d228e86cbf9|xenops] Processing event: ["Vm","121d53f3-71e7-be03-f58d-8eacf7bb55d1"] Aug 22 11:34:27 johto xapi: [debug||851 |org.xen.xapi.xenops.classic events D:6d228e86cbf9|xenops] xenops event on VM 121d53f3-71e7-be03-f58d-8eacf7bb55d1 Aug 22 11:34:27 johto xapi: [debug||851 |org.xen.xapi.xenops.classic events D:6d228e86cbf9|xenops] xenopsd event: ignoring event for VM (VM 121d53f3-71e7-be03-f58d-8eacf7bb55d1 migrating away) Aug 22 11:34:27 johto xapi: [debug||851 |org.xen.xapi.xenops.classic events D:6d228e86cbf9|xenops] Processing event: ["Task","5593"] Aug 22 11:34:27 johto xapi: [debug||851 |org.xen.xapi.xenops.classic events D:6d228e86cbf9|xenops] xenops event on Task 5593 Aug 22 11:34:27 johto xapi: [debug||287339 ||xapi_vm_migrate] xenops: not retrying migration: caught Xenops_interface.Xenopsd_error([S(Failed_to_suspend);[S(121d53f3-71e7-be03-f58d-8eacf7bb55d1);F(1200)]]) from sync_with_task in attempt 1 of 3. Aug 22 11:34:27 johto xapi: [debug||287339 ||xenops] re-enabled xenops events on VM: 121d53f3-71e7-be03-f58d-8eacf7bb55d1; refreshing VM Aug 22 11:34:27 johto xenopsd-xc: [debug||12349 |Async.VM.migrate_send R:0a6a99c47056|xenops_server] UPDATES.refresh_vm 121d53f3-71e7-be03-f58d-8eacf7bb55d1 Aug 22 11:34:27 johto xenopsd-xc: [debug||12349 |Async.VM.migrate_send R:0a6a99c47056|xenops_server] VM_DB.signal 121d53f3-71e7-be03-f58d-8eacf7bb55d1 Aug 22 11:34:27 johto xenopsd-xc: [debug||12349 |Async.VM.migrate_send R:0a6a99c47056|xenops_server] VBD_DB.signal 121d53f3-71e7-be03-f58d-8eacf7bb55d1.xvda Aug 22 11:34:27 johto xenopsd-xc: [debug||12349 |Async.VM.migrate_send R:0a6a99c47056|xenops_server] VBD_DB.signal 121d53f3-71e7-be03-f58d-8eacf7bb55d1.xvdd Aug 22 11:34:27 johto xenopsd-xc: [debug||12349 |Async.VM.migrate_send R:0a6a99c47056|xenops_server] VIF_DB.signal 121d53f3-71e7-be03-f58d-8eacf7bb55d1.1 Aug 22 11:34:27 johto xenopsd-xc: [debug||12349 |Async.VM.migrate_send R:0a6a99c47056|xenops_server] VIF_DB.signal 121d53f3-71e7-be03-f58d-8eacf7bb55d1.0 Aug 22 11:34:27 johto xapi: [debug||851 |org.xen.xapi.xenops.classic events D:6d228e86cbf9|xenops] Processing event: ["Vm","121d53f3-71e7-be03-f58d-8eacf7bb55d1"] Aug 22 11:34:27 johto xapi: [debug||851 |org.xen.xapi.xenops.classic events D:6d228e86cbf9|xenops] xenops event on VM 121d53f3-71e7-be03-f58d-8eacf7bb55d1 Aug 22 11:34:27 johto xenopsd-xc: [debug||12350 |org.xen.xapi.xenops.classic events D:6d228e86cbf9|xenops_server] VM.stat 121d53f3-71e7-be03-f58d-8eacf7bb55d1 Aug 22 11:34:27 johto xapi: [debug||287339 ||xenops] Client.UPDATES.inject_barrier 20 Aug 22 11:34:27 johto xenopsd-xc: [debug||12351 |Async.VM.migrate_send R:0a6a99c47056|xenops_server] UPDATES.inject_barrier 121d53f3-71e7-be03-f58d-8eacf7bb55d1 20 Aug 22 11:34:27 johto xenopsd-xc: [debug||28 |events|xenops_server] VM 121d53f3-71e7-be03-f58d-8eacf7bb55d1 is not requesting any attention Aug 22 11:34:27 johto xenopsd-xc: [debug||28 |events|xenops_server] VM_DB.signal 121d53f3-71e7-be03-f58d-8eacf7bb55d1 Aug 22 11:34:27 johto xenopsd-xc: [debug||28 |events|task_server] Task 5595 completed; duration = 0 Aug 22 11:34:27 johto xenopsd-xc: [debug||28 ||xenops_server] TASK.signal 5595 (object deleted) Aug 22 11:34:27 johto xapi: [debug||851 |org.xen.xapi.xenops.classic events D:6d228e86cbf9|xenops] xenopsd event: processing event for VM 121d53f3-71e7-be03-f58d-8eacf7bb55d1 Aug 22 11:34:27 johto xapi: [debug||851 |org.xen.xapi.xenops.classic events D:6d228e86cbf9|xenops] Supressing VM.allowed_operations update because guest_agent data is largely the same Aug 22 11:34:27 johto xapi: [debug||851 |org.xen.xapi.xenops.classic events D:6d228e86cbf9|xenops] xenopsd event: Updating VM 121d53f3-71e7-be03-f58d-8eacf7bb55d1 domid 6 guest_agent Aug 22 11:34:27 johto xapi: [debug||851 |org.xen.xapi.xenops.classic events D:6d228e86cbf9|xenops] Processing barrier 20 Aug 22 11:34:27 johto xapi: [debug||851 |org.xen.xapi.xenops.classic events D:6d228e86cbf9|xenops] Processing event: ["Vbd",["121d53f3-71e7-be03-f58d-8eacf7bb55d1","xvda"]] Aug 22 11:34:27 johto xapi: [debug||851 |org.xen.xapi.xenops.classic events D:6d228e86cbf9|xenops] xenops event on VBD 121d53f3-71e7-be03-f58d-8eacf7bb55d1.xvda Aug 22 11:34:27 johto xenopsd-xc: [debug||12353 |org.xen.xapi.xenops.classic events D:6d228e86cbf9|xenops_server] VBD.stat 121d53f3-71e7-be03-f58d-8eacf7bb55d1.xvda Aug 22 11:34:27 johto xenopsd-xc: [debug||12353 |org.xen.xapi.xenops.classic events D:6d228e86cbf9|xenops] /usr/bin/ionice -p17061 Aug 22 11:34:27 johto xapi: [debug||851 |org.xen.xapi.xenops.classic events D:6d228e86cbf9|xenops] xenopsd event: ignoring event for VBD 121d53f3-71e7-be03-f58d-8eacf7bb55d1.xvda: metadata has not changed Aug 22 11:34:27 johto xapi: [debug||851 |org.xen.xapi.xenops.classic events D:6d228e86cbf9|xenops] Processing event: ["Vbd",["121d53f3-71e7-be03-f58d-8eacf7bb55d1","xvdd"]] Aug 22 11:34:27 johto xapi: [debug||851 |org.xen.xapi.xenops.classic events D:6d228e86cbf9|xenops] xenops event on VBD 121d53f3-71e7-be03-f58d-8eacf7bb55d1.xvdd Aug 22 11:34:27 johto xenopsd-xc: [debug||12354 |org.xen.xapi.xenops.classic events D:6d228e86cbf9|xenops_server] VBD.stat 121d53f3-71e7-be03-f58d-8eacf7bb55d1.xvdd Aug 22 11:34:27 johto xapi: [debug||851 |org.xen.xapi.xenops.classic events D:6d228e86cbf9|xenops] xenopsd event: ignoring event for VBD 121d53f3-71e7-be03-f58d-8eacf7bb55d1.xvdd: metadata has not changed Aug 22 11:34:27 johto xapi: [debug||851 |org.xen.xapi.xenops.classic events D:6d228e86cbf9|xenops] Processing event: ["Vif",["121d53f3-71e7-be03-f58d-8eacf7bb55d1","1"]] Aug 22 11:34:27 johto xapi: [debug||851 |org.xen.xapi.xenops.classic events D:6d228e86cbf9|xenops] xenops event on VIF 121d53f3-71e7-be03-f58d-8eacf7bb55d1.1 Aug 22 11:34:27 johto xenopsd-xc: [debug||12355 |org.xen.xapi.xenops.classic events D:6d228e86cbf9|xenops_server] VIF.stat 121d53f3-71e7-be03-f58d-8eacf7bb55d1.1 Aug 22 11:34:27 johto xapi: [debug||851 |org.xen.xapi.xenops.classic events D:6d228e86cbf9|xenops] xenopsd event: ignoring event for VIF 121d53f3-71e7-be03-f58d-8eacf7bb55d1.1: metadata has not changed Aug 22 11:34:27 johto xapi: [debug||851 |org.xen.xapi.xenops.classic events D:6d228e86cbf9|xenops] Processing event: ["Vif",["121d53f3-71e7-be03-f58d-8eacf7bb55d1","0"]] Aug 22 11:34:27 johto xapi: [debug||851 |org.xen.xapi.xenops.classic events D:6d228e86cbf9|xenops] xenops event on VIF 121d53f3-71e7-be03-f58d-8eacf7bb55d1.0 Aug 22 11:34:27 johto xenopsd-xc: [debug||12356 |org.xen.xapi.xenops.classic events D:6d228e86cbf9|xenops_server] VIF.stat 121d53f3-71e7-be03-f58d-8eacf7bb55d1.0 Aug 22 11:34:27 johto xapi: [debug||851 |org.xen.xapi.xenops.classic events D:6d228e86cbf9|xenops] xenopsd event: ignoring event for VIF 121d53f3-71e7-be03-f58d-8eacf7bb55d1.0: metadata has not changed Aug 22 11:34:27 johto xenopsd-xc: [debug||12357 |org.xen.xapi.xenops.classic events D:6d228e86cbf9|xenops_server] UPDATES.remove_barrier 20 Aug 22 11:34:27 johto xapi: [debug||851 |org.xen.xapi.xenops.classic events D:6d228e86cbf9|xenops] Processing event: ["Vbd",["121d53f3-71e7-be03-f58d-8eacf7bb55d1","xvda"]] Aug 22 11:34:27 johto xapi: [error||287339 ||xapi_vm_migrate] Caught Xenops_interface.Xenopsd_error([S(Failed_to_suspend);[S(121d53f3-71e7-be03-f58d-8eacf7bb55d1);F(1200)]]): cleaning up Aug 22 11:34:27 johto xapi: [debug||287339 ||xenops] suppressing xenops events on VM: 121d53f3-71e7-be03-f58d-8eacf7bb55d1 Aug 22 11:34:27 johto xapi: [debug||851 |org.xen.xapi.xenops.classic events D:6d228e86cbf9|xenops] Skipping (already processed this round) Aug 22 11:34:27 johto xapi: [debug||851 |org.xen.xapi.xenops.classic events D:6d228e86cbf9|xenops] Processing event: ["Vbd",["121d53f3-71e7-be03-f58d-8eacf7bb55d1","xvdd"]] Aug 22 11:34:27 johto xapi: [debug||851 |org.xen.xapi.xenops.classic events D:6d228e86cbf9|xenops] Skipping (already processed this round) Aug 22 11:34:27 johto xapi: [debug||851 |org.xen.xapi.xenops.classic events D:6d228e86cbf9|xenops] Processing event: ["Vif",["121d53f3-71e7-be03-f58d-8eacf7bb55d1","1"]] Aug 22 11:34:27 johto xapi: [debug||851 |org.xen.xapi.xenops.classic events D:6d228e86cbf9|xenops] Skipping (already processed this round) Aug 22 11:34:27 johto xapi: [debug||851 |org.xen.xapi.xenops.classic events D:6d228e86cbf9|xenops] Processing event: ["Vif",["121d53f3-71e7-be03-f58d-8eacf7bb55d1","0"]] Aug 22 11:34:27 johto xapi: [debug||851 |org.xen.xapi.xenops.classic events D:6d228e86cbf9|xenops] Skipping (already processed this round) Aug 22 11:34:27 johto xapi: [debug||851 |org.xen.xapi.xenops.classic events D:6d228e86cbf9|xenops] Processing event: ["Vm","121d53f3-71e7-be03-f58d-8eacf7bb55d1"] Aug 22 11:34:27 johto xapi: [debug||851 |org.xen.xapi.xenops.classic events D:6d228e86cbf9|xenops] xenops event on VM 121d53f3-71e7-be03-f58d-8eacf7bb55d1 Aug 22 11:34:27 johto xapi: [debug||851 |org.xen.xapi.xenops.classic events D:6d228e86cbf9|xenops] xenopsd event: ignoring event for VM (VM 121d53f3-71e7-be03-f58d-8eacf7bb55d1 migrating away) Aug 22 11:34:27 johto xenopsd-xc: [debug||12358 |Async.VM.migrate_send R:0a6a99c47056|xenops_server] VM.stat 121d53f3-71e7-be03-f58d-8eacf7bb55d1 Aug 22 11:34:27 johto xapi: [debug||287339 ||xenops] re-enabled xenops events on VM: 121d53f3-71e7-be03-f58d-8eacf7bb55d1; refreshing VM Aug 22 11:34:27 johto xenopsd-xc: [debug||12360 |Async.VM.migrate_send R:0a6a99c47056|xenops_server] UPDATES.refresh_vm 121d53f3-71e7-be03-f58d-8eacf7bb55d1 Aug 22 11:34:27 johto xenopsd-xc: [debug||12360 |Async.VM.migrate_send R:0a6a99c47056|xenops_server] VM_DB.signal 121d53f3-71e7-be03-f58d-8eacf7bb55d1 Aug 22 11:34:27 johto xenopsd-xc: [debug||12360 |Async.VM.migrate_send R:0a6a99c47056|xenops_server] VBD_DB.signal 121d53f3-71e7-be03-f58d-8eacf7bb55d1.xvda Aug 22 11:34:27 johto xenopsd-xc: [debug||12360 |Async.VM.migrate_send R:0a6a99c47056|xenops_server] VBD_DB.signal 121d53f3-71e7-be03-f58d-8eacf7bb55d1.xvdd Aug 22 11:34:27 johto xenopsd-xc: [debug||12360 |Async.VM.migrate_send R:0a6a99c47056|xenops_server] VIF_DB.signal 121d53f3-71e7-be03-f58d-8eacf7bb55d1.1 Aug 22 11:34:27 johto xenopsd-xc: [debug||12360 |Async.VM.migrate_send R:0a6a99c47056|xenops_server] VIF_DB.signal 121d53f3-71e7-be03-f58d-8eacf7bb55d1.0 Aug 22 11:34:27 johto xapi: [debug||851 |org.xen.xapi.xenops.classic events D:6d228e86cbf9|xenops] Processing event: ["Vm","121d53f3-71e7-be03-f58d-8eacf7bb55d1"] Aug 22 11:34:27 johto xapi: [debug||851 |org.xen.xapi.xenops.classic events D:6d228e86cbf9|xenops] xenops event on VM 121d53f3-71e7-be03-f58d-8eacf7bb55d1 Aug 22 11:34:27 johto xenopsd-xc: [debug||12361 |org.xen.xapi.xenops.classic events D:6d228e86cbf9|xenops_server] VM.stat 121d53f3-71e7-be03-f58d-8eacf7bb55d1 Aug 22 11:34:27 johto xapi: [debug||287339 ||xenops] Client.UPDATES.inject_barrier 21 Aug 22 11:34:27 johto xenopsd-xc: [debug||12362 |Async.VM.migrate_send R:0a6a99c47056|xenops_server] UPDATES.inject_barrier 121d53f3-71e7-be03-f58d-8eacf7bb55d1 21 Aug 22 11:34:27 johto xapi: [debug||851 |org.xen.xapi.xenops.classic events D:6d228e86cbf9|xenops] xenopsd event: ignoring event for VM 121d53f3-71e7-be03-f58d-8eacf7bb55d1: metadata has not changed Aug 22 11:34:27 johto xapi: [debug||851 |org.xen.xapi.xenops.classic events D:6d228e86cbf9|xenops] Processing barrier 21 Aug 22 11:34:27 johto xapi: [debug||851 |org.xen.xapi.xenops.classic events D:6d228e86cbf9|xenops] Processing event: ["Vbd",["121d53f3-71e7-be03-f58d-8eacf7bb55d1","xvda"]] Aug 22 11:34:27 johto xapi: [debug||851 |org.xen.xapi.xenops.classic events D:6d228e86cbf9|xenops] xenops event on VBD 121d53f3-71e7-be03-f58d-8eacf7bb55d1.xvda Aug 22 11:34:27 johto xenopsd-xc: [debug||12364 |org.xen.xapi.xenops.classic events D:6d228e86cbf9|xenops_server] VBD.stat 121d53f3-71e7-be03-f58d-8eacf7bb55d1.xvda Aug 22 11:34:27 johto xenopsd-xc: [debug||12364 |org.xen.xapi.xenops.classic events D:6d228e86cbf9|xenops] /usr/bin/ionice -p17061 Aug 22 11:34:27 johto xapi: [debug||851 |org.xen.xapi.xenops.classic events D:6d228e86cbf9|xenops] xenopsd event: ignoring event for VBD 121d53f3-71e7-be03-f58d-8eacf7bb55d1.xvda: metadata has not changed Aug 22 11:34:27 johto xapi: [debug||851 |org.xen.xapi.xenops.classic events D:6d228e86cbf9|xenops] Processing event: ["Vbd",["121d53f3-71e7-be03-f58d-8eacf7bb55d1","xvdd"]] Aug 22 11:34:27 johto xapi: [debug||851 |org.xen.xapi.xenops.classic events D:6d228e86cbf9|xenops] xenops event on VBD 121d53f3-71e7-be03-f58d-8eacf7bb55d1.xvdd Aug 22 11:34:27 johto xenopsd-xc: [debug||12365 |org.xen.xapi.xenops.classic events D:6d228e86cbf9|xenops_server] VBD.stat 121d53f3-71e7-be03-f58d-8eacf7bb55d1.xvdd Aug 22 11:34:27 johto xapi: [debug||851 |org.xen.xapi.xenops.classic events D:6d228e86cbf9|xenops] xenopsd event: ignoring event for VBD 121d53f3-71e7-be03-f58d-8eacf7bb55d1.xvdd: metadata has not changed Aug 22 11:34:27 johto xapi: [debug||851 |org.xen.xapi.xenops.classic events D:6d228e86cbf9|xenops] Processing event: ["Vif",["121d53f3-71e7-be03-f58d-8eacf7bb55d1","1"]] Aug 22 11:34:27 johto xapi: [debug||851 |org.xen.xapi.xenops.classic events D:6d228e86cbf9|xenops] xenops event on VIF 121d53f3-71e7-be03-f58d-8eacf7bb55d1.1 Aug 22 11:34:27 johto xenopsd-xc: [debug||12366 |org.xen.xapi.xenops.classic events D:6d228e86cbf9|xenops_server] VIF.stat 121d53f3-71e7-be03-f58d-8eacf7bb55d1.1 Aug 22 11:34:27 johto xapi: [debug||851 |org.xen.xapi.xenops.classic events D:6d228e86cbf9|xenops] xenopsd event: ignoring event for VIF 121d53f3-71e7-be03-f58d-8eacf7bb55d1.1: metadata has not changed Aug 22 11:34:27 johto xapi: [debug||851 |org.xen.xapi.xenops.classic events D:6d228e86cbf9|xenops] Processing event: ["Vif",["121d53f3-71e7-be03-f58d-8eacf7bb55d1","0"]] Aug 22 11:34:27 johto xapi: [debug||851 |org.xen.xapi.xenops.classic events D:6d228e86cbf9|xenops] xenops event on VIF 121d53f3-71e7-be03-f58d-8eacf7bb55d1.0 Aug 22 11:34:27 johto xenopsd-xc: [debug||12367 |org.xen.xapi.xenops.classic events D:6d228e86cbf9|xenops_server] VIF.stat 121d53f3-71e7-be03-f58d-8eacf7bb55d1.0 Aug 22 11:34:27 johto xapi: [debug||851 |org.xen.xapi.xenops.classic events D:6d228e86cbf9|xenops] xenopsd event: ignoring event for VIF 121d53f3-71e7-be03-f58d-8eacf7bb55d1.0: metadata has not changed Aug 22 11:34:27 johto xenopsd-xc: [debug||12368 |org.xen.xapi.xenops.classic events D:6d228e86cbf9|xenops_server] UPDATES.remove_barrier 21 Aug 22 11:34:27 johto xapi: [debug||851 |org.xen.xapi.xenops.classic events D:6d228e86cbf9|xenops] Processing event: ["Vbd",["121d53f3-71e7-be03-f58d-8eacf7bb55d1","xvda"]] Aug 22 11:34:27 johto xapi: [debug||851 |org.xen.xapi.xenops.classic events D:6d228e86cbf9|xenops] Skipping (already processed this round) Aug 22 11:34:27 johto xapi: [debug||851 |org.xen.xapi.xenops.classic events D:6d228e86cbf9|xenops] Processing event: ["Vbd",["121d53f3-71e7-be03-f58d-8eacf7bb55d1","xvdd"]] Aug 22 11:34:27 johto xapi: [debug||851 |org.xen.xapi.xenops.classic events D:6d228e86cbf9|xenops] Skipping (already processed this round) Aug 22 11:34:27 johto xapi: [debug||851 |org.xen.xapi.xenops.classic events D:6d228e86cbf9|xenops] Processing event: ["Vif",["121d53f3-71e7-be03-f58d-8eacf7bb55d1","1"]] Aug 22 11:34:27 johto xapi: [debug||851 |org.xen.xapi.xenops.classic events D:6d228e86cbf9|xenops] Skipping (already processed this round) Aug 22 11:34:27 johto xapi: [debug||851 |org.xen.xapi.xenops.classic events D:6d228e86cbf9|xenops] Processing event: ["Vif",["121d53f3-71e7-be03-f58d-8eacf7bb55d1","0"]] Aug 22 11:34:27 johto xapi: [debug||851 |org.xen.xapi.xenops.classic events D:6d228e86cbf9|xenops] Skipping (already processed this round) Aug 22 11:34:27 johto xapi: [error||287339 ||backtrace] Async.VM.migrate_send R:0a6a99c47056 failed with exception Xenops_interface.Xenopsd_error([S(Failed_to_suspend);[S(121d53f3-71e7-be03-f58d-8eacf7bb55d1);F(1200)]]) Aug 22 11:34:27 johto xapi: [error||287339 ||backtrace] Raised Xenops_interface.Xenopsd_error([S(Failed_to_suspend);[S(121d53f3-71e7-be03-f58d-8eacf7bb55d1);F(1200)]]) Aug 22 11:34:27 johto xapi: [error||287339 ||backtrace] 1/1 xapi Raised at file (Thread 287339 has no backtrace table. Was with_backtraces called?, line 0 Aug 22 11:34:27 johto xapi: [error||287339 ||backtrace] Aug 22 11:34:27 johto xapi: [debug||856 ||xenops] Event on VM 121d53f3-71e7-be03-f58d-8eacf7bb55d1; resident_here = true Aug 22 11:34:27 johto xapi: [debug||292123 /var/lib/xcp/xapi||dummytaskhelper] task dispatch:session.logout D:ec5ee6136a7e created by task D:912a6848dc70 Aug 22 11:34:27 johto xapi: [debug||856 ||xenops] xapi_cache: not updating cache for 121d53f3-71e7-be03-f58d-8eacf7bb55d1 Aug 22 11:34:27 johto xapi: [debug||856 ||dummytaskhelper] task timeboxed_rpc D:401f2fb7a9bc created by task D:f506801848df Aug 22 11:34:27 johto xapi: [ info||292123 /var/lib/xcp/xapi|session.logout D:7b42c2e6fc06|xapi_session] Session.destroy trackid=647828d4342b28d66c96849e86479a13 Aug 22 11:34:27 johto xapi: [debug||292124 /var/lib/xcp/xapi||dummytaskhelper] task dispatch:event.from D:65babbde7027 created by task D:f506801848df Aug 22 11:34:27 johto xapi: [debug||292125 /var/lib/xcp/xapi||dummytaskhelper] task dispatch:session.slave_login D:b142525d6326 created by task D:912a6848dc70 Aug 22 11:34:27 johto xapi: [ info||292125 /var/lib/xcp/xapi|session.slave_login D:b06e3b881d19|xapi_session] Session.create trackid=7bec712bd2ad9586d3757a81c69753a0 pool=true uname= originator=xapi is_local_superuser=true auth_user_sid= parent=trackid=9834f5af41c964e225f24279aefe4e49 Aug 22 11:34:27 johto xapi: [debug||292126 /var/lib/xcp/xapi||dummytaskhelper] task dispatch:pool.get_all D:d8a7b4490527 created by task D:b06e3b881d19 Aug 22 11:34:27 johto xapi: [debug||292127 /var/lib/xcp/xapi||dummytaskhelper] task dispatch:event.from D:352aea7dadc6 created by task D:912a6848dc70 Aug 22 11:34:28 johto xapi: [debug||255190 ha_monitor|HA monitor D:aaa99bafd59f|helpers] about to call script: /usr/libexec/xapi/cluster-stack/xhad/ha_query_liveset Aug 22 11:34:28 johto xapi: [debug||255190 ha_monitor|HA monitor D:aaa99bafd59f|xapi_ha] Liveset: online 349dbfa5-184b-416c-9fc7-2fe68cff9ac3 [ L A ]; f4de3c79-b444-466d-b2f2-46ae529eeb7d [*LM A ]; 36eaaff3-1d05-4a01-a167-cbb81d521084 [ L A ]; Aug 22 11:34:28 johto xapi: [debug||255190 ha_monitor|HA monitor D:aaa99bafd59f|xapi_ha] Processing warnings Aug 22 11:34:28 johto xapi: [debug||255190 ha_monitor|HA monitor D:aaa99bafd59f|xapi_ha] Done with warnings Aug 22 11:34:28 johto xapi: [debug||255190 ha_monitor|HA monitor D:aaa99bafd59f|xapi_ha_vm_failover] restart_auto_run_vms called Aug 22 11:34:28 johto xapi: [debug||255190 ha_monitor|HA monitor D:aaa99bafd59f|xapi_ha_vm_failover] Setting all VMs running or paused to Halted Aug 22 11:34:28 johto xapi: [debug||255190 ha_monitor|HA monitor D:aaa99bafd59f|xapi_ha_vm_failover] Protected VMs: [ __REDACTED__ ] Aug 22 11:34:28 johto xapi: [debug||255190 ha_monitor|HA monitor D:aaa99bafd59f|xapi_ha_vm_failover] Restart plan for non-agile offline VMs: [ ] Aug 22 11:34:28 johto xapi: [debug||255190 ha_monitor|HA monitor D:aaa99bafd59f|xapi_ha_vm_failover] Planning configuration for offline agile VMs = { total_hosts = 3; num_failures = 1; hosts = [ __REDACTED__ ]; vms = [ __REDACTED__ ]; placement = [ __REDACTED__ ] } Aug 22 11:34:28 johto xapi: [debug||255190 ha_monitor|HA monitor D:aaa99bafd59f|xapi_ha_vm_failover] Computing a specific plan for the failure of VMs: [ ] Aug 22 11:34:28 johto xapi: [debug||255190 ha_monitor|HA monitor D:aaa99bafd59f|xapi_ha_vm_failover] Restart plan for agile offline VMs: [ ] Aug 22 11:34:28 johto xapi: [debug||255190 ha_monitor|HA monitor D:aaa99bafd59f|xapi_ha_vm_failover] Planning configuration for future failures = { total_hosts = 3; num_failures = 1; hosts = [ __REDACTED__ ]; vms = [ __REDACTED__ ]; placement = [ __REDACTED__ ] } Aug 22 11:34:28 johto xapi: [debug||292128 /var/lib/xcp/xapi||dummytaskhelper] task dispatch:session.slave_login D:53b8efa25b89 created by task D:aaa99bafd59f Aug 22 11:34:28 johto xapi: [ info||292128 /var/lib/xcp/xapi|session.slave_login D:c245ed49adce|xapi_session] Session.create trackid=6805737fd6e27aaaf0651892eeb522b1 pool=true uname= originator=xapi is_local_superuser=true auth_user_sid= parent=trackid=9834f5af41c964e225f24279aefe4e49 Aug 22 11:34:28 johto xapi: [debug||292129 /var/lib/xcp/xapi||dummytaskhelper] task dispatch:pool.get_all D:a2615696094c created by task D:c245ed49adce Aug 22 11:34:28 johto xapi: [debug||255190 ha_monitor|HA monitor D:aaa99bafd59f|xapi_ha_vm_failover] Processing 0 parallel groups Aug 22 11:34:28 johto xapi: message repeated 2 times: [ [debug||255190 ha_monitor|HA monitor D:aaa99bafd59f|xapi_ha_vm_failover] Processing 0 parallel groups] Aug 22 11:34:28 johto xapi: [debug||292130 /var/lib/xcp/xapi||dummytaskhelper] task dispatch:session.logout D:60d1a540f918 created by task D:aaa99bafd59f Aug 22 11:34:28 johto xapi: [ info||292130 /var/lib/xcp/xapi|session.logout D:6e1e1dfa2cb3|xapi_session] Session.destroy trackid=6805737fd6e27aaaf0651892eeb522b1 Aug 22 11:34:28 johto xapi: [debug||255190 ha_monitor|HA monitor D:aaa99bafd59f|xapi_ha] Network peers = [349dbfa5-184b-416c-9fc7-2fe68cff9ac3;36eaaff3-1d05-4a01-a167-cbb81d521084;f4de3c79-b444-466d-b2f2-46ae529eeb7d] Aug 22 11:34:28 johto xapi: message repeated 2 times: [ [debug||255190 ha_monitor|HA monitor D:aaa99bafd59f|xapi_ha] Network peers = [349dbfa5-184b-416c-9fc7-2fe68cff9ac3;36eaaff3-1d05-4a01-a167-cbb81d521084;f4de3c79-b444-466d-b2f2-46ae529eeb7d]] Aug 22 11:34:29 johto xapi: [debug||292131 HTTP 127.0.0.1->:::80|Get RRD updates. D:d94f8323d287|xapi_services] hand_over_connection GET /rrd_updates to /var/lib/xcp/xcp-rrdd.forwardedThe logs referencing

xapi_hacaught my attention. Why is the HA process doing things during a VM migration? This led me to try disabling HA and running the migration again, which resulted in a successful migration!I suspect this is not the expected behaviour? I feel like it should be possible to move VMs around in a HA environment?

Edit: Now it's working with HA enabled. Looking closer at the logs, these processes don't appear to be related. Maybe HA wasn't the cause? I have no idea why this is working all of a sudden.

-

I've just run into what seems to be the same underlying issue while attempting to Coalesce Leaf one of the VMs. The Async.VM.suspend operation took approximately 15 minutes to complete.

Resuming the VM also took a similar amount of time.Does anyone have any insight into what might be causing suspend / shutdown operations to take this long to complete? It seems that this is causing an occasional timeout, which is crashing out the live-migrate process.

Looking through the xensource logs again, I see

Planning configuration for offline agile VMs = { total_hosts = 3; num_failures = 1;, which seems to indicate that the HA system thinks that this is a failure. Could it be that HA is indeed the cause? Does HA need to be disabled every time that any suspend/migrate/coalesce operation is run (the documentation seems to suggest that any action via Xen Orchestra won't trigger a failure)? -

Try on XOA latest, however I have the feeling it's a problem inside your VM. Do you reproduce with another OS? (like Debian).

-

I've just tested with the latest XOA (5.95.2) and see the same issue.

I have run some tests with brand new VMs (just Guest tools installed), to see if I can determine any contributing factors.

The baseline config for the tests is:

Almalinux 9.4, 50GB Disk (via Fiber Channel), 16GB RAM, 1x network (with Public IP), HA disabled for the VM (but enabled pool wide).Below is the time taken to suspend the VM, with different configurations...

- Baseline: 1:40 (in minutes:seconds)

- With 1.5TB Disk: 1:40

- With 96GB RAM: 9:36

- Network disabled: 1:40

- VM HA Enabled (restart): 1:40

- Pool HA disabled: 1:38

- VM SR on local SSD: 1:39

- Suspend SR on local SSD: 1:48

Below is the time taken to live-migrate the VM...

- Migrate: 1:51 (0:08 paused)

- HA enabled: 1:32 (0:04 paused)

- 96GB RAM: 10:02 (0:08 paused)

- 1.5TiB Disk: 1:49 (0:10 paused)

- NFS Mounted: 2:57 (1:02 paused)

Edit: Ignore the below. I realised that this is probably not relevant to the current issue - probably just the limitations of SMAPIv1 (but I've left it here for the curious).

! The only observable contributor to suspend time is RAM size (which makes sense). During suspend, I observed ~180MiB/s write speed to the Fiber Channel Disks (which calculates about right at 1:30 for 16GB / 9:00 for 96GB).

This does seem slow though. The drive specs are 1,000MiB/s writes per disk (FC array specs are even higher).

!

! Based on this, it sounds like I could be running into a write-speed bottleneck somewhere, which is causing the suspends to take too long (and timeout any live-migrations). The fact that both the Fiber Channel and local SSD seems to be hitting about the same limit has me confused. Both SRs should be capable of much higher write speeds than I'm seeing.

!

! I've done further testing withddinside the VM and am hitting about the same numbers (minus overhead). ~160MB/s write speed (on both local SSDs and Fiber Channel). Read speeds go over 1,000MB/s though. I can't think of a way to test the FC storage directly (it's fully committed as one large volume, so I can't make a new volume that isn't an SR).

!

! Could there be something in XCP-ng limiting the write speed? Or is do these speeds make sense with overhead of a suspend operation?Ultimately I wasn't able to replicate the actual issue with a new VM. While I did observe a significant increase in live-migrate paused time when NFS was mounted, this didn't approach the 20 minute timeout that was triggering on the live VMs. I am no closer to working out what the issue is.

-

@peter_webbird i think i have the same issue, it happens also when trying to move disk to another SR:

https://xcp-ng.org/forum/topic/9618/disk-move-fails-vm-crashes -

We're having the same trouble. xcp-ng 8.2.1 and xoa 5.95.2. VMs are ubuntu 20, 22, and 24 LTS, some SuSE. Failed migrations are keeping us from vacating hosts for patching. Shutting down the VM lets us move it but some production VMs require significant planning to take down.

-

@randyrue Confirmed that after cold booting a VM to a new host I can then migrate it live to another new host.