SR NFS Creation Error 13

-

Another spitball idea:

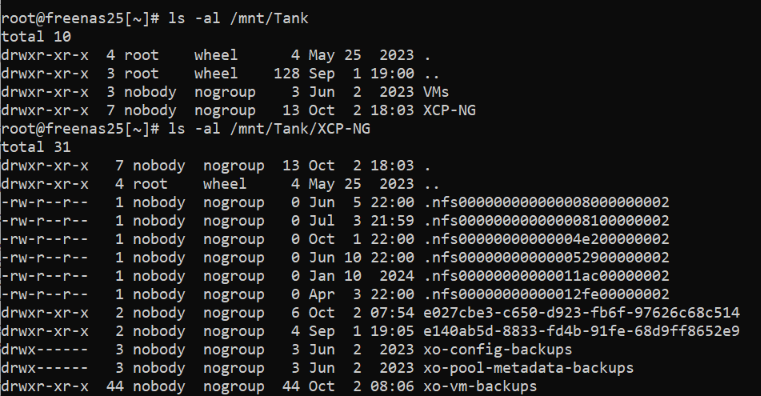

I did notice too that you have folders in the /mnt/Tank path : "VMs" and "XCP-NG". I assume the "XCP-NG" folder is your XO remote (as I recognise the xo-config / xo-pool / xo-vm folders from backup jobs), so I assume that the "VMs" folder is your VDI SR?

If those assumptions are correct, when you add the storage to the host, I feel like your 'Create a new SR' info is missing the "VMs" text in the subdirectory - from the screenshot you posted, it looks like the SR is trying to create in the /mnt/Tank path, not the /mnt/Tank/VMs path?

But I'm obviously not entirely sure of your intention and expectations, or whether this host is part of a pool or standalone, all of which affects NFS storage and the resulting paths.

-

@stevewest15 I pasted this error log into ChatLLM and it spat out a key error here:

"The error log you provided indicates that there was an issue during the import of a virtual machine (VM) in XCP-ng. The specific error is related to the creation of a network, and the relevant part of the log is:

[error||8816 /var/lib/xcp/xapi||backtrace] network.create R:1662dcd1a2fb failed with exception Server_error(INVALID_VALUE, [ bridge; xapi1 ])

This error suggests that there was an attempt to create a network with a bridge named xapi1, but the value provided for the bridge was invalid.

This could be due to several reasons:

Bridge Name Conflict: The bridge name xapi1 might already be in use or reserved, causing a conflict.

Incorrect Bridge Configuration: The bridge configuration might be incorrect or not properly set up in the network settings of the host.

Network Configuration Issue: There might be a broader issue with the network configuration on the host, such as missing or misconfigured network interfaces.

VLAN Configuration: If VLANs are involved, ensure that the VLAN ID is correctly configured and that the network interface supports VLAN tagging.

To resolve this issue, you can try the following steps:

Check Existing Bridges: Use the command brctl show on the host to list all existing bridges and verify if xapi1 already exists.

Review Network Configuration: Check the network configuration files on the host to ensure that the bridge xapi1 is correctly defined and not conflicting with other network settings.

Verify VLAN Settings: If VLANs are used, ensure that the VLAN ID is correctly set and that the network interface is configured to handle VLANs.

Consult Documentation: Review the XCP-ng documentation for any specific requirements or limitations regarding network bridge names and configurations.

If these steps do not resolve the issue, you may need to provide additional context or configuration details for further troubleshooting."
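For anyone who wants to pull the error code and its parameters out of log lines like the one quoted above, here is a rough sketch. It assumes the `Server_error(CODE, [ a; b ])` layout seen in that single line also holds for other xapi errors, which has not been checked against the xapi source. The name `parse_server_error` is only illustrative.

```python
import re

# Matches e.g. "Server_error(INVALID_VALUE, [ bridge; xapi1 ])".
# Group 1 is the error code, group 2 the raw parameter list.
SERVER_ERROR = re.compile(r"Server_error\(([A-Z_]+),\s*\[([^\]]*)\]\s*\)")


def parse_server_error(line):
    """Return (code, [params]) for a Server_error entry, or None if absent."""
    match = SERVER_ERROR.search(line)
    if match is None:
        return None
    params = [p.strip() for p in match.group(2).split(";") if p.strip()]
    return match.group(1), params


log_line = (
    "[error||8816 /var/lib/xcp/xapi||backtrace] network.create R:1662dcd1a2fb "
    "failed with exception Server_error(INVALID_VALUE, [ bridge; xapi1 ])"
)
print(parse_server_error(log_line))
```

Read this way, the line says the value `xapi1` was rejected for the field `bridge`. That narrows down where to look, but not why it was rejected.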

-

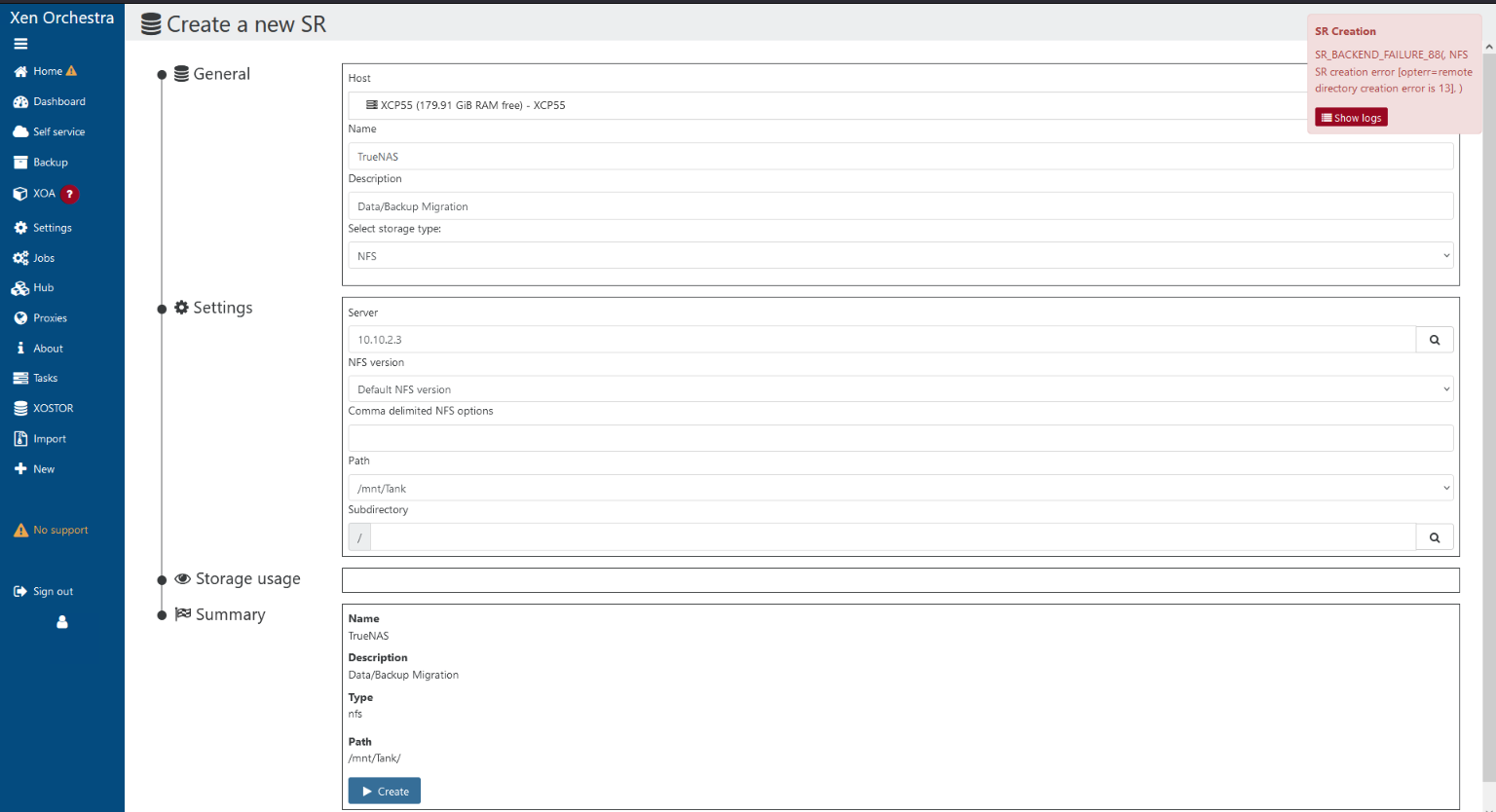

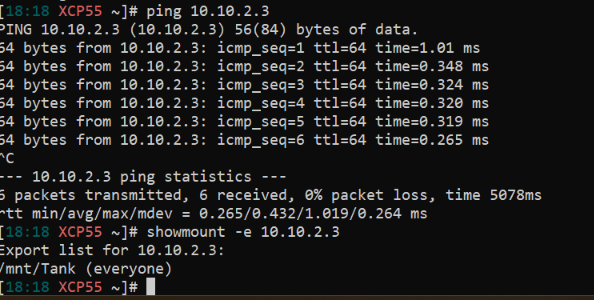

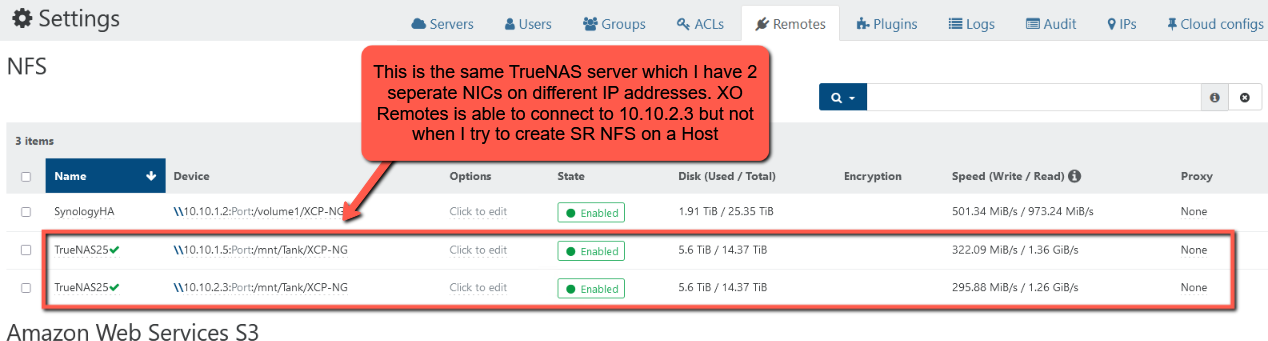

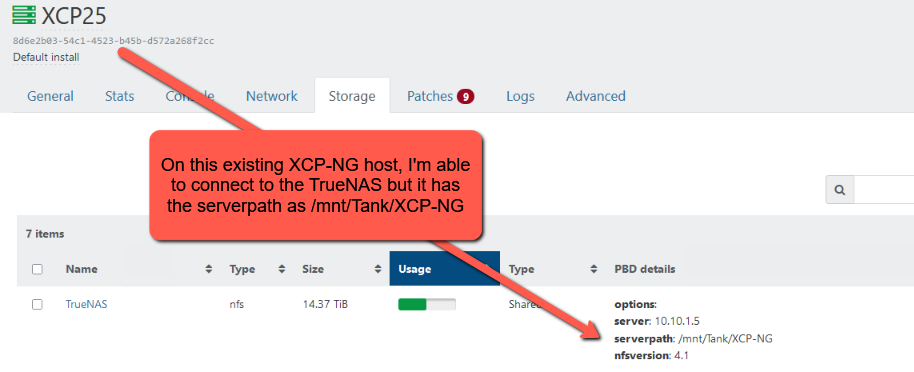

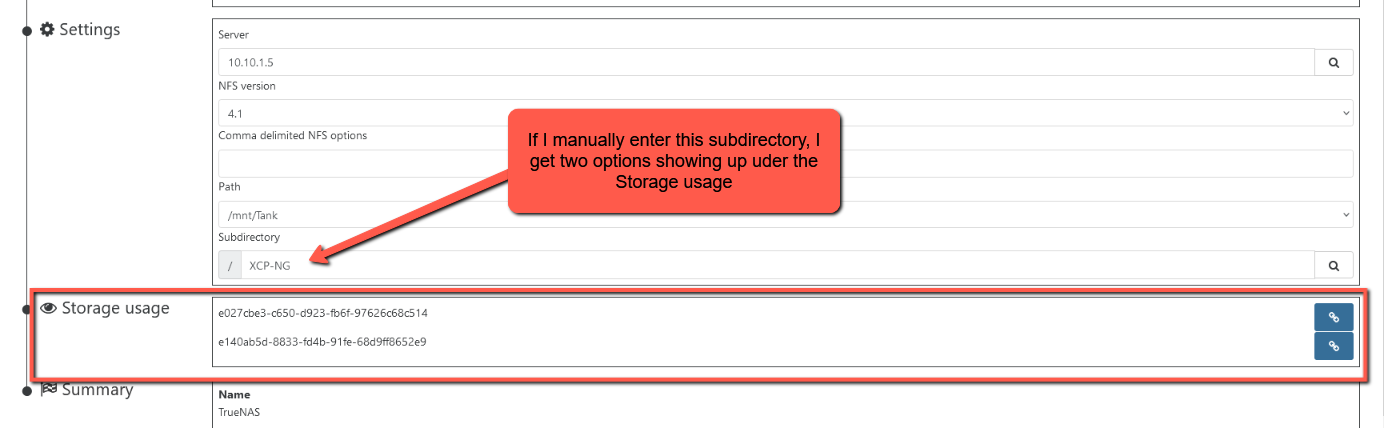

@TS79 Thanks again! The TrueNAS NFS path which I see on the existing XCP-NG Hosts has the serverpath: /mnt/Tank/XCP-NG (see screenshot below):

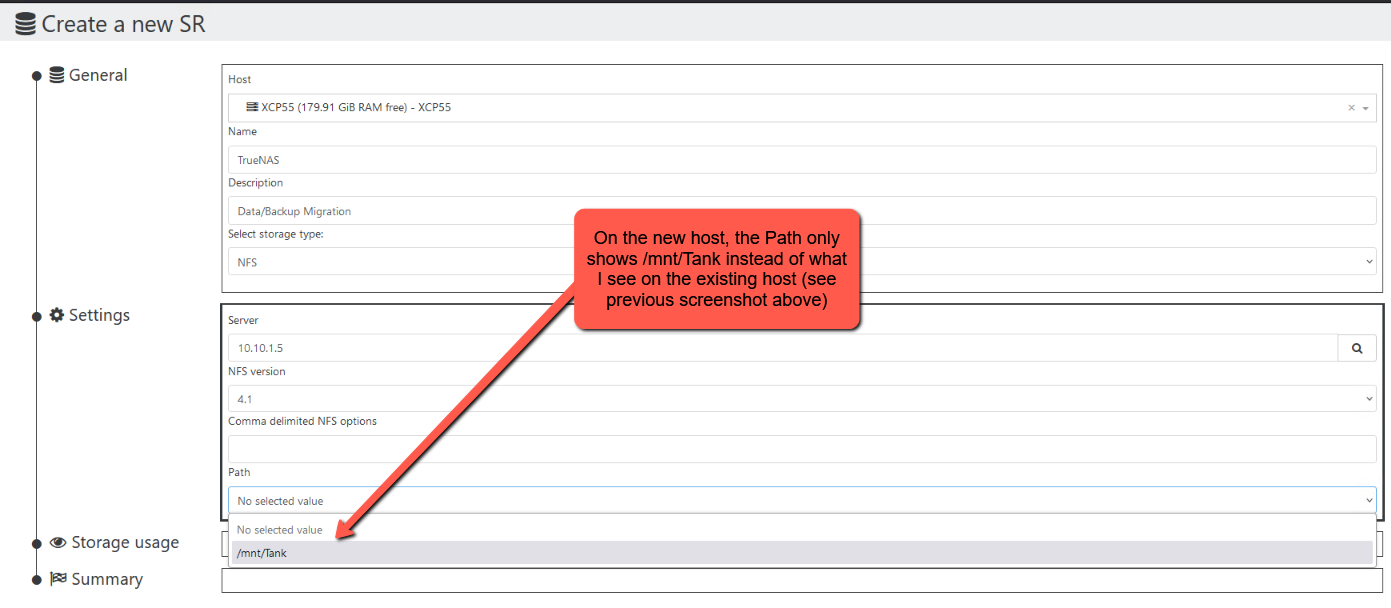

However, when I try to create this SR NFS on the new host, it only shows the serverpath as /mnt/Tank like so:

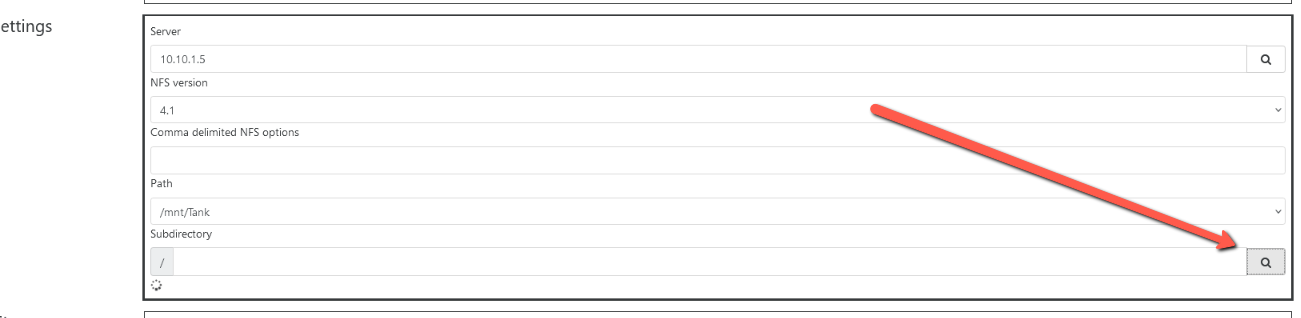

Using the search icon under the Subdirectory doesn't load anything:

And if I manually enter XCP-NG in the Subdirectory field, I'm presented with two options under the "Storage usage":

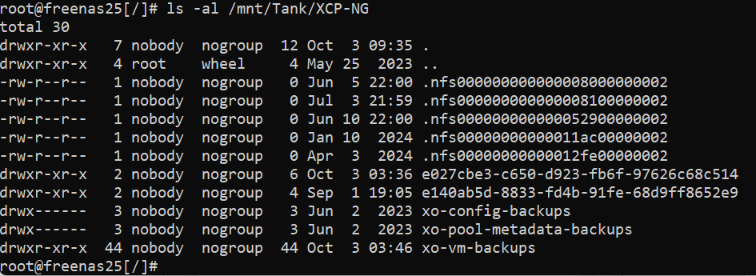

FreeNAS shows the following content in the /mnt/Tank/XCP-NG directory:

The "e027cbe3-c650-d923-fb6f-97626c68c514" and "e140ab5d-8833-fd4b-91fe-68d9ff8652e9" folders are the SRs for existing hosts that connect to TrueNAS via NFS with no issues.

On the networking error when trying to install XO using the deploy method, I ran the following command on this host and returned the following:

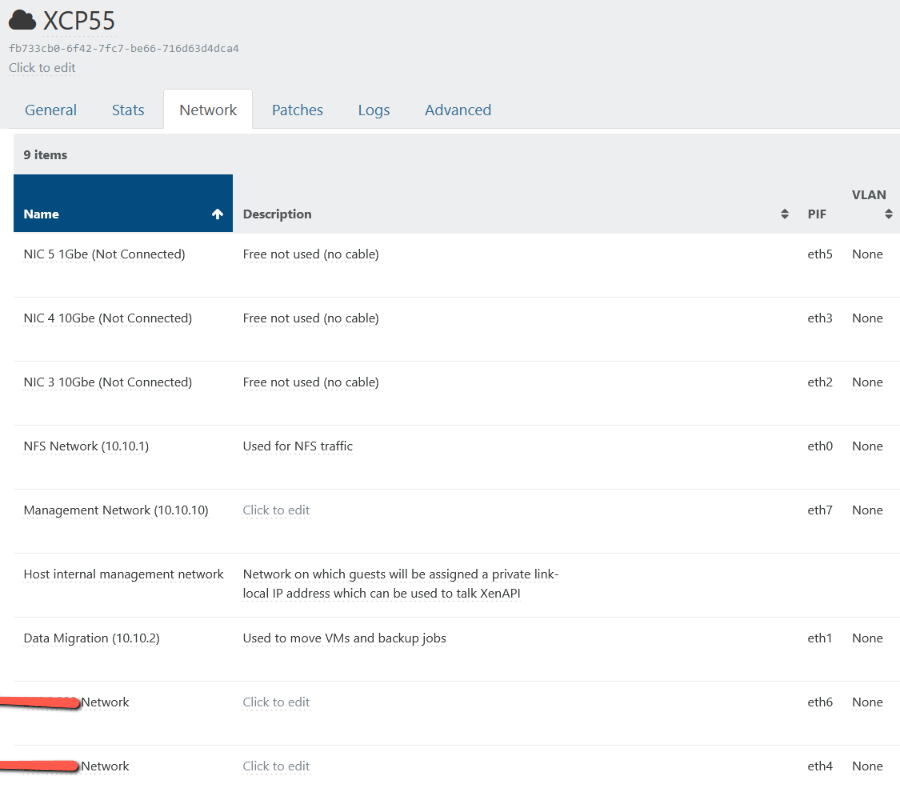

I'm not using any VLANs and not sure where to check the network configuration files but this is what I have via the XOCE for this host:

    [12:24 XCP55 ~]# brctl show
    bridge name     bridge id               STP enabled     interfaces
    [12:24 XCP55 ~]#
    [12:24 XCP55 ~]# ip route show
    default via 10.10.10.1 dev xenbr7
    10.10.1.0/24 dev xenbr0 proto kernel scope link src 10.10.1.10
    10.10.2.0/24 dev xenbr1 proto kernel scope link src 10.10.2.10
    10.10.10.0/24 dev xenbr7 proto kernel scope link src 10.10.10.5
    [12:25 XCP55 ~]#
    [12:32 XCP55 ~]# ip a show
    1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000
        link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
        inet 127.0.0.1/8 scope host lo
           valid_lft forever preferred_lft forever
    2: eth4: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq master ovs-system state UP group default qlen 1000
        link/ether a0:36:9f:8a:18:18 brd ff:ff:ff:ff:ff:ff
    3: eth5: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc mq master ovs-system state DOWN group default qlen 1000
        link/ether a0:36:9f:8a:18:19 brd ff:ff:ff:ff:ff:ff
    4: eth6: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq master ovs-system state UP group default qlen 1000
        link/ether a0:36:9f:8a:18:1a brd ff:ff:ff:ff:ff:ff
    5: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 9000 qdisc mq master ovs-system state UP group default qlen 1000
        link/ether e4:43:4b:c8:51:84 brd ff:ff:ff:ff:ff:ff
    6: eth7: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq master ovs-system state UP group default qlen 1000
        link/ether a0:36:9f:8a:18:1b brd ff:ff:ff:ff:ff:ff
    7: eth1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 9000 qdisc mq master ovs-system state UP group default qlen 1000
        link/ether e4:43:4b:c8:51:85 brd ff:ff:ff:ff:ff:ff
    8: eth2: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc mq master ovs-system state DOWN group default qlen 1000
        link/ether e4:43:4b:c8:51:86 brd ff:ff:ff:ff:ff:ff
    9: eth3: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc mq master ovs-system state DOWN group default qlen 1000
        link/ether e4:43:4b:c8:51:87 brd ff:ff:ff:ff:ff:ff
    10: ovs-system: <BROADCAST,MULTICAST> mtu 1500 qdisc noop state DOWN group default qlen 1000
        link/ether 1e:13:9f:39:76:f9 brd ff:ff:ff:ff:ff:ff
    11: xenbr4: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UNKNOWN group default qlen 1000
        link/ether a0:36:9f:8a:18:18 brd ff:ff:ff:ff:ff:ff
    14: xenbr2: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UNKNOWN group default qlen 1000
        link/ether e4:43:4b:c8:51:86 brd ff:ff:ff:ff:ff:ff
    15: xenbr6: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UNKNOWN group default qlen 1000
        link/ether a0:36:9f:8a:18:1a brd ff:ff:ff:ff:ff:ff
    16: xenbr5: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UNKNOWN group default qlen 1000
        link/ether a0:36:9f:8a:18:19 brd ff:ff:ff:ff:ff:ff
    17: xenbr3: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UNKNOWN group default qlen 1000
        link/ether e4:43:4b:c8:51:87 brd ff:ff:ff:ff:ff:ff
    18: xenbr7: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UNKNOWN group default qlen 1000
        link/ether a0:36:9f:8a:18:1b brd ff:ff:ff:ff:ff:ff
        inet 10.10.10.5/24 brd 10.10.10.255 scope global xenbr7
           valid_lft forever preferred_lft forever
    19: xenbr1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 9000 qdisc noqueue state UNKNOWN group default qlen 1000
        link/ether e4:43:4b:c8:51:85 brd ff:ff:ff:ff:ff:ff
        inet 10.10.2.10/24 brd 10.10.2.255 scope global xenbr1
           valid_lft forever preferred_lft forever
    20: xenbr0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 9000 qdisc noqueue state UNKNOWN group default qlen 1000
        link/ether e4:43:4b:c8:51:84 brd ff:ff:ff:ff:ff:ff
        inet 10.10.1.10/24 brd 10.10.1.255 scope global xenbr0
           valid_lft forever preferred_lft forever

Thank you again!

SW

-

@stevewest15 Hey dude, thanks for feeding back all that info. Is your new host part of a pool or is it a standalone host?

The fact that when you browse paths, you can see the 2 existing SR IDs means that NFS is working - at least to read the folder.

Let's ignore what ChatLLM said about the error - but it is a fair point. If the host is part of a pool, all physical networking needs to be identical across the hosts (for example, ETH0 is for LAN, ETH1 is for storage, etc.)

Interesting point: I just tried to add an NFS storage to my homelab host (standalone), and I'm having the same problem on TrueNAS Scale - I create the NFS share, set the permissions correctly, but when I go to add it in XO, I also cannot see folders correctly and it won't let me add!

@olivierlambert - perhaps a bug in recent XO(A) updates? I deploy & update mine from source using the ronivay script, onto Ubuntu 22.04 LTS. Not sure when the potential bug surfaced though, as I don't often try to add new NFS storage at home...

-

I'm not aware of any change in the SR creation code for years.

-

@TS79 Thanks for the help! This host is NOT part of a pool. It's a standalone host.

I'm so glad you are also getting the same issue!!! Man, I've been trying to figure out this error/bug for the past several days. Like you, it was working at some point, as my old hosts have no problem w/ the NFS share from FreeNAS.

I used the Jarli01 script to build the XOCE and keep it updated regularly. I was 3 commits behind but just updated to the latest commit and still have the same issue.

@olivierlambert Any ideas why I can't deploy XO? Not sure if the logs I provided above are helpful.

Please let me know if you wish me to provide you with any additional logs, etc.

Thank you both!

SW

-

@olivierlambert @TS79 I just rebooted the server, ran the XOCE update script, and tried adding the SR NFS to the new host and I was able to create the SR NFS!

I'm about to test it by installing a VM to confirm it's working properly!

-

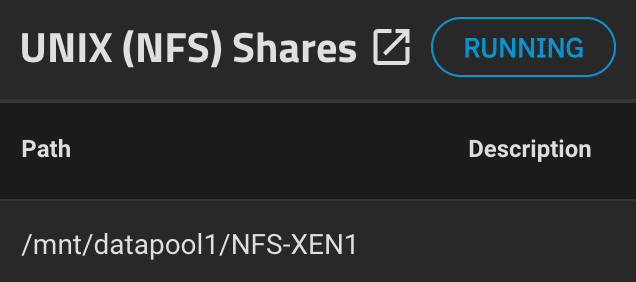

@stevewest15 Just did the same (well, using the ronivay script to update) - still had the same error adding NFS storage to the host. Noticed the following oddities:

- I only typed in the IP address of the NFS server, then immediately selected the NFS version 4.1 before clicking anything else

- After hitting the "search" icon to the right of the NFS Server's IP address, the only Path option was /mnt. This is NOT expected, as in TrueNAS Scale, the NFS export is set to /mnt/datapool/NFS-XEN1

- Clicking the "search" icon to the right of the Subdirectory field didn't show any results, so I entered the datapool/NFS-XEN1 manually

- This kicked up an SR creation error

- I then set the NFS version to 'Default' and clicked Create again - this kicked up a different error specifically saying that NFS version 3 failed

- I changed back to NFS 4.1, clicked Create again, and this time it worked...
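When the path search only offers a parent such as /mnt, the Subdirectory value to type by hand is simply the export path relative to that parent. A minimal sketch of that arithmetic, using the paths from this thread; `subdirectory_for` is a made-up helper name, and this only illustrates the path handling, not how XO builds the mount internally:

```python
import posixpath


def subdirectory_for(export_path, offered_path):
    """Return the Subdirectory value that reaches export_path from offered_path."""
    export = posixpath.normpath(export_path)
    offered = posixpath.normpath(offered_path)
    # Refuse paths that are not actually below the offered server path.
    if posixpath.commonpath([export, offered]) != offered:
        raise ValueError(f"{export} is not under {offered}")
    rel = posixpath.relpath(export, offered)
    return "" if rel == "." else rel


print(subdirectory_for("/mnt/datapool/NFS-XEN1", "/mnt"))  # datapool/NFS-XEN1
print(subdirectory_for("/mnt/Tank/XCP-NG", "/mnt/Tank"))   # XCP-NG
```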

Very sporadic behaviour, and not a problem I've had until recently. Many previous months of testing with NFS have all added perfectly (once I had the TrueNAS permissions set correctly).

-

@TS79 It seems something is not stable w/ SR NFS creation. The strange thing is XOCE allowed the creation of the NFS Remote under Settings > Remotes but refused to create the SR NFS until I patched to latest build (I was 3 commits behind) and then rebooted the entire server.

I was hoping to test this via just XO to rule out if it's an issue w/ XOCE or not but I couldn't get the XO deploy script to work. But I'll have to tackle that issue another day.

I was able to install a VM using the SR NFS and will be running some disk I/O tests to see how stable the NFS connection is.

Thank you again for your help and testing this on your home lab! Greatly appreciate it!

Best Regards,

SW

-

O olivierlambert marked this topic as a question on


-

BR & SR are vastly different, and not mounted by the same machine (XO vs XCP-ng). Happy to see it works now.

-

O olivierlambert has marked this topic as solved on


-

I ran into this problem with a fresh install of XCP-ng and TrueNAS last night.

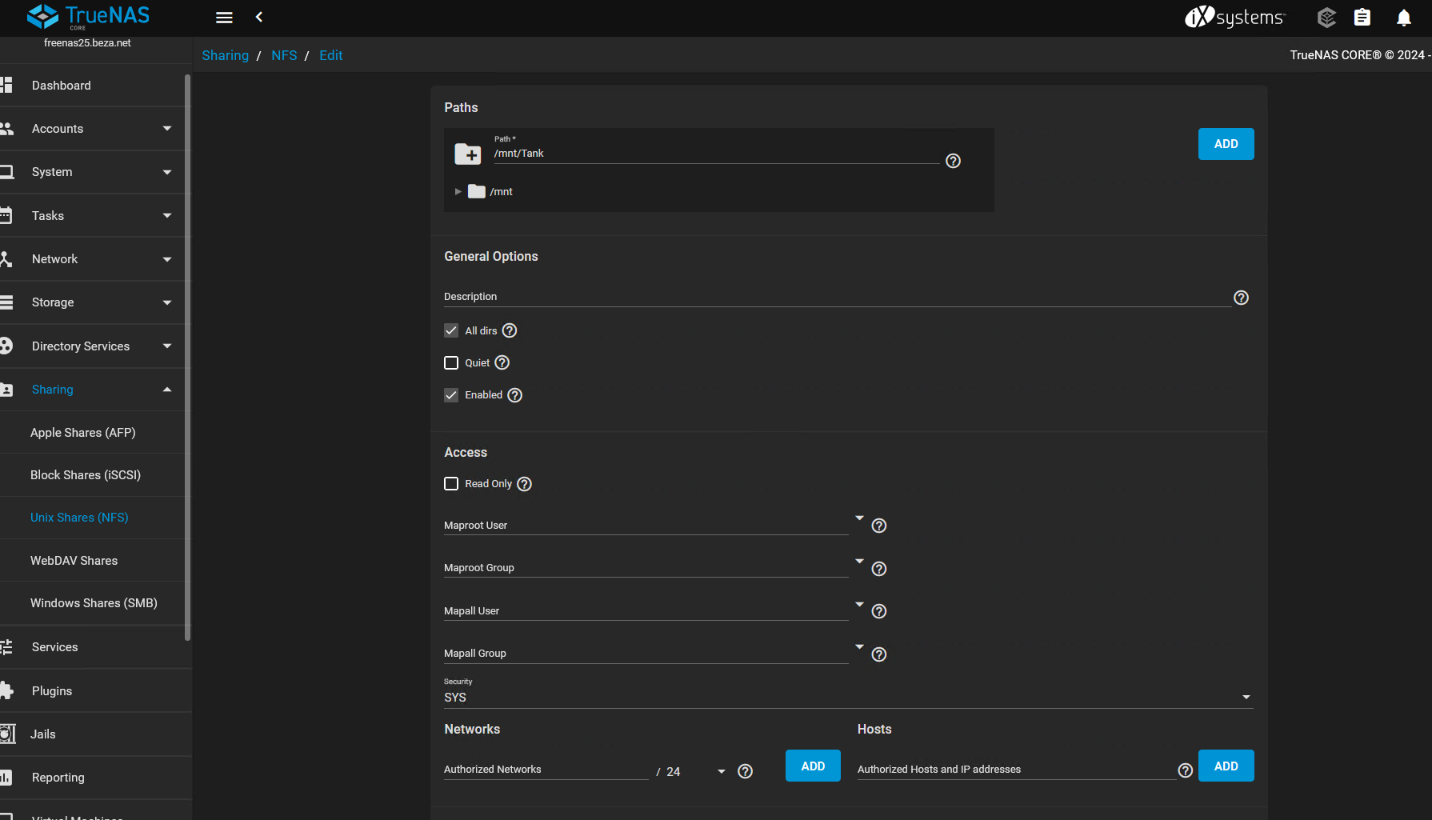

The fix is on the TrueNAS side: in the NFS share's Advanced Options, set the maproot user to 'root' and the maproot group to 'wheel'.

By default the fields are empty.
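One way to check whether root is still being remapped on the share is to write a scratch file as root on a manually mounted copy of the export and look at which uid ends up owning it. A minimal sketch, assuming the export is already mounted somewhere on the client (the /mnt/nfs-test path in the comment is hypothetical) and that the script runs as root. `probe_root_write` is an illustrative name, not part of any XCP-ng or TrueNAS tooling.

```python
import os
import tempfile


def probe_root_write(directory):
    """Create a scratch file in directory, return its owner uid, then delete it."""
    fd, path = tempfile.mkstemp(prefix="sr-probe-", dir=directory)
    try:
        return os.fstat(fd).st_uid
    finally:
        os.close(fd)
        os.unlink(path)


# Example (hypothetical mount point), run as root on the client:
#   probe_root_write("/mnt/nfs-test")
# A result of 0 suggests root is preserved on the share. A PermissionError or a
# non-zero uid suggests root is still being mapped to another user.
```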