CEPH FS Storage Driver

-

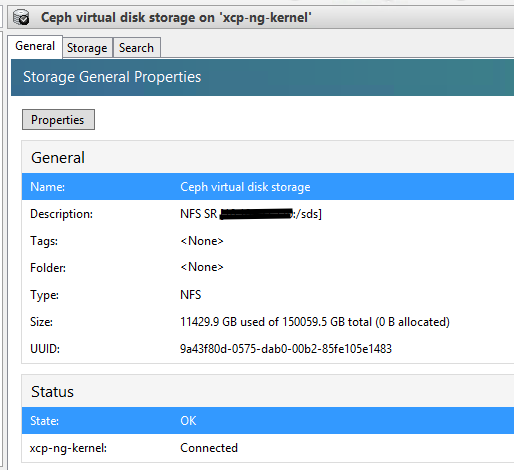

As a side experiment, I was able to extract and test the CEPH FS kernel module for XCP-NG 7.4+ and mount a multi-mon Ceph cluster (Luminous).

Once ceph.ko module is in place, XCP-NG can mount the ceph fs mount point similar to NFS.

e.g. In NFSSR we use

#mount.nfs4 addr:remotepath localpath

while for CEPHSR we can use

#mount.ceph addr1,addr2,addr3,addr4:remotepath localpath

I'm currently looking at NFSSR.py to create CEPHFSSR.py and will share it once ready. Meanwhile, if anyone wants to help by testing ceph.ko or developing CEPHFSSR.py, kindly ping here. Like EXT4 and XFS, this too will have some cleanup issues.
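As a rough illustration of the mount.ceph form above, here is a minimal Python sketch that assembles the argument list from a list of monitor addresses. The function name `build_cephfs_mount_cmd` and the example paths are hypothetical and are not taken from NFSSR.py or from any CEPHFSSR.py; the `name=` and `secretfile=` options are the ones mentioned later in this thread.

```python
import shlex


def build_cephfs_mount_cmd(monitors, remote_path, local_path, options=None):
    """Return an argv list of the form: mount.ceph mon1,mon2:/path /local [-o opts].

    Hypothetical helper for illustration only; it builds the command but
    does not run it.
    """
    if not monitors:
        raise ValueError("at least one monitor address is required")
    if not remote_path.startswith("/"):
        raise ValueError("remote path must be absolute")
    # Monitors are comma-separated, then ':' and the path inside CephFS.
    device = ",".join(monitors) + ":" + remote_path
    cmd = ["mount.ceph", device, local_path]
    if options:
        cmd += ["-o", options]
    return cmd


if __name__ == "__main__":
    argv = build_cephfs_mount_cmd(
        ["addr1", "addr2", "addr3"],
        "/",
        "/mnt/cephfs",  # assumed local mount point
        "name=admin,secretfile=/etc/ceph/admin.secret",
    )
    print(" ".join(shlex.quote(a) for a in argv))
```

Keeping the command as a list (rather than one string) means it could be handed to `subprocess` without shell quoting issues, which is probably what a driver would want.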

If this works as expected, I'd request @olivierlambert and @borzel to look at possibilities of integrating CEPH FS option in XO/XC near NFS SR.

Note: This is completely different from RBDSR, which is developed by @rposudnevskiy and uses the RBD image protocol of Ceph.

Bonus: If someone knows how nfs-ganesha fits in this - buzzz... e.g. Each CEPH FS node can have an NFS server while each XCP-NG host mounts all of them using NFS 4.1 (pNFS), bypassing the need for ceph.ko. We can also rebuild the NFS module as CONFIG_NFSD_PNFS is not set by default.

Caveats: CEPH FS caching is not fully explored.

State: Ready. See post below for instructions on how to use.

-

So, taking inspiration from the existing NFS driver and making small modifications to it, this patch allows you to use both NFS and Ceph FS at the same time.

Note: The patch is applied against XCP-NG 7.6.0

# cd /

# wget "https://gist.githubusercontent.com/rushikeshjadhav/af53bb5747365875f0ab21bd3a64c6fe/raw/59ef7a4b54574e4163da1ac39acd640554bd0d24/ceph.patch"

# patch -p0 < ceph.patch

Apart from the patch, ceph.ko & ceph-common would be needed.

To install ceph-common on XCP-NG

# yum install centos-release-ceph-luminous --enablerepo=extras

# yum install ceph-common

To install and load ceph.ko

# wget -O ceph-4.4.52-4.0.12.x86_64.rpm "https://github.com/rushikeshjadhav/ceph-4.4.52/blob/master/RPMS/x86_64/ceph-4.4.52-4.0.12.x86_64.rpm?raw=true"

# yum install ceph-4.4.52-4.0.12.x86_64.rpm

# modprobe -v ceph

(Optional) User experience:

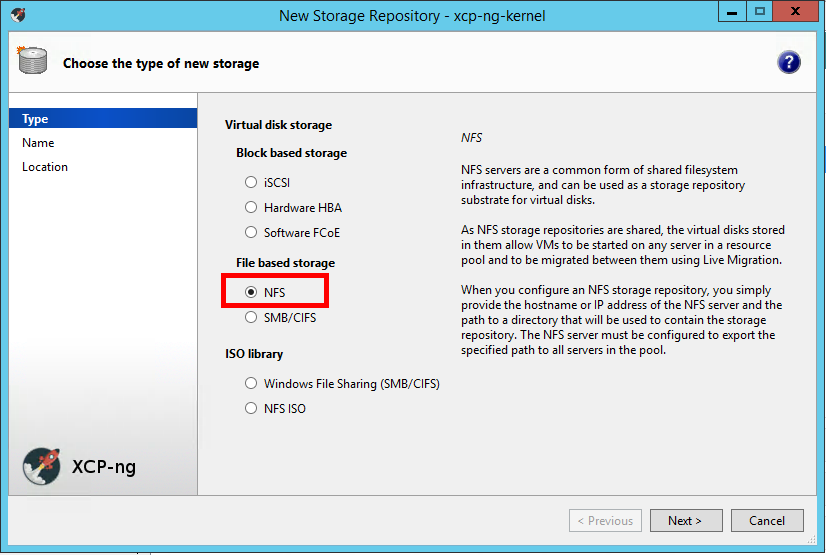

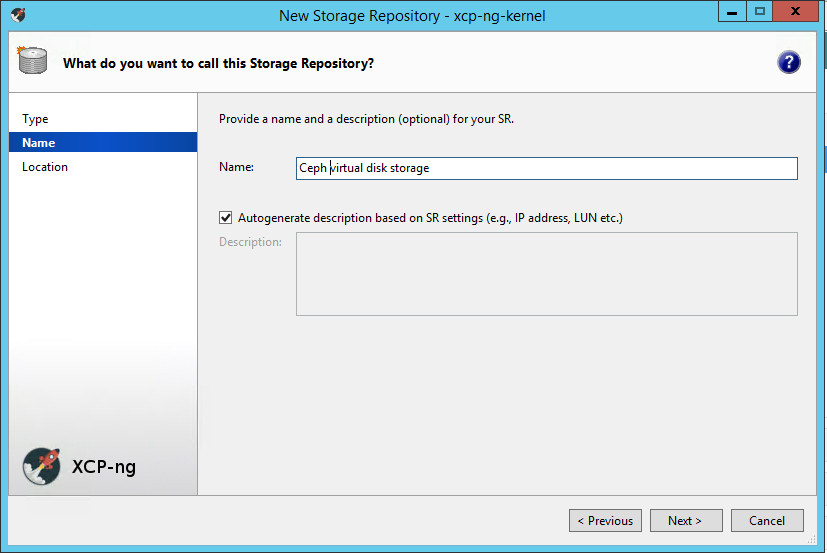

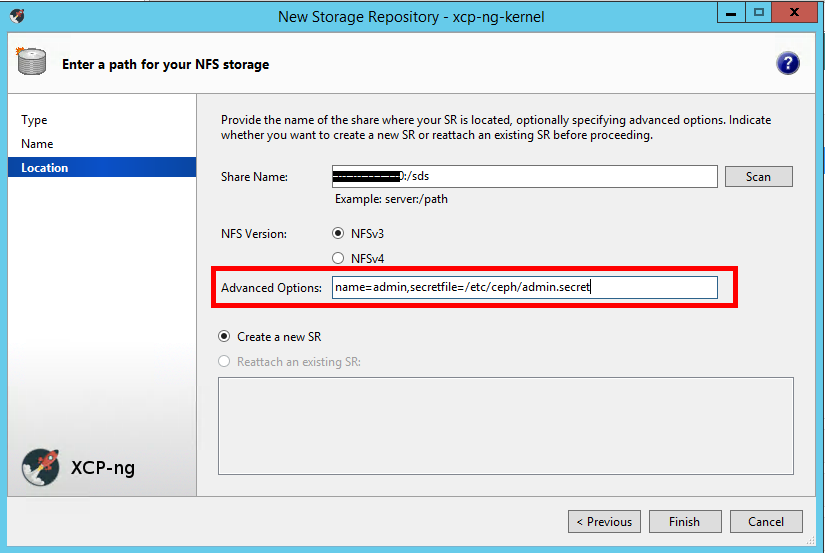

In Share Name, you can put multiple addresses from your CEPH mons.

In Advanced Options, you need to add your ceph cephx user details, such as name and its secret file location. These will be used by mount.ceph.

Note: Keep secret file in /etc/ceph/ with permissions 600.

The current SR detection patch relies on the presence of the word "ceph" in Advanced Options to discriminate between NFS and CEPH FS.
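To make the detection rule and the secret file advice concrete, here is a small Python sketch. All three function names are hypothetical and this is not the code from the patch; it only assumes that the Advanced Options field arrives as a comma-separated string such as `name=admin,secretfile=/etc/ceph/admin.secret`.

```python
import os
import stat


def is_cephfs_options(options):
    """True when the free-form options string mentions 'ceph'.

    Mirrors the rule described in the post: the word "ceph" anywhere in
    the options (for example in the /etc/ceph/ path) selects CEPH FS.
    """
    return bool(options) and "ceph" in options.lower()


def parse_mount_options(options):
    """Split 'k1=v1,k2=v2,flag' into a dict; bare flags map to None."""
    result = {}
    for item in (options or "").split(","):
        item = item.strip()
        if not item:
            continue
        key, sep, value = item.partition("=")
        result[key] = value if sep else None
    return result


def secretfile_is_private(path):
    """True when the file's permission bits are exactly 0600."""
    mode = stat.S_IMODE(os.stat(path).st_mode)
    return mode == 0o600
```

One consequence of a substring check like this is that any NFS options string that happens to contain "ceph" would also be treated as CEPH FS, so a dedicated SR type would likely be more robust in the long run.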

Log Location: /var/log/SMlog

Edit: Created RPM installer for ceph.ko

-

[Edited to remove huge quote]

WOW @r1

This sounds really good.

CephFS is the one with the worst performance.

But at least if it works it's better than nothing.

This also doesn't seem so disruptive, just a small patch.

So I guess, fewer changes, fewer issues with the standard installation of XCP.

Good job!

I'll start to test it at beginning of march.

Count on me.

-

Thanks @maxcuttins. Let me know how it goes..

-

RBD is faster because of client side caching. Ceph FS does not do it but can be made faster by enabling fscache. Once you have your numbers we will retest with fscache.

-

Ok, I have mine in a very restricted environment and do not have any logins defined for the ceph access.

So if I don't put any user authentication in the options, will it still work? I had issues with your plugin around that same problem.

I'm also still on 7.5 and my ceph is mimic. Will that be an issue?

-

Can you show your mount command by doing some example mount? e.g.

#mount.ceph add1:remotepath localpath /mnt? Does this work as it is?

The advanced options are necessary for now to discriminate between NFS and Ceph. But I think once I know your working command, I will be able to generalize it.

BTW - I hope you installed the ceph.ko as mentioned in earlier steps.

-

@r1 I'm in planning phase right now since these boxes are actually production and I'll have to figure how to do this without it being a possible danger to my production enviro. The box I have that needs it the worst I still haven't upgraded from xenserver 6.5. I need to setup a fully dev box for this. I've got plans for upgrading my infrastructure for the ceph and virtual public network that this would help tremendously.

-

Sadly XS 6.5 uses the 3.10 kernel, which does not support Ceph outright, and you would most likely need to update this host to a recent XCP version.

-

@r1 Yeah I just haven't had time yet since it has one of our heaviest used virtuals on it and not good to upset the masses lol.

-

@scboley said in CEPH FS Storage Driver:

and not good to upset the masses

this is the reason I worked at night the last two weeks at work

-

@r1 sudo mount -t ceph sanadmin.nams.net:6789:/ /mnt/nfsmigrate

-

@scboley nice. It should work straight as mentioned in 2nd post of this thread.

-

Ok,

I started my adventure but soon ran into an issue.

This command installs the nautilus release repo:

yum install centos-release-ceph-nautilus --enablerepo=extras

and worked fine.

This one should install ceph-common but cannot find many dependencies:

yum install ceph-common
Loaded plugins: fastestmirror
centos-ceph-nautilus | 2.9 kB 00:00:00
centos-ceph-nautilus/7/x86_64/primary_db | 62 kB 00:00:00
Loading mirror speeds from cached hostfile
* centos-ceph-nautilus: mirrors.prometeus.net
Resolving Dependencies
--> Running transaction check
---> Package ceph-common.x86_64 2:14.2.0-1.el7 will be installed
--> Processing Dependency: python-rgw = 2:14.2.0-1.el7 for package: 2:ceph-common-14.2.0-1.el7.x86_64
... [CUT] ...
Error: Package: 2:librgw2-14.2.0-1.el7.x86_64 (centos-ceph-nautilus) Requires: libibverbs.so.1()(64bit)
Error: Package: leveldb-1.12.0-5.el7.1.x86_64 (centos-ceph-nautilus) Requires: libsnappy.so.1()(64bit)
Error: Package: 2:libcephfs2-14.2.0-1.el7.x86_64 (centos-ceph-nautilus) Requires: librdmacm.so.1()(64bit)
Error: Package: 2:ceph-common-14.2.0-1.el7.x86_64 (centos-ceph-nautilus) Requires: liblz4.so.1()(64bit)
Error: Package: 2:libradosstriper1-14.2.0-1.el7.x86_64 (centos-ceph-nautilus) Requires: libibverbs.so.1()(64bit)
Error: Package: 2:librbd1-14.2.0-1.el7.x86_64 (centos-ceph-nautilus) Requires: libibverbs.so.1()(64bit)
Error: Package: 2:ceph-common-14.2.0-1.el7.x86_64 (centos-ceph-nautilus) Requires: python-requests
Error: Package: 2:librgw2-14.2.0-1.el7.x86_64 (centos-ceph-nautilus) Requires: librdmacm.so.1()(64bit)
Error: Package: 2:librados2-14.2.0-1.el7.x86_64 (centos-ceph-nautilus) Requires: librdmacm.so.1()(64bit)
Error: Package: 2:ceph-common-14.2.0-1.el7.x86_64 (centos-ceph-nautilus) Requires: libibverbs.so.1()(64bit)
Error: Package: 2:libcephfs2-14.2.0-1.el7.x86_64 (centos-ceph-nautilus) Requires: libibverbs.so.1()(64bit)
Error: Package: 2:ceph-common-14.2.0-1.el7.x86_64 (centos-ceph-nautilus) Requires: libfuse.so.2()(64bit)
Error: Package: 2:ceph-common-14.2.0-1.el7.x86_64 (centos-ceph-nautilus) Requires: libsnappy.so.1()(64bit)
Error: Package: 2:librbd1-14.2.0-1.el7.x86_64 (centos-ceph-nautilus) Requires: librdmacm.so.1()(64bit)
Error: Package: 2:librados2-14.2.0-1.el7.x86_64 (centos-ceph-nautilus) Requires: librdmacm.so.1(RDMACM_1.0)(64bit)
Error: Package: 2:ceph-common-14.2.0-1.el7.x86_64 (centos-ceph-nautilus) Requires: librabbitmq.so.4()(64bit)
Error: Package: 2:libradosstriper1-14.2.0-1.el7.x86_64 (centos-ceph-nautilus) Requires: librdmacm.so.1()(64bit)
Error: Package: 2:librgw2-14.2.0-1.el7.x86_64 (centos-ceph-nautilus) Requires: librabbitmq.so.4()(64bit)
Error: Package: 2:librados2-14.2.0-1.el7.x86_64 (centos-ceph-nautilus) Requires: libibverbs.so.1()(64bit)
Error: Package: 2:ceph-common-14.2.0-1.el7.x86_64 (centos-ceph-nautilus) Requires: libtcmalloc.so.4()(64bit)
Error: Package: 2:librados2-14.2.0-1.el7.x86_64 (centos-ceph-nautilus) Requires: libibverbs.so.1(IBVERBS_1.0)(64bit)
Error: Package: 2:librados2-14.2.0-1.el7.x86_64 (centos-ceph-nautilus) Requires: libibverbs.so.1(IBVERBS_1.1)(64bit)
Error: Package: 2:ceph-common-14.2.0-1.el7.x86_64 (centos-ceph-nautilus) Requires: librdmacm.so.1()(64bit)
You could try using --skip-broken to work around the problem
You could try running: rpm -Va --nofiles --nodigest

Of course I'm using a higher version of Ceph than your post.
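A wall of dependency errors like this usually repeats a handful of missing libraries. As a convenience, here is a short Python sketch that collapses such output into the distinct unresolved names. The function name `missing_requirements` is hypothetical, and the parsing assumes only the `Requires: <name>` pattern visible in the output above.

```python
import re

# Matches the token that follows "Requires:" in yum's error lines.
REQUIRES_RE = re.compile(r"Requires:\s+(\S+)")


def missing_requirements(yum_output):
    """Return the sorted, de-duplicated targets of all 'Requires:' entries.

    Suffixes such as "()(64bit)" or "(IBVERBS_1.0)(64bit)" are stripped so
    that different symbol versions of one library count once.
    """
    names = set()
    for match in REQUIRES_RE.finditer(yum_output):
        names.add(match.group(1).split("(", 1)[0])
    return sorted(names)
```

Feeding it the saved output of the failed `yum install` should give a short list of library names, which is easier to match against the repositories that provide them.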

Any hint anyway?

-

Ok, solved.

Of course Ceph needs the EPEL and Base repos enabled.

This worked:

yum install epel-release -y --enablerepo=extras

yum install ceph-common --enablerepo='centos-ceph-nautilus' --enablerepo='epel' --enablerepo='base'

-

@r1 said in CEPH FS Storage Driver:

patch -p0 < ceph.patch

This did not go so smoothly, reporting "unexpectedly ends":

patch -p0 < ceph.patch
patching file /opt/xensource/sm/nfs.py
patching file /opt/xensource/sm/NFSSR.py
patch unexpectedly ends in middle of line
Hunk #3 succeeded at 197 with fuzz 1.

-

Hi @maxcuttins - what's your XCP-NG version and can you share checksum for your NFSSR.py file?

-

Hi,

I also play around with XCP-ng and Ceph and just tried to use CephFS as a storage repository. I am using XCP-ng 8.0.0 with Ceph Nautilus. Before that, I tried to use LVM on top of an RBD, which basically works, but performance is really bad this way.

So I downloaded your patch and ran it on my test 8.0.0 xenserver; all hunks succeeded. ceph is also installed, modprobe ceph works. Next I tried to add a new CephFS repository just as you described it above.

In the end I see an error box popping up in XenCenter: "SM has thrown a generic python exception". And that's all.

In /var/log/SMlog I see the log attached below.

Thanks

Rainer

/var/log/SMlog:

Sep 26 09:42:54 rzinstal4 SM: [18075] lock: opening lock file /var/lock/sm/d63d0d49-522d-6160-a1eb-a9d5f34cec1e/sr
Sep 26 09:42:54 rzinstal4 SM: [18075] lock: acquired /var/lock/sm/d63d0d49-522d-6160-a1eb-a9d5f34cec1e/sr
Sep 26 09:42:54 rzinstal4 SM: [18075] sr_create {'sr_uuid': 'd63d0d49-522d-6160-a1eb-a9d5f34cec1e', 'subtask_of': 'DummyRef:|55cbc4ef-c6e9-4dba-aa5f-8f56b2473443|SR.create', 'args': ['0'], 'host_ref': 'OpaqueRef:0c03f516-7ed5-48ac-895b-51833177aed5', 'session_ref': 'OpaqueRef:d472846e-c6aa-467d-ac8b-0f401fbc698c', 'device_config': {'server': 'ip_of_mon', 'SRmaster': 'true', 'serverpath': '/sds', 'options': 'name=admin,secretfile=/etc/ceph/admin.secret'}, 'command': 'sr_create', 'sr_ref': 'OpaqueRef:9fb35d75-a45c-4ce4-b9f4-8ed8072ad6ad'}
Sep 26 09:42:54 rzinstal4 SM: [18075] ['/usr/sbin/rpcinfo', '-p', 'ip_of_mon']
Sep 26 09:42:54 rzinstal4 SM: [18075] FAILED in util.pread: (rc 1) stdout: '', stderr: 'rpcinfo: can't contact portmapper: RPC: Remote system error - Connection refused
Sep 26 09:42:54 rzinstal4 SM: [18075] '
Sep 26 09:42:54 rzinstal4 SM: [18075] Unable to obtain list of valid nfs versions
Sep 26 09:42:54 rzinstal4 SM: [18075] lock: released /var/lock/sm/d63d0d49-522d-6160-a1eb-a9d5f34cec1e/sr
Sep 26 09:42:54 rzinstal4 SM: [18075] ***** generic exception: sr_create: EXCEPTION <type 'exceptions.TypeError'>, not all arguments converted during string formatting
Sep 26 09:42:54 rzinstal4 SM: [18075] File "/opt/xensource/sm/SRCommand.py", line 110, in run
Sep 26 09:42:54 rzinstal4 SM: [18075] return self._run_locked(sr)
Sep 26 09:42:54 rzinstal4 SM: [18075] File "/opt/xensource/sm/SRCommand.py", line 159, in _run_locked
Sep 26 09:42:54 rzinstal4 SM: [18075] rv = self._run(sr, target)
Sep 26 09:42:54 rzinstal4 SM: [18075] File "/opt/xensource/sm/SRCommand.py", line 323, in _run
Sep 26 09:42:54 rzinstal4 SM: [18075] return sr.create(self.params['sr_uuid'], long(self.params['args'][0]))
Sep 26 09:42:54 rzinstal4 SM: [18075] File "/opt/xensource/sm/NFSSR", line 216, in create
Sep 26 09:42:54 rzinstal4 SM: [18075] raise exn
Sep 26 09:42:54 rzinstal4 SM: [18075]
Sep 26 09:42:54 rzinstal4 SM: [18075] ***** NFS VHD: EXCEPTION <type 'exceptions.TypeError'>, not all arguments converted during string formatting
Sep 26 09:42:54 rzinstal4 SM: [18075] File "/opt/xensource/sm/SRCommand.py", line 372, in run
Sep 26 09:42:54 rzinstal4 SM: [18075] ret = cmd.run(sr)
Sep 26 09:42:54 rzinstal4 SM: [18075] File "/opt/xensource/sm/SRCommand.py", line 110, in run
Sep 26 09:42:54 rzinstal4 SM: [18075] return self._run_locked(sr)
Sep 26 09:42:54 rzinstal4 SM: [18075] File "/opt/xensource/sm/SRCommand.py", line 159, in _run_locked
Sep 26 09:42:54 rzinstal4 SM: [18075] rv = self._run(sr, target)
Sep 26 09:42:54 rzinstal4 SM: [18075] File "/opt/xensource/sm/SRCommand.py", line 323, in _run
Sep 26 09:42:54 rzinstal4 SM: [18075] return sr.create(self.params['sr_uuid'], long(self.params['args'][0]))
Sep 26 09:42:54 rzinstal4 SM: [18075] File "/opt/xensource/sm/NFSSR", line 216, in create
Sep 26 09:42:54 rzinstal4 SM: [18075] raise exn
Sep 26 09:42:54 rzinstal4 SM: [18075]
Sep 26 09:42:54 rzinstal4 SM: [18075] lock: closed /var/lock/sm/d63d0d49-522d-6160-a1eb-a9d5f34cec1e/sr
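Two things stand out in this log. First, the NFS probe (`rpcinfo -p`) still ran against the Ceph monitor and failed, which suggests the NFS code path was taken at least partly. Second, the exception that surfaced is `TypeError: not all arguments converted during string formatting`, which Python raises when the `%` operator receives more values than the template has placeholders. The patched NFSSR code is not shown in this thread, so the snippet below is only a generic illustration of that class of bug, with hypothetical function names and messages. It is written for Python 3; the traceback above comes from Python 2 (note `long(...)`), where the `%` behaviour is the same.

```python
def format_error_buggy(detail):
    """Reproduce the failure: the template has no %s for 'detail'."""
    # Raises TypeError: not all arguments converted during string formatting
    return "Unable to mount" % detail


def format_error_fixed(detail):
    """Give the value a placeholder and wrap it in a 1-tuple.

    The 1-tuple keeps the call safe even if 'detail' is itself a tuple
    or list, which would otherwise be unpacked into several arguments.
    """
    return "Unable to mount: %s" % (detail,)


if __name__ == "__main__":
    try:
        format_error_buggy("rpcinfo failed")
    except TypeError as exc:
        print("buggy version raised:", exc)
    print(format_error_fixed("rpcinfo failed"))
```

If the real cause is of this kind, the original mount or probe error is being masked by the formatting error, so checking line 216 of the patched NFSSR file and the message it builds would be a reasonable first step.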

-

Hello olivierlambert,

thanks for the hint. I edited my post correcting the formatting.