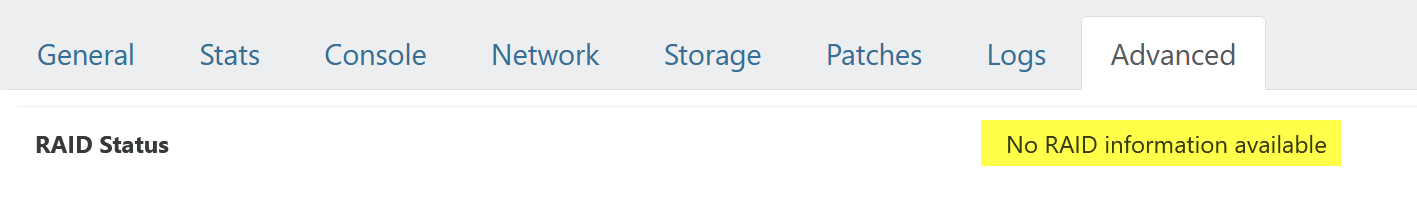

RAID Status on the Advanced tab of the host

-

Under what circumstances is the RAID array status displayed here? I have an mdadm RAID array on my host (dom0), but here it says "No RAID information available":

-

Hi,

Which XCP-ng version are you on?

-

@olivierlambert

8.3, including today's candidates.

-

Hmm, it would be interesting to check the plugin report manually:

```
xe host-call-plugin host-uuid=<uuid> plugin=raid.py fn=check_raid_pool
```

-

```
[18:38 vms04 ~]# xe host-call-plugin host-uuid=5f94209f-8801-4179-91d5-5bdf3eb1d3f1 plugin=raid.py fn=check_raid_pool
{}
[18:38 vms04 ~]#
[18:38 vms04 ~]# cat /proc/mdstat
Personalities : [raid0]
md0 : active raid0 sdb[1] sda[0] sdc[2]
      11720658432 blocks super 1.2 512k chunks

unused devices: <none>
[18:56 vms04 ~]# mdadm --detail /dev/md0
/dev/md0:
           Version : 1.2
     Creation Time : Fri May 2 14:43:06 2025
        Raid Level : raid0
        Array Size : 11720658432 (11177.69 GiB 12001.95 GB)
      Raid Devices : 3
     Total Devices : 3
       Persistence : Superblock is persistent

       Update Time : Fri May 2 14:43:06 2025
             State : clean
    Active Devices : 3
   Working Devices : 3
    Failed Devices : 0
     Spare Devices : 0

        Chunk Size : 512K

Consistency Policy : none

              Name : vms04:0 (local to host vms04)
              UUID : bbdc7253:792a8ada:ee21a207:4b8d52d2
            Events : 0

    Number   Major   Minor   RaidDevice State
       0       8        0        0      active sync   /dev/sda
       1       8       16        1      active sync   /dev/sdb
       2       8       32        2      active sync   /dev/sdc
[18:56 vms04 ~]#
```

-

In theory, if the RAID was created by the installer (i.e. during installation), you should have `/dev/md127`. That's what the plugin checks:

https://github.com/xcp-ng/xcp-ng-xapi-plugins/blob/master/SOURCES/etc/xapi.d/plugins/raid.py#L18

-

Yeah, that's it, thanks.

`device = '/dev/md0'`
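To confirm which md device names actually exist on a host (here `/dev/md0`, not the `/dev/md127` the plugin looks for), `/proc/mdstat` can be read directly. Below is a minimal Python sketch, not part of the plugin: `list_md_arrays` is a hypothetical name, and the parsing rules are an assumption based on the `/proc/mdstat` output posted earlier in the thread.

```python
import re
from typing import Dict

# Array header lines look like "md0 : active raid0 sdb[1] sda[0] sdc[2]".
HEADER = re.compile(r"^(md\w*)\s*:\s*(.*)$")


def list_md_arrays(mdstat_text: str) -> Dict[str, dict]:
    """Hypothetical helper: map each /dev/mdX to its state, level and member disks."""
    arrays = {}
    for line in mdstat_text.splitlines():
        m = HEADER.match(line)
        if not m:
            # Skips "Personalities", block-count continuation lines, "unused devices".
            continue
        name, rest = m.groups()
        tokens = rest.split()
        state = tokens[0] if tokens else "unknown"
        # Drop flags such as "(auto-read-only)".
        others = [t for t in tokens[1:] if not t.startswith("(")]
        # The level (e.g. "raid0") comes before the members; inactive arrays may omit it.
        level = None
        if others and "[" not in others[0]:
            level = others.pop(0)
        # Members look like "sdb[1]" or "sda[0](S)"; keep only the disk name.
        members = sorted(t.split("[")[0] for t in others)
        arrays["/dev/" + name] = {"state": state, "level": level, "members": members}
    return arrays
```

Fed the `/proc/mdstat` text from vms04 (e.g. `list_md_arrays(open('/proc/mdstat').read())`), this returns a single `/dev/md0` entry with level `raid0` and members `sda`, `sdb`, `sdc`, and no `/dev/md127`.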

-

How did you create your RAID in the first place?

-

The array was created manually after installation, using unused disks. It was then attached as a local SR in the usual way via XO.

It would be nice if the plugin also reported the state of other mdadm RAID arrays. After all, the "All mdadm RAID are healthy" message kind of implies that it does.
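Checking every array rather than one hard-coded device could look roughly like the minimal Python sketch below. It is hypothetical, not the plugin's code: it assumes the device list comes from elsewhere (for example `/proc/mdstat`), parses the `Key : value` lines of `mdadm --detail` (as in the output posted earlier in the thread), and uses a simple heuristic for "healthy": no failed devices and no `degraded`, `fail`, or `inactive` in the `State` line.

```python
import re
import subprocess
from typing import Dict, Iterable

# "Key : value" lines in `mdadm --detail` output, e.g. "State : clean".
FIELD = re.compile(r"^\s*([A-Za-z ]+?)\s*:\s*(.*)$")


def parse_mdadm_detail(text: str) -> Dict[str, str]:
    """Collect the "Key : value" fields; the device table has no colons and is skipped."""
    fields = {}
    for line in text.splitlines():
        m = FIELD.match(line)
        if m:
            fields[m.group(1)] = m.group(2).strip()
    return fields


def is_healthy(fields: Dict[str, str]) -> bool:
    """Assumed heuristic: a State line is present, has no bad keyword, and no devices failed."""
    state = fields.get("State", "").lower()
    failed = int(fields.get("Failed Devices", "0") or 0)
    bad_words = ("degraded", "fail", "inactive")
    return bool(state) and failed == 0 and not any(w in state for w in bad_words)


def check_arrays(devices: Iterable[str]) -> Dict[str, bool]:
    """Run `mdadm --detail` on each device (needs root, e.g. in dom0)."""
    results = {}
    for dev in devices:
        out = subprocess.run(
            ["mdadm", "--detail", dev],
            stdout=subprocess.PIPE, universal_newlines=True, check=True,
        ).stdout
        results[dev] = is_healthy(parse_mdadm_detail(out))
    return results
```

On vms04, `check_arrays(["/dev/md0"])` would parse `State : clean` and `Failed Devices : 0` from the output above and report the array as healthy.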

-

The plugin was initially written to check RAID arrays created during installation, not custom arrays created manually. But that could be an improvement to the plugin.

@stormi I leave you the honor of adding this somewhere.
