Windows11 VMs failing to boot

-

Jul 11 10:33:59 hst100 cleanup.py[132827]: All output goes to log Jul 11 10:33:59 hst100 systemd[1]: Started Garbage Collector for SR d4b22411-592f-7ada-0597-68dbcb56ee4d. Jul 11 10:34:12 hst100 forkexecd: [error||0 ||forkexecd] 133009 (/opt/xensource/libexec/mail-alarm <?xml version="1.0" encoding="UTF-8"?>\x0A<message><ref>OpaqueRef:80e8cac6-789a-8d76-70b6-afe1551f8de2</ref><name>ALARM</name><priority>3</priority><cls>VM</cls><obj_uuid>1b298dd8-5921-4090-8ac9-f26efbaf88b3</obj_uuid><timestamp>20250711T00:34:12Z</timestamp><uuid>d2844e9b-f88c-bf7e-5456-610060fcb05b</uuid><body>value: 1.000000\x0Aconfig:\x0A<variable>\x0A\x09<name value="fs_usage"/>\x0A\x09<alarm_trigger_level value="0.9"/>\x0A\x09<alarm_trigger_period value="60"/>\x0A\x09<alarm_auto_inhibit_period value="3600"/>\x0A</variable>\x0A</body></message>) exited with code 1 Jul 11 10:34:22 hst100 systemd[1]: Starting Garbage Collector for SR d4b22411-592f-7ada-0597-68dbcb56ee4d... Jul 11 10:34:28 hst100 cleanup.py[133122]: All output goes to log Jul 11 10:34:28 hst100 systemd[1]: Started Garbage Collector for SR d4b22411-592f-7ada-0597-68dbcb56ee4d. Jul 11 10:34:38 hst100 sparse_dd: [debug||0 ||sparse_dd] progress 10% Jul 11 10:34:44 hst100 qemu-dm-5[22780]: 22780@1752194084.964567:xen_platform_log xen platform: xeniface|IoctlLog: USER: OnSessionChange(SessionLock, 2) Jul 11 10:34:47 hst100 qemu-dm-5[22780]: 22780@1752194087.641201:xen_platform_log xen platform: xen|ModuleAdd: FFFFF80333BF0000 - FFFFF80333C00FFF [mskssrv.sys] Jul 11 10:34:47 hst100 qemu-dm-5[22780]: 22780@1752194087.642844:xen_platform_log xen platform: xen|ModuleAdd: FFFFF80333C10000 - FFFFF80333C20FFF [ksthunk.sys] Jul 11 10:34:52 hst100 systemd[1]: Starting Garbage Collector for SR d4b22411-592f-7ada-0597-68dbcb56ee4d... Jul 11 10:34:59 hst100 cleanup.py[133367]: All output goes to log Jul 11 10:34:59 hst100 systemd[1]: Started Garbage Collector for SR d4b22411-592f-7ada-0597-68dbcb56ee4d. Jul 11 10:35:23 hst100 systemd[1]: Starting Garbage Collector for SR d4b22411-592f-7ada-0597-68dbcb56ee4d... Jul 11 10:35:29 hst100 cleanup.py[133646]: All output goes to log Jul 11 10:35:29 hst100 systemd[1]: Started Garbage Collector for SR d4b22411-592f-7ada-0597-68dbcb56ee4d. Jul 11 10:35:52 hst100 systemd[1]: Starting Garbage Collector for SR d4b22411-592f-7ada-0597-68dbcb56ee4d... Jul 11 10:35:59 hst100 cleanup.py[133908]: All output goes to log Jul 11 10:35:59 hst100 systemd[1]: Started Garbage Collector for SR d4b22411-592f-7ada-0597-68dbcb56ee4d. Jul 11 10:36:22 hst100 systemd[1]: Starting Garbage Collector for SR d4b22411-592f-7ada-0597-68dbcb56ee4d... Jul 11 10:36:29 hst100 cleanup.py[134206]: All output goes to log Jul 11 10:36:29 hst100 systemd[1]: Started Garbage Collector for SR d4b22411-592f-7ada-0597-68dbcb56ee4d. Jul 11 10:36:52 hst100 systemd[1]: Starting Garbage Collector for SR d4b22411-592f-7ada-0597-68dbcb56ee4d... Jul 11 10:36:59 hst100 cleanup.py[134483]: All output goes to log Jul 11 10:36:59 hst100 systemd[1]: Started Garbage Collector for SR d4b22411-592f-7ada-0597-68dbcb56ee4d. Jul 11 10:37:23 hst100 systemd[1]: Starting Garbage Collector for SR d4b22411-592f-7ada-0597-68dbcb56ee4d... Jul 11 10:37:29 hst100 cleanup.py[134766]: All output goes to log Jul 11 10:37:29 hst100 systemd[1]: Started Garbage Collector for SR d4b22411-592f-7ada-0597-68dbcb56ee4d. Jul 11 10:37:52 hst100 systemd[1]: Starting Garbage Collector for SR d4b22411-592f-7ada-0597-68dbcb56ee4d... Jul 11 10:37:59 hst100 cleanup.py[135061]: All output goes to log Jul 11 10:37:59 hst100 systemd[1]: Started Garbage Collector for SR d4b22411-592f-7ada-0597-68dbcb56ee4d. Jul 11 10:38:22 hst100 systemd[1]: Starting Garbage Collector for SR d4b22411-592f-7ada-0597-68dbcb56ee4d... Jul 11 10:38:29 hst100 cleanup.py[135334]: All output goes to log Jul 11 10:38:29 hst100 systemd[1]: Started Garbage Collector for SR d4b22411-592f-7ada-0597-68dbcb56ee4d. Jul 11 10:38:52 hst100 systemd[1]: Starting Garbage Collector for SR d4b22411-592f-7ada-0597-68dbcb56ee4d... Jul 11 10:38:58 hst100 cleanup.py[135655]: All output goes to log Jul 11 10:38:58 hst100 systemd[1]: Started Garbage Collector for SR d4b22411-592f-7ada-0597-68dbcb56ee4d. Jul 11 10:39:22 hst100 systemd[1]: Starting Garbage Collector for SR d4b22411-592f-7ada-0597-68dbcb56ee4d... Jul 11 10:39:29 hst100 cleanup.py[135953]: All output goes to log Jul 11 10:39:29 hst100 systemd[1]: Started Garbage Collector for SR d4b22411-592f-7ada-0597-68dbcb56ee4d. Jul 11 10:39:52 hst100 systemd[1]: Starting Garbage Collector for SR d4b22411-592f-7ada-0597-68dbcb56ee4d... Jul 11 10:39:59 hst100 cleanup.py[136246]: All output goes to log Jul 11 10:39:59 hst100 systemd[1]: Started Garbage Collector for SR d4b22411-592f-7ada-0597-68dbcb56ee4d. Jul 11 10:40:01 hst100 systemd[1]: Started Session c43 of user root. Jul 11 10:40:01 hst100 systemd[1]: Starting Session c43 of user root. Jul 11 10:40:22 hst100 tapdisk[33072]: received 'close' message (uuid = 10) Jul 11 10:40:22 hst100 tapdisk[33072]: nbd: NBD server pause(0x16b09f0) Jul 11 10:40:22 hst100 tapdisk[33072]: nbd: NBD server pause(0x16c8810) Jul 11 10:40:22 hst100 tapdisk[33072]: nbd: NBD server free(0x16b09f0) Jul 11 10:40:22 hst100 tapdisk[33072]: nbd: NBD server free(0x16c8810) Jul 11 10:40:22 hst100 tapdisk[33072]: gaps written/skipped: 0/0 Jul 11 10:40:22 hst100 tapdisk[33072]: /var/run/sr-mount/ff9dc099-c34f-d3ac-3ac4-19ed74480a4b/310dc526-765c-455c-848d-610bb7ae6cd1.vhd: b: 102400, a: 102279, f: 102279, n: 419753840 Jul 11 10:40:22 hst100 tapdisk[33072]: closed image /var/run/sr-mount/ff9dc099-c34f-d3ac-3ac4-19ed74480a4b/310dc526-765c-455c-848d-610bb7ae6cd1.vhd (0 users, state: 0x00000000, type: 4) Jul 11 10:40:22 hst100 tapdisk[33072]: sending 'close response' message (uuid = 10) Jul 11 10:40:22 hst100 tapdisk[33072]: received 'detach' message (uuid = 10) Jul 11 10:40:22 hst100 tapdisk[33072]: sending 'detach response' message (uuid = 10) Jul 11 10:40:22 hst100 tapdisk[33072]: tapdisk-log: closing after 0 errors Jul 11 10:40:22 hst100 tapdisk[33072]: tapdisk-syslog: 22 messages, 1932 bytes, xmits: 23, failed: 0, dropped: 0 Jul 11 10:40:22 hst100 tapdisk[33072]: tapdisk-control: draining 1 connections Jul 11 10:40:22 hst100 tapdisk[33072]: tapdisk-control: done Jul 11 10:40:22 hst100 systemd[1]: Starting Garbage Collector for SR d4b22411-592f-7ada-0597-68dbcb56ee4d... Jul 11 10:40:29 hst100 cleanup.py[136554]: All output goes to log Jul 11 10:40:29 hst100 systemd[1]: Started Garbage Collector for SR d4b22411-592f-7ada-0597-68dbcb56ee4d. Jul 11 10:40:52 hst100 xenguest-13-build[136890]: Command line: -controloutfd 8 -controlinfd 9 -mode hvm_build -image /usr/libexec/xen/boot/hvmloader -domid 13 -store_port 5 -store_domid 0 -console_port 6 -console_domid 0 -mem_max_mib 8184 -mem_start_mib 8184 Jul 11 10:40:52 hst100 xenguest-13-build[136890]: Domain Properties: Type HVM, hap 1 Jul 11 10:40:52 hst100 xenguest-13-build[136890]: Determined the following parameters from xenstore: Jul 11 10:40:52 hst100 xenguest-13-build[136890]: vcpu/number:4 vcpu/weight:256 vcpu/cap:0 Jul 11 10:40:52 hst100 xenguest-13-build[136890]: nx: 1, pae 1, cores-per-socket 4, x86-fip-width 0, nested 0 Jul 11 10:40:52 hst100 xenguest-13-build[136890]: apic: 1 acpi: 1 acpi_s4: 0 acpi_s3: 0 tsc_mode: 0 hpet: 1 Jul 11 10:40:52 hst100 xenguest-13-build[136890]: nomigrate 0, timeoffset 36000 mmio_hole_size 0 Jul 11 10:40:52 hst100 xenguest-13-build[136890]: viridian: 1, time_ref_count: 1, reference_tsc: 1 hcall_remote_tlb_flush: 0 apic_assist: 1 crash_ctl: 1 stimer: 1 hcall_ipi: 0 Jul 11 10:40:52 hst100 xenguest-13-build[136890]: vcpu/0/affinity:111111111111 Jul 11 10:40:52 hst100 xenguest-13-build[136890]: vcpu/1/affinity:111111111111 Jul 11 10:40:52 hst100 xenguest-13-build[136890]: vcpu/2/affinity:111111111111 Jul 11 10:40:52 hst100 xenguest-13-build[136890]: vcpu/3/affinity:111111111111 Jul 11 10:40:52 hst100 xenguest-13-build[136890]: domainbuilder: detail: xc_dom_allocate: cmdline="", features="" Jul 11 10:40:52 hst100 xenguest-13-build[136890]: domainbuilder: detail: xc_dom_kernel_file: filename="/usr/libexec/xen/boot/hvmloader" Jul 11 10:40:52 hst100 xenguest-13-build[136890]: domainbuilder: detail: xc_dom_malloc_filemap : 629 kB Jul 11 10:40:52 hst100 xenguest-13-build[136890]: domainbuilder: detail: xc_dom_module_file: filename="/usr/share/ipxe/ipxe.bin" Jul 11 10:40:52 hst100 xenguest-13-build[136890]: domainbuilder: detail: xc_dom_malloc_filemap : 132 kB Jul 11 10:40:52 hst100 xenguest-13-build[136890]: domainbuilder: detail: xc_dom_boot_xen_init: ver 4.17, caps xen-3.0-x86_64 hvm-3.0-x86_32 hvm-3.0-x86_32p hvm-3.0-x86_64 Jul 11 10:40:52 hst100 xenguest-13-build[136890]: domainbuilder: detail: xc_dom_parse_image: called Jul 11 10:40:52 hst100 xenguest-13-build[136890]: domainbuilder: detail: xc_dom_find_loader: trying multiboot-binary loader ... Jul 11 10:40:52 hst100 xenguest-13-build[136890]: domainbuilder: detail: loader probe failed Jul 11 10:40:52 hst100 xenguest-13-build[136890]: domainbuilder: detail: xc_dom_find_loader: trying HVM-generic loader ... Jul 11 10:40:52 hst100 xenguest-13-build[136890]: domainbuilder: detail: loader probe OK Jul 11 10:40:52 hst100 xenguest-13-build[136890]: xc: detail: ELF: phdr: paddr=0x100000 memsz=0x57ac4 Jul 11 10:40:52 hst100 xenguest-13-build[136890]: xc: detail: ELF: memory: 0x100000 -> 0x157ac4 Jul 11 10:40:52 hst100 xenguest-13-build[136890]: domainbuilder: detail: xc_dom_compat_check: supported guest type: xen-3.0-x86_64 Jul 11 10:40:52 hst100 xenguest-13-build[136890]: domainbuilder: detail: xc_dom_compat_check: supported guest type: hvm-3.0-x86_32 <= matches Jul 11 10:40:52 hst100 xenguest-13-build[136890]: domainbuilder: detail: xc_dom_compat_check: supported guest type: hvm-3.0-x86_32p Jul 11 10:40:52 hst100 xenguest-13-build[136890]: domainbuilder: detail: xc_dom_compat_check: supported guest type: hvm-3.0-x86_64 Jul 11 10:40:52 hst100 xenguest-13-build[136890]: Calculated provisional MMIO hole size as 0x10000000 Jul 11 10:40:52 hst100 xenguest-13-build[136890]: Loaded OVMF from /usr/share/edk2/OVMF-release.fd Jul 11 10:40:52 hst100 xenguest-13-build[136890]: domainbuilder: detail: xc_dom_mem_init: mem 8184 MB, pages 0x1ff800 pages, 4k each Jul 11 10:40:52 hst100 xenguest-13-build[136890]: domainbuilder: detail: xc_dom_mem_init: 0x1ff800 pages Jul 11 10:40:52 hst100 xenguest-13-build[136890]: domainbuilder: detail: xc_dom_boot_mem_init: called Jul 11 10:40:52 hst100 xenguest-13-build[136890]: domainbuilder: detail: range: start=0x0 end=0xf0000000 Jul 11 10:40:52 hst100 xenguest-13-build[136890]: domainbuilder: detail: range: start=0x100000000 end=0x20f800000 Jul 11 10:40:52 hst100 xenguest-13-build[136890]: xc: detail: PHYSICAL MEMORY ALLOCATION: Jul 11 10:40:52 hst100 xenguest-13-build[136890]: xc: detail: 4KB PAGES: 0x0000000000000200 Jul 11 10:40:52 hst100 xenguest-13-build[136890]: xc: detail: 2MB PAGES: 0x00000000000003fb Jul 11 10:40:52 hst100 xenguest-13-build[136890]: xc: detail: 1GB PAGES: 0x0000000000000006 Jul 11 10:40:52 hst100 xenguest-13-build[136890]: Final lower MMIO hole size is 0x10000000 Jul 11 10:40:52 hst100 xenguest-13-build[136890]: domainbuilder: detail: xc_dom_build_image: called Jul 11 10:40:52 hst100 xenguest-13-build[136890]: domainbuilder: detail: xc_dom_pfn_to_ptr_retcount: domU mapping: pfn 0x100+0x58 at 0x7f47fc88a000 Jul 11 10:40:52 hst100 xenguest-13-build[136890]: domainbuilder: detail: xc_dom_alloc_segment: kernel : 0x100000 -> 0x157ac4 (pfn 0x100 + 0x58 pages) Jul 11 10:40:52 hst100 xenguest-13-build[136890]: xc: detail: ELF: phdr 0 at 0x7f47fac98000 -> 0x7f47face8ea0 Jul 11 10:40:52 hst100 xenguest-13-build[136890]: domainbuilder: detail: xc_dom_pfn_to_ptr_retcount: domU mapping: pfn 0x158+0x200 at 0x7f47faaf0000 Jul 11 10:40:52 hst100 xenguest-13-build[136890]: domainbuilder: detail: xc_dom_alloc_segment: System Firmware module : 0x158000 -> 0x358000 (pfn 0x158 + 0x200 pages) Jul 11 10:40:52 hst100 xenguest-13-build[136890]: domainbuilder: detail: xc_dom_pfn_to_ptr_retcount: domU mapping: pfn 0x358+0x22 at 0x7f47fc868000 Jul 11 10:40:52 hst100 xenguest-13-build[136890]: domainbuilder: detail: xc_dom_alloc_segment: module0 : 0x358000 -> 0x379200 (pfn 0x358 + 0x22 pages) Jul 11 10:40:52 hst100 xenguest-13-build[136890]: domainbuilder: detail: xc_dom_pfn_to_ptr_retcount: domU mapping: pfn 0x37a+0x1 at 0x7f47fca47000 Jul 11 10:40:52 hst100 xenguest-13-build[136890]: domainbuilder: detail: xc_dom_alloc_segment: HVM start info : 0x37a000 -> 0x37a878 (pfn 0x37a + 0x1 pages) Jul 11 10:40:52 hst100 xenguest-13-build[136890]: domainbuilder: detail: xc_dom_build_image : virt_alloc_end : 0x37b000 Jul 11 10:40:52 hst100 xenguest-13-build[136890]: domainbuilder: detail: xc_dom_build_image : virt_pgtab_end : 0x0 Jul 11 10:40:52 hst100 xenguest-13-build[136890]: domainbuilder: detail: xc_dom_boot_image: called Jul 11 10:40:52 hst100 xenguest-13-build[136890]: domainbuilder: detail: domain builder memory footprint Jul 11 10:40:52 hst100 xenguest-13-build[136890]: domainbuilder: detail: allocated Jul 11 10:40:52 hst100 xenguest-13-build[136890]: domainbuilder: detail: malloc : 18525 bytes Jul 11 10:40:52 hst100 xenguest-13-build[136890]: domainbuilder: detail: anon mmap : 0 bytes Jul 11 10:40:52 hst100 xenguest-13-build[136890]: domainbuilder: detail: mapped Jul 11 10:40:52 hst100 xenguest-13-build[136890]: domainbuilder: detail: file mmap : 762 kB Jul 11 10:40:52 hst100 xenguest-13-build[136890]: domainbuilder: detail: domU mmap : 2540 kB Jul 11 10:40:52 hst100 xenguest-13-build[136890]: domainbuilder: detail: Adding module 0 guest_addr 358000 len 135680 Jul 11 10:40:52 hst100 xenguest-13-build[136890]: domainbuilder: detail: vcpu_hvm: called Jul 11 10:40:52 hst100 xenguest-13-build[136890]: domainbuilder: detail: xc_dom_set_gnttab_entry: d13 gnt[0] -> d0 0xfefff Jul 11 10:40:52 hst100 xenguest-13-build[136890]: domainbuilder: detail: xc_dom_set_gnttab_entry: d13 gnt[1] -> d0 0xfeffc Jul 11 10:40:52 hst100 xenguest-13-build[136890]: viridian base Jul 11 10:40:52 hst100 xenguest-13-build[136890]: + time_ref_count Jul 11 10:40:52 hst100 xenguest-13-build[136890]: + reference_tsc Jul 11 10:40:52 hst100 xenguest-13-build[136890]: + apic_assist Jul 11 10:40:52 hst100 xenguest-13-build[136890]: + crash_ctl Jul 11 10:40:52 hst100 xenguest-13-build[136890]: + stimer Jul 11 10:40:52 hst100 xenguest-13-build[136890]: Parsing '178bfbff-f6f83203-2e500800-040001f3-0000000f-f1bf07a9-00405f4e-00000000-711ed005-10000010-00000020-18000144-00000000-00000000-00000000-00000000-00000000-00000000-00000000-00000000-00000000-00000000' as featureset Jul 11 10:40:52 hst100 xenguest-13-build[136890]: domainbuilder: detail: xc_dom_release: called Jul 11 10:40:52 hst100 xenguest-13-build[136890]: Writing to control: 'result:1044476 1044479#012' Jul 11 10:40:52 hst100 xenguest-13-build[136890]: All done Jul 11 10:40:52 hst100 ovs-vsctl: ovs|00001|db_ctl_base|ERR|no row "vif13.0" in table Interface Jul 11 10:40:52 hst100 ovs-vsctl: ovs|00001|vsctl|INFO|Called as ovs-vsctl --timeout=30 -- --if-exists del-port vif13.0 Jul 11 10:40:52 hst100 ovs-vsctl: ovs|00001|vsctl|INFO|Called as ovs-vsctl --timeout=30 add-port xapi2 vif13.0 -- set interface vif13.0 "external-ids:\"xs-vm-uuid\"=\"16a5f8be-781c-46fe-df43-83744df32826\"" -- set interface vif13.0 "external-ids:\"xs-vif-uuid\"=\"a48873c0-17c9-8905-712e-72579245a342\"" -- set interface vif13.0 "external-ids:\"xs-network-uuid\"=\"1bfba311-a261-d329-d01d-ab2713d0dc78\"" -- set interface vif13.0 "external-ids:\"attached-mac\"=\"da:ff:e4:1f:38:61\"" Jul 11 10:40:53 hst100 systemd[1]: Starting Garbage Collector for SR d4b22411-592f-7ada-0597-68dbcb56ee4d... Jul 11 10:40:53 hst100 tapdisk[137103]: tapdisk-control: init, 10 x 4k buffers Jul 11 10:40:53 hst100 tapdisk[137103]: I/O queue driver: lio Jul 11 10:40:53 hst100 tapdisk[137103]: I/O queue driver: lio Jul 11 10:40:53 hst100 tapdisk[137103]: tapdisk-log: started, level 0 Jul 11 10:40:53 hst100 tapdisk[137103]: Tapdisk running, control on /var/run/blktap-control/ctl137103 Jul 11 10:40:53 hst100 tapdisk[137103]: nbd: Set up local unix domain socket on path '/var/run/blktap-control/nbdclient137103' Jul 11 10:40:53 hst100 tapdisk[137103]: received 'attach' message (uuid = 10) Jul 11 10:40:53 hst100 tapdisk[137103]: sending 'attach response' message (uuid = 10) Jul 11 10:40:53 hst100 tapdisk[137103]: received 'open' message (uuid = 10) Jul 11 10:40:53 hst100 tapdisk[137103]: /var/run/sr-mount/ff9dc099-c34f-d3ac-3ac4-19ed74480a4b/0d16619f-bcb7-47ea-9ecc-46159e4ff4ad.vhd version: tap 0x00010003, b: 51200, a: 21320, f: 21320, n: 87497696 Jul 11 10:40:53 hst100 tapdisk[137103]: opened image /var/run/sr-mount/ff9dc099-c34f-d3ac-3ac4-19ed74480a4b/0d16619f-bcb7-47ea-9ecc-46159e4ff4ad.vhd (1 users, state: 0x00000001, type: 4, rw) Jul 11 10:40:53 hst100 tapdisk[137103]: VBD CHAIN: Jul 11 10:40:53 hst100 tapdisk[137103]: /var/run/sr-mount/ff9dc099-c34f-d3ac-3ac4-19ed74480a4b/0d16619f-bcb7-47ea-9ecc-46159e4ff4ad.vhd: type:vhd(4) storage:ext(2) Jul 11 10:40:53 hst100 tapdisk[137103]: bdev: capacity=209715200 sector_size=512/512 flags=0 Jul 11 10:40:53 hst100 tapdisk[137103]: nbd: Set up local unix domain socket on path '/var/run/blktap-control/nbdserver137103.10' Jul 11 10:40:53 hst100 tapdisk[137103]: nbd: registering for unix_listening_fd Jul 11 10:40:53 hst100 tapdisk[137103]: nbd: Successfully started NBD server on /var/run/blktap-control/nbd-old137103.10 Jul 11 10:40:53 hst100 tapdisk[137103]: nbd: Set up local unix domain socket on path '/var/run/blktap-control/nbdserver-new137103.10' Jul 11 10:40:53 hst100 tapdisk[137103]: nbd: registering for unix_listening_fd Jul 11 10:40:53 hst100 tapdisk[137103]: nbd: Successfully started NBD server on /var/run/blktap-control/nbd137103.10 Jul 11 10:40:53 hst100 tapdisk[137103]: sending 'open response' message (uuid = 10) Jul 11 10:40:53 hst100 tapdisk[137114]: tapdisk-control: init, 10 x 4k buffers Jul 11 10:40:53 hst100 tapdisk[137114]: I/O queue driver: lio Jul 11 10:40:53 hst100 tapdisk[137114]: I/O queue driver: lio Jul 11 10:40:53 hst100 tapdisk[137114]: tapdisk-log: started, level 0 Jul 11 10:40:53 hst100 tapdisk[137114]: Tapdisk running, control on /var/run/blktap-control/ctl137114 Jul 11 10:40:53 hst100 tapdisk[137114]: nbd: Set up local unix domain socket on path '/var/run/blktap-control/nbdclient137114' Jul 11 10:40:53 hst100 tapdisk[137114]: received 'attach' message (uuid = 12) Jul 11 10:40:53 hst100 tapdisk[137114]: sending 'attach response' message (uuid = 12) Jul 11 10:40:53 hst100 tapdisk[137114]: received 'open' message (uuid = 12) Jul 11 10:40:53 hst100 tapdisk[137114]: /var/run/sr-mount/ff9dc099-c34f-d3ac-3ac4-19ed74480a4b/310dc526-765c-455c-848d-610bb7ae6cd1.vhd version: tap 0x00010003, b: 102400, a: 102279, f: 102279, n: 419753840 Jul 11 10:40:53 hst100 tapdisk[137114]: opened image /var/run/sr-mount/ff9dc099-c34f-d3ac-3ac4-19ed74480a4b/310dc526-765c-455c-848d-610bb7ae6cd1.vhd (1 users, state: 0x00000001, type: 4, rw) Jul 11 10:40:53 hst100 tapdisk[137114]: VBD CHAIN: Jul 11 10:40:53 hst100 tapdisk[137114]: /var/run/sr-mount/ff9dc099-c34f-d3ac-3ac4-19ed74480a4b/310dc526-765c-455c-848d-610bb7ae6cd1.vhd: type:vhd(4) storage:ext(2) Jul 11 10:40:53 hst100 tapdisk[137114]: bdev: capacity=419430400 sector_size=512/512 flags=0 Jul 11 10:40:53 hst100 tapdisk[137114]: nbd: Set up local unix domain socket on path '/var/run/blktap-control/nbdserver137114.12' Jul 11 10:40:53 hst100 tapdisk[137114]: nbd: registering for unix_listening_fd Jul 11 10:40:53 hst100 tapdisk[137114]: nbd: Successfully started NBD server on /var/run/blktap-control/nbd-old137114.12 Jul 11 10:40:53 hst100 tapdisk[137114]: nbd: Set up local unix domain socket on path '/var/run/blktap-control/nbdserver-new137114.12' Jul 11 10:40:53 hst100 tapdisk[137114]: nbd: registering for unix_listening_fd Jul 11 10:40:53 hst100 tapdisk[137114]: nbd: Successfully started NBD server on /var/run/blktap-control/nbd137114.12 Jul 11 10:40:53 hst100 tapdisk[137114]: sending 'open response' message (uuid = 12) Jul 11 10:40:53 hst100 tapback[137128]: tapback.c:445 slave tapback daemon started, only serving domain 13 Jul 11 10:40:53 hst100 tapback[137128]: backend.c:406 832 physical_device_changed Jul 11 10:40:53 hst100 tapback[137128]: backend.c:406 768 physical_device_changed Jul 11 10:40:53 hst100 tapback[137128]: backend.c:406 832 physical_device_changed Jul 11 10:40:53 hst100 tapback[137128]: backend.c:492 832 found tapdisk[137114], for 254:12 Jul 11 10:40:53 hst100 tapdisk[137114]: received 'disk info' message (uuid = 12) Jul 11 10:40:53 hst100 tapdisk[137114]: VBD 12 got disk info: sectors=419430400 sector size=512, info=0 Jul 11 10:40:53 hst100 tapdisk[137114]: sending 'disk info rsp' message (uuid = 12) Jul 11 10:40:53 hst100 tapback[137128]: backend.c:406 768 physical_device_changed Jul 11 10:40:53 hst100 tapback[137128]: backend.c:492 768 found tapdisk[137103], for 254:10 Jul 11 10:40:53 hst100 tapdisk[137103]: received 'disk info' message (uuid = 10) Jul 11 10:40:53 hst100 tapdisk[137103]: VBD 10 got disk info: sectors=209715200 sector size=512, info=0 Jul 11 10:40:53 hst100 tapdisk[137103]: sending 'disk info rsp' message (uuid = 10) Jul 11 10:40:53 hst100 systemd[1]: Started transient unit for varstored-13. Jul 11 10:40:53 hst100 systemd[1]: Starting transient unit for varstored-13... Jul 11 10:40:53 hst100 varstored-13[137207]: main: --domain = '13' Jul 11 10:40:53 hst100 varstored-13[137207]: main: --chroot = '/var/run/xen/varstored-root-13' Jul 11 10:40:53 hst100 varstored-13[137207]: main: --depriv = '(null)' Jul 11 10:40:53 hst100 varstored-13[137207]: main: --uid = '65548' Jul 11 10:40:53 hst100 varstored-13[137207]: main: --gid = '1004' Jul 11 10:40:53 hst100 varstored-13[137207]: main: --backend = 'xapidb' Jul 11 10:40:53 hst100 varstored-13[137207]: main: --arg = 'socket:/xapi-depriv-socket' Jul 11 10:40:53 hst100 varstored-13[137207]: main: --pidfile = '/var/run/xen/varstored-13.pid' Jul 11 10:40:53 hst100 varstored-13[137207]: main: --arg = 'uuid:16a5f8be-781c-46fe-df43-83744df32826' Jul 11 10:40:53 hst100 varstored-13[137207]: main: --arg = 'save:/efi-vars-save.dat' Jul 11 10:40:53 hst100 varstored-13[137207]: varstored_initialize: 4 vCPU(s) Jul 11 10:40:53 hst100 varstored-13[137207]: varstored_initialize: ioservid = 0 Jul 11 10:40:53 hst100 varstored-13[137207]: varstored_initialize: iopage = 0x7fe157ec9000 Jul 11 10:40:53 hst100 varstored-13[137207]: varstored_initialize: VCPU0: 7 -> 308 Jul 11 10:40:53 hst100 varstored-13[137207]: varstored_initialize: VCPU1: 8 -> 309 Jul 11 10:40:53 hst100 varstored-13[137207]: varstored_initialize: VCPU2: 9 -> 310 Jul 11 10:40:53 hst100 varstored-13[137207]: varstored_initialize: VCPU3: 10 -> 311 Jul 11 10:40:53 hst100 varstored-13[137207]: load_one_auth_data: Auth file '/var/lib/varstored/dbx.auth' is missing! Jul 11 10:40:53 hst100 varstored-13[137207]: load_one_auth_data: Auth file '/var/lib/varstored/db.auth' is missing! Jul 11 10:40:53 hst100 varstored-13[137207]: load_one_auth_data: Auth file '/var/lib/varstored/KEK.auth' is missing! Jul 11 10:40:53 hst100 varstored-13[137207]: initialize_settings: Secure boot enable: false Jul 11 10:40:53 hst100 varstored-13[137207]: initialize_settings: Authenticated variables: enforcing Jul 11 10:40:53 hst100 varstored-13[137207]: IO request not ready Jul 11 10:40:53 hst100 varstored-13[137207]: message repeated 3 times: [ IO request not ready] Jul 11 10:40:53 hst100 systemd[1]: Started transient unit for swtpm-13. Jul 11 10:40:53 hst100 systemd[1]: Starting transient unit for swtpm-13... Jul 11 10:40:53 hst100 swtpm-13[137230]: Arguments: 13 /var/lib/xcp/run/swtpm-root-13// unix+http://xapi-depriv-socket false Jul 11 10:40:53 hst100 swtpm-13[137230]: Binding socket to /var/lib/xcp/run/swtpm-root-13//swtpm-sock Jul 11 10:40:53 hst100 swtpm-13[137230]: Exec: /usr/bin/swtpm swtpm-13 socket --tpm2 --tpmstate backend-uri=unix+http://xapi-depriv-socket --ctrl type=unixio,fd=3 --log level=1 --pid file=/swtpm-13.pid -t --chroot /var/lib/xcp/run/swtpm-root-13// --runas 196621 Jul 11 10:40:53 hst100 swtpm-13[137230]: core dump limit: 67108864 Jul 11 10:40:53 hst100 swtpm-13[137230]: Could not write to pidfile : No space left on device Jul 11 10:40:53 hst100 ovs-vsctl: ovs|00001|db_ctl_base|ERR|no row "tap13.0" in table Interface Jul 11 10:40:53 hst100 ovs-vsctl: ovs|00001|vsctl|INFO|Called as ovs-vsctl --timeout=30 -- --if-exists del-port tap13.0 Jul 11 10:40:53 hst100 ovs-vsctl: ovs|00001|vsctl|INFO|Called as ovs-vsctl --timeout=30 add-port xapi2 tap13.0 -- set interface tap13.0 "external-ids:\"xs-vm-uuid\"=\"16a5f8be-781c-46fe-df43-83744df32826\"" -- set interface tap13.0 "external-ids:\"xs-vif-uuid\"=\"a48873c0-17c9-8905-712e-72579245a342\"" -- set interface tap13.0 "external-ids:\"xs-network-uuid\"=\"1bfba311-a261-d329-d01d-ab2713d0dc78\"" -- set interface tap13.0 "external-ids:\"attached-mac\"=\"da:ff:e4:1f:38:61\"" Jul 11 10:40:53 hst100 systemd[1]: swtpm-13.service: main process exited, code=exited, status=1/FAILURE Jul 11 10:40:53 hst100 systemd[1]: Unit swtpm-13.service entered failed state. Jul 11 10:40:53 hst100 systemd[1]: swtpm-13.service failed. Jul 11 10:40:53 hst100 forkexecd: [ info||0 ||forkexecd] qemu-dm-13[137252]: Arguments: 13 --syslog -chardev socket,id=chrtpm,path=/var/lib/xcp/run/swtpm-root-13/swtpm-sock -tpmdev emulator,id=tpm0,chardev=chrtpm -device tpm-crb,tpmdev=tpm0 -std-vga -videoram 8 -vnc unix:/var/run/xen/vnc-13,lock-key-sync=off -acpi -monitor null -pidfile /var/run/xen/qemu-dm-13.pid -xen-domid 13 -m size=8184 -boot order=dc -usb -device usb-tablet,port=2 -smp 4,maxcpus=4 -serial pty -display none -nodefaults -trace enable=xen_platform_log -sandbox on,obsolete=deny,elevateprivileges=allow,spawn=deny,resourcecontrol=deny -S -parallel null -qmp unix:/var/run/xen/qmp-libxl-13,server,nowait -qmp unix:/var/run/xen/qmp-event-13,server,nowait -device xen-platform,addr=3,device-id=0x0002 -device nvme,serial=nvme0,id=nvme0,addr=7 -drive id=disk0,if=none,file=/dev/sm/backend/ff9dc099-c34f-d3ac-3ac4-19ed74480a4b/0d16619f-bcb7-47ea-9ecc-46159e4ff4ad,media=disk,auto-read-only=off,format=raw -device nvme-ns,drive=disk0,bus=nvme0,nsid=1 -drive id=disk1,if=none,file=/dev/sm/backend/ff9dc099-c34f-d3ac-3ac4-19ed74480a4b/310dc526-765c-455c-848d-610bb7ae6cd1,media=disk,auto-read-only=off,format=raw -device nvme-ns,drive=disk1,bus=nvme0,nsid=2 -device e1000,netdev=tapnet0,mac=da:ff:e4:1f:38:61,addr=4,rombar=0 -netdev tap,id=tapnet0,fd=8 Jul 11 10:40:53 hst100 forkexecd: [ info||0 ||forkexecd] qemu-dm-13[137252]: Exec: /usr/lib64/xen/bin/qemu-system-i386 qemu-dm-13 -machine pc-i440fx-2.10,accel=xen,max-ram-below-4g=4026531840,suppress-vmdesc=on,allow-unassigned=true,trad_compat=False -chardev socket,id=chrtpm,path=/var/lib/xcp/run/swtpm-root-13/swtpm-sock -tpmdev emulator,id=tpm0,chardev=chrtpm -device tpm-crb,tpmdev=tpm0 -vnc unix:/var/run/xen/vnc-13,lock-key-sync=off -monitor null -pidfile /var/run/xen/qemu-dm-13.pid -xen-domid 13 -m size=8184 -boot order=dc -usb -device usb-tablet,port=2 -smp 4,maxcpus=4 -serial pty -display none -nodefaults -trace enable=xen_platform_log -sandbox on,obsolete=deny,elevateprivileges=allow,spawn=deny,resourcecontrol=deny -S -parallel null -qmp unix:/var/run/xen/qmp-libxl-13,server,nowait -qmp unix:/var/run/xen/qmp-event-13,server,nowait -device xen-platform,addr=3,device-id=0x0002 -device nvme,serial=nvme0,id=nvme0,addr=7 -drive id=disk0,if=none,file=/dev/sm/backend/ff9dc099-c34f-d3ac-3ac4-19ed74480a4b/0d16619f-bcb7-47ea-9ecc-46159e4ff4ad,media=disk,auto-read-only=off,format=raw -device nvme-ns,drive=disk0,bus=nvme0,nsid=1 -drive id=disk1,if=none,file=/dev/sm/backend/ff9dc099-c34f-d3ac-3ac4-19ed74480a4b/310dc526-765c-455c-848d-610bb7ae6cd1,media=disk,auto-read-only=off,format=raw -device nvme-ns,drive=disk1,bus=nvme0,nsid=2 -device e1000,netdev=tapnet0,mac=da:ff:e4:1f:38:61,addr=4,rombar=0 -netdev tap,id=tapnet0,fd=8 -device VGA,vgamem_mb=8,addr=2,romfile= -vnc-clipboard-socket-fd 4 -chardev stdio,id=ovmf -device isa-debugcon,chardev=ovmf,iobase=0x402 -xen-domid-restrict -chroot /var/xen/qemu/root-13 -runas 65548:1004 Jul 11 10:40:53 hst100 qemu-dm-13[137268]: Moving to cgroup slice 'vm.slice' Jul 11 10:40:53 hst100 qemu-dm-13[137268]: core dump limit: 67108864 Jul 11 10:40:53 hst100 qemu-dm-13[137268]: qemu-dm-13: -chardev socket,id=chrtpm,path=/var/lib/xcp/run/swtpm-root-13/swtpm-sock: Failed to connect socket /var/lib/xcp/run/swtpm-root-13/swtpm-sock: Connection refused Jul 11 10:40:53 hst100 /opt/xensource/libexec/xcp-clipboardd[137266]: poll failed because revents=0x11 (qemu socket) Jul 11 10:40:59 hst100 cleanup.py[137060]: All output goes to log Jul 11 10:40:59 hst100 systemd[1]: Started Garbage Collector for SR d4b22411-592f-7ada-0597-68dbcb56ee4d. Jul 11 10:41:22 hst100 systemd[1]: Starting Garbage Collector for SR d4b22411-592f-7ada-0597-68dbcb56ee4d... Jul 11 10:41:29 hst100 cleanup.py[137582]: All output goes to log Jul 11 10:41:29 hst100 systemd[1]: Started Garbage Collector for SR d4b22411-592f-7ada-0597-68dbcb56ee4d. Jul 11 10:41:52 hst100 systemd[1]: Starting Garbage Collector for SR d4b22411-592f-7ada-0597-68dbcb56ee4d... Jul 11 10:41:59 hst100 cleanup.py[137875]: All output goes to log Jul 11 10:41:59 hst100 systemd[1]: Started Garbage Collector for SR d4b22411-592f-7ada-0597-68dbcb56ee4d. Jul 11 10:42:22 hst100 systemd[1]: Starting Garbage Collector for SR d4b22411-592f-7ada-0597-68dbcb56ee4d... Jul 11 10:42:23 hst100 sparse_dd: [debug||0 ||sparse_dd] progress 20% Jul 11 10:42:29 hst100 cleanup.py[138151]: All output goes to log Jul 11 10:42:29 hst100 systemd[1]: Started Garbage Collector for SR d4b22411-592f-7ada-0597-68dbcb56ee4d. Jul 11 10:42:52 hst100 systemd[1]: Starting Garbage Collector for SR d4b22411-592f-7ada-0597-68dbcb56ee4d... -

@McHenry

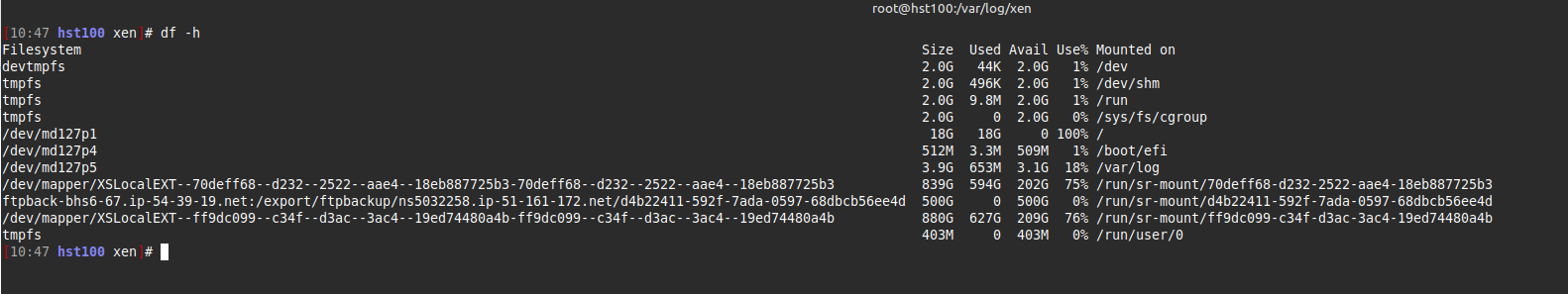

Do they work if you turn off Secure Boot? There's a procedure to enable Secure Boot, see https://docs.xcp-ng.org/guides/guest-UEFI-Secure-Boot/ .Do you have space left on your Dom0 disk?

-

-

@McHenry

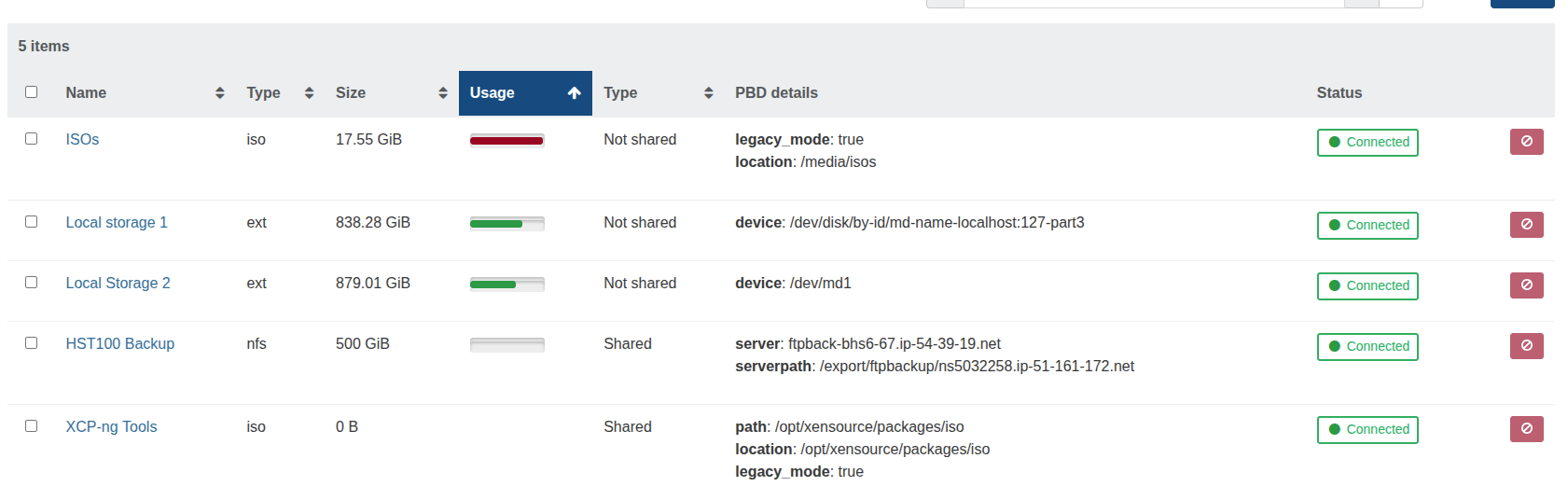

/dev/md127p1(the root partition) looks pretty full. Do you store anything big in there (ISOs...)? -

-

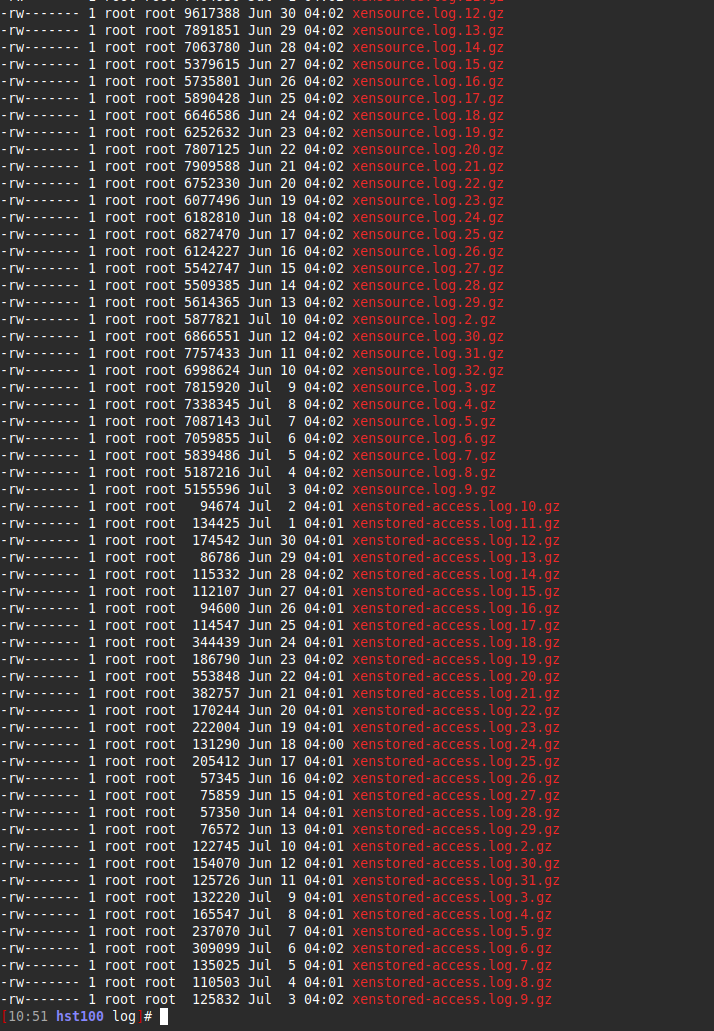

Safe to delete these *.gz files?

-

@McHenry That's your Dom0 partition, which stores the XCP-ng operating system. Don't store the ISOs there (which your local ISO SR is doing), you should mount an ISO SR using NFS instead.

/var/log shouldn't be an issue as it's in a separate partition. (I misread the df output)

-

Wow, it worked!

-

I deleted a few ISOs and the VM now boots.

So the issue was I was storing ISOs in the root partition and it was full?

-

@McHenry Yes, that's the cause of your issue.

-

Thank you so much. If you want me I'll be at the pub.

-

O olivierlambert marked this topic as a question on

O olivierlambert marked this topic as a question on

-

O olivierlambert has marked this topic as solved on

O olivierlambert has marked this topic as solved on