1 out of 6 VMs failing backup

-

Currently on Commit CF044

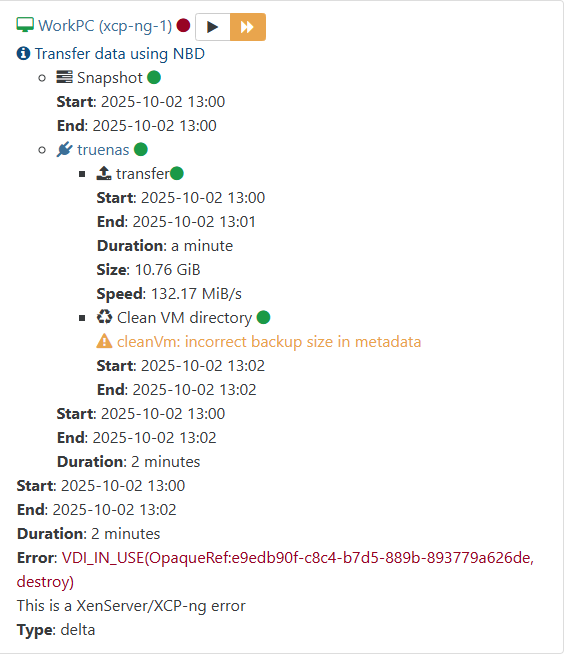

Backups from last night all passed. This afternoon, 1 Windows VM is failing backup.

"id": "1759427171116", "message": "clean-vm", "start": 1759427171116, "status": "success", "end": 1759427171251, "result": { "merge": false } }, { "id": "1759427171846", "message": "snapshot", "start": 1759427171846, "status": "success", "end": 1759427174310, "result": "cd33d2e1-b161-4258-a1dc-704402cf9f96" }, { "data": { "id": "52af1ce0-abad-4478-ac69-db1b7cfcefd8", "isFull": false, "type": "remote" }, "id": "1759427174311", "message": "export", "start": 1759427174311, "status": "success", "tasks": [ { "id": "1759427175416", "message": "transfer", "start": 1759427175416, "status": "success", "end": 1759427349288, "result": { "size": 11827937280 } }, { "id": "1759427397567", "message": "clean-vm", "start": 1759427397567, "status": "success", "warnings": [ { "data": { "path": "/xo-vm-backups/2eaf6b24-ae55-6e01-a3a4-aa710d221834/vdis/95ac8089-69f3-404e-b902-21d0e878eec2/0e0733db-3db0-437b-aff5-d2c7644c08a2/20251002T174615Z.vhd", "error": { "parent": "/xo-vm-backups/2eaf6b24-ae55-6e01-a3a4-aa710d221834/vdis/95ac8089-69f3-404e-b902-21d0e878eec2/0e0733db-3db0-437b-aff5-d2c7644c08a2/20251002T040024Z.vhd", "child1": "/xo-vm-backups/2eaf6b24-ae55-6e01-a3a4-aa710d221834/vdis/95ac8089-69f3-404e-b902-21d0e878eec2/0e0733db-3db0-437b-aff5-d2c7644c08a2/20251002T170006Z.vhd", "child2": "/xo-vm-backups/2eaf6b24-ae55-6e01-a3a4-aa710d221834/vdis/95ac8089-69f3-404e-b902-21d0e878eec2/0e0733db-3db0-437b-aff5-d2c7644c08a2/20251002T174615Z.vhd" } }, "message": "VHD check error" }, { "data": { "backup": "/xo-vm-backups/2eaf6b24-ae55-6e01-a3a4-aa710d221834/20251002T174615Z.json", "missingVhds": [ "/xo-vm-backups/2eaf6b24-ae55-6e01-a3a4-aa710d221834/vdis/95ac8089-69f3-404e-b902-21d0e878eec2/0e0733db-3db0-437b-aff5-d2c7644c08a2/20251002T174615Z.vhd" ] }, "message": "some VHDs linked to the backup are missing" } ], "end": 1759427397842, "result": { "merge": false } } ], "end": 1759427397855 } ], "infos": [ { "message": "Transfer data using NBD" } ], "end": 1759427397855, "result": { "code": 
"VDI_IN_USE", "params": [ "OpaqueRef:e9edb90f-c8c4-b7d5-889b-893779a626de", "destroy" ], "task": { "uuid": "db444258-2a26-a05f-3e5a-d6f1da90f46d", "name_label": "Async.VDI.destroy", "name_description": "", "allowed_operations": [], "current_operations": {}, "created": "20251002T17:49:57Z", "finished": "20251002T17:49:57Z", "status": "failure", "resident_on": "OpaqueRef:fd6f7486-5079-c16d-eac9-59586ef1b0f9", "progress": 1, "type": "<none/>", "result": "", "error_info": [ "VDI_IN_USE", "OpaqueRef:e9edb90f-c8c4-b7d5-889b-893779a626de", "destroy" ], "other_config": {}, "subtask_of": "OpaqueRef:NULL", "subtasks": [], "backtrace": "(((process xapi)(filename ocaml/xapi/message_forwarding.ml)(line 5189))((process xapi)(filename ocaml/libs/xapi-stdext/lib/xapi-stdext-pervasives/pervasiveext.ml)(line 24))((process xapi)(filename ocaml/libs/xapi-stdext/lib/xapi-stdext-pervasives/pervasiveext.ml)(line 39))((process xapi)(filename ocaml/xapi/helpers.ml)(line 1706))((process xapi)(filename ocaml/xapi/message_forwarding.ml)(line 5178))((process xapi)(filename ocaml/xapi/rbac.ml)(line 188))((process xapi)(filename ocaml/xapi/rbac.ml)(line 197))((process xapi)(filename ocaml/xapi/server_helpers.ml)(line 77)))" }, "message": "VDI_IN_USE(OpaqueRef:e9edb90f-c8c4-b7d5-889b-893779a626de, destroy)", "name": "XapiError", "stack": "XapiError: VDI_IN_USE(OpaqueRef:e9edb90f-c8c4-b7d5-889b-893779a626de, destroy)\n at XapiError.wrap (file:///opt/xo/xo-builds/xen-orchestra-202509301501/packages/xen-api/_XapiError.mjs:16:12)\n at default (file:///opt/xo/xo-builds/xen-orchestra-202509301501/packages/xen-api/_getTaskResult.mjs:13:29)\n at Xapi._addRecordToCache (file:///opt/xo/xo-builds/xen-orchestra-202509301501/packages/xen-api/index.mjs:1073:24)\n at file:///opt/xo/xo-builds/xen-orchestra-202509301501/packages/xen-api/index.mjs:1107:14\n at Array.forEach (<anonymous>)\n at Xapi._processEvents (file:///opt/xo/xo-builds/xen-orchestra-202509301501/packages/xen-api/index.mjs:1097:12)\n at 
Xapi._watchEvents (file:///opt/xo/xo-builds/xen-orchestra-202509301501/packages/xen-api/index.mjs:1270:14)\n at process.processTicksAndRejections (node:internal/process/task_queues:105:5)" } } ], "end": 1759427397855 -
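For anyone reading a log like this, a small script can pull out just the warnings and the error code instead of scanning the raw JSON. This is only a rough sketch: the `sample` dict is a hand-trimmed copy of the log above, the top-level `"vm"` label is a placeholder (the parent task's own fields are cut off in the paste), and `walk_tasks` / `summarize` are names made up for this example, not part of Xen Orchestra.

```python
from typing import Any, Iterator


def walk_tasks(
    task: dict[str, Any], path: tuple[str, ...] = ()
) -> Iterator[tuple[tuple[str, ...], dict[str, Any]]]:
    """Yield (path, task) for a task and all of its nested sub-tasks."""
    here = path + (str(task.get("message", "?")),)
    yield here, task
    for sub in task.get("tasks", []):
        yield from walk_tasks(sub, here)


def summarize(log: dict[str, Any]) -> list[str]:
    """Collect warnings and XAPI-style error codes from a backup log tree."""
    findings = []
    for path, task in walk_tasks(log):
        where = " > ".join(path)
        for warning in task.get("warnings", []):
            findings.append(f"{where}: warning: {warning['message']}")
        result = task.get("result")
        # In the log above, the failed step carries a dict result with a "code" key.
        if isinstance(result, dict) and "code" in result:
            findings.append(f"{where}: error: {result['code']}")
    return findings


# Hand-trimmed from the pasted log; "vm" is a placeholder label (assumption).
sample = {
    "message": "vm",
    "tasks": [
        {"message": "clean-vm", "status": "success", "result": {"merge": False}},
        {"message": "snapshot", "status": "success",
         "result": "cd33d2e1-b161-4258-a1dc-704402cf9f96"},
        {
            "message": "export",
            "status": "success",
            "tasks": [
                {"message": "transfer", "status": "success",
                 "result": {"size": 11827937280}},
                {
                    "message": "clean-vm",
                    "status": "success",
                    "warnings": [
                        {"message": "VHD check error"},
                        {"message": "some VHDs linked to the backup are missing"},
                    ],
                    "result": {"merge": False},
                },
            ],
        },
    ],
    "result": {
        "code": "VDI_IN_USE",
        "params": ["OpaqueRef:e9edb90f-c8c4-b7d5-889b-893779a626de", "destroy"],
    },
}

for line in summarize(sample):
    print(line)
```

On this sample it reports the `VDI_IN_USE` error on the top-level task and the two `clean-vm` warnings under `export`, which matches what the raw log shows: the transfer itself succeeded, and the failure came from destroying a VDI afterwards.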

Maybe this was caused by Veeam? Other VMs that were backed up by Veeam are not having issues, just this one.

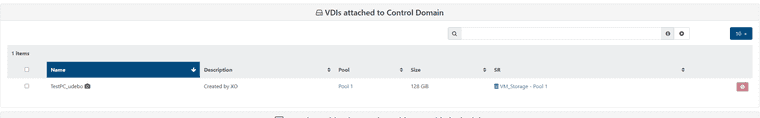

When I click the Forget button, this error shows:

OPERATION_NOT_ALLOWED(VBD '817247bb-50a9-6b1a-04bc-1c7458e9f824' still attached to '5e876c35-6d27-4090-950b-a4d2a94d4ec8')

When I click Disconnect:

INTERNAL_ERROR(Expected 0 or 1 VDI with datapath, had 3)

When I click Forget:

OPERATION_NOT_ALLOWED(VBD '817247bb-50a9-6b1a-04bc-1c7458e9f824' still attached to '5e876c35-6d27-4090-950b-a4d2a94d4ec8')

When I click Destroy:

VDI_IN_USE(OpaqueRef:e9edb90f-c8c4-b7d5-889b-893779a626de, destroy) -

@acebmxer if possible, restart the toolstack on the host this VM is attached to.

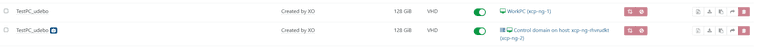

Then check whether this VDI is still attached to dom0. Sometimes a full reboot of the host is needed, and you get an orphaned snapshot instead of a VDI attached to dom0.

Delete the orphaned snapshot and backups will work like before.
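If you would rather script the "is this VDI still attached to dom0" check than click through the UI, something like the following might help. It is a sketch under assumptions: `vbds_attached_to_dom0` is a made-up helper, and it works on plain dicts shaped like XAPI VBD and VM records (fields `VDI`, `VM`, `currently_attached`, `uuid`, `is_control_domain`). With the XenAPI Python bindings those records would presumably come from `session.xenapi.VBD.get_all_records()` and `session.xenapi.VM.get_all_records()`. The sample data below is illustrative only: the `OpaqueRef:dom0-example` and `OpaqueRef:vbd-example` refs are invented, and pairing the VBD UUID from this thread with that VDI ref is a guess.

```python
from typing import Any


def vbds_attached_to_dom0(
    vdi_ref: str,
    vbd_records: dict[str, dict[str, Any]],
    vm_records: dict[str, dict[str, Any]],
) -> list[str]:
    """Return UUIDs of VBDs that still plug the given VDI into a control domain."""
    hits = []
    for vbd in vbd_records.values():
        # Skip VBDs for other disks, and VBDs that are not currently plugged.
        if vbd["VDI"] != vdi_ref or not vbd["currently_attached"]:
            continue
        vm = vm_records.get(vbd["VM"], {})
        if vm.get("is_control_domain"):
            hits.append(vbd["uuid"])
    return hits


# Illustrative records only (refs are invented; see the note above).
vdi = "OpaqueRef:e9edb90f-c8c4-b7d5-889b-893779a626de"
vms = {"OpaqueRef:dom0-example": {"is_control_domain": True}}
vbds = {
    "OpaqueRef:vbd-example": {
        "uuid": "817247bb-50a9-6b1a-04bc-1c7458e9f824",
        "VDI": vdi,
        "VM": "OpaqueRef:dom0-example",
        "currently_attached": True,
    }
}

print(vbds_attached_to_dom0(vdi, vbds, vms))
```

An empty list after the toolstack restart or host reboot would suggest the VDI is no longer held by dom0, so the leftover snapshot can be removed.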

-

I thought restarting the toolstack affected both hosts? Either way, I did try that, but originally on host 1. So I restarted it on host 2 (where the problem is). Still no luck. I rebooted host 2 and that seemed to do the trick. It took a few minutes for garbage collection to complete. All clean now.

Thanks.