mirror backup to S3

-

@acebmxer (excuse my poor English!)

I now have this setup:

1 backup job to a NAS, with two disabled schedules: one full and one delta

1 full mirror backup to Wasabi (S3)

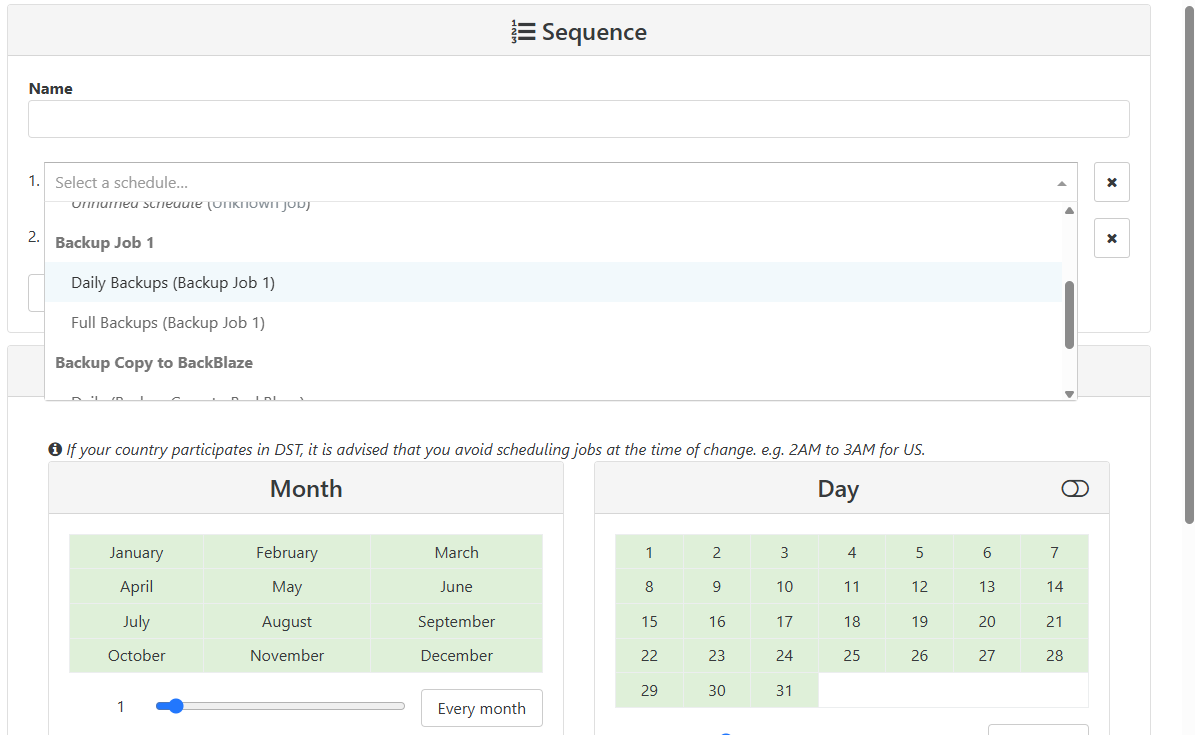

1 incremental mirror backup

I've created two sequences:

one that runs on Sunday for the full backup (full backup, then full mirror)

one every 3 hours (delta backup, then incremental mirror)

The jobs start at the correct time, but the incremental mirror sends the same amount of data every time.

Backup to NAS:

```
dns_interno1 (ctx1.tosnet.it)
  Transfer data using NBD
  Clean VM directory
    cleanVm: incorrect backup size in metadata
    Start: 2025-06-24 16:00  End: 2025-06-24 16:00
  Snapshot
    Start: 2025-06-24 16:00  End: 2025-06-24 16:00
  Backup XEN OLD
    transfer  Start: 2025-06-24 16:00  End: 2025-06-24 16:01  Duration: a few seconds  Size: 132 MiB  Speed: 11.86 MiB/s
    Start: 2025-06-24 16:00  End: 2025-06-24 16:01  Duration: a minute
  Start: 2025-06-24 16:00  End: 2025-06-24 16:01  Duration: a minute
  Type: delta

dns_interno1 (ctx1.tosnet.it)
  Wasabi
    transfer  Start: 2025-06-24 16:02  End: 2025-06-24 16:15  Duration: 13 minutes  Size: 25.03 GiB  Speed: 34.14 MiB/s
    transfer  Start: 2025-06-24 16:15  End: 2025-06-24 16:15  Duration: a few seconds  Size: 394 MiB  Speed: 22.49 MiB/s
    Start: 2025-06-24 16:02  End: 2025-06-24 16:17  Duration: 15 minutes
  Wasabi
    Start: 2025-06-24 16:15  End: 2025-06-24 16:17  Duration: 2 minutes
  Start: 2025-06-24 16:02  End: 2025-06-24 16:17  Duration: 15 minutes
```

The job sends about 25 GiB to Wasabi every time, not just the incremental data.

-

What I posted were just examples of how I have my backups configured. You don't have to name the backup jobs the same as mine. Maybe someone else with more experience can step in. Double-check your schedules and make sure you didn't set incorrect settings in one of the schedules/jobs.

-

@acebmxer Of course, this is only a test.

The problem is not the scheduling, but why the incremental mirror sends all the data every time.

-

@robyt said in mirror backup to S3:

1 backup job to a NAS, with two disabled schedules: one full and one delta

Hi robyt

In the mirror settings, "full mirror" is for mirroring full backups (called "Backup"), which are stored as one file per VM.

The incremental mirror takes care of all the files created by a delta backup.

Can you show me what retention you have on both sides? There is more explanation of the synchronization algorithm here: https://docs.xen-orchestra.com/mirror_backup. In short, a delta mirror can only be done if part of the disk chains is common to both remotes.
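The rule that a delta mirror needs part of the disk chain in common on both remotes can be pictured with a toy model. This Python sketch is not how Xen Orchestra implements mirroring (the linked documentation describes that); the chain representation, the backup names and `files_to_mirror` are all hypothetical. It only illustrates the idea: with a common base, only the newer deltas are sent; without one, the key disk goes too, which is one plausible explanation for a mirror that re-sends about 25 GiB on every run.

```python
# Toy model only, NOT Xen Orchestra's real mirror algorithm.
# Assumption: a disk chain is an ordered list of backup IDs, the key (full)
# disk first, followed by the deltas that depend on it.


def files_to_mirror(source_chain: list[str], target_chain: list[str]) -> list[str]:
    """Return the source backups this hypothetical incremental mirror must copy."""
    common = set(source_chain) & set(target_chain)
    if not common:
        # No shared base on the target: the whole chain, key disk included,
        # has to be sent again.
        return list(source_chain)
    # Position of the newest backup that already exists on both remotes...
    last_common = max(source_chain.index(backup) for backup in common)
    # ...only what comes after it needs to be transferred.
    return source_chain[last_common + 1:]


source = ["key-sunday", "delta-03h", "delta-06h"]
print(files_to_mirror(source, ["key-sunday", "delta-03h"]))  # ['delta-06h']
print(files_to_mirror(source, ["key-previous-week"]))  # ['key-sunday', 'delta-03h', 'delta-06h']
```

In this model, mismatched retention on the two remotes shows up as the "no common base" branch.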

-

@florent Hi, I've adjusted the retention parameters and I'm waiting a few days of backups/mirrors to check.

-

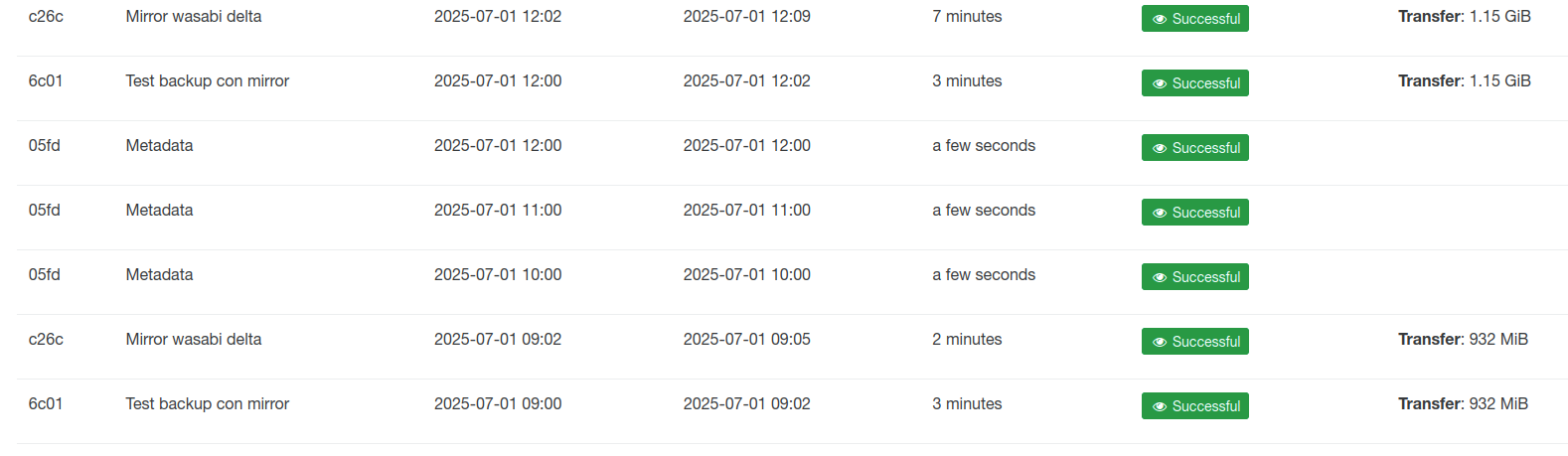

Hi @florent, I've cleaned the backup data and set the correct retention, and now it's fine.

I'm lowering the number of NBD connections (from 4 to 1); the speed of "test backup con mirror" is too low.

-

@robyt 2 is generally a sweet spot

-

@florent I have a small problem with the backup to S3/Wasabi.

For delta, everything seems OK:

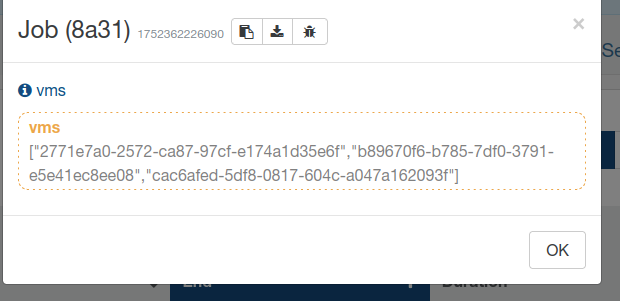

```json
{
  "data": { "mode": "delta", "reportWhen": "failure" },
  "id": "1751914964818",
  "jobId": "e4adc26c-8723-4388-a5df-c2a1663ed0f7",
  "jobName": "Mirror wasabi delta",
  "message": "backup",
  "scheduleId": "62a5edce-88b8-4db9-982e-ad2f525c4eb9",
  "start": 1751914964818,
  "status": "success",
  "infos": [
    {
      "data": {
        "vms": [
          "2771e7a0-2572-ca87-97cf-e174a1d35e6f",
          "b89670f6-b785-7df0-3791-e5e41ec8ee08",
          "cac6afed-5df8-0817-604c-a047a162093f"
        ]
      },
      "message": "vms"
    }
  ],
  "tasks": [
    {
      "data": { "type": "VM", "id": "b89670f6-b785-7df0-3791-e5e41ec8ee08" },
      "id": "1751914968373", "message": "backup VM", "start": 1751914968373, "status": "success",
      "tasks": [
        { "id": "1751914968742", "message": "clean-vm", "start": 1751914968742, "status": "success", "end": 1751914979708, "result": { "merge": false } },
        {
          "data": { "id": "ea222c7a-b242-4605-83f0-fdcc9865eb88", "type": "remote" },
          "id": "1751914984503", "message": "export", "start": 1751914984503, "status": "success",
          "tasks": [
            { "id": "1751914984667", "message": "transfer", "start": 1751914984667, "status": "success", "end": 1751914992365, "result": { "size": 125829120 } },
            {
              "id": "1751914995521", "message": "clean-vm", "start": 1751914995521, "status": "success",
              "tasks": [
                { "id": "1751915004208", "message": "merge", "start": 1751915004208, "status": "success", "end": 1751915018911 }
              ],
              "end": 1751915020075, "result": { "merge": true }
            }
          ],
          "end": 1751915020077
        }
      ],
      "end": 1751915020077
    },
    {
      "data": { "type": "VM", "id": "2771e7a0-2572-ca87-97cf-e174a1d35e6f" },
      "id": "1751914968380", "message": "backup VM", "start": 1751914968380, "status": "success",
      "tasks": [
        { "id": "1751914968903", "message": "clean-vm", "start": 1751914968903, "status": "success", "end": 1751914979840, "result": { "merge": false } },
        {
          "data": { "id": "ea222c7a-b242-4605-83f0-fdcc9865eb88", "type": "remote" },
          "id": "1751914986808", "message": "export", "start": 1751914986808, "status": "success",
          "tasks": [
            { "id": "1751914987416", "message": "transfer", "start": 1751914987416, "status": "success", "end": 1751914993152, "result": { "size": 119537664 } },
            {
              "id": "1751914996024", "message": "clean-vm", "start": 1751914996024, "status": "success",
              "tasks": [
                { "id": "1751915005023", "message": "merge", "start": 1751915005023, "status": "success", "end": 1751915035567 }
              ],
              "end": 1751915039414, "result": { "merge": true }
            }
          ],
          "end": 1751915039414
        }
      ],
      "end": 1751915039415
    },
    {
      "data": { "type": "VM", "id": "cac6afed-5df8-0817-604c-a047a162093f" },
      "id": "1751915020089", "message": "backup VM", "start": 1751915020089, "status": "success",
      "tasks": [
        { "id": "1751915020443", "message": "clean-vm", "start": 1751915020443, "status": "success", "end": 1751915030194, "result": { "merge": false } },
        {
          "data": { "id": "ea222c7a-b242-4605-83f0-fdcc9865eb88", "type": "remote" },
          "id": "1751915034962", "message": "export", "start": 1751915034962, "status": "success",
          "tasks": [
            { "id": "1751915035142", "message": "transfer", "start": 1751915035142, "status": "success", "end": 1751915052723, "result": { "size": 719323136 } },
            {
              "id": "1751915056146", "message": "clean-vm", "start": 1751915056146, "status": "success",
              "tasks": [
                { "id": "1751915064681", "message": "merge", "start": 1751915064681, "status": "success", "end": 1751915116508 }
              ],
              "end": 1751915117838, "result": { "merge": true }
            }
          ],
          "end": 1751915117839
        }
      ],
      "end": 1751915117839
    }
  ],
  "end": 1751915117839
}
```

For full, I'm not sure:

```json
{
  "data": { "mode": "full", "reportWhen": "always" },
  "id": "1751757492933",
  "jobId": "35c78a31-67c5-47ba-9988-9c4cb404ed8e",
  "jobName": "Mirror wasabi full",
  "message": "backup",
  "scheduleId": "476b863d-a651-42e5-9bb3-db830dbdac7c",
  "start": 1751757492933,
  "status": "success",
  "infos": [
    {
      "data": {
        "vms": [
          "2771e7a0-2572-ca87-97cf-e174a1d35e6f",
          "b89670f6-b785-7df0-3791-e5e41ec8ee08",
          "cac6afed-5df8-0817-604c-a047a162093f"
        ]
      },
      "message": "vms"
    }
  ],
  "end": 1751757496499
}
```

XOA sent me an email with this report:

```
Job ID: 35c78a31-67c5-47ba-9988-9c4cb404ed8e
Run ID: 1751757492933
Mode: full
Start time: Sunday, July 6th 2025, 1:18:12 am
End time: Sunday, July 6th 2025, 1:18:16 am
Duration: a few seconds
```

Four seconds for 203 GB?
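The two JSON logs can also be compared mechanically. This Python sketch assumes only the structure visible in the logs above (nested `tasks`, with each `transfer` task carrying `result.size` in bytes); the log copies are trimmed to the fields it reads, and `transfer_sizes` is a hypothetical helper, not part of Xen Orchestra. The delta run reports three transfers totalling about 920 MiB, while the full run has no `tasks` entry at all, so no VM data was copied during those four seconds.

```python
import json

# Trimmed copies of the two job logs above: only the fields this sketch reads.
DELTA_LOG = json.loads("""
{"jobName": "Mirror wasabi delta", "tasks": [
  {"message": "backup VM", "tasks": [{"message": "export", "tasks": [
    {"message": "transfer", "result": {"size": 125829120}}]}]},
  {"message": "backup VM", "tasks": [{"message": "export", "tasks": [
    {"message": "transfer", "result": {"size": 119537664}}]}]},
  {"message": "backup VM", "tasks": [{"message": "export", "tasks": [
    {"message": "transfer", "result": {"size": 719323136}}]}]}
]}
""")
# The full mirror log has no "tasks" key at all.
FULL_LOG = json.loads('{"jobName": "Mirror wasabi full", "status": "success"}')


def transfer_sizes(task: dict) -> list[int]:
    """Recursively collect result.size (bytes) from every 'transfer' task."""
    sizes = []
    if task.get("message") == "transfer":
        sizes.append(task.get("result", {}).get("size", 0))
    for child in task.get("tasks", []):
        sizes.extend(transfer_sizes(child))
    return sizes


for log in (DELTA_LOG, FULL_LOG):
    sizes = transfer_sizes(log)
    print(f"{log['jobName']}: {len(sizes)} transfer(s), {sum(sizes) / 2**20:.0f} MiB")
# Mirror wasabi delta: 3 transfer(s), 920 MiB
# Mirror wasabi full: 0 transfer(s), 0 MiB
```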

-

Any ideas?

-

@robyt Are you using full backups (called "Backup") on the source?

Because the incremental mirror transfers all the backups generated by a "delta backup", whether it's the first transfer or a following delta (our terminology can be confusing for now).

-

@florent said in mirror backup to S3:

@robyt Are you using full backups (called "Backup") on the source?

Because the incremental mirror transfers all the backups generated by a "delta backup", whether it's the first transfer or a following delta (our terminology can be confusing for now).

Hi, I have two delta jobs, one with "force full backup" checked.

In the log I only have this:

-

@robyt You're doing incremental backups in 2 steps: a complete backup (full/key disks) and deltas (differencing/incremental). Both of these are transferred through an incremental mirror.

On the other hand, if you use Backup, it builds one XVA file per VM containing all the VM data at each backup. These are transferred through a full backup mirror.

We are working on clarifying the vocabulary.
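The distinction above amounts to a routing rule: files produced by incremental backup jobs (key disks and deltas) go through the incremental mirror, while XVA files produced by "Backup" jobs go through the full mirror. This Python toy model encodes only that rule; `BackupKind`, `mirror_mode_for`, `split_by_mirror` and the backup names are hypothetical and do not correspond to any Xen Orchestra API. With two delta jobs (one forcing a full backup), nothing lands in the full-mirror bucket, which is consistent with a full mirror run that finishes in seconds.

```python
from enum import Enum


class BackupKind(Enum):
    """Hypothetical labels for the three kinds of files described above."""
    KEY_DISK = "key"   # complete (full/key) disk from an incremental backup job
    DELTA = "delta"    # differencing disk from an incremental backup job
    XVA = "xva"        # one XVA file per VM from a "Backup" job


def mirror_mode_for(kind: BackupKind) -> str:
    """Return which mirror mode copies a given kind of file (toy model)."""
    if kind is BackupKind.XVA:
        return "full mirror"
    # Key disks and deltas both come from incremental backup jobs.
    return "incremental mirror"


def split_by_mirror(backups: list[tuple[str, BackupKind]]) -> dict[str, list[str]]:
    """Group backup names by the mirror mode that would pick them up."""
    plan: dict[str, list[str]] = {"full mirror": [], "incremental mirror": []}
    for name, kind in backups:
        plan[mirror_mode_for(kind)].append(name)
    return plan


# Two incremental jobs, one with "force full backup" checked: no XVA files.
source = [("sunday-forced-full", BackupKind.KEY_DISK), ("delta-03h", BackupKind.DELTA)]
print(split_by_mirror(source))
# {'full mirror': [], 'incremental mirror': ['sunday-forced-full', 'delta-03h']}
```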

-

@florent said in mirror backup to S3:

@robyt You're doing incremental backups in 2 steps: a complete backup (full/key disks) and deltas (differencing/incremental). Both of these are transferred through an incremental mirror.

On the other hand, if you use Backup, it builds one XVA file per VM containing all the VM data at each backup. These are transferred through a full backup mirror.

We are working on clarifying the vocabulary.

Ahhhh... so the full mirror to S3 is not necessary.

-

@florent mmm.

Sounds good

```
dns_interno2 (ctx3.tosnet.it)
  Wasabi
    transfer  Start: 2025-07-27 06:02  End: 2025-07-27 06:15  Duration: 13 minutes  Size: 14.21 GiB  Speed: 18.73 MiB/s
    transfer  Start: 2025-07-27 06:15  End: 2025-07-27 06:16  Duration: a few seconds  Size: 314 MiB  Speed: 12.17 MiB/s
    Start: 2025-07-27 06:02  End: 2025-07-27 06:19  Duration: 16 minutes
  Wasabi
    Start: 2025-07-27 06:15  End: 2025-07-27 06:19  Duration: 3 minutes
  Start: 2025-07-27 06:02  End: 2025-07-27 06:19  Duration: 16 minutes

dns_interno1 (ctx1.tosnet.it)
  Wasabi
    transfer  Start: 2025-07-27 06:02  End: 2025-07-27 06:20  Duration: 17 minutes  Size: 25.03 GiB  Speed: 24.84 MiB/s
    transfer  Start: 2025-07-27 06:20  End: 2025-07-27 06:20  Duration: a few seconds  Size: 506 MiB  Speed: 16.66 MiB/s
    Start: 2025-07-27 06:02  End: 2025-07-27 06:23  Duration: 21 minutes
  Wasabi
    Start: 2025-07-27 06:20  End: 2025-07-27 06:23  Duration: 3 minutes
  Start: 2025-07-27 06:02  End: 2025-07-27 06:23  Duration: 21 minutes

FtpA TEST (ctx1.tosnet.it)
  Wasabi
    transfer  Start: 2025-07-27 06:19  End: 2025-07-27 07:40  Duration: an hour  Size: 164.59 GiB  Speed: 34.67 MiB/s
    transfer  Start: 2025-07-27 07:40  End: 2025-07-27 07:41  Duration: a few seconds  Size: 804 MiB  Speed: 52.72 MiB/s
    Start: 2025-07-27 06:19  End: 2025-07-27 07:42  Duration: an hour
  Wasabi
    Start: 2025-07-27 07:40  End: 2025-07-27 07:42  Duration: 2 minutes
  Start: 2025-07-27 06:19  End: 2025-07-27 07:42  Duration: an hour
```