Host failure after patches

-

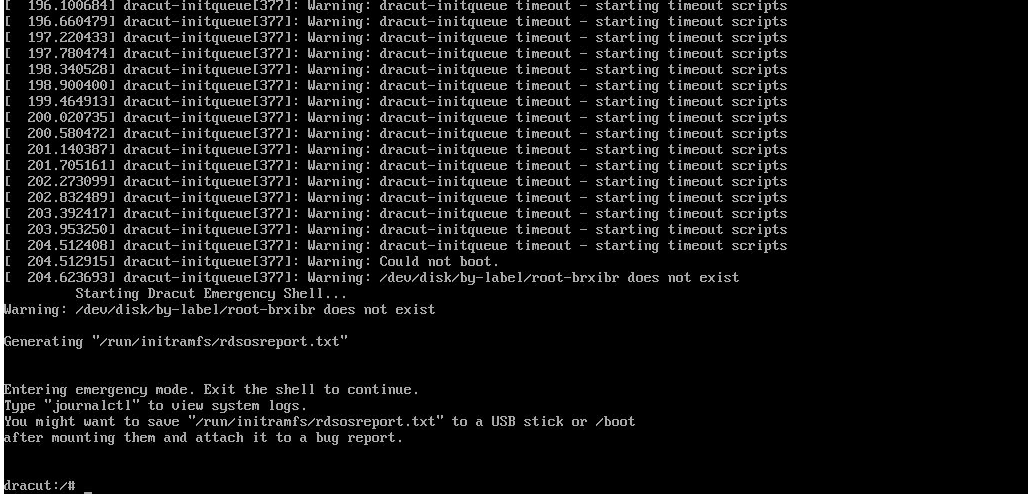

I have attempted to install outstanding patches on a pool master; however, the host will now no longer boot.

At startup the xcp-ng logo shows and when the progress bar gets to the end

Finally, the error shown is:

As this host is the pool master, I am unable to access any other hosts.

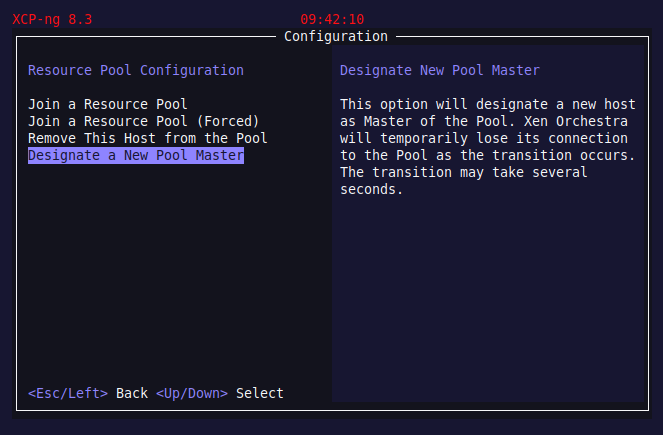

I believe I will need to elevate an existing host to be the new pool master until this is resolved. Is this correct?

-

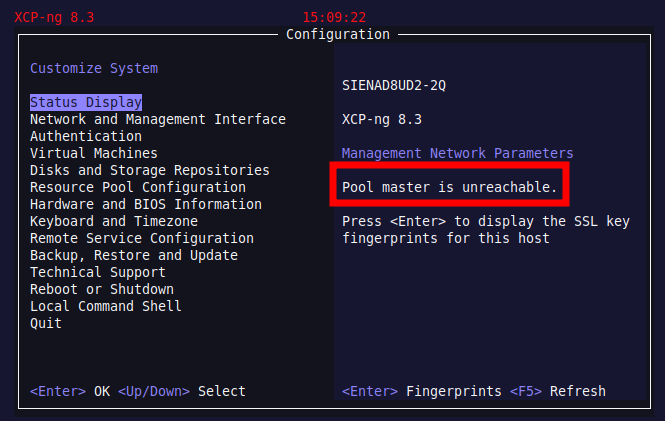

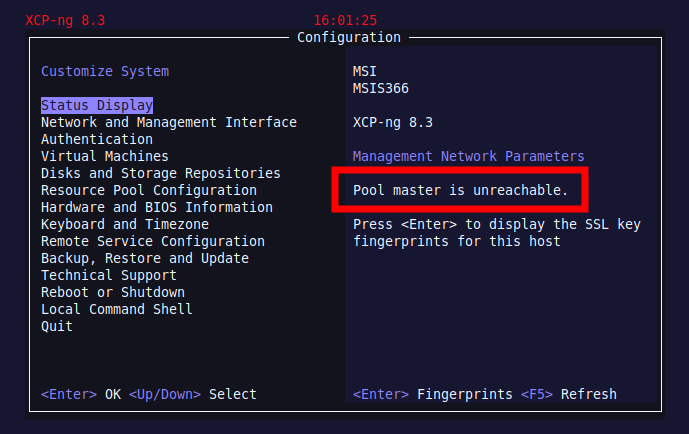

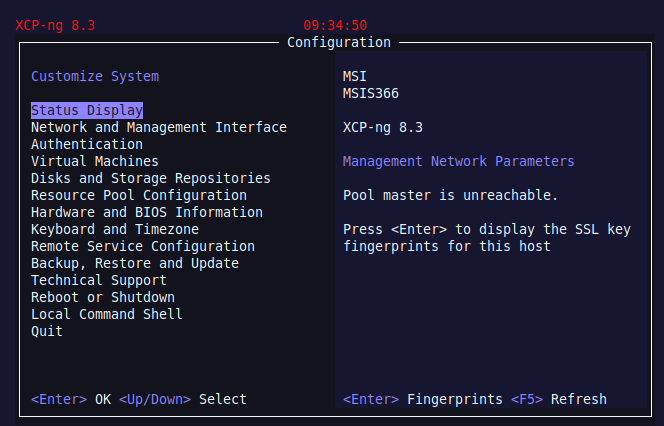

When I log into a member host I see:

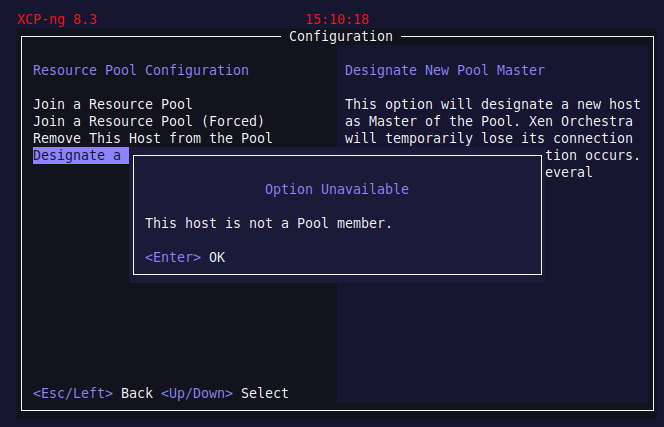

I know this host is a pool member but this message says otherwise:

Not sure what to do here...

-

@McHenry I think the command you need to run on a current slave is `xe pool-emergency-transition-to-master`, followed by `xe-toolstack-restart`.

This will make that slave the new pool master. You should only do this, though, if the current pool master is for sure dead.
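As a rough sketch of that sequence (an illustration, not an official procedure): the `run` helper and the `DRY_RUN` variable are hypothetical additions so that the commands are only printed until you set `DRY_RUN=0`. The two commands themselves are the ones named above.

```shell
#!/usr/bin/env bash
# Sketch: promote a surviving pool member to master.
# DRY_RUN defaults to 1, so commands are printed rather than executed.
DRY_RUN="${DRY_RUN:-1}"

# Hypothetical helper: print the command in dry-run mode, otherwise run it.
run() {
    if [ "$DRY_RUN" = "1" ]; then
        echo "would run: $*"
    else
        "$@"
    fi
}

promote_to_master() {
    # Run on the member that should become the new master.
    run xe pool-emergency-transition-to-master
    # Restart the toolstack so it comes back up in its new role.
    run xe-toolstack-restart
}

promote_to_master
```

Run it once as-is to check what would be executed, then again with `DRY_RUN=0` on the chosen member, and only if the old master is definitely down.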

This may also be useful:

https://docs.xenserver.com/en-us/xenserver/8/dr/machine-failures.html

-

Thank you.

So there is no temporary workaround to get the VMs up again whilst I try to fix the pool master?

I guess I could:

- Make the slave the new master

- Rebuild the failed master

- Elevate the rebuilt master to be the new pool master
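For the last step, a planned handover between two healthy hosts is normally done with `xe pool-designate-new-master` rather than the emergency command. A minimal sketch, assuming the rebuilt host has already rejoined the pool; the UUID is a placeholder, and `run` and `DRY_RUN` are illustrative helpers that only print the commands by default.

```shell
#!/usr/bin/env bash
# Sketch: hand the master role back to a rebuilt host (planned, non-emergency).
DRY_RUN="${DRY_RUN:-1}"

# Hypothetical helper: print the command in dry-run mode, otherwise run it.
run() {
    if [ "$DRY_RUN" = "1" ]; then
        echo "would run: $*"
    else
        "$@"
    fi
}

hand_back_master() {
    host_uuid="$1"
    if [ -z "$host_uuid" ]; then
        echo "usage: hand_back_master <host-uuid>" >&2
        return 1
    fi
    # List the hosts first so the UUID can be checked by eye.
    run xe host-list params=uuid,name-label
    # Ask the pool to move the master role to the given host.
    run xe pool-designate-new-master host-uuid="$host_uuid"
}

# Placeholder UUID; replace it with the rebuilt host's real UUID.
hand_back_master "00000000-0000-0000-0000-000000000000"
```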

-

@McHenry I think that is your best bet. You can also leave the new master as the master and the rebuilt host as a slave. Did the other hosts all update OK? It's kind of concerning that running updates resulted in an unbootable host.

-

I have updates for all hosts, but I understand I need to patch the pool master first, so that is what I was doing.

Will I lose all my pool settings like VLANs etc?

-

@McHenry No, moving the pool master shouldn't result in any config loss.

-

OK, pool is now up and accessible

Now I am restoring a VM that resided on the failed master. Fingers crossed.

Interestingly, the master failed to boot after installing patches. I had also installed the patches on the slave but not yet rebooted the slave. When I elevated the slave to master it said it would reboot in 10 seconds and I thought SHIT, what if this fails too.

I guess the learning here is to only install the patches when the host is ready to be restarted, not before.

Thanks for the quick response.

-

I may have spoken too soon.

The host with my DR VM does not show as being part of the pool.

Do I need to add it to the new pool master?

-

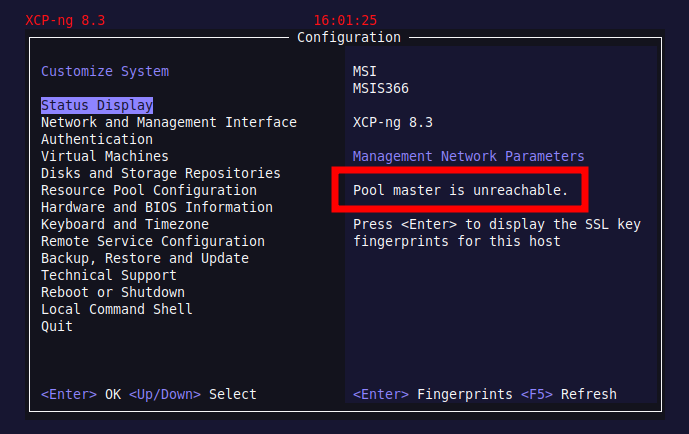

With a new pool master I am still unable to access the old pool slave as it reports the pool master is unavailable:

I expect this is because the pool slave is still looking for the old pool master and has not identified that a new pool master exists.

What needs to be done to the pool slave to have it appear as a member of the pool again?

-

-

No, I didn't. Does this need to be run on the slave itself or the new master?

When you say "after selecting a new master" do you mean after I did this on the new master?

`xe pool-emergency-transition-to-master`

Edit:

Found this which shows this is run on the new pool master:

https://www.ervik.as/how-to-change-the-pool-master-in-a-xenserver-farm/

-

Yes, you'd run that command on the new pool master.

I linked this earlier in the thread, it outlines the process you need to follow:

https://docs.xenserver.com/en-us/xenserver/8/dr/machine-failures.html

Let us know if this works!
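A hedged sketch of the two usual ways to re-point members at a new master: `xe pool-recover-slaves` is run on the new master, while `xe pool-emergency-reset-master master-address=...` is run on an individual member that still cannot find the master. Both are standard xe commands. The IP address is a placeholder from the documentation range, and `run` and `DRY_RUN` are illustrative helpers that only print the commands by default.

```shell
#!/usr/bin/env bash
# Sketch: reconnect pool members after an emergency master change.
DRY_RUN="${DRY_RUN:-1}"

# Hypothetical helper: print the command in dry-run mode, otherwise run it.
run() {
    if [ "$DRY_RUN" = "1" ]; then
        echo "would run: $*"
    else
        "$@"
    fi
}

# Run on the NEW master: tell the remaining members where the master is now.
recover_members() {
    run xe pool-recover-slaves
}

# Run on a MEMBER that still reports the master as unavailable.
repoint_member() {
    new_master_ip="$1"
    if [ -z "$new_master_ip" ]; then
        echo "usage: repoint_member <new-master-ip>" >&2
        return 1
    fi
    run xe pool-emergency-reset-master master-address="$new_master_ip"
}

recover_members
# 192.0.2.10 is a placeholder; use the new master's management IP.
repoint_member "192.0.2.10"
```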

-

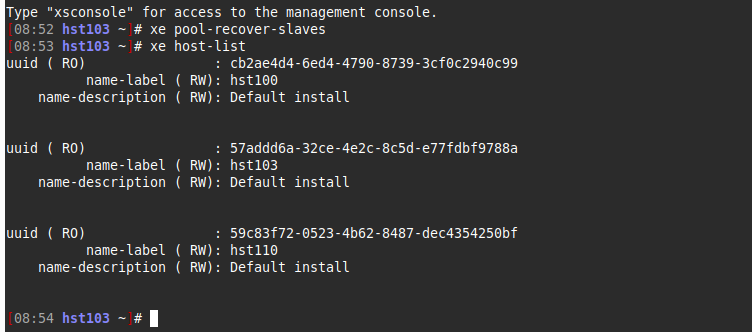

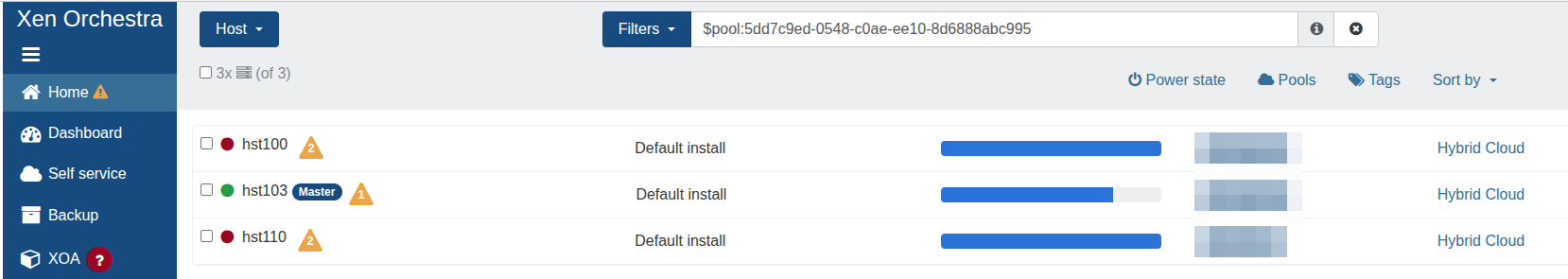

Apologies, I missed that. I have run the command and now see:

hst103 is the new pool master. hst100 is the old pool master that failed.

In XO I can only see hst103 under hosts; however, all three hosts are listed under the pool:

-

@McHenry Have you tried restarting the toolstack on the hosts since running `pool-recover-slaves`?

I have only had to do this once before but remember it going fairly smoothly at the time. (As smooth as you can expect a host failure to be anyways)

-

I have restarted the toolstack but it made no difference.

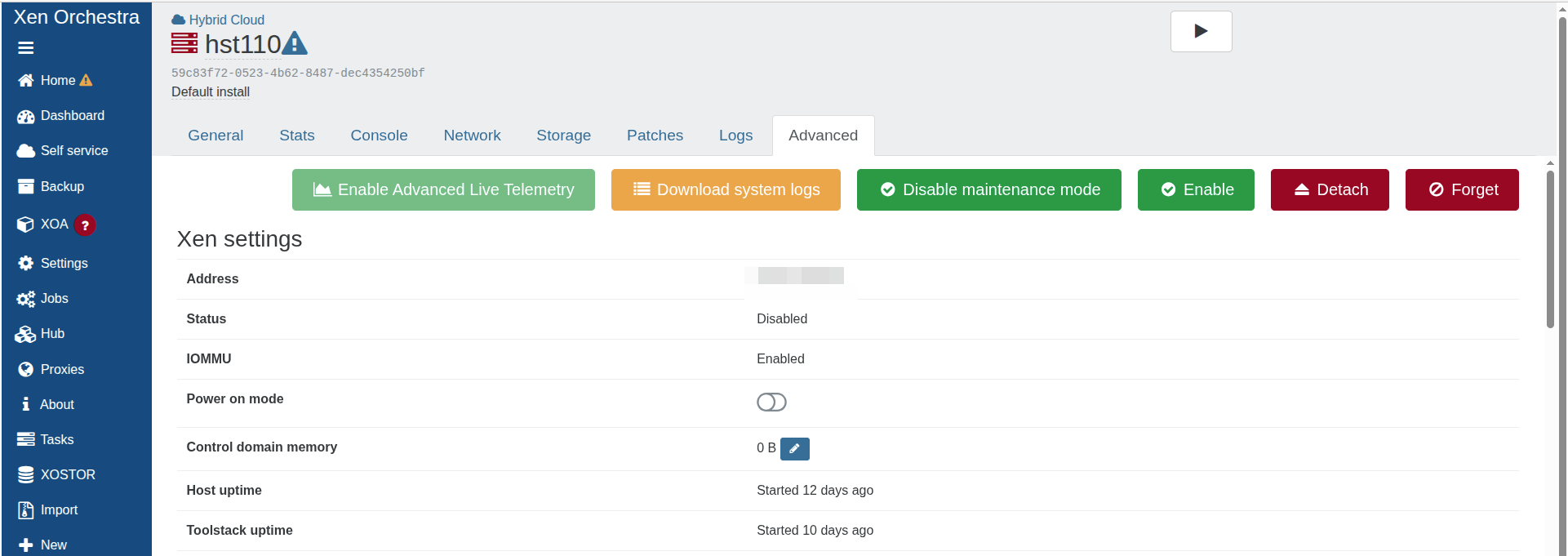

When I view hst110 I see:

I was tempted to restart it; however, it has patches pending installation after a restart, and as the master is not fully patched I thought it best not to restart it. I understand the master needs to be patched first.

I just checked xsconsole on hst110 and it still shows the pool master as unavailable.

Do I need to change the pool master used by the slave?

-

@McHenry I am wondering if you are in a situation where you need to reboot. You have patches installed but have not rebooted, yet you have restarted the toolstack on the slaves, which means some components have restarted and are running on their new versions? If you have support, it may be best to reach out to Vates for guidance.

-

Can I restart the slave and install patches if the master has not been patched yet?

-

@McHenry No, the pool master must always be patched and rebooted first. Do you have a pool metadata backup? Are your VMs on shared storage of some sort? I ask in case you need to rebuild the pool.
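If there is no metadata backup yet, one way to take one from the CLI is `xe pool-dump-database`, run on the current pool master. A minimal sketch: the output directory and file naming are assumptions, and `run` and `DRY_RUN` are illustrative helpers, so nothing executes unless `DRY_RUN=0`.

```shell
#!/usr/bin/env bash
# Sketch: dump the pool database (pool metadata) to a dated file.
DRY_RUN="${DRY_RUN:-1}"

# Hypothetical helper: print the command in dry-run mode, otherwise run it.
run() {
    if [ "$DRY_RUN" = "1" ]; then
        echo "would run: $*"
    else
        "$@"
    fi
}

backup_pool_metadata() {
    # Target directory is an assumption; ideally use storage off the host.
    dir="${1:-/root}"
    file="$dir/pool-metadata-$(date +%Y%m%d).db"
    run xe pool-dump-database file-name="$file"
}

backup_pool_metadata /root
```

Copy the resulting file off the host, since a dump that only lives on the master is lost along with it.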

-

My setup is pretty basic.

I have two hosts in the pool, one for running VMs on local storage and one for DR backups on local storage.

I'd like to set up shared storage so I could run the VMs on multiple hosts and seamlessly move them between hosts without migrating storage too.

To set up shared storage, would this be on an xcp-ng host or totally independent of xcp-ng?