Auto coalescing of disks

-

Hi,

On my SR I have 8 VDIs that require coalescing. Most of these are between 20 and 80 GB in size, with a chain length of 1-4. Roughly how long would this take, and when can I expect it to happen? Is it actively happening in the background?

- I have no snapshots

- No orphaned VDI's

- The disk is a thick provisioned lvmohba

- There is 1.63TB free on the disk

- I'm running XO server 5.71.2, XenServer 7.4.0

Question 2 - can I force coalesce by rescanning the disk? If I rescan the disk, is there any chance of this causing an outage?

Thanks,

Chris.

-

@chrispage1 said in Auto coalescing of disks:

Question 2 - can I force coalesce by rescanning the disk? If I rescan the disk, is there any chance of this causing an outage?

A rescan automatically kicks off the GC, outside of its regular schedule. Rescanning won't cause an outage. Do check /var/log/SMlog for any obvious error that stops the GC from working.

-

Thanks for the very quick response! I've tail'd the SMlog and as you suspected I'm getting -

    Apr 7 16:07:09 node-01 SMGC: [20480] SR 767a ('Pool') (43 VDIs in 16 VHD trees): showing only VHD trees that changed:
    Apr 7 16:07:09 node-01 SMGC: [20480] *80da56bc[VHD](10.000G//4.684G|n)
    Apr 7 16:07:09 node-01 SMGC: [20480] *4c8eff6e[VHD](80.000G//10.035G|n)
    Apr 7 16:07:09 node-01 SMGC: [20480] *c16bbd77[VHD](80.000G//68.000M|n)
    Apr 7 16:07:09 node-01 SMGC: [20480] 22229e00[VHD](80.000G//80.164G|n)
    Apr 7 16:07:09 node-01 SMGC: [20480] c09ea342[VHD](40.000G//40.086G|n)
    Apr 7 16:07:09 node-01 SMGC: [20480] 40647cba[VHD](10.000G//244.000M|n)
    Apr 7 16:07:09 node-01 SMGC: [20480] *310a023f[VHD](10.000G//1.078G|n)
    Apr 7 16:07:09 node-01 SMGC: [20480] *f6618327[VHD](10.000G//140.000M|n)
    Apr 7 16:07:09 node-01 SMGC: [20480] *524d192c[VHD](10.000G//5.262G|n)
    Apr 7 16:07:09 node-01 SMGC: [20480] 914771aa[VHD](10.000G//10.027G|n)
    Apr 7 16:07:09 node-01 SMGC: [20480] *47f40b1b[VHD](10.000G//252.000M|n)
    Apr 7 16:07:09 node-01 SMGC: [20480] *18f02843[VHD](10.000G//5.828G|n)
    Apr 7 16:07:09 node-01 SMGC: [20480] c7a0b227[VHD](10.000G//10.027G|n)
    Apr 7 16:07:09 node-01 SMGC: [20480]
    Apr 7 16:07:09 node-01 SMGC: [20480] *~*~*~*~*~*~*~*~*~*~*~*~*~*~*~*~*~*~*~*~*
    Apr 7 16:07:09 node-01 SMGC: [20480] ***********************
    Apr 7 16:07:09 node-01 SMGC: [20480] * E X C E P T I O N *
    Apr 7 16:07:09 node-01 SMGC: [20480] ***********************
    Apr 7 16:07:09 node-01 SMGC: [20480] gc: EXCEPTION <class 'XenAPI.Failure'>, ['XENAPI_PLUGIN_FAILURE', 'multi', 'CommandException', 'Input/output error']
    Apr 7 16:07:09 node-01 SMGC: [20480] File "/opt/xensource/sm/cleanup.py", line 2712, in gc
    Apr 7 16:07:09 node-01 SMGC: [20480] _gc(None, srUuid, dryRun)
    Apr 7 16:07:09 node-01 SMGC: [20480] File "/opt/xensource/sm/cleanup.py", line 2597, in _gc
    Apr 7 16:07:09 node-01 SMGC: [20480] _gcLoop(sr, dryRun)
    Apr 7 16:07:09 node-01 SMGC: [20480] File "/opt/xensource/sm/cleanup.py", line 2573, in _gcLoop
    Apr 7 16:07:09 node-01 SMGC: [20480] sr.coalesce(candidate, dryRun)
    Apr 7 16:07:09 node-01 SMGC: [20480] File "/opt/xensource/sm/cleanup.py", line 1542, in coalesce
    Apr 7 16:07:09 node-01 SMGC: [20480] self._coalesce(vdi)
    Apr 7 16:07:09 node-01 SMGC: [20480] File "/opt/xensource/sm/cleanup.py", line 1732, in _coalesce
    Apr 7 16:07:09 node-01 SMGC: [20480] vdi._doCoalesce()
    Apr 7 16:07:09 node-01 SMGC: [20480] File "/opt/xensource/sm/cleanup.py", line 1181, in _doCoalesce
    Apr 7 16:07:09 node-01 SMGC: [20480] VDI._doCoalesce(self)
    Apr 7 16:07:09 node-01 SMGC: [20480] File "/opt/xensource/sm/cleanup.py", line 693, in _doCoalesce
    Apr 7 16:07:09 node-01 SMGC: [20480] self.sr._updateSlavesOnResize(self.parent)
    Apr 7 16:07:09 node-01 SMGC: [20480] File "/opt/xensource/sm/cleanup.py", line 2492, in _updateSlavesOnResize
    Apr 7 16:07:09 node-01 SMGC: [20480] vdi.fileName, vdi.uuid, slaves)
    Apr 7 16:07:09 node-01 SMGC: [20480] File "/opt/xensource/sm/lvhdutil.py", line 300, in lvRefreshOnSlaves
    Apr 7 16:07:09 node-01 SMGC: [20480] text = session.xenapi.host.call_plugin(slave, "on-slave", "multi", args)
    Apr 7 16:07:09 node-01 SMGC: [20480] File "/usr/lib/python2.7/site-packages/XenAPI.py", line 263, in __call__
    Apr 7 16:07:09 node-01 SMGC: [20480] return self.__send(self.__name, args)
    Apr 7 16:07:09 node-01 SMGC: [20480] File "/usr/lib/python2.7/site-packages/XenAPI.py", line 159, in xenapi_request
    Apr 7 16:07:09 node-01 SMGC: [20480] result = _parse_result(getattr(self, methodname)(*full_params))
    Apr 7 16:07:09 node-01 SMGC: [20480] File "/usr/lib/python2.7/site-packages/XenAPI.py", line 237, in _parse_result
    Apr 7 16:07:09 node-01 SMGC: [20480] raise Failure(result['ErrorDescription'])
    Apr 7 16:07:09 node-01 SMGC: [20480]
    Apr 7 16:07:09 node-01 SMGC: [20480] *~*~*~*~*~*~*~*~*~*~*~*~*~*~*~*~*~*~*~*~*
    Apr 7 16:07:09 node-01 SMGC: [20480] * * * * * SR 767a9d9c-55aa-0898-fe10-400ee856bdce: ERROR
    Apr 7 16:07:09 node-01 SMGC: [20480]

This is right at the end of my log. Any ideas from the logs what might be causing this? Input/output error seems fairly ambiguous?
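For anyone triaging a similar trace, here is a minimal sketch for pulling just the exception summary out of a long SMlog excerpt. The function name `find_gc_exceptions` and the regular expression are illustrative and not part of the SM tooling. It assumes one log entry per line and that the GC reports failures with a message starting `gc: EXCEPTION`, as in the trace above.

```python
import re

# Matches the "SMGC: [pid] message" part of an SMlog entry.
SMGC_LINE = re.compile(r"SMGC: \[(?P<pid>\d+)\] ?(?P<msg>.*)$")


def find_gc_exceptions(lines):
    """Return (pid, message) for each SMGC entry that reports a GC exception."""
    hits = []
    for line in lines:
        match = SMGC_LINE.search(line.rstrip("\n"))
        if match and match.group("msg").startswith("gc: EXCEPTION"):
            hits.append((int(match.group("pid")), match.group("msg")))
    return hits


# Possible usage on a host (path taken from the thread):
#   with open("/var/log/SMlog") as log:
#       for pid, message in find_gc_exceptions(log):
#           print(pid, message)
```

The banner line `* E X C E P T I O N *` is skipped on purpose; only the entry carrying the failure class and its arguments is returned.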

Chris.

-

@chrispage1 said in Auto coalescing of disks:

Apr 7 16:07:09 node-01 SMGC: [20480] text = session.xenapi.host.call_plugin(slave, "on-slave", "multi", args)

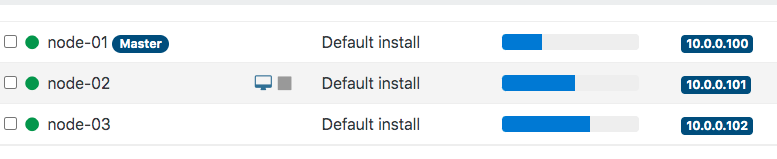

Seems the issue is here. Are all the slaves in your pool healthy?
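One way to answer that question from records rather than from the UI is sketched below. It is only an illustration: the helper name `unhealthy_hosts` is made up, and it assumes you already have the host and host_metrics records as plain dictionaries keyed by reference, using the XenAPI fields `name_label`, `enabled`, `metrics` and `live`.

```python
def unhealthy_hosts(hosts, metrics):
    """List hosts that are disabled or not reported as live.

    hosts:   {host_ref: host record}
    metrics: {metrics_ref: host_metrics record}
    Returns sorted (name_label, enabled, live) tuples for problem hosts.
    """
    problems = []
    for record in hosts.values():
        # A missing metrics record is treated as "not live".
        live = metrics.get(record["metrics"], {}).get("live", False)
        if not record["enabled"] or not live:
            problems.append((record["name_label"], record["enabled"], live))
    return sorted(problems)
```

With the Python XenAPI bindings, the two dictionaries would typically come from `session.xenapi.host.get_all_records()` and `session.xenapi.host_metrics.get_all_records()`. A host passing this check can still fail a plugin call, so an empty result does not rule the hosts out.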

-

By default the UI will only display running hosts. Be sure to have an empty search field.

-

@olivierlambert thanks - that's definitely all the hosts. Only three on this system. Any way to check each host at a deeper level?

Chris

-

Input/output error isn't a good sign.

Try rebooting all your hosts and see if the issue persists. Also check dmesg.

-

@olivierlambert ah - would the input/output error be on the nodes themselves or on the SR?

We have seen this input/output error before when attempting to snapshot or migrate VMs:

    "code": "SR_BACKEND_FAILURE_81",
    "params": [
      "",
      "Failed to clone VDI [opterr=['XENAPI_PLUGIN_FAILURE', 'multi', 'CommandException', 'Input/output error']]",
      ""
    ],

I can reboot the nodes but would be a little hesitant due to potential downtime, etc. Would restarting the toolstack help?

dmesg isn't reporting anything particularly concerning.

Appreciate your support on this,

Chris.

-

It's on the SR here. The storage code is reporting the issue, so that's why coalesce isn't happening.

-

@olivierlambert thanks. The only other thing - I was using templates so I notice on my storage that one block consists of 6 base copies & 3 VDI's.

In this format, I have issues migrating and snapshotting them. However, if I power them off, snapshot, run a full clone, and use the new clone they work fine (migratable, snapshottable, bootable). Any ideas why this might be and could this be the cause of the coalesce issue?

Thanks,

Chris.

-

@olivierlambert Hi, I've been keeping an eye on dmesg and getting the following -

    [Thu Apr 8 20:26:51 2021] device-mapper: ioctl: unable to remove open device VG_XenStorage--767a9d9c--55aa--0898--fe10--400ee856bdce-clone_ba7ea2dd--4684--4cc9--9ccd--ba1909cda656_1

I don't know if this could possibly be related to the exceptions I am seeing?
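To see which logical volume that message refers to, the device-mapper name can be decoded. For LVM volumes, device-mapper joins the volume group and logical volume names with a single hyphen and doubles any hyphen that appears inside either name. The helper below is a rough sketch under that assumption; `split_dm_name` is an illustrative name, not an existing tool.

```python
import re


def split_dm_name(dm_name):
    """Split an LVM device-mapper name into (volume_group, logical_volume)."""
    # The VG/LV separator is the only hyphen that is not part of a "--" pair.
    parts = re.split(r"(?<!-)-(?!-)", dm_name, maxsplit=1)
    if len(parts) != 2:
        raise ValueError("no VG/LV separator found in %r" % dm_name)
    vg, lv = (part.replace("--", "-") for part in parts)
    return vg, lv


name = ("VG_XenStorage--767a9d9c--55aa--0898--fe10--400ee856bdce-"
        "clone_ba7ea2dd--4684--4cc9--9ccd--ba1909cda656_1")
vg, lv = split_dm_name(name)
print(vg)  # VG_XenStorage-767a9d9c-55aa-0898-fe10-400ee856bdce
print(lv)  # clone_ba7ea2dd-4684-4cc9-9ccd-ba1909cda656_1
```

The volume group matches the SR UUID from the SMGC error above, so the open device belongs to the same SR that fails to coalesce.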

Thanks,

Chris.

-

You have weird issues on your storage (an I/O error on a badly named VHD). It's hard to tell more without investigating further.

-

@olivierlambert thanks - I'll continue to look into it.