Nested Virtualization of Windows Hyper-V on XCP-ng

-

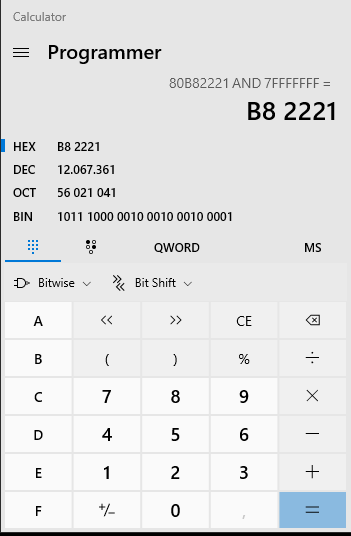

@alexanderk If you are running a current version of Windows 10, you can use the Calculator app. It has a programmer mode that allows you to enter the 32-bit source value from the platform cpuid in hex and bitwise AND it with 0x7FFFFFFF. Programmer mode is widely available on calculators for Linux too so use the tool with which you are most comfortable.

-

@xcp-ng-justgreat said in Nested Virtualization of Windows Hyper-V on XCP-ng:

0x7FFFFFFF

There are several groups of hex digits in the CPU ID.

Do I use the second group of digits? The second group is 80b82221, and after a bitwise AND with 0x7FFFFFFF I get b82221.

The result has only 6 digits. Is this correct?

-

@alexanderk Two leading hex zeros (8-bits) are implied.
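For anyone who prefers a terminal to a calculator, here is a minimal sketch of the same masking step. It uses the example word 80b82221 quoted above; substitute the value from your own host. The `%08x` format prints the implied leading zeros explicitly.

```shell
# Example word from the post above (second group of the platform cpuid).
word=0x80b82221

# Clear bit 31 and keep the remaining 31 bits unchanged.
masked=$(printf '%08x' $(( $word & 0x7FFFFFFF )))

# Always 8 hex digits, so the two leading zeros are visible.
echo "$masked"
```

The printed value is the full 32-bit word, zero-padded, which avoids any doubt about whether a 6-digit calculator result is complete.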

-

@xcp-ng-justgreat

ok thanks.

Did Docker Desktop work in your VM?

-

Is Hyper-V working without issues?

-

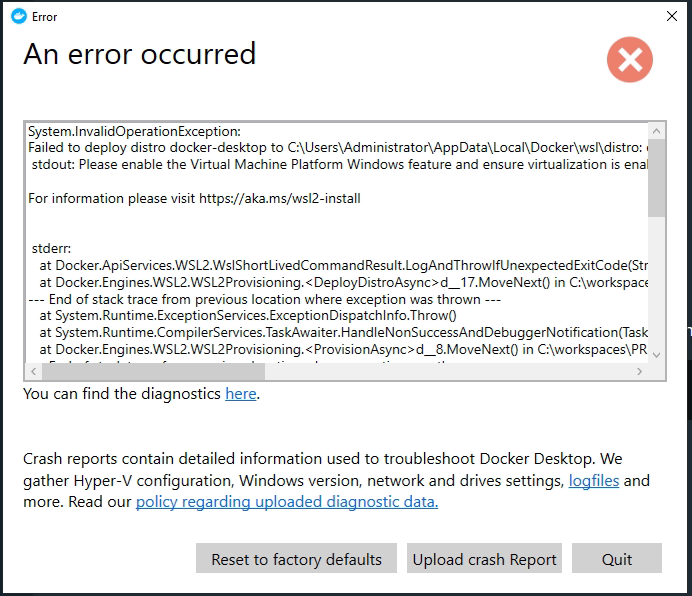

@alexanderk (With apologies to all for the following dense reading, as there is much detail. TL;DR: Hyper-V installs but remains disabled on XCP-ng. Hyper-V installs, is enabled, and starts fine on ESXi, where nested Hyper-V works. Methodology and items tested follow.)

No breakthrough yet. The Windows Hyper-V feature installs fine into the guest, but it does not appear to become active following the reboot. This is the same behavior that occurs using the pure vanilla Xen hypervisor. I set up two parallel test environments: one using ESXi 6.7 Build 17700523, which works perfectly, and another using XCP-ng, which does not yet work. Host hardware is very similar, with identical Intel processors.

Turning off the "hypervisor running" CPUID bit 31 in XCP-ng using the technique provided by @olivierlambert remains an essential prerequisite step. VMware's hypervisor.cpuid.v0 = "FALSE" .vmx setting is the equivalent configuration change required for nested virtualization to work on ESXi.

After booting identical Windows 10 VMs on each hypervisor, I ran the Windows Sysinternals CoreInfo64.exe and CoreInfo64.exe -v commands inside the guests in order to compare the processor features available in each case. The active CPU flags all appeared to be the same, except that XCP-ng had three additional flags set (acpi, hle and rtm) that were not present in the ESXi guest. Conversely, ESXi had the x2apic flag set, which was not present in the XCP-ng guest. The corresponding x2apic CPUID bit 21 in our modified platform:featureset=CPUID 32-bit word appears to be on, which should make that feature available in the VM, yet it shows as disabled in the guest.

The other difference between the two is that on ESXi, the CoreInfo64.exe -v command shows that all four CPU-assisted virtualization feature flags are reversed after Hyper-V is installed and activated following a reboot. In other words, hypervisor present becomes true (Hyper-V is now active) and the other three flags, vmx, ept and urg (unrestricted guest), are all false.

I continue to be frustrated that there doesn't seem to be an authoritative document for all of the platform:mapkey=value pairs for XenServer/XCP-ng. Similarly, there appear to be additional global hypervisor settings enabled with /opt/xensource/libexec/xen-cmdline <param(s)> for which I can't find any documentation. For example, there is the /opt/xensource/libexec/xen-cmdline --set-xen force_software_vmcs_shadow global setting that is required by the one Citrix-supported nested virtualization configuration. Search for Bromium in the Citrix online docs for further information. If anybody knows where to find the complete documentation for the platform:mapped key/value pairs and the global hypervisor settings like the one cited, I'd be very interested.

One other thing I examined was the set of system Hyper-V drivers loaded in the two nested guest configurations. Each appeared to have the same set of drivers loaded without any failures. However, the HV Host Service would not start on XCP-ng, yet it starts fine on the working ESXi-hosted VM. (It probably requires Hyper-V to be active, so this is an observed effect rather than a cause.)

Any suggestions for additional productive avenues of inquiry are much appreciated. I will keep working on it.
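The two bit positions discussed above (31 for "hypervisor present", 21 for x2apic) can be checked directly on a featureset word. This is a rough sketch: `bit_is_set` is a hypothetical helper, and the word 0x80b82221 is the example value quoted earlier in the thread from another host, not a reading from the test machines described here.

```shell
# bit_is_set WORD BIT: exit status 0 when that bit of WORD is 1.
# Hypothetical helper for illustration only.
bit_is_set() {
    [ $(( ($1 >> $2) & 1 )) -eq 1 ]
}

original=0x80b82221   # example word quoted earlier in the thread
masked=0x00b82221     # the same word after AND with 0x7FFFFFFF

# Report bit 31 (hypervisor present) and bit 21 (x2apic) for both words.
for bit in 31 21; do
    for w in "$original" "$masked"; do
        if bit_is_set "$w" "$bit"; then state=set; else state=clear; fi
        echo "$w bit $bit: $state"
    done
done
```

On the example word, masking clears bit 31 and leaves bit 21 untouched, which matches the observation that x2apic stays on in the modified featureset.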

-

Thanks for the detailed feedback @XCP-ng-JustGreat

Let me check around if I can find more information.

-

Okay so I've been told that we should try with an extra Xen patch: https://paste.debian.net/hidden/870debb8/

@stormi do we have a guide to assist on rebuilding Xen with this extra modification?

-

@olivierlambert I'm happy to try out an alternate Xen kernel if that's what we're talking about. Please let me know if there is anything else that you need from me. It feels like we are so close to solving this . . .

-

You can use our build container https://github.com/xcp-ng/xcp-ng-build-env on the RPM sources for `xen` in XCP-ng 8.2 https://github.com/xcp-ng-rpms/xen/tree/8.2 to build new packages.

To add a patch, add it to SOURCES/ and list it in the spec file. The patch may fail to apply, as it could have been made against a different version of Xen, in which case it will need to be adjusted to the current codebase.

-

I've not compiled an OS kernel before, but I'm willing to give it a try. It will probably take me a while to get the containerized development environment set up, etc. in order to build the updated kernel. I don't pretend to understand what this code does or how it may or may not fix nested Hyper-V on XCP-ng.

Here is the source code that we have been asked to integrate:

    diff --git a/xen/arch/x86/hvm/vmx/vvmx.c b/xen/arch/x86/hvm/vmx/vvmx.c
    index e9f94daf64..4c80912368 100644
    --- a/xen/arch/x86/hvm/vmx/vvmx.c
    +++ b/xen/arch/x86/hvm/vmx/vvmx.c
    @@ -2237,6 +2237,7 @@ int nvmx_msr_read_intercept(unsigned int msr, u64 *msr_content)
             /* 1-settings */
             data = PIN_BASED_EXT_INTR_MASK |
                    PIN_BASED_NMI_EXITING |
    +               PIN_BASED_VIRTUAL_NMIS |
                    PIN_BASED_PREEMPT_TIMER;
             data = gen_vmx_msr(data, VMX_PINBASED_CTLS_DEFAULT1, host_data);
             break;

-

@stormi Which host OS should be used to create the containerized XCP-ng build environment? My build host will be a VM running on XCP-ng (of course). I'm looking for the choice that mostly "just works" with the fewest adjustments. Would that be CentOS 7 or 8, Debian 10 or something else? Thanks for your guidance.

-

If you use an XCP-ng VM for building, then you don't need any containers. All build dependencies are available in the repositories, so you just need to install git, git clone the xen RPM source repository, install the build dependencies (`yum-builddep /path/to/xen.spec`), then build with rpmbuild.

The container solution is here to automate all this, and can be used on any Linux system that supports Docker.
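As a rough outline, the non-container route described above might look like the following. Several details are assumptions rather than anything confirmed in this thread: the package names `yum-utils` and `rpm-build`, the `xen` checkout directory, and the use of `--define "_topdir ..."` to point rpmbuild at the cloned tree. The `run` and `build_xen_rpms` helpers are hypothetical, and by default the script only prints the commands instead of running them.

```shell
# Sketch only: prints each command unless DRY_RUN=0 is set.
# Running for real needs root for the yum steps.
DRY_RUN=${DRY_RUN:-1}

# Hypothetical helper: echo the command in dry-run mode, else execute it.
run() {
    if [ "$DRY_RUN" = 1 ]; then echo "+ $*"; else "$@"; fi
}

build_xen_rpms() {
    src="$PWD/xen"   # assumed checkout location

    # Tools: git, yum-builddep (assumed to come from yum-utils), rpmbuild.
    run yum install -y git yum-utils rpm-build

    # RPM sources for xen, 8.2 branch, as linked earlier in the thread.
    run git clone -b 8.2 https://github.com/xcp-ng-rpms/xen.git "$src"

    # Install the build dependencies listed in the spec file.
    run yum-builddep -y "$src/SPECS/xen.spec"

    # Build binary and source packages from the cloned tree.
    run rpmbuild -ba "$src/SPECS/xen.spec" --define "_topdir $src"
}

build_xen_rpms
```

Reviewing the printed commands first and only then re-running with `DRY_RUN=0` keeps the sketch from touching the system before the assumptions have been checked against the XCP-ng docs.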

-

@stormi Sorry. I may not have been completely clear. It sounds like you are suggesting that I run a nested XCP-ng VM on my XCP-ng host. That way, all the dependencies are in place without the need for containers. Just git clone and compile in the VM running XCP-ng. Is that what you meant?

-

@xcp-ng-justgreat My fault, I misread. No, I don't suggest that. I thought that's what you were going to do so I explained how you could possibly use a nested XCP-ng for the build.

However, the container solution is preferred. It may be a bit harder to set up, but then you will be able to easily rebuild any XCP-ng package and benefit from the automated installation of dependencies each time you do a build, whatever RPM you need to build.

So now you've got two possible ways.

-

@stormi Makes sense. So which OS do you guys use to host your Docker build environment?

-

I think most of us use either Fedora (not the easiest due to its bleeding edge nature, but guides exist) or Arch. But really you should pick whatever distro you manage to find an easy "install and setup docker" guide for.

-

By the way, if someone wants to create a VM image that already has everything set up and ready to use, we'd gladly host it to make these kinds of tests easier.

-

@stormi Noted. And thank you!

-

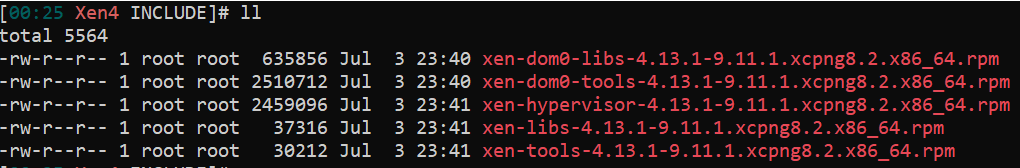

@AlexanderK Based on the patch for the /xen/arch/x86/hvm/vmx/vvmx.c module provided by @olivierlambert, and the build information provided by @stormi plus the excellent XCP-ng docs, I was able to spin up a CentOS 8 Stream VM on XCP-ng, install the latest Docker and create the XCP-ng-build-env container using build.sh 8.2. I got a bit confused by the run.py command nipping back and forth between the host and the container, so I opted to run everything inside the container as it seemed more straightforward. After pulling the XCP-ng Xen source with git, updating the spec file in SPECS with Patch245: nested-hyper-v-on-xen.patch and adding the nested-hyper-v-on-xen.patch file to SOURCES, I was ready to run rpmbuild -ba SPECS/*.spec

This is where I could use some guidance. Thirteen RPMs were successfully generated as follows:


yum list installed "xen-*" gives:


so the five matching installed packages are copied to the INCLUDE directory:

and installed from the INCLUDE directory as follows:

yum reinstall ./*

I wasn't sure if it was necessary, but I also rebuilt the initrd using dracut -f, then rebooted the host. Did I install the updated packages correctly?

If so, then here is the final result: The nested Windows VM where Hyper-V is installed no longer boots. It just freezes at the Tiano firmware boot screen. At that point, only a forced shutdown will bring the machine down. If I start the VM on one of the other unpatched hosts, it starts fine, though as before, Hyper-V will not activate in the guest OS. BCDEdit shows the hypervisorlaunchtype = Auto. Please let me know if something seems wrong with my build or application process and also if there are additional next steps. I haven't given up yet!