-

Failure trying to revert a VM to a snapshot with XOSTOR.

Created a VM with main VDI on XOSTOR (24GB) and with 6 disks each also on XOSTOR (2GB each).

All is running OK.

Now create a snapshot of the VM - this takes quite a while but does eventually succeed.

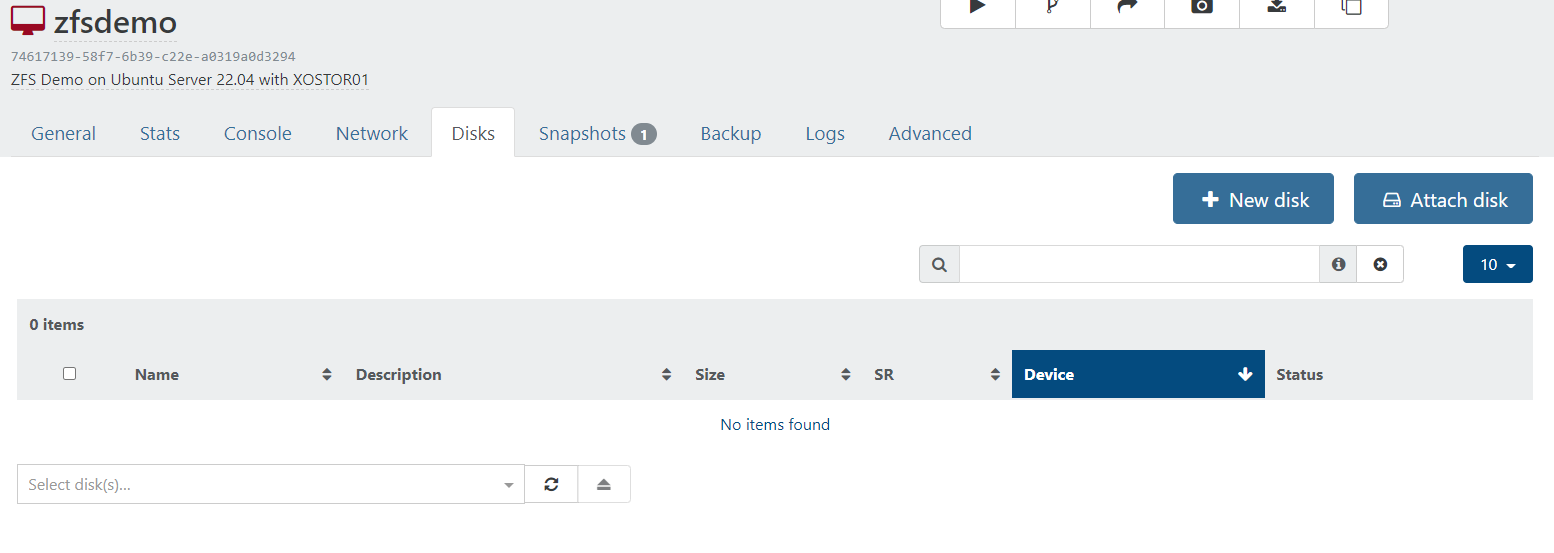

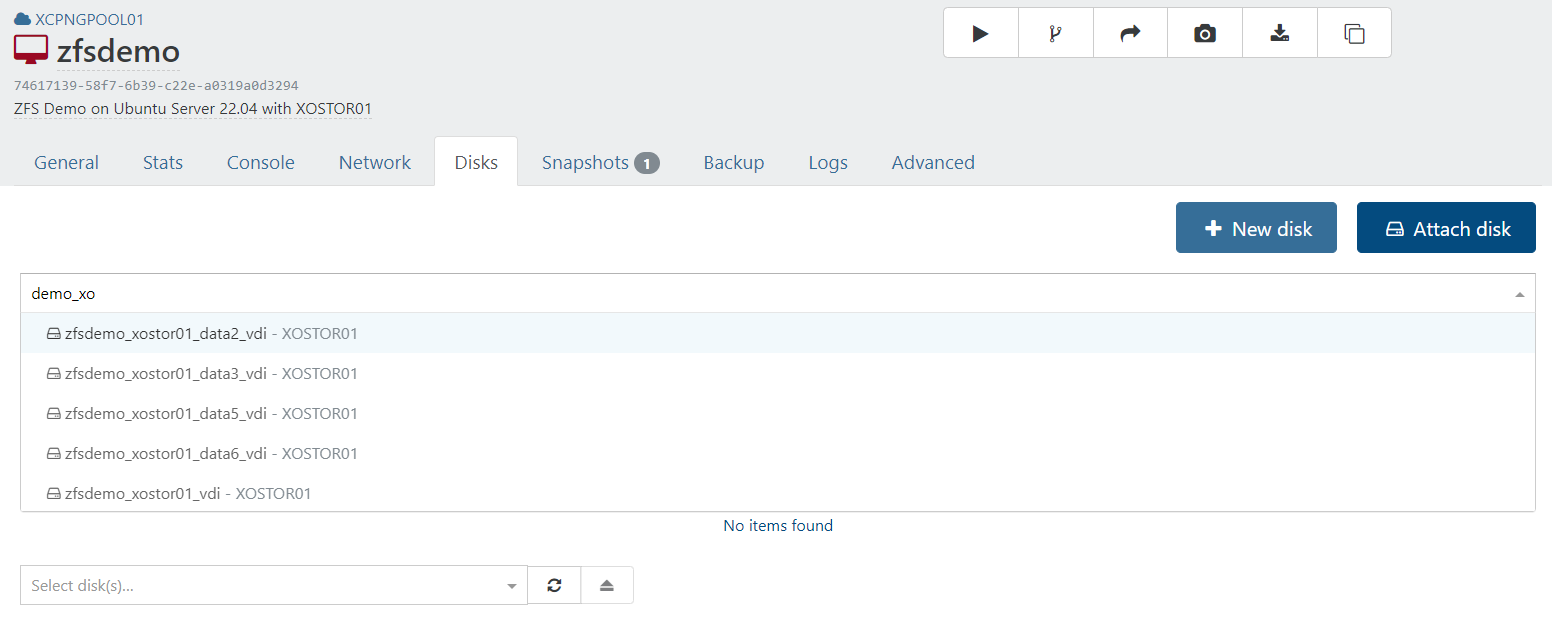

Now, using XO (from sources), I click "Revert VM to this snapshot". This errors and the VM stops:

vm.revert { "snapshot": "6032fc73-eb7f-cf64-2481-4346b7b57204" }

{ "code": "VM_REVERT_FAILED", "params": [ "OpaqueRef:1439fd0f-4e66-44c9-99af-1f8536e59378", "OpaqueRef:5ad4c51e-473e-4ab0-877d-2d0dbdb90add" ], "task": { "uuid": "4804fefd-0037-d7dd-9a7c-769230728483", "name_label": "Async.VM.revert", "name_description": "", "allowed_operations": [], "current_operations": {}, "created": "20220527T15:01:42Z", "finished": "20220527T15:01:46Z", "status": "failure", "resident_on": "OpaqueRef:a1e9a8f3-0a79-4824-b29f-d81b3246d190", "progress": 1, "type": "<none/>", "result": "", "error_info": [ "VM_REVERT_FAILED", "OpaqueRef:1439fd0f-4e66-44c9-99af-1f8536e59378", "OpaqueRef:5ad4c51e-473e-4ab0-877d-2d0dbdb90add" ], "other_config": {}, "subtask_of": "OpaqueRef:NULL", "subtasks": [], "backtrace": "(((process xapi)(filename ocaml/xapi/xapi_vm_snapshot.ml)(line 492))((process xapi)(filename lib/xapi-stdext-pervasives/pervasiveext.ml)(line 24))((process xapi)(filename lib/xapi-stdext-pervasives/pervasiveext.ml)(line 35))((process xapi)(filename ocaml/xapi/message_forwarding.ml)(line 131))((process xapi)(filename lib/xapi-stdext-pervasives/pervasiveext.ml)(line 24))((process xapi)(filename lib/xapi-stdext-pervasives/pervasiveext.ml)(line 35))((process xapi)(filename lib/xapi-stdext-pervasives/pervasiveext.ml)(line 24))((process xapi)(filename ocaml/xapi/rbac.ml)(line 231))((process xapi)(filename ocaml/xapi/server_helpers.ml)(line 103)))" }, "message": "VM_REVERT_FAILED(OpaqueRef:1439fd0f-4e66-44c9-99af-1f8536e59378, OpaqueRef:5ad4c51e-473e-4ab0-877d-2d0dbdb90add)", "name": "XapiError", "stack": "XapiError: VM_REVERT_FAILED(OpaqueRef:1439fd0f-4e66-44c9-99af-1f8536e59378, OpaqueRef:5ad4c51e-473e-4ab0-877d-2d0dbdb90add) at Function.wrap (/opt/xo/xo-builds/xen-orchestra-202204291839/packages/xen-api/src/_XapiError.js:16:12) at _default (/opt/xo/xo-builds/xen-orchestra-202204291839/packages/xen-api/src/_getTaskResult.js:11:29) at Xapi._addRecordToCache (/opt/xo/xo-builds/xen-orchestra-202204291839/packages/xen-api/src/index.js:949:24) at forEach (/opt/xo/xo-builds/xen-orchestra-202204291839/packages/xen-api/src/index.js:983:14) at Array.forEach (<anonymous>) at Xapi._processEvents (/opt/xo/xo-builds/xen-orchestra-202204291839/packages/xen-api/src/index.js:973:12) at Xapi._watchEvents (/opt/xo/xo-builds/xen-orchestra-202204291839/packages/xen-api/src/index.js:1139:14)" }

Now, viewing the VM in XO, the disks tab shows no attached disks: the disk tab is blank.

But linstor appears to still have the disks and the snapshot disks too.

┊ XCPNG01 ┊ xcp-volume-142cb89f-2850-4ac8-a47c-10bb2cfc4692 ┊ xcp-sr-linstor_group ┊ 0 ┊ 1010 ┊ /dev/drbd1010 ┊ 2.02 GiB ┊ Unused ┊ UpToDate ┊
┊ XCPNG02 ┊ xcp-volume-142cb89f-2850-4ac8-a47c-10bb2cfc4692 ┊ xcp-sr-linstor_group ┊ 0 ┊ 1010 ┊ /dev/drbd1010 ┊ 2.02 GiB ┊ Unused ┊ UpToDate ┊
┊ XCPNG03 ┊ xcp-volume-142cb89f-2850-4ac8-a47c-10bb2cfc4692 ┊ DfltDisklessStorPool ┊ 0 ┊ 1010 ┊ /dev/drbd1010 ┊ ┊ Unused ┊ Diskless ┊
┊ XCPNG04 ┊ xcp-volume-142cb89f-2850-4ac8-a47c-10bb2cfc4692 ┊ xcp-sr-linstor_group ┊ 0 ┊ 1010 ┊ /dev/drbd1010 ┊ 2.02 GiB ┊ Unused ┊ UpToDate ┊
┊ XCPNG01 ┊ xcp-volume-18fa145a-d36b-44bd-b1b5-af1e9424ea00 ┊ xcp-sr-linstor_group ┊ 0 ┊ 1018 ┊ /dev/drbd1018 ┊ 2.02 GiB ┊ Unused ┊ UpToDate ┊
┊ XCPNG02 ┊ xcp-volume-18fa145a-d36b-44bd-b1b5-af1e9424ea00 ┊ xcp-sr-linstor_group ┊ 0 ┊ 1018 ┊ /dev/drbd1018 ┊ 2.02 GiB ┊ Unused ┊ UpToDate ┊
┊ XCPNG03 ┊ xcp-volume-18fa145a-d36b-44bd-b1b5-af1e9424ea00 ┊ DfltDisklessStorPool ┊ 0 ┊ 1018 ┊ /dev/drbd1018 ┊ ┊ InUse ┊ Diskless ┊
┊ XCPNG04 ┊ xcp-volume-18fa145a-d36b-44bd-b1b5-af1e9424ea00 ┊ DfltDisklessStorPool ┊ 0 ┊ 1018 ┊ /dev/drbd1018 ┊ ┊ Unused ┊ Diskless ┊
┊ XCPNG05 ┊ xcp-volume-18fa145a-d36b-44bd-b1b5-af1e9424ea00 ┊ xcp-sr-linstor_group ┊ 0 ┊ 1018 ┊ /dev/drbd1018 ┊ 2.02 GiB ┊ Unused ┊ UpToDate ┊
┊ XCPNG01 ┊ xcp-volume-1a6c7272-f718-4c4d-a8b0-ca8419eab314 ┊ DfltDisklessStorPool ┊ 0 ┊ 1024 ┊ /dev/drbd1024 ┊ ┊ Unused ┊ Diskless ┊
┊ XCPNG02 ┊ xcp-volume-1a6c7272-f718-4c4d-a8b0-ca8419eab314 ┊ xcp-sr-linstor_group ┊ 0 ┊ 1024 ┊ /dev/drbd1024 ┊ 2.02 GiB ┊ Unused ┊ UpToDate ┊
┊ XCPNG03 ┊ xcp-volume-1a6c7272-f718-4c4d-a8b0-ca8419eab314 ┊ xcp-sr-linstor_group ┊ 0 ┊ 1024 ┊ /dev/drbd1024 ┊ 2.02 GiB ┊ Unused ┊ UpToDate ┊
┊ XCPNG04 ┊ xcp-volume-1a6c7272-f718-4c4d-a8b0-ca8419eab314 ┊ xcp-sr-linstor_group ┊ 0 ┊ 1024 ┊ /dev/drbd1024 ┊ 2.02 GiB ┊ Unused ┊ UpToDate ┊
┊ XCPNG05 ┊ xcp-volume-1a6c7272-f718-4c4d-a8b0-ca8419eab314 ┊ DfltDisklessStorPool ┊ 0 ┊ 1024 ┊ /dev/drbd1024 ┊ ┊ Unused ┊ Diskless ┊
┊ XCPNG01 ┊ xcp-volume-2cab6c2d-abf6-42c7-9094-d75351ed8ebb ┊ xcp-sr-linstor_group ┊ 0 ┊ 1016 ┊ /dev/drbd1016 ┊ 2.02 GiB ┊ Unused ┊ UpToDate ┊
┊ XCPNG02 ┊ xcp-volume-2cab6c2d-abf6-42c7-9094-d75351ed8ebb ┊ xcp-sr-linstor_group ┊ 0 ┊ 1016 ┊ /dev/drbd1016 ┊ 2.02 GiB ┊ Unused ┊ UpToDate ┊
┊ XCPNG03 ┊ xcp-volume-2cab6c2d-abf6-42c7-9094-d75351ed8ebb ┊ xcp-sr-linstor_group ┊ 0 ┊ 1016 ┊ /dev/drbd1016 ┊ 2.02 GiB ┊ Unused ┊ UpToDate ┊
┊ XCPNG04 ┊ xcp-volume-2cab6c2d-abf6-42c7-9094-d75351ed8ebb ┊ DfltDisklessStorPool ┊ 0 ┊ 1016 ┊ /dev/drbd1016 ┊ ┊ Unused ┊ Diskless ┊
┊ XCPNG05 ┊ xcp-volume-2cab6c2d-abf6-42c7-9094-d75351ed8ebb ┊ DfltDisklessStorPool ┊ 0 ┊ 1016 ┊ /dev/drbd1016 ┊ ┊ Unused ┊ Diskless ┊
┊ XCPNG01 ┊ xcp-volume-30bf014b-025d-4f3f-a068-f9a9bf34fab2 ┊ xcp-sr-linstor_group ┊ 0 ┊ 1013 ┊ /dev/drbd1013 ┊ 2.02 GiB ┊ Unused ┊ UpToDate ┊
┊ XCPNG02 ┊ xcp-volume-30bf014b-025d-4f3f-a068-f9a9bf34fab2 ┊ xcp-sr-linstor_group ┊ 0 ┊ 1013 ┊ /dev/drbd1013 ┊ 2.02 GiB ┊ Unused ┊ UpToDate ┊
┊ XCPNG03 ┊ xcp-volume-30bf014b-025d-4f3f-a068-f9a9bf34fab2 ┊ xcp-sr-linstor_group ┊ 0 ┊ 1013 ┊ /dev/drbd1013 ┊ 2.02 GiB ┊ Unused ┊ UpToDate ┊
┊ XCPNG01 ┊ xcp-volume-3bdb2b25-706c-4309-ab8f-df3190f57c43 ┊ DfltDisklessStorPool ┊ 0 ┊ 1021 ┊ /dev/drbd1021 ┊ ┊ Unused ┊ Diskless ┊
┊ XCPNG02 ┊ xcp-volume-3bdb2b25-706c-4309-ab8f-df3190f57c43 ┊ xcp-sr-linstor_group ┊ 0 ┊ 1021 ┊ /dev/drbd1021 ┊ 2.02 GiB ┊ Unused ┊ UpToDate ┊
┊ XCPNG03 ┊ xcp-volume-3bdb2b25-706c-4309-ab8f-df3190f57c43 ┊ xcp-sr-linstor_group ┊ 0 ┊ 1021 ┊ /dev/drbd1021 ┊ 2.02 GiB ┊ Unused ┊ UpToDate ┊
┊ XCPNG04 ┊ xcp-volume-3bdb2b25-706c-4309-ab8f-df3190f57c43 ┊ DfltDisklessStorPool ┊ 0 ┊ 1021 ┊ /dev/drbd1021 ┊ ┊ Unused ┊ Diskless ┊
┊ XCPNG05 ┊ xcp-volume-3bdb2b25-706c-4309-ab8f-df3190f57c43 ┊ xcp-sr-linstor_group ┊ 0 ┊ 1021 ┊ /dev/drbd1021 ┊ 2.02 GiB ┊ Unused ┊ UpToDate ┊
┊ XCPNG01 ┊ xcp-volume-450f65f7-7fcc-4ffd-893e-761a2f6ac366 ┊ xcp-sr-linstor_group ┊ 0 ┊ 1020 ┊ /dev/drbd1020 ┊ 2.02 GiB ┊ Unused ┊ UpToDate ┊
┊ XCPNG02 ┊ xcp-volume-450f65f7-7fcc-4ffd-893e-761a2f6ac366 ┊ DfltDisklessStorPool ┊ 0 ┊ 1020 ┊ /dev/drbd1020 ┊ ┊ Unused ┊ Diskless ┊
┊ XCPNG03 ┊ xcp-volume-450f65f7-7fcc-4ffd-893e-761a2f6ac366 ┊ DfltDisklessStorPool ┊ 0 ┊ 1020 ┊ /dev/drbd1020 ┊ ┊ Unused ┊ Diskless ┊
┊ XCPNG04 ┊ xcp-volume-450f65f7-7fcc-4ffd-893e-761a2f6ac366 ┊ xcp-sr-linstor_group ┊ 0 ┊ 1020 ┊ /dev/drbd1020 ┊ 2.02 GiB ┊ Unused ┊ UpToDate ┊
┊ XCPNG05 ┊ xcp-volume-450f65f7-7fcc-4ffd-893e-761a2f6ac366 ┊ xcp-sr-linstor_group ┊ 0 ┊ 1020 ┊ /dev/drbd1020 ┊ 2.02 GiB ┊ Unused ┊ UpToDate ┊
┊ XCPNG01 ┊ xcp-volume-466938db-11f1-4b59-8a90-ad08fa20e085 ┊ xcp-sr-linstor_group ┊ 0 ┊ 1015 ┊ /dev/drbd1015 ┊ 2.02 GiB ┊ Unused ┊ UpToDate ┊
┊ XCPNG02 ┊ xcp-volume-466938db-11f1-4b59-8a90-ad08fa20e085 ┊ xcp-sr-linstor_group ┊ 0 ┊ 1015 ┊ /dev/drbd1015 ┊ 2.02 GiB ┊ Unused ┊ UpToDate ┊
┊ XCPNG03 ┊ xcp-volume-466938db-11f1-4b59-8a90-ad08fa20e085 ┊ DfltDisklessStorPool ┊ 0 ┊ 1015 ┊ /dev/drbd1015 ┊ ┊ Unused ┊ Diskless ┊
┊ XCPNG04 ┊ xcp-volume-466938db-11f1-4b59-8a90-ad08fa20e085 ┊ xcp-sr-linstor_group ┊ 0 ┊ 1015 ┊ /dev/drbd1015 ┊ 2.02 GiB ┊ Unused ┊ UpToDate ┊
┊ XCPNG05 ┊ xcp-volume-466938db-11f1-4b59-8a90-ad08fa20e085 ┊ DfltDisklessStorPool ┊ 0 ┊ 1015 ┊ /dev/drbd1015 ┊ ┊ Unused ┊ Diskless ┊
┊ XCPNG01 ┊ xcp-volume-470dcf6f-d916-403d-8258-e012c065b8ec ┊ xcp-sr-linstor_group ┊ 0 ┊ 1009 ┊ /dev/drbd1009 ┊ 2.02 GiB ┊ Unused ┊ UpToDate ┊
┊ XCPNG02 ┊ xcp-volume-470dcf6f-d916-403d-8258-e012c065b8ec ┊ xcp-sr-linstor_group ┊ 0 ┊ 1009 ┊ /dev/drbd1009 ┊ 2.02 GiB ┊ Unused ┊ UpToDate ┊
┊ XCPNG03 ┊ xcp-volume-470dcf6f-d916-403d-8258-e012c065b8ec ┊ DfltDisklessStorPool ┊ 0 ┊ 1009 ┊ /dev/drbd1009 ┊ ┊ Unused ┊ Diskless ┊
┊ XCPNG04 ┊ xcp-volume-470dcf6f-d916-403d-8258-e012c065b8ec ┊ xcp-sr-linstor_group ┊ 0 ┊ 1009 ┊ /dev/drbd1009 ┊ 2.02 GiB ┊ Unused ┊ UpToDate ┊
┊ XCPNG01 ┊ xcp-volume-551db5b5-7772-407a-9e8c-e549db3a0e5f ┊ xcp-sr-linstor_group ┊ 0 ┊ 1008 ┊ /dev/drbd1008 ┊ 2.02 GiB ┊ Unused ┊ UpToDate ┊
┊ XCPNG02 ┊ xcp-volume-551db5b5-7772-407a-9e8c-e549db3a0e5f ┊ xcp-sr-linstor_group ┊ 0 ┊ 1008 ┊ /dev/drbd1008 ┊ 2.02 GiB ┊ Unused ┊ UpToDate ┊
┊ XCPNG03 ┊ xcp-volume-551db5b5-7772-407a-9e8c-e549db3a0e5f ┊ DfltDisklessStorPool ┊ 0 ┊ 1008 ┊ /dev/drbd1008 ┊ ┊ Unused ┊ Diskless ┊
┊ XCPNG04 ┊ xcp-volume-551db5b5-7772-407a-9e8c-e549db3a0e5f ┊ xcp-sr-linstor_group ┊ 0 ┊ 1008 ┊ /dev/drbd1008 ┊ 2.02 GiB ┊ Unused ┊ UpToDate ┊
┊ XCPNG01 ┊ xcp-volume-699871db-2319-4ddd-9a44-0514d2e7aee3 ┊ xcp-sr-linstor_group ┊ 0 ┊ 1025 ┊ /dev/drbd1025 ┊ 2.02 GiB ┊ Unused ┊ UpToDate ┊
┊ XCPNG02 ┊ xcp-volume-699871db-2319-4ddd-9a44-0514d2e7aee3 ┊ DfltDisklessStorPool ┊ 0 ┊ 1025 ┊ /dev/drbd1025 ┊ ┊ Unused ┊ Diskless ┊
┊ XCPNG03 ┊ xcp-volume-699871db-2319-4ddd-9a44-0514d2e7aee3 ┊ DfltDisklessStorPool ┊ 0 ┊ 1025 ┊ /dev/drbd1025 ┊ ┊ Unused ┊ Diskless ┊
┊ XCPNG04 ┊ xcp-volume-699871db-2319-4ddd-9a44-0514d2e7aee3 ┊ xcp-sr-linstor_group ┊ 0 ┊ 1025 ┊ /dev/drbd1025 ┊ 2.02 GiB ┊ Unused ┊ UpToDate ┊
┊ XCPNG05 ┊ xcp-volume-699871db-2319-4ddd-9a44-0514d2e7aee3 ┊ xcp-sr-linstor_group ┊ 0 ┊ 1025 ┊ /dev/drbd1025 ┊ 2.02 GiB ┊ Unused ┊ UpToDate ┊
┊ XCPNG01 ┊ xcp-volume-6c96822b-7ded-41dd-b4ff-690dc4795ee7 ┊ xcp-sr-linstor_group ┊ 0 ┊ 1023 ┊ /dev/drbd1023 ┊ 2.02 GiB ┊ Unused ┊ UpToDate ┊
┊ XCPNG02 ┊ xcp-volume-6c96822b-7ded-41dd-b4ff-690dc4795ee7 ┊ DfltDisklessStorPool ┊ 0 ┊ 1023 ┊ /dev/drbd1023 ┊ ┊ Unused ┊ Diskless ┊
┊ XCPNG03 ┊ xcp-volume-6c96822b-7ded-41dd-b4ff-690dc4795ee7 ┊ xcp-sr-linstor_group ┊ 0 ┊ 1023 ┊ /dev/drbd1023 ┊ 2.02 GiB ┊ Unused ┊ UpToDate ┊
┊ XCPNG04 ┊ xcp-volume-6c96822b-7ded-41dd-b4ff-690dc4795ee7 ┊ DfltDisklessStorPool ┊ 0 ┊ 1023 ┊ /dev/drbd1023 ┊ ┊ Unused ┊ Diskless ┊
┊ XCPNG05 ┊ xcp-volume-6c96822b-7ded-41dd-b4ff-690dc4795ee7 ┊ xcp-sr-linstor_group ┊ 0 ┊ 1023 ┊ /dev/drbd1023 ┊ 2.02 GiB ┊ Unused ┊ UpToDate ┊
┊ XCPNG01 ┊ xcp-volume-70004559-a2c4-480f-b7bc-b26dcb95bfba ┊ xcp-sr-linstor_group ┊ 0 ┊ 1027 ┊ /dev/drbd1027 ┊ 24.06 GiB ┊ Unused ┊ UpToDate ┊
┊ XCPNG02 ┊ xcp-volume-70004559-a2c4-480f-b7bc-b26dcb95bfba ┊ xcp-sr-linstor_group ┊ 0 ┊ 1027 ┊ /dev/drbd1027 ┊ 24.06 GiB ┊ Unused ┊ UpToDate ┊
┊ XCPNG03 ┊ xcp-volume-70004559-a2c4-480f-b7bc-b26dcb95bfba ┊ DfltDisklessStorPool ┊ 0 ┊ 1027 ┊ /dev/drbd1027 ┊ ┊ Unused ┊ Diskless ┊
┊ XCPNG04 ┊ xcp-volume-70004559-a2c4-480f-b7bc-b26dcb95bfba ┊ xcp-sr-linstor_group ┊ 0 ┊ 1027 ┊ /dev/drbd1027 ┊ 24.06 GiB ┊ Unused ┊ UpToDate ┊
┊ XCPNG05 ┊ xcp-volume-70004559-a2c4-480f-b7bc-b26dcb95bfba ┊ DfltDisklessStorPool ┊ 0 ┊ 1027 ┊ /dev/drbd1027 ┊ ┊ Unused ┊ Diskless ┊
┊ XCPNG01 ┊ xcp-volume-707a0158-ad31-4b4b-af2b-20d89e5717de ┊ DfltDisklessStorPool ┊ 0 ┊ 1026 ┊ /dev/drbd1026 ┊ ┊ Unused ┊ Diskless ┊
┊ XCPNG02 ┊ xcp-volume-707a0158-ad31-4b4b-af2b-20d89e5717de ┊ xcp-sr-linstor_group ┊ 0 ┊ 1026 ┊ /dev/drbd1026 ┊ 24.06 GiB ┊ Unused ┊ UpToDate ┊
┊ XCPNG03 ┊ xcp-volume-707a0158-ad31-4b4b-af2b-20d89e5717de ┊ xcp-sr-linstor_group ┊ 0 ┊ 1026 ┊ /dev/drbd1026 ┊ 24.06 GiB ┊ Unused ┊ UpToDate ┊
┊ XCPNG04 ┊ xcp-volume-707a0158-ad31-4b4b-af2b-20d89e5717de ┊ DfltDisklessStorPool ┊ 0 ┊ 1026 ┊ /dev/drbd1026 ┊ ┊ Unused ┊ Diskless ┊
┊ XCPNG05 ┊ xcp-volume-707a0158-ad31-4b4b-af2b-20d89e5717de ┊ xcp-sr-linstor_group ┊ 0 ┊ 1026 ┊ /dev/drbd1026 ┊ 24.06 GiB ┊ Unused ┊ UpToDate ┊
┊ XCPNG02 ┊ xcp-volume-7aaa7a6e-98c4-4a57-a4f1-4fea0a36b17a ┊ xcp-sr-linstor_group ┊ 0 ┊ 1011 ┊ /dev/drbd1011 ┊ 2.02 GiB ┊ Unused ┊ UpToDate ┊
┊ XCPNG03 ┊ xcp-volume-7aaa7a6e-98c4-4a57-a4f1-4fea0a36b17a ┊ xcp-sr-linstor_group ┊ 0 ┊ 1011 ┊ /dev/drbd1011 ┊ 2.02 GiB ┊ Unused ┊ UpToDate ┊
┊ XCPNG04 ┊ xcp-volume-7aaa7a6e-98c4-4a57-a4f1-4fea0a36b17a ┊ xcp-sr-linstor_group ┊ 0 ┊ 1011 ┊ /dev/drbd1011 ┊ 2.02 GiB ┊ Unused ┊ UpToDate ┊
┊ XCPNG01 ┊ xcp-volume-9320c158-489e-49e7-92b8-85c93c9e3eeb ┊ xcp-sr-linstor_group ┊ 0 ┊ 1022 ┊ /dev/drbd1022 ┊ 2.02 GiB ┊ Unused ┊ UpToDate ┊
┊ XCPNG02 ┊ xcp-volume-9320c158-489e-49e7-92b8-85c93c9e3eeb ┊ xcp-sr-linstor_group ┊ 0 ┊ 1022 ┊ /dev/drbd1022 ┊ 2.02 GiB ┊ Unused ┊ UpToDate ┊
┊ XCPNG03 ┊ xcp-volume-9320c158-489e-49e7-92b8-85c93c9e3eeb ┊ DfltDisklessStorPool ┊ 0 ┊ 1022 ┊ /dev/drbd1022 ┊ ┊ Unused ┊ Diskless ┊
┊ XCPNG04 ┊ xcp-volume-9320c158-489e-49e7-92b8-85c93c9e3eeb ┊ xcp-sr-linstor_group ┊ 0 ┊ 1022 ┊ /dev/drbd1022 ┊ 2.02 GiB ┊ Unused ┊ UpToDate ┊
┊ XCPNG05 ┊ xcp-volume-9320c158-489e-49e7-92b8-85c93c9e3eeb ┊ DfltDisklessStorPool ┊ 0 ┊ 1022 ┊ /dev/drbd1022 ┊ ┊ Unused ┊ Diskless ┊
┊ XCPNG02 ┊ xcp-volume-b341848b-01d1-4019-a62f-85c6108a53e3 ┊ xcp-sr-linstor_group ┊ 0 ┊ 1006 ┊ /dev/drbd1006 ┊ 24.06 GiB ┊ Unused ┊ UpToDate ┊
┊ XCPNG03 ┊ xcp-volume-b341848b-01d1-4019-a62f-85c6108a53e3 ┊ xcp-sr-linstor_group ┊ 0 ┊ 1006 ┊ /dev/drbd1006 ┊ 24.06 GiB ┊ Unused ┊ UpToDate ┊
┊ XCPNG05 ┊ xcp-volume-b341848b-01d1-4019-a62f-85c6108a53e3 ┊ xcp-sr-linstor_group ┊ 0 ┊ 1006 ┊ /dev/drbd1006 ┊ 24.06 GiB ┊ Unused ┊ UpToDate ┊
┊ XCPNG01 ┊ xcp-volume-bccefe12-9ff5-4317-b05c-515cb44a5710 ┊ DfltDisklessStorPool ┊ 0 ┊ 1014 ┊ /dev/drbd1014 ┊ ┊ Unused ┊ Diskless ┊
┊ XCPNG02 ┊ xcp-volume-bccefe12-9ff5-4317-b05c-515cb44a5710 ┊ xcp-sr-linstor_group ┊ 0 ┊ 1014 ┊ /dev/drbd1014 ┊ 2.02 GiB ┊ Unused ┊ UpToDate ┊
┊ XCPNG03 ┊ xcp-volume-bccefe12-9ff5-4317-b05c-515cb44a5710 ┊ xcp-sr-linstor_group ┊ 0 ┊ 1014 ┊ /dev/drbd1014 ┊ 2.02 GiB ┊ Unused ┊ UpToDate ┊
┊ XCPNG04 ┊ xcp-volume-bccefe12-9ff5-4317-b05c-515cb44a5710 ┊ xcp-sr-linstor_group ┊ 0 ┊ 1014 ┊ /dev/drbd1014 ┊ 2.02 GiB ┊ Unused ┊ UpToDate ┊
┊ XCPNG05 ┊ xcp-volume-bccefe12-9ff5-4317-b05c-515cb44a5710 ┊ DfltDisklessStorPool ┊ 0 ┊ 1014 ┊ /dev/drbd1014 ┊ ┊ Unused ┊ Diskless ┊
┊ XCPNG01 ┊ xcp-volume-cdc051ae-bc39-4012-9ce0-6e4f855a5063 ┊ xcp-sr-linstor_group ┊ 0 ┊ 1012 ┊ /dev/drbd1012 ┊ 2.02 GiB ┊ Unused ┊ UpToDate ┊
┊ XCPNG02 ┊ xcp-volume-cdc051ae-bc39-4012-9ce0-6e4f855a5063 ┊ xcp-sr-linstor_group ┊ 0 ┊ 1012 ┊ /dev/drbd1012 ┊ 2.02 GiB ┊ Unused ┊ UpToDate ┊
┊ XCPNG03 ┊ xcp-volume-cdc051ae-bc39-4012-9ce0-6e4f855a5063 ┊ DfltDisklessStorPool ┊ 0 ┊ 1012 ┊ /dev/drbd1012 ┊ ┊ Unused ┊ Diskless ┊
┊ XCPNG04 ┊ xcp-volume-cdc051ae-bc39-4012-9ce0-6e4f855a5063 ┊ xcp-sr-linstor_group ┊ 0 ┊ 1012 ┊ /dev/drbd1012 ┊ 2.02 GiB ┊ Unused ┊ UpToDate ┊
┊ XCPNG01 ┊ xcp-volume-d5a744ec-d1a1-4116-a576-38608b9dd790 ┊ DfltDisklessStorPool ┊ 0 ┊ 1019 ┊ /dev/drbd1019 ┊ ┊ Unused ┊ Diskless ┊
┊ XCPNG02 ┊ xcp-volume-d5a744ec-d1a1-4116-a576-38608b9dd790 ┊ xcp-sr-linstor_group ┊ 0 ┊ 1019 ┊ /dev/drbd1019 ┊ 2.02 GiB ┊ Unused ┊ UpToDate ┊
┊ XCPNG03 ┊ xcp-volume-d5a744ec-d1a1-4116-a576-38608b9dd790 ┊ xcp-sr-linstor_group ┊ 0 ┊ 1019 ┊ /dev/drbd1019 ┊ 2.02 GiB ┊ Unused ┊ UpToDate ┊
┊ XCPNG04 ┊ xcp-volume-d5a744ec-d1a1-4116-a576-38608b9dd790 ┊ xcp-sr-linstor_group ┊ 0 ┊ 1019 ┊ /dev/drbd1019 ┊ 2.02 GiB ┊ Unused ┊ UpToDate ┊
┊ XCPNG05 ┊ xcp-volume-d5a744ec-d1a1-4116-a576-38608b9dd790 ┊ DfltDisklessStorPool ┊ 0 ┊ 1019 ┊ /dev/drbd1019 ┊ ┊ Unused ┊ Diskless ┊
┊ XCPNG01 ┊ xcp-volume-f9cf9143-829d-4246-9051-9102f2c4709c ┊ DfltDisklessStorPool ┊ 0 ┊ 1017 ┊ /dev/drbd1017 ┊ ┊ Unused ┊ Diskless ┊
┊ XCPNG02 ┊ xcp-volume-f9cf9143-829d-4246-9051-9102f2c4709c ┊ xcp-sr-linstor_group ┊ 0 ┊ 1017 ┊ /dev/drbd1017 ┊ 2.02 GiB ┊ Unused ┊ UpToDate ┊
┊ XCPNG03 ┊ xcp-volume-f9cf9143-829d-4246-9051-9102f2c4709c ┊ xcp-sr-linstor_group ┊ 0 ┊ 1017 ┊ /dev/drbd1017 ┊ 2.02 GiB ┊ Unused ┊ UpToDate ┊
┊ XCPNG04 ┊ xcp-volume-f9cf9143-829d-4246-9051-9102f2c4709c ┊ xcp-sr-linstor_group ┊ 0 ┊ 1017 ┊ /dev/drbd1017 ┊ 2.02 GiB ┊ Unused ┊ UpToDate ┊
┊ XCPNG05 ┊ xcp-volume-f9cf9143-829d-4246-9051-9102f2c4709c ┊ DfltDisklessStorPool ┊ 0 ┊ 1017 ┊ /dev/drbd1017 ┊ ┊ Unused ┊ Diskless ┊

From the VM disks tab, if I try to Attach the disks, two of the disks created on XOSTOR are missing (data1 and data4).
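A listing like this is easier to compare against what XO shows once it is grouped per volume. Below is a minimal Python sketch for doing that. It assumes the column order visible in the rows above (node, resource, storage pool, volume number, minor number, device, allocated size, in-use flag, state); the post does not show the header or the exact command that produced the output, and `summarize_volumes` is a hypothetical helper, not part of LINSTOR or the sm driver.

```python
from collections import defaultdict
from typing import Dict


def summarize_volumes(listing: str) -> Dict[str, dict]:
    """Group '┊'-separated LINSTOR volume rows by resource name.

    Assumed column order (as in the rows above): node, resource, storage pool,
    volume number, minor number, device, allocated size, in-use flag, state.
    """
    summary = defaultdict(lambda: {"diskful": [], "diskless": [], "in_use": []})
    for line in listing.splitlines():
        cells = [c.strip() for c in line.strip().strip("┊").split("┊")]
        # Skip blank lines, headers, separators and anything that is not a volume row.
        if len(cells) != 9 or not cells[1].startswith("xcp-volume-"):
            continue
        node, resource, _pool, _vol, _minor, _dev, _alloc, in_use, state = cells
        entry = summary[resource]
        if state == "Diskless":
            entry["diskless"].append(node)
        else:
            # Keep the DRBD state so non-UpToDate replicas stand out.
            entry["diskful"].append((node, state))
        if in_use == "InUse":
            entry["in_use"].append(node)
    return dict(summary)
```

Feeding it the rows above gives, for each `xcp-volume-...`, which hosts hold an UpToDate replica and which host (if any) currently has it open, which can then be matched against the VDIs that XO still lists or no longer lists.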

Finally if I go to storage and bring up the XOSTOR storage and then press "Rescan all disks" I get this error:

sr.scan { "id": "cf896912-cd71-d2b2-488a-5792b7147c87" }

{ "code": "SR_BACKEND_FAILURE_46", "params": [ "", "The VDI is not available [opterr=Could not load 735fc2d7-f1f0-4cc6-9d35-42a049d8ec6c because: ['XENAPI_PLUGIN_FAILURE', 'getVHDInfo', 'CommandException', 'No such file or directory']]", "" ], "task": { "uuid": "4dcac885-dfaa-784a-eb2d-02335efde0fb", "name_label": "Async.SR.scan", "name_description": "", "allowed_operations": [], "current_operations": {}, "created": "20220527T16:27:36Z", "finished": "20220527T16:27:50Z", "status": "failure", "resident_on": "OpaqueRef:a1e9a8f3-0a79-4824-b29f-d81b3246d190", "progress": 1, "type": "<none/>", "result": "", "error_info": [ "SR_BACKEND_FAILURE_46", "", "The VDI is not available [opterr=Could not load 735fc2d7-f1f0-4cc6-9d35-42a049d8ec6c because: ['XENAPI_PLUGIN_FAILURE', 'getVHDInfo', 'CommandException', 'No such file or directory']]", "" ], "other_config": {}, "subtask_of": "OpaqueRef:NULL", "subtasks": [], "backtrace": "(((process xapi)(filename lib/backtrace.ml)(line 210))((process xapi)(filename ocaml/xapi/storage_access.ml)(line 32))((process xapi)(filename lib/xapi-stdext-pervasives/pervasiveext.ml)(line 24))((process xapi)(filename lib/xapi-stdext-pervasives/pervasiveext.ml)(line 35))((process xapi)(filename ocaml/xapi/message_forwarding.ml)(line 128))((process xapi)(filename lib/xapi-stdext-pervasives/pervasiveext.ml)(line 24))((process xapi)(filename ocaml/xapi/rbac.ml)(line 231))((process xapi)(filename ocaml/xapi/server_helpers.ml)(line 103)))" }, "message": "SR_BACKEND_FAILURE_46(, The VDI is not available [opterr=Could not load 735fc2d7-f1f0-4cc6-9d35-42a049d8ec6c because: ['XENAPI_PLUGIN_FAILURE', 'getVHDInfo', 'CommandException', 'No such file or directory']], )", "name": "XapiError", "stack": "XapiError: SR_BACKEND_FAILURE_46(, The VDI is not available [opterr=Could not load 735fc2d7-f1f0-4cc6-9d35-42a049d8ec6c because: ['XENAPI_PLUGIN_FAILURE', 'getVHDInfo', 'CommandException', 'No such file or directory']], ) at Function.wrap (/opt/xo/xo-builds/xen-orchestra-202204291839/packages/xen-api/src/_XapiError.js:16:12) at _default (/opt/xo/xo-builds/xen-orchestra-202204291839/packages/xen-api/src/_getTaskResult.js:11:29) at Xapi._addRecordToCache (/opt/xo/xo-builds/xen-orchestra-202204291839/packages/xen-api/src/index.js:949:24) at forEach (/opt/xo/xo-builds/xen-orchestra-202204291839/packages/xen-api/src/index.js:983:14) at Array.forEach (<anonymous>) at Xapi._processEvents (/opt/xo/xo-builds/xen-orchestra-202204291839/packages/xen-api/src/index.js:973:12) at Xapi._watchEvents (/opt/xo/xo-builds/xen-orchestra-202204291839/packages/xen-api/src/index.js:1139:14)" }

-

@ronan-a said in XOSTOR hyperconvergence preview:

Okay so it's probably not related to the driver itself, I will take a look to the logs after reception.

Did you get a chance to look at the logs I sent?

-

@geoffbland So, I didn't notice useful info outside of:

FIXME drbd_a_xcp-volu[24302] op clear, bitmap locked for 'set_n_write sync_handshake' by drbd_r_xcp-volu[24231]
...
FIXME drbd_a_xcp-volu[24328] op clear, bitmap locked for 'demote' by drbd_w_xcp-volu[24188]

Like I said in my e-mail, maybe there are more details in another log file. I hope.

-

@ronan-a said in XOSTOR hyperconvergence preview:

Like I said in my e-mail, maybe there are more details in another log file. I hope.

In the end I realised I was trying to "use" XOSTOR whilst testing rather than properly test it. So I decided to rip it all down and retest it from scratch, this time properly recording each step so any issues can be replicated. I will let you know how this goes.

-

@ronan-a Could you please take a look at this issue I raised elsewhere on the forums.

I am currently unable to create new VMs; I get a `No such Tapdisk` error. Checking down the stack trace, it seems to be coming from a `get()` call in `/opt/xensource/sm/blktap2.py`, and this code seems to have been changed in a XOSTOR release from 24th May.

-

@geoffbland Hi, I was away last week, I will take a look.

-

This is a supercool and amazing thing. Coming from Proxmox with Ceph I really feel this was a missing piece. I want to migrate my whole homelab to this!

I've been playing around with it a fair bit, and it works well, but when it came to enabling HA I ran into trouble. Is XOSTOR not a valid shared storage target to enable HA on?

Having multiple shared storages (NFS/CIFS etc) in production is a given to put backups and whatnot on, but I thought it was weird that I couldn't use XOSTOR storage to enable HA.

-

@yrrips said in XOSTOR hyperconvergence preview:

when it came to enabling HA I ran into trouble. Is XOSTOR not a valid shared storage target to enable HA on?

I'm also really hoping that XOSTOR works well, and I also feel like this is something XCP-NG really needs. I've tried other distributed storage solutions, notably GlusterFS, but never found anything that really works 100% when outages occur.

Note I plan to use XOSTOR just for VM disks and any data they use - not for the HA share and not for backups. My logic is that:

- backups should not be anywhere near XCP-NG and should be isolated in software and hardware, and hopefully location-wise.

- the HA share needs to survive an issue where XCP-NG HA fails; if XCP-NG quorum is not working then XOSTOR (Linstor) quorum may be affected in a similar way, so I keep my HA share on a reliable NFS share. Note that in testing I found that if the HA share is unavailable for a while, XCP-NG keeps running OK (just don't make any configuration changes until the HA share is back).

-

@yrrips I fixed several problems with HA and the linstor driver. I don't know what your sm version is, but I updated it a few weeks ago (current: `sm-2.30.6-1.2.0.linstor.1.xcpng8.2.x86_64`). Could you give me more details?

-

I have been waiting for this for some time, after XOSAN didn't really work for me, and neither did OVH's SAN offering.

Is there a documentation page to look at rather than going through the thread?

In the absence of that, is there a minimum recommended host count?

Would love to give this a try, but it would mean playing with production VMs!

-

Hello @markhewitt1978!

- First post is a complete guide

- 3 hosts is fine, no less. No problem with more.
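A quick way to sanity-check a planned layout before running `xe sr-create` is sketched below. `check_layout` is a hypothetical helper, not part of XOSTOR or the sm driver. The three-host minimum comes from the reply above; the rule that `redundancy` cannot exceed the number of hosts is an assumption (each replica needs its own node), not something stated in this thread.

```python
from typing import List


def check_layout(hosts: List[str], redundancy: int) -> List[str]:
    """Return problems found in a proposed XOSTOR layout (empty list means none).

    Assumed rules for this sketch:
      * at least 3 distinct hosts (the minimum given in the reply above);
      * 1 <= redundancy <= number of hosts, since each replica needs its own node.
    """
    problems = []
    distinct = set(hosts)
    if len(distinct) != len(hosts):
        problems.append("duplicate host names")
    if len(distinct) < 3:
        problems.append(f"only {len(distinct)} host(s); at least 3 are needed")
    if not 1 <= redundancy <= len(distinct):
        problems.append(f"redundancy {redundancy} must be between 1 and {len(distinct)}")
    return problems
```

For example, three hosts with `redundancy=2` passes, while two hosts, or `redundancy=4` on three hosts, are reported.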

We are still investigating a bug we discovered recently, so I wouldn't play in production right now (unless you are very confident and you have a lot of backups).


-

Is it possible to use a separate network for the XOSTOR/Linstor disk replication from the "main" network used for XCP-ng servers?

If so, when the SR is created with this command:

xe sr-create type=linstor name-label=XOSTOR host-uuid=bc3cd3af-3f09-48cf-ae55-515ba21930f5 device-config:hosts=host-a,host-b,host-c,host-d device-config:group-name=linstor_group/thin_device device-config:redundancy=4 shared=true device-config:provisioning=thin

do the `device-config:hosts` need to be XCP-ng hosts, or can the IP addresses of the "data-replication" network be provided here?

For example, my XCP-NG servers have dual NICs. I could use the second NIC on a private network/switch, with a different subnet from the "main" hosts, and use it solely for XOSTOR/Linstor disk replication. Is this possible?

-

Doing some more testing on XOSTOR and starting from scratch again: a brand-new XCP-ng installation on a new 3-server pool, each server with a blank 4TB disk.

All servers have XCP-ng patched up to date.

Then I installed XOSTOR onto each of these servers. Linstor installed OK and I have the linstor group on each:

[19:22 XCPNG30 ~]# vgs
VG #PV #LV #SN Attr VSize VFree
VG_XenStorage-a776b6b1-9a96-e179-ea12-f2419ae512b6 1 1 0 wz--n- <405.62g 405.61g
linstor_group 1 0 0 wz--n- <3.64t <3.64t
[19:22 XCPNG30 ~]# rpm -qa | grep -E "^(sm|xha)-.*linstor.*"
xha-10.1.0-2.2.0.linstor.2.xcpng8.2.x86_64
sm-2.30.7-1.3.0.linstor.1.xcpng8.2.x86_64
sm-rawhba-2.30.7-1.3.0.linstor.1.xcpng8.2.x86_64

[19:21 XCPNG31 ~]# vgs
VG #PV #LV #SN Attr VSize VFree
VG_XenStorage-f75785ef-df30-b54c-2af4-84d19c966453 1 1 0 wz--n- <405.62g 405.61g
linstor_group 1 0 0 wz--n- <3.64t <3.64t
[19:21 XCPNG31 ~]# rpm -qa | grep -E "^(sm|xha)-.*linstor.*"
xha-10.1.0-2.2.0.linstor.2.xcpng8.2.x86_64
sm-2.30.7-1.3.0.linstor.1.xcpng8.2.x86_64
sm-rawhba-2.30.7-1.3.0.linstor.1.xcpng8.2.x86_64

[19:23 XCPNG32 ~]# vgs
VG #PV #LV #SN Attr VSize VFree
VG_XenStorage-abaf8356-fc58-9124-a23b-c29e7e67c983 1 1 0 wz--n- <405.62g 405.61g
linstor_group 1 0 0 wz--n- <3.64t <3.64t
[19:23 XCPNG32 ~]# rpm -qa | grep -E "^(sm|xha)-.*linstor.*"
xha-10.1.0-2.2.0.linstor.2.xcpng8.2.x86_64
sm-2.30.7-1.3.0.linstor.1.xcpng8.2.x86_64
sm-rawhba-2.30.7-1.3.0.linstor.1.xcpng8.2.x86_64

[19:26 XCPNG31 ~]# xe host-list
uuid ( RO) : 7c3f2fae-0456-4155-a9ad-43790fcb4155
name-label ( RW): XCPNG32
name-description ( RW): Default install

uuid ( RO) : 2e48b46a-c420-4957-9233-3e029ea39305
name-label ( RW): XCPNG30
name-description ( RW): Default install

uuid ( RO) : 7aaaf4a5-0e43-442e-a9b1-38620c87fd69
name-label ( RW): XCPNG31
name-description ( RW): Default install

But I am not able to create the SR.

xe sr-create type=linstor name-label=XOSTOR01 host-uuid=7aaaf4a5-0e43-442e-a9b1-38620c87fd69 device-config:hosts=xcpng30,xcpng31,xcpng32 device-config:group-name=linstor_group device-config:redundancy=2 shared=true device-config:provisioning=thick

This gives the following error:

Error code: SR_BACKEND_FAILURE_5006
Error parameters: , LINSTOR SR creation error [opterr=Not enough online hosts],

Here's the error in the SMLog:

Jul 15 19:29:22 XCPNG31 SM: [9747] sr_create {'sr_uuid': '14aa2b8b-430f-34e5-fb74-c37667cb18ec', 'subtask_of': 'DummyRef:|d39839f1-ee3a-4bfe-8a41-7a077f4f2640|SR.create', 'args': ['0'], 'host_ref': 'OpaqueRef:196f738d-24fa-4598-8e96-4a13390abc87', 'session_ref': 'OpaqueRef:e806b347-1e5f-4644-842f-26a7b06b2561', 'device_config': {'group-name': 'linstor_group', 'redundancy': '2', 'hosts': 'xcpng30,xcpng31,xcpng32', 'SRmaster': 'true', 'provisioning': 'thick'}, 'command': 'sr_create', 'sr_ref': 'OpaqueRef:7ded7feb-729f-47c3-9893-1b62db0b7e17'}
Jul 15 19:29:22 XCPNG31 SM: [9747] LinstorSR.create for 14aa2b8b-430f-34e5-fb74-c37667cb18ec
Jul 15 19:29:22 XCPNG31 SM: [9747] Raising exception [5006, LINSTOR SR creation error [opterr=Not enough online hosts]]
Jul 15 19:29:22 XCPNG31 SM: [9747] lock: released /var/lock/sm/14aa2b8b-430f-34e5-fb74-c37667cb18ec/sr
Jul 15 19:29:22 XCPNG31 SM: [9747] ***** generic exception: sr_create: EXCEPTION <class 'SR.SROSError'>, LINSTOR SR creation error [opterr=Not enough online hosts]
Jul 15 19:29:22 XCPNG31 SM: [9747] File "/opt/xensource/sm/SRCommand.py", line 110, in run
Jul 15 19:29:22 XCPNG31 SM: [9747] return self._run_locked(sr)
Jul 15 19:29:22 XCPNG31 SM: [9747] File "/opt/xensource/sm/SRCommand.py", line 159, in _run_locked
Jul 15 19:29:22 XCPNG31 SM: [9747] rv = self._run(sr, target)
Jul 15 19:29:22 XCPNG31 SM: [9747] File "/opt/xensource/sm/SRCommand.py", line 323, in _run
Jul 15 19:29:22 XCPNG31 SM: [9747] return sr.create(self.params['sr_uuid'], long(self.params['args'][0]))
Jul 15 19:29:22 XCPNG31 SM: [9747] File "/opt/xensource/sm/LinstorSR", line 612, in wrap
Jul 15 19:29:22 XCPNG31 SM: [9747] return load(self, *args, **kwargs)
Jul 15 19:29:22 XCPNG31 SM: [9747] File "/opt/xensource/sm/LinstorSR", line 597, in load
Jul 15 19:29:22 XCPNG31 SM: [9747] return wrapped_method(self, *args, **kwargs)
Jul 15 19:29:22 XCPNG31 SM: [9747] File "/opt/xensource/sm/LinstorSR", line 443, in wrapped_method
Jul 15 19:29:22 XCPNG31 SM: [9747] return method(self, *args, **kwargs)
Jul 15 19:29:22 XCPNG31 SM: [9747] File "/opt/xensource/sm/LinstorSR", line 688, in create
Jul 15 19:29:22 XCPNG31 SM: [9747] opterr='Not enough online hosts'
Jul 15 19:29:22 XCPNG31 SM: [9747]
Jul 15 19:29:22 XCPNG31 SM: [9747] ***** LINSTOR resources on XCP-ng: EXCEPTION <class 'SR.SROSError'>, LINSTOR SR creation error [opterr=Not enough online hosts]
Jul 15 19:29:22 XCPNG31 SM: [9747] File "/opt/xensource/sm/SRCommand.py", line 378, in run
Jul 15 19:29:22 XCPNG31 SM: [9747] ret = cmd.run(sr)
Jul 15 19:29:22 XCPNG31 SM: [9747] File "/opt/xensource/sm/SRCommand.py", line 110, in run
Jul 15 19:29:22 XCPNG31 SM: [9747] return self._run_locked(sr)
Jul 15 19:29:22 XCPNG31 SM: [9747] File "/opt/xensource/sm/SRCommand.py", line 159, in _run_locked
Jul 15 19:29:22 XCPNG31 SM: [9747] rv = self._run(sr, target)
Jul 15 19:29:22 XCPNG31 SM: [9747] File "/opt/xensource/sm/SRCommand.py", line 323, in _run
Jul 15 19:29:22 XCPNG31 SM: [9747] return sr.create(self.params['sr_uuid'], long(self.params['args'][0]))
Jul 15 19:29:22 XCPNG31 SM: [9747] File "/opt/xensource/sm/LinstorSR", line 612, in wrap
Jul 15 19:29:22 XCPNG31 SM: [9747] return load(self, *args, **kwargs)
Jul 15 19:29:22 XCPNG31 SM: [9747] File "/opt/xensource/sm/LinstorSR", line 597, in load
Jul 15 19:29:22 XCPNG31 SM: [9747] return wrapped_method(self, *args, **kwargs)
Jul 15 19:29:22 XCPNG31 SM: [9747] File "/opt/xensource/sm/LinstorSR", line 443, in wrapped_method
Jul 15 19:29:22 XCPNG31 SM: [9747] return method(self, *args, **kwargs)
Jul 15 19:29:22 XCPNG31 SM: [9747] File "/opt/xensource/sm/LinstorSR", line 688, in create
Jul 15 19:29:22 XCPNG31 SM: [9747] opterr='Not enough online hosts'

I have found the issue: the `device-config:hosts` list is case-sensitive. If the hosts are given in lower case, the above error occurs; specifying the hosts in upper case works. Also, using a fully-qualified name for the host fails, regardless of the case used.
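One way to avoid this pitfall is to map whatever names you type onto the exact XAPI name-labels before building the `device-config:hosts` value. The sketch below is a hypothetical Python helper (`build_hosts_param` is not part of the sm driver). It assumes, as observed in this post, that the driver matches names case-sensitively and rejects fully-qualified names.

```python
from typing import Dict, Iterable, List


def build_hosts_param(requested: Iterable[str], xapi_name_labels: Iterable[str]) -> str:
    """Return a device-config:hosts value that uses the exact XAPI name-labels.

    Assumption (from the report above): the LINSTOR driver matches host names
    case-sensitively and rejects fully-qualified names, so each requested name
    is mapped back to the name-label with its original case.
    """
    by_lower: Dict[str, str] = {label.lower(): label for label in xapi_name_labels}
    hosts: List[str] = []
    for name in requested:
        name = name.strip()
        if "." in name:
            raise ValueError(f"{name!r} looks like an FQDN; use the XAPI name-label instead")
        label = by_lower.get(name.lower())
        if label is None:
            raise ValueError(f"{name!r} does not match any XAPI host name-label")
        hosts.append(label)
    return ",".join(hosts)
```

With the name-labels from the `xe host-list` output above (XCPNG30, XCPNG31, XCPNG32), `build_hosts_param(["xcpng30", "xcpng31", "xcpng32"], ...)` yields `XCPNG30,XCPNG31,XCPNG32`. On a host, `xe host-list params=name-label --minimal` should print the name-labels as a comma-separated list to feed into it.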

-

Pinging @ronan-a

-

Do the device-config:hosts need to be XCP-ng hosts - or can IP address of the "data-replication" network be provided here.

This param must use the names of the XAPI host objects. The names are reused in the LINSTOR configuration by the smapi driver. I will try to remove this param later to simplify the `xe sr-create` command.

For example, my XCP-NG servers have dual NICs, I could use the second NIC on a private network/switch with a different subnet to the "main" hosts and use this solely for XOSTOR/Linstor disk replication. Is this possible?

Yes, you can. Please take a look at: https://linbit.com/drbd-user-guide/linstor-guide-1_0-en/#s-managing_network_interface_cards
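As a rough illustration of the procedure in the linked guide, here is a hypothetical Python helper that only builds, and does not run, the LINSTOR client commands: one `node interface create` per node for its replication-network address, then a `PrefNic` property on that node's storage pool. The helper, the interface name `repl` and all IP addresses are made up for this example. The pool name `xcp-sr-linstor_group` is the diskful pool that appeared in an earlier listing in this thread; confirm yours with `storage-pool list` before using it.

```python
import shlex
from typing import Dict, List


def prefnic_commands(controllers: str, pool: str, nic_name: str,
                     replication_ips: Dict[str, str]) -> List[str]:
    """Build, but do not run, LINSTOR client commands that make each node
    prefer a dedicated replication NIC for the given storage pool.

    replication_ips maps each LINSTOR node name (the XAPI host name-label)
    to that node's address on the private replication network.
    """
    base = ["linstor", f"--controllers={controllers}"]
    commands: List[List[str]] = []
    for node, ip in sorted(replication_ips.items()):
        # Register the replication-network address as a named interface on the node.
        commands.append(base + ["node", "interface", "create", node, nic_name, ip])
        # Ask LINSTOR to prefer that interface for this node's storage pool.
        commands.append(base + ["storage-pool", "set-property", node, pool, "PrefNic", nic_name])
    # Quote each argument so the printed lines can be pasted into a shell.
    return [" ".join(shlex.quote(arg) for arg in cmd) for cmd in commands]


if __name__ == "__main__":
    # Hypothetical values: controller IPs, NIC name and replication subnet are made up.
    for line in prefnic_commands("192.168.1.30,192.168.1.31,192.168.1.32",
                                 "xcp-sr-linstor_group", "repl",
                                 {"XCPNG30": "10.10.10.30", "XCPNG31": "10.10.10.31",
                                  "XCPNG32": "10.10.10.32"}):
        print(line)
```

Printing rather than executing keeps the sketch safe to try: review the generated lines, then run them on a host that can reach the LINSTOR controller.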

To get the storage pool name, execute this command in your pool:

linstor --controllers=<comma-separated-list-of-ips> storage-pool list

-

@ronan-a said in XOSTOR hyperconvergence preview:

Yes, you can. Please take a look at: https://linbit.com/drbd-user-guide/linstor-guide-1_0-en/#s-managing_network_interface_cards

To get the storage pool name, execute this command in your pool:

OK, so to set up this XOSTOR SR I would first run

xe sr-create type=linstor name-label=XOSTOR01 host-uuid=xxx device-config:hosts=<XCPNG Host Names> ...etc...

to create the Linstor storage-pool. Then run

linstor storage-pool list

to get the name of the pool. Then, on each node listed in `device-config:hosts=<XCPNG Host Names>`, run the following command:

linstor storage-pool set-property <host/node_name> <pool_name> PrefNic <nic_name>

where `nic_name` is the name of the Linstor interface created for the specific NIC.

-

As promised I have done some more "organised" testing, with a brand new cluster set up to test XOSTOR. Early simple tests seemed to be OK and I can create, restart, snapshot, move and delete VMs with no problem.

But then after putting a VM under load for an hour and then restarting it I am seeing the same weird behaviour I saw previously with XOSTOR.

Firstly, the VM took far longer than expected to shutdown. Then trying to restart the VM fails.

Let me know if you want me to provide logs or any specific testing.

vm.start { "id": "ade699f2-42f0-8629-35ea-6fcc69de99d7", "bypassMacAddressesCheck": false, "force": false }
{ "code": "SR_BACKEND_FAILURE_1200", "params": [ "", "No such Tapdisk(minor=12)", "" ], "call": { "method": "VM.start", "params": [ "OpaqueRef:a60d0553-a2f2-41e6-9df4-fad745fbacc8", false, false ] }, "message": "SR_BACKEND_FAILURE_1200(, No such Tapdisk(minor=12), )", "name": "XapiError", "stack": "XapiError: SR_BACKEND_FAILURE_1200(, No such Tapdisk(minor=12), ) at Function.wrap (/opt/xo/xo-builds/xen-orchestra-202206111352/packages/xen-api/src/_XapiError.js:16:12) at /opt/xo/xo-builds/xen-orchestra-202206111352/packages/xen-api/src/transports/json-rpc.js:37:27 at AsyncResource.runInAsyncScope (async_hooks.js:197:9) at cb (/opt/xo/xo-builds/xen-orchestra-202206111352/node_modules/bluebird/js/release/util.js:355:42) at tryCatcher (/opt/xo/xo-builds/xen-orchestra-202206111352/node_modules/bluebird/js/release/util.js:16:23) at Promise._settlePromiseFromHandler (/opt/xo/xo-builds/xen-orchestra-202206111352/node_modules/bluebird/js/release/promise.js:547:31) at Promise._settlePromise (/opt/xo/xo-builds/xen-orchestra-202206111352/node_modules/bluebird/js/release/promise.js:604:18) at Promise._settlePromise0 (/opt/xo/xo-builds/xen-orchestra-202206111352/node_modules/bluebird/js/release/promise.js:649:10) at Promise._settlePromises (/opt/xo/xo-builds/xen-orchestra-202206111352/node_modules/bluebird/js/release/promise.js:729:18) at _drainQueueStep (/opt/xo/xo-builds/xen-orchestra-202206111352/node_modules/bluebird/js/release/async.js:93:12) at _drainQueue (/opt/xo/xo-builds/xen-orchestra-202206111352/node_modules/bluebird/js/release/async.js:86:9) at Async._drainQueues (/opt/xo/xo-builds/xen-orchestra-202206111352/node_modules/bluebird/js/release/async.js:102:5) at Immediate.Async.drainQueues [as _onImmediate] (/opt/xo/xo-builds/xen-orchestra-202206111352/node_modules/bluebird/js/release/async.js:15:14) at processImmediate (internal/timers.js:464:21) at process.callbackTrampoline (internal/async_hooks.js:130:17)" }

The LINSTOR volume for this VM appears to be OK (by the way, I hope we eventually get an easy way to match up VMs to LINSTOR volumes):

[16:53 XCPNG31 ~]# linstor volume list | grep xcp-volume-00b34ae3-2ad3-44ea-aa13-d5de1fbf756f
| XCPNG30 | xcp-volume-00b34ae3-2ad3-44ea-aa13-d5de1fbf756f | xcp-sr-linstor_group | 0 | 1001 | /dev/drbd1001 | 40.10 GiB | InUse | UpToDate |
| XCPNG31 | xcp-volume-00b34ae3-2ad3-44ea-aa13-d5de1fbf756f | xcp-sr-linstor_group | 0 | 1001 | /dev/drbd1001 | 40.10 GiB | Unused | UpToDate |
| XCPNG32 | xcp-volume-00b34ae3-2ad3-44ea-aa13-d5de1fbf756f | DfltDisklessStorPool | 0 | 1001 | /dev/drbd1001 | | Unused | Diskless |
[16:59 XCPNG31 ~]# linstor node list
╭─────────────────────────────────────────────────────────╮
┊ Node ┊ NodeType ┊ Addresses ┊ State ┊
╞═════════════════════════════════════════════════════════╡
┊ XCPNG30 ┊ COMBINED ┊ 192.168.1.30:3366 (PLAIN) ┊ Online ┊
┊ XCPNG31 ┊ COMBINED ┊ 192.168.1.31:3366 (PLAIN) ┊ Online ┊
┊ XCPNG32 ┊ COMBINED ┊ 192.168.1.32:3366 (PLAIN) ┊ Online ┊
╰─────────────────────────────────────────────────────────╯
[17:13 XCPNG31 ~]# linstor storage-pool list
╭──────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────╮
┊ StoragePool ┊ Node ┊ Driver ┊ PoolName ┊ FreeCapacity ┊ TotalCapacity ┊ CanSnapshots ┊ State ┊ SharedName ┊
╞══════════════════════════════════════════════════════════════════════════════════════════════════════════════════════════════╡
┊ DfltDisklessStorPool ┊ XCPNG30 ┊ DISKLESS ┊ ┊ ┊ ┊ False ┊ Ok ┊ ┊
┊ DfltDisklessStorPool ┊ XCPNG31 ┊ DISKLESS ┊ ┊ ┊ ┊ False ┊ Ok ┊ ┊
┊ DfltDisklessStorPool ┊ XCPNG32 ┊ DISKLESS ┊ ┊ ┊ ┊ False ┊ Ok ┊ ┊
┊ xcp-sr-linstor_group ┊ XCPNG30 ┊ LVM ┊ linstor_group ┊ 3.50 TiB ┊ 3.64 TiB ┊ False ┊ Ok ┊ ┊
┊ xcp-sr-linstor_group ┊ XCPNG31 ┊ LVM ┊ linstor_group ┊ 3.49 TiB ┊ 3.64 TiB ┊ False ┊ Ok ┊ ┊
┊ xcp-sr-linstor_group ┊ XCPNG32 ┊ LVM ┊ linstor_group ┊ 3.50 TiB ┊ 3.64 TiB ┊ False ┊ Ok ┊ ┊
╰──────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────╯

I am seeing the following errors in the SMlog (bb027c9a-5655-4f93-9090-e76b34b2c90d is the disk for this VM):

Jul 23 16:48:30 XCPNG30 SM: [22961] lock: opening lock file /var/lock/sm/bb027c9a-5655-4f93-9090-e76b34b2c90d/vdi
Jul 23 16:48:30 XCPNG30 SM: [22961] blktap2.deactivate
Jul 23 16:48:30 XCPNG30 SM: [22961] lock: acquired /var/lock/sm/bb027c9a-5655-4f93-9090-e76b34b2c90d/vdi
Jul 23 16:48:30 XCPNG30 SM: [22961] ['/usr/sbin/tap-ctl', 'close', '-p', '19527', '-m', '2', '-t', '30']
Jul 23 16:49:00 XCPNG30 SM: [22961] = 5
Jul 23 16:49:00 XCPNG30 SM: [22961] ***** BLKTAP2:<function _deactivate_locked at 0x7f6fb33208c0>: EXCEPTION <class 'blktap2.CommandFailure'>, ['/usr/sbin/tap-ctl', 'close', '-p', '19527', '-m', '2', '-t', '30'] failed: status=5, pid=22983, errmsg=Input/output error
Jul 23 16:49:00 XCPNG30 SM: [22961] File "/opt/xensource/sm/blktap2.py", line 85, in wrapper
Jul 23 16:49:00 XCPNG30 SM: [22961] ret = op(self, *args)
Jul 23 16:49:00 XCPNG30 SM: [22961] File "/opt/xensource/sm/blktap2.py", line 1744, in _deactivate_locked
Jul 23 16:49:00 XCPNG30 SM: [22961] self._deactivate(sr_uuid, vdi_uuid, caching_params)
Jul 23 16:49:00 XCPNG30 SM: [22961] File "/opt/xensource/sm/blktap2.py", line 1785, in _deactivate
Jul 23 16:49:00 XCPNG30 SM: [22961] self._tap_deactivate(minor)
Jul 23 16:49:00 XCPNG30 SM: [22961] File "/opt/xensource/sm/blktap2.py", line 1368, in _tap_deactivate
Jul 23 16:49:00 XCPNG30 SM: [22961] tapdisk.shutdown()
Jul 23 16:49:00 XCPNG30 SM: [22961] File "/opt/xensource/sm/blktap2.py", line 880, in shutdown
Jul 23 16:49:00 XCPNG30 SM: [22961] TapCtl.close(self.pid, self.minor, force)
Jul 23 16:49:00 XCPNG30 SM: [22961] File "/opt/xensource/sm/blktap2.py", line 433, in close
Jul 23 16:49:00 XCPNG30 SM: [22961] cls._pread(args)
Jul 23 16:49:00 XCPNG30 SM: [22961] File "/opt/xensource/sm/blktap2.py", line 296, in _pread
Jul 23 16:49:00 XCPNG30 SM: [22961] tapctl._wait(quiet)
Jul 23 16:49:00 XCPNG30 SM: [22961] File "/opt/xensource/sm/blktap2.py", line 285, in _wait
Jul 23 16:49:00 XCPNG30 SM: [22961] raise self.CommandFailure(self.cmd, **info)
Jul 23 16:49:00 XCPNG30 SM: [22961]
Jul 23 16:49:00 XCPNG30 SM: [22961] lock: released /var/lock/sm/bb027c9a-5655-4f93-9090-e76b34b2c90d/vdi
Jul 23 16:49:00 XCPNG30 SM: [22961] call-plugin on 7aaaf4a5-0e43-442e-a9b1-38620c87fd69 (linstor-manager:lockVdi with {'groupName': 'linstor_group', 'srUuid': '141d63f6-d3ed-4a2f-588a-1835f0cea588', 'vdiUuid': 'bb027c9a-5655-4f93-9090-e76b34b2c90d', 'locked': 'False'}) returned: True
Jul 23 16:49:00 XCPNG30 SM: [22961] ***** generic exception: vdi_deactivate: EXCEPTION <class 'blktap2.CommandFailure'>, ['/usr/sbin/tap-ctl', 'close', '-p', '19527', '-m', '2', '-t', '30'] failed: status=5, pid=22983, errmsg=Input/output error
Jul 23 16:49:00 XCPNG30 SM: [22961] File "/opt/xensource/sm/SRCommand.py", line 110, in run
Jul 23 16:49:00 XCPNG30 SM: [22961] return self._run_locked(sr)
Jul 23 16:49:00 XCPNG30 SM: [22961] File "/opt/xensource/sm/SRCommand.py", line 159, in _run_locked
Jul 23 16:49:00 XCPNG30 SM: [22961] rv = self._run(sr, target)
Jul 23 16:49:00 XCPNG30 SM: [22961] File "/opt/xensource/sm/SRCommand.py", line 274, in _run
Jul 23 16:49:00 XCPNG30 SM: [22961] caching_params)
Jul 23 16:49:00 XCPNG30 SM: [22961] File "/opt/xensource/sm/blktap2.py", line 1729, in deactivate
Jul 23 16:49:00 XCPNG30 SM: [22961] if self._deactivate_locked(sr_uuid, vdi_uuid, caching_params):
Jul 23 16:49:00 XCPNG30 SM: [22961] File "/opt/xensource/sm/blktap2.py", line 85, in wrapper
Jul 23 16:49:00 XCPNG30 SM: [22961] ret = op(self, *args)
Jul 23 16:49:00 XCPNG30 SM: [22961] File "/opt/xensource/sm/blktap2.py", line 1744, in _deactivate_locked
Jul 23 16:49:00 XCPNG30 SM: [22961] self._deactivate(sr_uuid, vdi_uuid, caching_params)
Jul 23 16:49:00 XCPNG30 SM: [22961] File "/opt/xensource/sm/blktap2.py", line 1785, in _deactivate
Jul 23 16:49:00 XCPNG30 SM: [22961] self._tap_deactivate(minor)
Jul 23 16:49:00 XCPNG30 SM: [22961] File "/opt/xensource/sm/blktap2.py", line 1368, in _tap_deactivate
Jul 23 16:49:00 XCPNG30 SM: [22961] tapdisk.shutdown()
Jul 23 16:49:00 XCPNG30 SM: [22961] File "/opt/xensource/sm/blktap2.py", line 880, in shutdown
Jul 23 16:49:00 XCPNG30 SM: [22961] TapCtl.close(self.pid, self.minor, force)
Jul 23 16:49:00 XCPNG30 SM: [22961] File "/opt/xensource/sm/blktap2.py", line 433, in close
Jul 23 16:49:00 XCPNG30 SM: [22961] cls._pread(args)
Jul 23 16:49:00 XCPNG30 SM: [22961] File "/opt/xensource/sm/blktap2.py", line 296, in _pread
Jul 23 16:49:00 XCPNG30 SM: [22961] tapctl._wait(quiet)
Jul 23 16:49:00 XCPNG30 SM: [22961] File "/opt/xensource/sm/blktap2.py", line 285, in _wait
Jul 23 16:49:00 XCPNG30 SM: [22961] raise self.CommandFailure(self.cmd, **info)
Jul 23 16:49:00 XCPNG30 SM: [22961]
Jul 23 16:49:00 XCPNG30 SM: [22961] ***** LINSTOR resources on XCP-ng: EXCEPTION <class 'blktap2.CommandFailure'>, ['/usr/sbin/tap-ctl', 'close', '-p', '19527', '-m', '2', '-t', '30'] failed: status=5, pid=22983, errmsg=Input/output error
Jul 23 16:49:00 XCPNG30 SM: [22961] File "/opt/xensource/sm/SRCommand.py", line 378, in run
Jul 23 16:49:00 XCPNG30 SM: [22961] ret = cmd.run(sr)
Jul 23 16:49:00 XCPNG30 SM: [22961] File "/opt/xensource/sm/SRCommand.py", line 110, in run
Jul 23 16:49:00 XCPNG30 SM: [22961] return self._run_locked(sr)
Jul 23 16:49:00 XCPNG30 SM: [22961] File "/opt/xensource/sm/SRCommand.py", line 159, in _run_locked
Jul 23 16:49:00 XCPNG30 SM: [22961] rv = self._run(sr, target)
Jul 23 16:49:00 XCPNG30 SM: [22961] File "/opt/xensource/sm/SRCommand.py", line 274, in _run
Jul 23 16:49:00 XCPNG30 SM: [22961] caching_params)
Jul 23 16:49:00 XCPNG30 SM: [22961] File "/opt/xensource/sm/blktap2.py", line 1729, in deactivate
Jul 23 16:49:00 XCPNG30 SM: [22961] if self._deactivate_locked(sr_uuid, vdi_uuid, caching_params):
Jul 23 16:49:00 XCPNG30 SM: [22961] File "/opt/xensource/sm/blktap2.py", line 85, in wrapper
Jul 23 16:49:00 XCPNG30 SM: [22961] ret = op(self, *args)
Jul 23 16:49:00 XCPNG30 SM: [22961] File "/opt/xensource/sm/blktap2.py", line 1744, in _deactivate_locked
Jul 23 16:49:00 XCPNG30 SM: [22961] self._deactivate(sr_uuid, vdi_uuid, caching_params)
Jul 23 16:49:00 XCPNG30 SM: [22961] File "/opt/xensource/sm/blktap2.py", line 1785, in _deactivate
Jul 23 16:49:00 XCPNG30 SM: [22961] self._tap_deactivate(minor)
Jul 23 16:49:00 XCPNG30 SM: [22961] File "/opt/xensource/sm/blktap2.py", line 1368, in _tap_deactivate
Jul 23 16:49:00 XCPNG30 SM: [22961] tapdisk.shutdown()
Jul 23 16:49:00 XCPNG30 SM: [22961] File "/opt/xensource/sm/blktap2.py", line 880, in shutdown
Jul 23 16:49:00 XCPNG30 SM: [22961] TapCtl.close(self.pid, self.minor, force)
Jul 23 16:49:00 XCPNG30 SM: [22961] File "/opt/xensource/sm/blktap2.py", line 433, in close
Jul 23 16:49:00 XCPNG30 SM: [22961] cls._pread(args)
Jul 23 16:49:00 XCPNG30 SM: [22961] File "/opt/xensource/sm/blktap2.py", line 296, in _pread
Jul 23 16:49:00 XCPNG30 SM: [22961] tapctl._wait(quiet)
Jul 23 16:49:00 XCPNG30 SM: [22961] File "/opt/xensource/sm/blktap2.py", line 285, in _wait
Jul 23 16:49:00 XCPNG30 SM: [22961] raise self.CommandFailure(self.cmd, **info)
Jul 23 16:49:00 XCPNG30 SM: [22961]

If I try to start this VM after the above issue, I get this error:

vm.start { "id": "ade699f2-42f0-8629-35ea-6fcc69de99d7", "bypassMacAddressesCheck": false, "force": false }
{ "code": "FAILED_TO_START_EMULATOR", "params": [ "OpaqueRef:a60d0553-a2f2-41e6-9df4-fad745fbacc8", "domid 12", "QMP failure at File \"xc/device.ml\", line 3366, characters 71-78" ], "call": { "method": "VM.start", "params": [ "OpaqueRef:a60d0553-a2f2-41e6-9df4-fad745fbacc8", false, false ] }, "message": "FAILED_TO_START_EMULATOR(OpaqueRef:a60d0553-a2f2-41e6-9df4-fad745fbacc8, domid 12, QMP failure at File \"xc/device.ml\", line 3366, characters 71-78)", "name": "XapiError", "stack": "XapiError: FAILED_TO_START_EMULATOR(OpaqueRef:a60d0553-a2f2-41e6-9df4-fad745fbacc8, domid 12, QMP failure at File \"xc/device.ml\", line 3366, characters 71-78) at Function.wrap (/opt/xo/xo-builds/xen-orchestra-202206111352/packages/xen-api/src/_XapiError.js:16:12) at /opt/xo/xo-builds/xen-orchestra-202206111352/packages/xen-api/src/transports/json-rpc.js:37:27 at AsyncResource.runInAsyncScope (async_hooks.js:197:9) at cb (/opt/xo/xo-builds/xen-orchestra-202206111352/node_modules/bluebird/js/release/util.js:355:42) at tryCatcher (/opt/xo/xo-builds/xen-orchestra-202206111352/node_modules/bluebird/js/release/util.js:16:23) at Promise._settlePromiseFromHandler (/opt/xo/xo-builds/xen-orchestra-202206111352/node_modules/bluebird/js/release/promise.js:547:31) at Promise._settlePromise (/opt/xo/xo-builds/xen-orchestra-202206111352/node_modules/bluebird/js/release/promise.js:604:18) at Promise._settlePromise0 (/opt/xo/xo-builds/xen-orchestra-202206111352/node_modules/bluebird/js/release/promise.js:649:10) at Promise._settlePromises (/opt/xo/xo-builds/xen-orchestra-202206111352/node_modules/bluebird/js/release/promise.js:729:18) at _drainQueueStep (/opt/xo/xo-builds/xen-orchestra-202206111352/node_modules/bluebird/js/release/async.js:93:12) at _drainQueue (/opt/xo/xo-builds/xen-orchestra-202206111352/node_modules/bluebird/js/release/async.js:86:9) at Async._drainQueues (/opt/xo/xo-builds/xen-orchestra-202206111352/node_modules/bluebird/js/release/async.js:102:5) at Immediate.Async.drainQueues [as _onImmediate] (/opt/xo/xo-builds/xen-orchestra-202206111352/node_modules/bluebird/js/release/async.js:15:14) at processImmediate (internal/timers.js:464:21) at process.callbackTrampoline (internal/async_hooks.js:130:17)" }

This VM is set up the same way as several other VMs I have running on another cluster, but those use SRs on NFS mounts.

This is with the latest XCP-ng version; all servers are patched and up to date.

yum update
...
No packages marked for update
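When collecting SMlog excerpts like the one above, it can help to pull out only the exception lines of the SM processes that touched a given VDI. This is a minimal sketch. It assumes the `<date> <host> SM: [<pid>] <message>` line format shown in this thread. The helper name `smlog_exceptions` is hypothetical, and its default path is the `/var/log/SMlog` file mentioned in the next reply.

```shell
# smlog_exceptions VDI_UUID [SMLOG_FILE]
# 1. Collect the PIDs of SM processes whose log lines mention VDI_UUID.
# 2. Print the EXCEPTION / "Raising exception" / errmsg= lines logged by those PIDs.
smlog_exceptions() {
  local vdi=$1 log=${2:-/var/log/SMlog} pid
  for pid in $(grep -F "$vdi" "$log" | sed -n 's/.* SM: \[\([0-9]*\)].*/\1/p' | sort -u); do
    grep -F "SM: [$pid] " "$log" | grep -E 'EXCEPTION|Raising exception|errmsg='
  done
}
```

For example, `smlog_exceptions bb027c9a-5655-4f93-9090-e76b34b2c90d` on the host that ran the deactivate would reduce the trace above to its three `EXCEPTION` lines.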

@geoffbland Thank you for your tests.

Could you send me the other logs (/var/log/SMlog + kern.log + drbd-kern.log, please)? Also check whether the LVM volumes are reachable with `linstor resource list`. You can also check with the `lvs` command on each host. An `EIO` error is not a nice error to observe.
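To check one volume's resources across nodes, the `linstor resource list` table can be filtered per resource instead of grepping whole rows. This is a minimal sketch. It assumes the column order ResourceName, Node, Port, Usage, Conns, State, CreatedOn, with either `|` or `┊` as the column separator. `resource_states` is a hypothetical helper name.

```shell
# resource_states RESOURCE_NAME < linstor-resource-list-output
# Prints "<node> <usage> <disk state>" for every row of RESOURCE_NAME.
# Assumed columns: ResourceName | Node | Port | Usage | Conns | State | CreatedOn
resource_states() {
  # Normalise the UTF-8 box-drawing separator to '|' so awk can split on it.
  sed 's/┊/|/g' | awk -F'|' -v res="$1" '
    {
      # Trim surrounding spaces from every field.
      for (i = 1; i <= NF; i++) gsub(/^ +| +$/, "", $i)
    }
    $2 == res { print $3, $5, $7 }
  '
}
```

For example, `linstor resource list | resource_states xcp-volume-00b34ae3-2ad3-44ea-aa13-d5de1fbf756f` prints one line per node. Appending `| grep -c ' UpToDate$'` counts the UpToDate replicas, which can then be compared with the SR's redundancy setting.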

@ronan-a The volumes are reachable on all nodes:

[16:13 XCPNG30 ~]# linstor --controllers=192.168.1.30,192.168.1.31,192.168.1.32 resource list | grep xcp-volume-00b34ae3-2ad3-44ea-aa13-d5de1fbf756f
| xcp-volume-00b34ae3-2ad3-44ea-aa13-d5de1fbf756f | XCPNG30 | 7001 | InUse | Ok | UpToDate | 2022-07-15 20:03:53 |
| xcp-volume-00b34ae3-2ad3-44ea-aa13-d5de1fbf756f | XCPNG31 | 7001 | Unused | Ok | UpToDate | 2022-07-15 20:03:59 |
| xcp-volume-00b34ae3-2ad3-44ea-aa13-d5de1fbf756f | XCPNG32 | 7001 | Unused | Ok | Diskless | 2022-07-15 20:03:51 |
[16:12 XCPNG31 ~]# linstor --controllers=192.168.1.30,192.168.1.31,192.168.1.32 resource list | grep xcp-volume-00b34ae3-2ad3-44ea-aa13-d5de1fbf756f
| xcp-volume-00b34ae3-2ad3-44ea-aa13-d5de1fbf756f | XCPNG30 | 7001 | InUse | Ok | UpToDate | 2022-07-15 20:03:53 |
| xcp-volume-00b34ae3-2ad3-44ea-aa13-d5de1fbf756f | XCPNG31 | 7001 | Unused | Ok | UpToDate | 2022-07-15 20:03:59 |
| xcp-volume-00b34ae3-2ad3-44ea-aa13-d5de1fbf756f | XCPNG32 | 7001 | Unused | Ok | Diskless | 2022-07-15 20:03:51 |
[16:14 XCPNG32 ~]# linstor --controllers=192.168.1.30,192.168.1.31,192.168.1.32 resource list | grep xcp-volume-00b34ae3-2ad3-44ea-aa13-d5de1fbf756f
| xcp-volume-00b34ae3-2ad3-44ea-aa13-d5de1fbf756f | XCPNG30 | 7001 | InUse | Ok | UpToDate | 2022-07-15 20:03:53 |
| xcp-volume-00b34ae3-2ad3-44ea-aa13-d5de1fbf756f | XCPNG31 | 7001 | Unused | Ok | UpToDate | 2022-07-15 20:03:59 |
| xcp-volume-00b34ae3-2ad3-44ea-aa13-d5de1fbf756f | XCPNG32 | 7001 | Unused | Ok | Diskless | 2022-07-15 20:03:51 |

The volumes appear to be OK on two hosts and not present on the third, although with replication set to 2, I assume that is expected?

[16:14 XCPNG30 ~]# lvs | grep xcp-volume-00b34ae3-2ad3-44ea-aa13-d5de1fbf756f
xcp-volume-00b34ae3-2ad3-44ea-aa13-d5de1fbf756f_00000 linstor_group -wi-ao---- <40.10g
[16:15 XCPNG31 ~]# lvs | grep xcp-volume-00b34ae3-2ad3-44ea-aa13-d5de1fbf756f
xcp-volume-00b34ae3-2ad3-44ea-aa13-d5de1fbf756f_00000 linstor_group -wi-ao---- <40.10g
[16:19 XCPNG32 ~]# lvs | grep xcp-volume-00b34ae3-2ad3-44ea-aa13-d5de1fbf756f
No lines

However, when running the `lvs` command on each host, I am getting a lot of warnings about DRBD volumes. These seem to be volumes that were previously deleted but not fully cleaned up:

/dev/drbd1024: open failed: Wrong medium type
/dev/drbd1026: open failed: Wrong medium type
/dev/drbd1028: open failed: Wrong medium type
/dev/drbd1000: open failed: Wrong medium type
/dev/drbd1002: open failed: Wrong medium type
/dev/drbd1012: open failed: Wrong medium type
/dev/drbd1014: open failed: Wrong medium type
/dev/drbd1016: open failed: Wrong medium type
/dev/drbd1018: open failed: Wrong medium type
/dev/drbd1020: open failed: Wrong medium type
/dev/drbd1022: open failed: Wrong medium type
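To cross-check these warnings against LINSTOR, you can extract the DRBD minor numbers from the `lvs` stderr. Each minor can then be looked up in the MinorNr / device columns of `linstor volume list`, as in the `/dev/drbd1001` rows earlier in the thread. This is a sketch that assumes the warning text is exactly `/dev/drbdNNNN: open failed: Wrong medium type`. `drbd_warning_minors` is a hypothetical name. The output alone does not show whether a device is really a leftover.

```shell
# drbd_warning_minors < lvs-stderr
# Prints each DRBD minor number that appears in a "Wrong medium type" warning,
# once, in numeric order.
drbd_warning_minors() {
  grep -o '/dev/drbd[0-9]*: open failed: Wrong medium type' |
    sed 's|^/dev/drbd\([0-9]*\):.*|\1|' |
    sort -n -u
}
```

Usage: `lvs 2>&1 >/dev/null | drbd_warning_minors` sends only the stderr of `lvs` into the filter and discards the normal listing.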

We've been trying to set up LINSTOR again on our new pool, and it seems to be working this time. However, enabling pool HA fails with this error:

[16:36 ovbh-pprod-xen10 ~]# xe pool-ha-enable heartbeat-sr-uuids=a8b860a9-5246-0dd2-8b7f-4806604f219a
This operation cannot be performed because this VDI could not be properly attached to the VM.
vdi: 5ca46fd4-c315-46c9-8272-5192118e33a9 (Statefile for HA)

SMlog:

Jul 26 16:37:10 ovbh-pprod-xen10 SM: [11456] lock: opening lock file /var/lock/sm/a8b860a9-5246-0dd2-8b7f-4806604f219a/sr
Jul 26 16:37:10 ovbh-pprod-xen10 SM: [11456] lock: acquired /var/lock/sm/a8b860a9-5246-0dd2-8b7f-4806604f219a/sr
Jul 26 16:37:10 ovbh-pprod-xen10 SM: [11456] Synchronize metadata...
Jul 26 16:37:10 ovbh-pprod-xen10 SM: [11456] VDI 5ca46fd4-c315-46c9-8272-5192118e33a9 loaded! (path=/dev/drbd/by-res/xcp-persistent-ha-statefile/0, hidden=0)
Jul 26 16:37:10 ovbh-pprod-xen10 SM: [11456] lock: released /var/lock/sm/a8b860a9-5246-0dd2-8b7f-4806604f219a/sr
Jul 26 16:37:10 ovbh-pprod-xen10 SM: [11456] vdi_generate_config {'sr_uuid': 'a8b860a9-5246-0dd2-8b7f-4806604f219a', 'subtask_of': 'OpaqueRef:29c37d59-5cb3-4cfe-aa3f-097ae089480d', 'vdi_ref': 'OpaqueRef:f352e1aa-bdb7-449b-9291-808331b2f070', 'vdi_on_boot': 'persist', 'args': [], 'vdi_location': '5ca46fd4-c315-46c9-8272-5192118e33a9', 'host_ref': 'OpaqueRef:b5e3bf1f-2c0e-4272-85f5-7cde69a8d98e', 'session_ref': 'OpaqueRef:a1883dc6-76bf-4b2c-9829-6ed610791896', 'device_config': {'group-name': 'linstor_group/thin_device', 'redundancy': '3', 'hosts': 'ovbh-pprod-xen10,ovbh-pprod-xen11,ovbh-pprod-xen12', 'SRmaster': 'true', 'provisioning': 'thin'}, 'command': 'vdi_generate_config', 'vdi_allow_caching': 'false', 'sr_ref': 'OpaqueRef:41cd1a0c-a388-494a-a94d-35ebad53d283', 'local_cache_sr': '539b8459-d081-b16e-607c-34a51e0e21d3', 'vdi_uuid': '5ca46fd4-c315-46c9-8272-5192118e33a9'}
Jul 26 16:37:10 ovbh-pprod-xen10 SM: [11456] LinstorVDI.generate_config for 5ca46fd4-c315-46c9-8272-5192118e33a9
Jul 26 16:37:10 ovbh-pprod-xen10 SM: [11522] lock: opening lock file /var/lock/sm/a8b860a9-5246-0dd2-8b7f-4806604f219a/sr
Jul 26 16:37:10 ovbh-pprod-xen10 SM: [11522] lock: acquired /var/lock/sm/a8b860a9-5246-0dd2-8b7f-4806604f219a/sr
Jul 26 16:37:10 ovbh-pprod-xen10 SM: [11522] VDI 5ca46fd4-c315-46c9-8272-5192118e33a9 loaded! (path=/dev/http-nbd/xcp-persistent-ha-statefile, hidden=False)
Jul 26 16:37:10 ovbh-pprod-xen10 SM: [11522] lock: released /var/lock/sm/a8b860a9-5246-0dd2-8b7f-4806604f219a/sr
Jul 26 16:37:10 ovbh-pprod-xen10 SM: [11522] vdi_attach_from_config {'sr_uuid': 'a8b860a9-5246-0dd2-8b7f-4806604f219a', 'sr_sm_config': {}, 'vdi_path': '/dev/http-nbd/xcp-persistent-ha-statefile', 'device_config': {'group-name': 'linstor_group/thin_device', 'redundancy': '3', 'hosts': 'ovbh-pprod-xen10,ovbh-pprod-xen11,ovbh-pprod-xen12', 'SRmaster': 'true', 'provisioning': 'thin'}, 'command': 'vdi_attach_from_config', 'vdi_uuid': '5ca46fd4-c315-46c9-8272-5192118e33a9'}
Jul 26 16:37:10 ovbh-pprod-xen10 SM: [11522] LinstorVDI.attach_from_config for 5ca46fd4-c315-46c9-8272-5192118e33a9
Jul 26 16:37:10 ovbh-pprod-xen10 SM: [11522] LinstorVDI.attach for 5ca46fd4-c315-46c9-8272-5192118e33a9
Jul 26 16:37:10 ovbh-pprod-xen10 SM: [11522] Kill http server /run/http-server-xcp-persistent-ha-statefile.pid (pid=10814)
Jul 26 16:37:10 ovbh-pprod-xen10 SM: [11522] Starting http-disk-server on port 8076...
Jul 26 16:37:10 ovbh-pprod-xen10 SM: [11522] Got exception: [Errno 2] No such file or directory: '/etc/xensource/xhad.conf'. Retry number: 0
Jul 26 16:37:11 ovbh-pprod-xen10 SM: [11522] Got exception: [Errno 2] No such file or directory: '/etc/xensource/xhad.conf'. Retry number: 1
Jul 26 16:37:12 ovbh-pprod-xen10 SM: [11522] Got exception: [Errno 2] No such file or directory: '/etc/xensource/xhad.conf'. Retry number: 2
Jul 26 16:37:13 ovbh-pprod-xen10 SM: [11522] Got exception: [Errno 2] No such file or directory: '/etc/xensource/xhad.conf'. Retry number: 3
Jul 26 16:37:14 ovbh-pprod-xen10 SM: [11522] Got exception: [Errno 2] No such file or directory: '/etc/xensource/xhad.conf'. Retry number: 4
Jul 26 16:37:15 ovbh-pprod-xen10 SM: [11522] Got exception: [Errno 2] No such file or directory: '/etc/xensource/xhad.conf'. Retry number: 5
Jul 26 16:37:16 ovbh-pprod-xen10 SM: [11522] Got exception: [Errno 2] No such file or directory: '/etc/xensource/xhad.conf'. Retry number: 6
Jul 26 16:37:17 ovbh-pprod-xen10 SM: [11522] Got exception: [Errno 2] No such file or directory: '/etc/xensource/xhad.conf'. Retry number: 7
Jul 26 16:37:18 ovbh-pprod-xen10 SM: [11522] Got exception: [Errno 2] No such file or directory: '/etc/xensource/xhad.conf'. Retry number: 8
Jul 26 16:37:19 ovbh-pprod-xen10 SM: [11522] Got exception: [Errno 2] No such file or directory: '/etc/xensource/xhad.conf'. Retry number: 9
Jul 26 16:37:19 ovbh-pprod-xen10 SM: [11522] Raising exception [46, The VDI is not available [opterr=Failed to start nbd-server: Cannot start persistent NBD server: no XAPI session, nor XHA config file]]
Jul 26 16:37:19 ovbh-pprod-xen10 SM: [11522] ***** LinstorVDI.attach_from_config: EXCEPTION <class 'SR.SROSError'>, The VDI is not available [opterr=Failed to start nbd-server: Cannot start persistent NBD server: no XAPI session, nor XHA config file]
Jul 26 16:37:19 ovbh-pprod-xen10 SM: [11522] File "/opt/xensource/sm/LinstorSR", line 2027, in attach_from_config
Jul 26 16:37:19 ovbh-pprod-xen10 SM: [11522] return self.attach(sr_uuid, vdi_uuid)
Jul 26 16:37:19 ovbh-pprod-xen10 SM: [11522] File "/opt/xensource/sm/LinstorSR", line 1827, in attach
Jul 26 16:37:19 ovbh-pprod-xen10 SM: [11522] return self._attach_using_http_nbd()
Jul 26 16:37:19 ovbh-pprod-xen10 SM: [11522] File "/opt/xensource/sm/LinstorSR", line 2764, in _attach_using_http_nbd
Jul 26 16:37:19 ovbh-pprod-xen10 SM: [11522] self._start_persistent_nbd_server(volume_name)
Jul 26 16:37:19 ovbh-pprod-xen10 SM: [11522] File "/opt/xensource/sm/LinstorSR", line 2647, in _start_persistent_nbd_server
Jul 26 16:37:19 ovbh-pprod-xen10 SM: [11522] opterr='Failed to start nbd-server: {}'.format(e)
Jul 26 16:37:19 ovbh-pprod-xen10 SM: [11522]
Jul 26 16:37:19 ovbh-pprod-xen10 SM: [11522] Raising exception [47, The SR is not available [opterr=Unable to attach from config]]
Jul 26 16:37:19 ovbh-pprod-xen10 SM: [11522] ***** generic exception: vdi_attach_from_config: EXCEPTION <class 'SR.SROSError'>, The SR is not available [opterr=Unable to attach from config]
Jul 26 16:37:19 ovbh-pprod-xen10 SM: [11522] File "/opt/xensource/sm/SRCommand.py", line 110, in run
Jul 26 16:37:19 ovbh-pprod-xen10 SM: [11522] return self._run_locked(sr)
Jul 26 16:37:19 ovbh-pprod-xen10 SM: [11522] File "/opt/xensource/sm/SRCommand.py", line 159, in _run_locked
Jul 26 16:37:19 ovbh-pprod-xen10 SM: [11522] rv = self._run(sr, target)
Jul 26 16:37:19 ovbh-pprod-xen10 SM: [11522] File "/opt/xensource/sm/SRCommand.py", line 294, in _run
Jul 26 16:37:19 ovbh-pprod-xen10 SM: [11522] ret = target.attach_from_config(self.params['sr_uuid'], self.vdi_uuid)
Jul 26 16:37:19 ovbh-pprod-xen10 SM: [11522] File "/opt/xensource/sm/LinstorSR", line 2032, in attach_from_config
Jul 26 16:37:19 ovbh-pprod-xen10 SM: [11522] opterr='Unable to attach from config'
Jul 26 16:37:19 ovbh-pprod-xen10 SM: [11522]
Jul 26 16:37:19 ovbh-pprod-xen10 SM: [11522] ***** LINSTOR resources on XCP-ng: EXCEPTION <class 'SR.SROSError'>, The SR is not available [opterr=Unable to attach from config]
Jul 26 16:37:19 ovbh-pprod-xen10 SM: [11522] File "/opt/xensource/sm/SRCommand.py", line 378, in run
Jul 26 16:37:19 ovbh-pprod-xen10 SM: [11522] ret = cmd.run(sr)
Jul 26 16:37:19 ovbh-pprod-xen10 SM: [11522] File "/opt/xensource/sm/SRCommand.py", line 110, in run
Jul 26 16:37:19 ovbh-pprod-xen10 SM: [11522] return self._run_locked(sr)
Jul 26 16:37:19 ovbh-pprod-xen10 SM: [11522] File "/opt/xensource/sm/SRCommand.py", line 159, in _run_locked
Jul 26 16:37:19 ovbh-pprod-xen10 SM: [11522] rv = self._run(sr, target)
Jul 26 16:37:19 ovbh-pprod-xen10 SM: [11522] File "/opt/xensource/sm/SRCommand.py", line 294, in _run
Jul 26 16:37:19 ovbh-pprod-xen10 SM: [11522] ret = target.attach_from_config(self.params['sr_uuid'], self.vdi_uuid)
Jul 26 16:37:19 ovbh-pprod-xen10 SM: [11522] File "/opt/xensource/sm/LinstorSR", line 2032, in attach_from_config
Jul 26 16:37:19 ovbh-pprod-xen10 SM: [11522] opterr='Unable to attach from config'
Jul 26 16:37:19 ovbh-pprod-xen10 SM: [11522]

xensource.log:

Jul 26 16:37:09 ovbh-pprod-xen10 xapi: [ info||12893 /var/lib/xcp/xapi||cli] xe pool-ha-enable heartbeat-sr-uuids=a8b860a9-5246-0dd2-8b7f-4806604f219a username=root password=(omitted)
Jul 26 16:37:09 ovbh-pprod-xen10 xapi: [ info||12893 /var/lib/xcp/xapi|session.login_with_password D:28e65110d9c7|xapi_session] Session.create trackid=aaadbd98bb0d2da814837704c59da59e pool=false uname=root originator=cli is_local_superuser=true auth_user_sid= parent=trackid=9834f5af41c964e225f24279aefe4e49
Jul 26 16:37:09 ovbh-pprod-xen10 xapi: [debug||12894 /var/lib/xcp/xapi||dummytaskhelper] task dispatch:pool.get_all D:d4e1d279b97d created by task D:28e65110d9c7
Jul 26 16:37:09 ovbh-pprod-xen10 xapi: [debug||12893 /var/lib/xcp/xapi|pool.enable_ha R:91a5f9c321fc|audit] Pool.enable_ha: pool = '630d94cc-d026-b478-2a9e-ce3bc6759fc6 (OVBH-PROD-XENPOOL03)'; heartbeat_srs = [ OpaqueRef:41cd1a0c-a388-494a-a94d-35ebad53d283 ]; configuration = [ ]
Jul 26 16:37:09 ovbh-pprod-xen10 xapi: [debug||12893 /var/lib/xcp/xapi|pool.enable_ha R:91a5f9c321fc|xapi_ha] Enabling HA on the Pool.
Jul 26 16:37:09 ovbh-pprod-xen10 xapi: [ warn||12893 /var/lib/xcp/xapi|pool.enable_ha R:91a5f9c321fc|xapi_ha] Warning: A possible network anomaly was found. The following hosts possibly have storage PIFs that can be unplugged: A possible network anomaly was found. The following hosts possibly have storage PIFs that are not dedicated:, ovbh-pprod-xen10: bond1 (uuid: 92e5951a-a007-5fcd-cd6e-583e808d3fc4)
Jul 26 16:37:09 ovbh-pprod-xen10 xapi: [debug||12893 /var/lib/xcp/xapi|pool.enable_ha R:91a5f9c321fc|xapi_message] Message.create IP_CONFIGURED_PIF_CAN_UNPLUG 3 Pool 630d94cc-d026-b478-2a9e-ce3bc6759fc6
Jul 26 16:37:09 ovbh-pprod-xen10 xapi: [debug||12893 /var/lib/xcp/xapi|pool.enable_ha R:91a5f9c321fc|thread_queue] push(email message queue, IP_CONFIGURED_PIF_CAN_UNPLUG): queue = [ IP_CONFIGURED_PIF_CAN_UNPLUG ](1)
Jul 26 16:37:09 ovbh-pprod-xen10 xapi: [ info||12893 /var/lib/xcp/xapi|pool.enable_ha R:91a5f9c321fc|xha_statefile] re-using existing statefile: 5ca46fd4-c315-46c9-8272-5192118e33a9
Jul 26 16:37:09 ovbh-pprod-xen10 xapi: [ info||12893 /var/lib/xcp/xapi|pool.enable_ha R:91a5f9c321fc|xha_metadata_vdi] re-using existing metadata VDI: 3eea65a8-3905-406d-bfdd-8c774bb43ee0
Jul 26 16:37:09 ovbh-pprod-xen10 xapi: [debug||12893 /var/lib/xcp/xapi|pool.enable_ha R:91a5f9c321fc|xapi_ha_vm_failover] Protected VMs: [ ]
Jul 26 16:37:09 ovbh-pprod-xen10 xapi: [debug||4145 ||thread_queue] pop(email message queue) = IP_CONFIGURED_PIF_CAN_UNPLUG
Jul 26 16:37:09 ovbh-pprod-xen10 xapi: [debug||12893 /var/lib/xcp/xapi|pool.enable_ha R:91a5f9c321fc|xapi_ha_vm_failover] Restart plan for non-agile offline VMs: [ ]
Jul 26 16:37:09 ovbh-pprod-xen10 xapi: [debug||12893 /var/lib/xcp/xapi|pool.enable_ha R:91a5f9c321fc|xapi_ha_vm_failover] Planning configuration for offline agile VMs = { total_hosts = 3; num_failures = 0; hosts = [ 8a459076 (ovbh-pprod-xen12), 257938591744; 438137c7 (ovbh-pprod-xen11), 262271307776; b5e3bf1f (ovbh-pprod-xen10), 262271311872 ]; vms = [ ]; placement = [ ] }
Jul 26 16:37:09 ovbh-pprod-xen10 xapi: [debug||12893 /var/lib/xcp/xapi|pool.enable_ha R:91a5f9c321fc|xapi_ha_vm_failover] Computing a specific plan for the failure of VMs: [ ]
Jul 26 16:37:09 ovbh-pprod-xen10 xapi: [debug||12893 /var/lib/xcp/xapi|pool.enable_ha R:91a5f9c321fc|xapi_ha_vm_failover] Restart plan for agile offline VMs: [ ]
Jul 26 16:37:09 ovbh-pprod-xen10 xapi: [debug||12893 /var/lib/xcp/xapi|pool.enable_ha R:91a5f9c321fc|xapi_ha_vm_failover] Planning configuration for future failures = { total_hosts = 3; num_failures = 0; hosts = [ 8a459076 (ovbh-pprod-xen12), 257938591744; 438137c7 (ovbh-pprod-xen11), 262271307776; b5e3bf1f (ovbh-pprod-xen10), 262271311872 ]; vms = [ ]; placement = [ ] }
Jul 26 16:37:09 ovbh-pprod-xen10 xapi: [debug||12893 /var/lib/xcp/xapi|pool.enable_ha R:91a5f9c321fc|xapi_ha_vm_failover] plan_for_n_failures config = { total_hosts = 3; num_failures = 0; hosts = [ 8a459076 (ovbh-pprod-xen12), 257938591744; 438137c7 (ovbh-pprod-xen11), 262271307776; b5e3bf1f (ovbh-pprod-xen10), 262271311872 ]; vms = [ ]; placement = [ ] }
Jul 26 16:37:09 ovbh-pprod-xen10 xapi: [debug||12893 /var/lib/xcp/xapi|pool.enable_ha R:91a5f9c321fc|xapi_ha_vm_failover] HA failover plan exists for all protected VMs
Jul 26 16:37:09 ovbh-pprod-xen10 xapi: [debug||12893 /var/lib/xcp/xapi|pool.enable_ha R:91a5f9c321fc|xapi_ha_vm_failover] to_tolerate = 0 planned_for = 0
Jul 26 16:37:09 ovbh-pprod-xen10 xapi: [ info||12893 /var/lib/xcp/xapi|pool.enable_ha R:91a5f9c321fc|xapi_ha] Detaching any existing statefiles: these are not needed any more
Jul 26 16:37:09 ovbh-pprod-xen10 xapi: [ info||12893 /var/lib/xcp/xapi|pool.enable_ha R:91a5f9c321fc|xapi_ha] Attempting to permanently attach statefile VDI: OpaqueRef:f352e1aa-bdb7-449b-9291-808331b2f070
Jul 26 16:37:09 ovbh-pprod-xen10 xapi: [ info||12893 /var/lib/xcp/xapi|pool.enable_ha R:91a5f9c321fc|static_vdis] permanent_vdi_attach: vdi = OpaqueRef:f352e1aa-bdb7-449b-9291-808331b2f070; sr = OpaqueRef:41cd1a0c-a388-494a-a94d-35ebad53d283
Jul 26 16:37:09 ovbh-pprod-xen10 xapi: [debug||12893 /var/lib/xcp/xapi|pool.enable_ha R:91a5f9c321fc|helpers] about to call script: /opt/xensource/bin/static-vdis
Jul 26 16:37:09 ovbh-pprod-xen10 xapi: [ info||12895 HTTPS 10.2.0.5->:::80|host.get_servertime D:40d083416923|xapi_session] Session.create trackid=2cb32dffdfaa305684431f44c5873f23 pool=true uname= originator=xapi is_local_superuser=true auth_user_sid= parent=trackid=e8c14c89e4b0bc5c3ecf2f0ddf6faa88
Jul 26 16:37:09 ovbh-pprod-xen10 xapi: [debug||12896 /var/lib/xcp/xapi||dummytaskhelper] task dispatch:pool.get_all D:d74318d22de3 created by task D:40d083416923
Jul 26 16:37:09 ovbh-pprod-xen10 xapi: [debug||12895 HTTPS 10.2.0.5->:::80|host.get_servertime D:40d083416923|xmlrpc_client] stunnel pid: 4604 (cached = true) connected to 10.2.0.21:443
Jul 26 16:37:09 ovbh-pprod-xen10 xapi: [debug||12895 HTTPS 10.2.0.5->:::80|host.get_servertime D:40d083416923|xmlrpc_client] with_recorded_stunnelpid task_opt=None s_pid=4604
Jul 26 16:37:09 ovbh-pprod-xen10 xapi: [debug||12895 HTTPS 10.2.0.5->:::80|host.get_servertime D:40d083416923|xmlrpc_client] stunnel pid: 4604 (cached = true) returned stunnel to cache
Jul 26 16:37:09 ovbh-pprod-xen10 xapi: [ info||12895 HTTPS 10.2.0.5->:::80|host.get_servertime D:40d083416923|xapi_session] Session.destroy trackid=2cb32dffdfaa305684431f44c5873f23
Jul 26 16:37:09 ovbh-pprod-xen10 xapi: [ info||12897 HTTPS 10.2.0.5->:::80|task.create D:5ee8faf4a9fa|taskhelper] task Xapi#getResource /rrd_updates R:58b4da0ce9ec (uuid:0be27ff2-b458-8a76-0a11-f069fdc7f186) created (trackid=e8c14c89e4b0bc5c3ecf2f0ddf6faa88) by task D:5ee8faf4a9fa
Jul 26 16:37:09 ovbh-pprod-xen10 xapi: [debug||12898 /var/lib/xcp/xapi||dummytaskhelper] task dispatch:session.logout D:27670cf1c9d3 created by task D:65bd950f3466
Jul 26 16:37:09 ovbh-pprod-xen10 xapi: [ info||12898 /var/lib/xcp/xapi|session.logout D:6a026daeda09|xapi_session] Session.destroy trackid=cf6600bb9537bf503e328d9387afff9c
Jul 26 16:37:09 ovbh-pprod-xen10 xapi: [debug||12899 /var/lib/xcp/xapi||dummytaskhelper] task dispatch:session.slave_login D:baa574e97b12 created by task D:65bd950f3466
Jul 26 16:37:09 ovbh-pprod-xen10 xapi: [ info||12899 /var/lib/xcp/xapi|session.slave_login D:8c52ad05a8de|xapi_session] Session.create trackid=dd82c958f9ec6e2e5126f98706f39fdf pool=true uname= originator=xapi is_local_superuser=true auth_user_sid= parent=trackid=9834f5af41c964e225f24279aefe4e49
Jul 26 16:37:09 ovbh-pprod-xen10 xapi: [debug||12900 /var/lib/xcp/xapi||dummytaskhelper] task dispatch:pool.get_all D:d1dfaed156c9 created by task D:8c52ad05a8de
Jul 26 16:37:09 ovbh-pprod-xen10 xapi: [debug||12901 /var/lib/xcp/xapi||dummytaskhelper] task dispatch:event.from D:45591c3f6bf7 created by task D:65bd950f3466
Jul 26 16:37:09 ovbh-pprod-xen10 xapi: [ info||12902 /var/lib/xcp/xapi|session.login_with_password D:8a2fe7639b51|xapi_session] Session.create trackid=8ddfe1f61f1f00dc119fa7d8f95c8f60 pool=false uname=root originator=xen-api-scripts-static-vdis is_local_superuser=true auth_user_sid= parent=trackid=9834f5af41c964e225f24279aefe4e49
Jul 26 16:37:09 ovbh-pprod-xen10 xapi: [debug||12904 /var/lib/xcp/xapi||dummytaskhelper] task dispatch:pool.get_all D:0065eb435b07 created by task D:8a2fe7639b51
Jul 26 16:37:09 ovbh-pprod-xen10 xapi: [ info||12903 /var/lib/xcp/xapi|session.login_with_password D:5fc61c47cb61|xapi_session] Session.create trackid=6198b4d0eec3bea720a5489b7d9993c8 pool=false uname=__dom0__mail_alarm originator=xen-api-scripts-mail-alarm is_local_superuser=true auth_user_sid= parent=trackid=9834f5af41c964e225f24279aefe4e49
Jul 26 16:37:09 ovbh-pprod-xen10 xapi: [debug||12905 /var/lib/xcp/xapi||dummytaskhelper] task dispatch:pool.get_all D:66ebbe281c12 created by task D:5fc61c47cb61
Jul 26 16:37:09 ovbh-pprod-xen10 xapi: [ info||12922 /var/lib/xcp/xapi|session.logout D:bf2abbbb44ab|xapi_session] Session.destroy trackid=6198b4d0eec3bea720a5489b7d9993c8
Jul 26 16:37:09 ovbh-pprod-xen10 xapi: [error||4145 ||xapi_message] Unexpected exception in message hook /opt/xensource/libexec/mail-alarm: INTERNAL_ERROR: [ Subprocess exited with unexpected code 1; stdout = [ ]; stderr = [ pool:other-config:mail-destination not specified\x0A ] ]
Jul 26 16:37:09 ovbh-pprod-xen10 xapi: [debug||4145 ||thread_queue] email message queue: completed processing 1 items: queue = [ ](0)
Jul 26 16:37:09 ovbh-pprod-xen10 xapi: [debug||12934 /var/lib/xcp/xapi|VDI.generate_config R:29c37d595cb3|audit] VDI.generate_config: VDI = '5ca46fd4-c315-46c9-8272-5192118e33a9'; host = 'ff631fff-1947-4631-a35d-9352204f98d9 (ovbh-pprod-xen10)'
Jul 26 16:37:09 ovbh-pprod-xen10 xapi: [debug||12934 /var/lib/xcp/xapi|VDI.generate_config R:29c37d595cb3|sm] SM linstor vdi_generate_config sr=OpaqueRef:41cd1a0c-a388-494a-a94d-35ebad53d283 vdi=OpaqueRef:f352e1aa-bdb7-449b-9291-808331b2f070
Jul 26 16:37:09 ovbh-pprod-xen10 xapi: [ info||12934 /var/lib/xcp/xapi|sm_exec D:e67ad7661ab5|xapi_session] Session.create trackid=5278325068f2d56e7a6449ad96d024c8 pool=false uname= originator=xapi is_local_superuser=true auth_user_sid= parent=trackid=9834f5af41c964e225f24279aefe4e49
Jul 26 16:37:09 ovbh-pprod-xen10 xapi: [debug||12935 /var/lib/xcp/xapi||dummytaskhelper] task dispatch:pool.get_all D:88e51aa2b828 created by task D:e67ad7661ab5
Jul 26 16:37:10 ovbh-pprod-xen10 xapi: [debug||12936 /var/lib/xcp/xapi||dummytaskhelper] task dispatch:host.get_other_config D:54ab4886bd6b created by task R:29c37d595cb3
Jul 26 16:37:10 ovbh-pprod-xen10 xapi: [debug||12937 /var/lib/xcp/xapi||dummytaskhelper] task dispatch:SR.get_sm_config D:097aa2e98f20 created by task R:29c37d595cb3
Jul 26 16:37:10 ovbh-pprod-xen10 xapi: [debug||12940 /var/lib/xcp/xapi||dummytaskhelper] task dispatch:VDI.get_by_uuid D:75ce02824999 created by task R:29c37d595cb3
Jul 26 16:37:10 ovbh-pprod-xen10 xapi: [debug||12941 /var/lib/xcp/xapi||dummytaskhelper] task dispatch:VDI.get_sm_config D:06b72052f6e1 created by task R:29c37d595cb3
Jul 26 16:37:10 ovbh-pprod-xen10 xapi: [ info||12934 /var/lib/xcp/xapi|sm_exec D:e67ad7661ab5|xapi_session] Session.destroy trackid=5278325068f2d56e7a6449ad96d024c8
Jul 26 16:37:10 ovbh-pprod-xen10 xapi: [ info||12943 
/var/lib/xcp/xapi|session.logout D:f00dc0e4dc46|xapi_session] Session.destroy trackid=8ddfe1f61f1f00dc119fa7d8f95c8f60 Jul 26 16:37:10 ovbh-pprod-xen10 xapi: [debug||12893 /var/lib/xcp/xapi|pool.enable_ha R:91a5f9c321fc|helpers] /opt/xensource/bin/static-vdis add 5ca46fd4-c315-46c9-8272-5192118e33a9 HA statefile succeeded [ output = '' ] Jul 26 16:37:10 ovbh-pprod-xen10 xapi: [debug||12893 /var/lib/xcp/xapi|pool.enable_ha R:91a5f9c321fc|helpers] about to call script: /opt/xensource/bin/static-vdis Jul 26 16:37:10 ovbh-pprod-xen10 xapi: [ info||12946 /var/lib/xcp/xapi|session.login_with_password D:add1819f775b|xapi_session] Session.create trackid=1e6292226a91eaacb7b5776974b6de3b pool=false uname=root originator=SM is_local_superuser=true auth_user_sid= parent=trackid=9834f5af41c964e225f24279aefe4e49 Jul 26 16:37:10 ovbh-pprod-xen10 xapi: [debug||12947 /var/lib/xcp/xapi||dummytaskhelper] task dispatch:pool.get_all D:c9ab7c15ce3a created by task D:add1819f775b Jul 26 16:37:10 ovbh-pprod-xen10 xapi: [ info||12954 /var/lib/xcp/xapi|session.login_with_password D:eeb1f9d34c85|xapi_session] Session.create trackid=f06081966e03b8ea81e41bb411fc3576 pool=false uname=root originator=SM is_local_superuser=true auth_user_sid= parent=trackid=9834f5af41c964e225f24279aefe4e49 Jul 26 16:37:10 ovbh-pprod-xen10 xapi: [debug||12955 /var/lib/xcp/xapi||dummytaskhelper] task dispatch:pool.get_all D:334fc8bfbd81 created by task D:eeb1f9d34c85 Jul 26 16:37:12 ovbh-pprod-xen10 xapi: [debug||12961 HTTPS 10.2.0.5->:::80|host.call_plugin R:ccb65336b597|audit] Host.call_plugin host = '28ae0fc6-f725-4ebc-bf61-1816f1f60e72 (ovbh-pprod-xen12)'; plugin = 'updater.py'; fn = 'check_update' args = [ 'hidden' ] Jul 26 16:37:12 ovbh-pprod-xen10 xapi: [ info||12961 HTTPS 10.2.0.5->:::80|host.call_plugin R:ccb65336b597|xapi_session] Session.create trackid=fa887265fb5b0a83289b5f3bb40d3983 pool=true uname= originator=xapi is_local_superuser=true auth_user_sid= parent=trackid=e8c14c89e4b0bc5c3ecf2f0ddf6faa88 
Jul 26 16:37:12 ovbh-pprod-xen10 xapi: [debug||12962 /var/lib/xcp/xapi||dummytaskhelper] task dispatch:pool.get_all D:e886ff38b224 created by task R:ccb65336b597 Jul 26 16:37:12 ovbh-pprod-xen10 xapi: [debug||12961 HTTPS 10.2.0.5->:::80|host.call_plugin R:ccb65336b597|xmlrpc_client] stunnel pid: 4621 (cached = true) connected to 10.2.0.21:443 Jul 26 16:37:12 ovbh-pprod-xen10 xapi: [debug||12961 HTTPS 10.2.0.5->:::80|host.call_plugin R:ccb65336b597|xmlrpc_client] with_recorded_stunnelpid task_opt=OpaqueRef:ccb65336-b597-49c1-9545-e278e9a59518 s_pid=4621 Jul 26 16:37:12 ovbh-pprod-xen10 xapi: [debug||12961 HTTPS 10.2.0.5->:::80|host.call_plugin R:ccb65336b597|xmlrpc_client] stunnel pid: 4621 (cached = true) returned stunnel to cache Jul 26 16:37:12 ovbh-pprod-xen10 xapi: [ info||12961 HTTPS 10.2.0.5->:::80|host.call_plugin R:ccb65336b597|xapi_session] Session.destroy trackid=fa887265fb5b0a83289b5f3bb40d3983 Jul 26 16:37:13 ovbh-pprod-xen10 xapi: [debug||818 :::80||dummytaskhelper] task dispatch:event.from D:375adb402468 created by task D:095c828033c5 Jul 26 16:37:15 ovbh-pprod-xen10 xapi: [ info||12965 HTTPS 10.2.0.5->:::80|host.get_servertime D:1ead2d4f30f6|xapi_session] Session.create trackid=7846fad60d9b0bef8c545406123f6ab1 pool=true uname= originator=xapi is_local_superuser=true auth_user_sid= parent=trackid=e8c14c89e4b0bc5c3ecf2f0ddf6faa88 Jul 26 16:37:15 ovbh-pprod-xen10 xapi: [debug||12966 /var/lib/xcp/xapi||dummytaskhelper] task dispatch:pool.get_all D:e0ba66373f7f created by task D:1ead2d4f30f6 Jul 26 16:37:15 ovbh-pprod-xen10 xapi: [debug||12965 HTTPS 10.2.0.5->:::80|host.get_servertime D:1ead2d4f30f6|xmlrpc_client] stunnel pid: 4648 (cached = true) connected to 10.2.0.21:443 Jul 26 16:37:15 ovbh-pprod-xen10 xapi: [debug||12965 HTTPS 10.2.0.5->:::80|host.get_servertime D:1ead2d4f30f6|xmlrpc_client] with_recorded_stunnelpid task_opt=None s_pid=4648 Jul 26 16:37:15 ovbh-pprod-xen10 xapi: [debug||12965 HTTPS 10.2.0.5->:::80|host.get_servertime 
D:1ead2d4f30f6|xmlrpc_client] stunnel pid: 4648 (cached = true) returned stunnel to cache Jul 26 16:37:15 ovbh-pprod-xen10 xapi: [ info||12965 HTTPS 10.2.0.5->:::80|host.get_servertime D:1ead2d4f30f6|xapi_session] Session.destroy trackid=7846fad60d9b0bef8c545406123f6ab1 Jul 26 16:37:15 ovbh-pprod-xen10 xapi: [ info||12967 HTTPS 10.2.0.5->:::80|task.create D:f7b8730a55b6|taskhelper] task Xapi#getResource /rrd_updates R:051d27e09482 (uuid:17cac94d-85fd-f9e4-adeb-b129582ebd88) created (trackid=e8c14c89e4b0bc5c3ecf2f0ddf6faa88) by task D:f7b8730a55b6 Jul 26 16:37:19 ovbh-pprod-xen10 xapi: [debug||12893 /var/lib/xcp/xapi|pool.enable_ha R:91a5f9c321fc|helpers] /opt/xensource/bin/static-vdis attach 5ca46fd4-c315-46c9-8272-5192118e33a9 exited with code 1 [stdout = ''; stderr = 'Traceback (most recent call last):\x0A File "/opt/xensource/bin/static-vdis", line 377, in <module>\x0A path = attach(sys.argv[2])\x0A File "/opt/xensource/bin/static-vdis", line 288, in attach\x0A path = call_backend_attach(driver, config)\x0A File "/opt/xensource/bin/static-vdis", line 226, in call_backend_attach\x0A xmlrpc = xmlrpclib.loads(xml[1])\x0A File "/usr/lib64/python2.7/xmlrpclib.py", line 1138, in loads\x0A return u.close(), u.getmethodname()\x0A File "/usr/lib64/python2.7/xmlrpclib.py", line 794, in close\x0A raise Fault(**self._stack[0])\x0Axmlrpclib.Fault: <Fault 47: 'The SR is not available [opterr=Unable to attach from config]'>\x0A'] Jul 26 16:37:19 ovbh-pprod-xen10 xapi: [error||12893 /var/lib/xcp/xapi|pool.enable_ha R:91a5f9c321fc|xapi_ha] Caught exception attaching statefile: INTERNAL_ERROR: [ Subprocess exited with unexpected code 1; stdout = [ ]; stderr = [ Traceback (most recent call last):\x0A File "/opt/xensource/bin/static-vdis", line 377, in <module>\x0A path = attach(sys.argv[2])\x0A File "/opt/xensource/bin/static-vdis", line 288, in attach\x0A path = call_backend_attach(driver, config)\x0A File "/opt/xensource/bin/static-vdis", line 226, in call_backend_attach\x0A 
xmlrpc = xmlrpclib.loads(xml[1])\x0A File "/usr/lib64/python2.7/xmlrpclib.py", line 1138, in loads\x0A return u.close(), u.getmethodname()\x0A File "/usr/lib64/python2.7/xmlrpclib.py", line 794, in close\x0A raise Fault(**self._stack[0])\x0Axmlrpclib.Fault: <Fault 47: 'The SR is not available [opterr=Unable to attach from config]'>\x0A ] ] Jul 26 16:37:19 ovbh-pprod-xen10 xapi: [ info||12893 /var/lib/xcp/xapi|pool.enable_ha R:91a5f9c321fc|static_vdis] permanent_vdi_detach: vdi = OpaqueRef:f352e1aa-bdb7-449b-9291-808331b2f070; sr = OpaqueRef:41cd1a0c-a388-494a-a94d-35ebad53d283 Jul 26 16:37:19 ovbh-pprod-xen10 xapi: [debug||12893 /var/lib/xcp/xapi|pool.enable_ha R:91a5f9c321fc|helpers] about to call script: /opt/xensource/bin/static-vdis Jul 26 16:37:19 ovbh-pprod-xen10 xapi: [debug||12893 /var/lib/xcp/xapi|pool.enable_ha R:91a5f9c321fc|helpers] /opt/xensource/bin/static-vdis detach 5ca46fd4-c315-46c9-8272-5192118e33a9 succeeded [ output = '' ] Jul 26 16:37:19 ovbh-pprod-xen10 xapi: [debug||12893 /var/lib/xcp/xapi|pool.enable_ha R:91a5f9c321fc|helpers] about to call script: /opt/xensource/bin/static-vdis Jul 26 16:37:20 ovbh-pprod-xen10 xapi: [debug||12893 /var/lib/xcp/xapi|pool.enable_ha R:91a5f9c321fc|helpers] /opt/xensource/bin/static-vdis del 5ca46fd4-c315-46c9-8272-5192118e33a9 succeeded [ output = '' ] Jul 26 16:37:20 ovbh-pprod-xen10 xapi: [debug||12893 /var/lib/xcp/xapi|pool.enable_ha R:91a5f9c321fc|xapi_ha] Caught exception while enabling HA: VDI_NOT_AVAILABLE: [ OpaqueRef:f352e1aa-bdb7-449b-9291-808331b2f070 ] Jul 26 16:37:20 ovbh-pprod-xen10 xapi: [error||12893 /var/lib/xcp/xapi||backtrace] pool.enable_ha R:91a5f9c321fc failed with exception Server_error(VDI_NOT_AVAILABLE, [ OpaqueRef:f352e1aa-bdb7-449b-9291-808331b2f070 ]) Jul 26 16:37:20 ovbh-pprod-xen10 xapi: [error||12893 /var/lib/xcp/xapi||backtrace] Raised Server_error(VDI_NOT_AVAILABLE, [ OpaqueRef:f352e1aa-bdb7-449b-9291-808331b2f070 ]) Jul 26 16:37:20 ovbh-pprod-xen10 xapi: [error||12893 
/var/lib/xcp/xapi||backtrace] 1/12 xapi Raised at file ocaml/xapi/xapi_ha.ml, line 1196 Jul 26 16:37:20 ovbh-pprod-xen10 xapi: [error||12893 /var/lib/xcp/xapi||backtrace] 2/12 xapi Called from file ocaml/xapi/xapi_ha.ml, line 1811 Jul 26 16:37:20 ovbh-pprod-xen10 xapi: [error||12893 /var/lib/xcp/xapi||backtrace] 3/12 xapi Called from file ocaml/xapi/xapi_ha.ml, line 1929 Jul 26 16:37:20 ovbh-pprod-xen10 xapi: [error||12893 /var/lib/xcp/xapi||backtrace] 4/12 xapi Called from file lib/xapi-stdext-pervasives/pervasiveext.ml, line 24 Jul 26 16:37:20 ovbh-pprod-xen10 xapi: [error||12893 /var/lib/xcp/xapi||backtrace] 5/12 xapi Called from file lib/xapi-stdext-pervasives/pervasiveext.ml, line 35 Jul 26 16:37:20 ovbh-pprod-xen10 xapi: [error||12893 /var/lib/xcp/xapi||backtrace] 6/12 xapi Called from file lib/xapi-stdext-pervasives/pervasiveext.ml, line 24 Jul 26 16:37:20 ovbh-pprod-xen10 xapi: [error||12893 /var/lib/xcp/xapi||backtrace] 7/12 xapi Called from file ocaml/xapi/rbac.ml, line 231 Jul 26 16:37:20 ovbh-pprod-xen10 xapi: [error||12893 /var/lib/xcp/xapi||backtrace] 8/12 xapi Called from file ocaml/xapi/server_helpers.ml, line 103 Jul 26 16:37:20 ovbh-pprod-xen10 xapi: [error||12893 /var/lib/xcp/xapi||backtrace] 9/12 xapi Called from file ocaml/xapi/server_helpers.ml, line 121 Jul 26 16:37:20 ovbh-pprod-xen10 xapi: [error||12893 /var/lib/xcp/xapi||backtrace] 10/12 xapi Called from file lib/xapi-stdext-pervasives/pervasiveext.ml, line 24 Jul 26 16:37:20 ovbh-pprod-xen10 xapi: [error||12893 /var/lib/xcp/xapi||backtrace] 11/12 xapi Called from file lib/xapi-stdext-pervasives/pervasiveext.ml, line 35 Jul 26 16:37:20 ovbh-pprod-xen10 xapi: [error||12893 /var/lib/xcp/xapi||backtrace] 12/12 xapi Called from file lib/backtrace.ml, line 177 Jul 26 16:37:20 ovbh-pprod-xen10 xapi: [error||12893 /var/lib/xcp/xapi||backtrace] Jul 26 16:37:20 ovbh-pprod-xen10 xapi: [ info||12893 /var/lib/xcp/xapi|session.logout D:0869db223ebc|xapi_session] Session.destroy 
trackid=aaadbd98bb0d2da814837704c59da59e Jul 26 16:37:20 ovbh-pprod-xen10 xapi: [error||12893 /var/lib/xcp/xapi||cli] Converting exception VDI_NOT_AVAILABLE: [ OpaqueRef:f352e1aa-bdb7-449b-9291-808331b2f070 ] into a CLI response Jul 26 16:37:20 ovbh-pprod-xen10 xapi: [error||12893 /var/lib/xcp/xapi||backtrace] Raised Server_error(VDI_NOT_AVAILABLE, [ OpaqueRef:f352e1aa-bdb7-449b-9291-808331b2f070 ]) Jul 26 16:37:20 ovbh-pprod-xen10 xapi: [error||12893 /var/lib/xcp/xapi||backtrace] 1/1 xapi Raised at file (Thread 12893 has no backtrace table. Was with_backtraces called?, line 0 Jul 26 16:37:20 ovbh-pprod-xen10 xapi: [error||12893 /var/lib/xcp/xapi||backtrace]