Importing OVA - “Device Already Exists”

-

I’m really new to XCP-ng and XO, but I’m loving it so far. Every VM I’ve migrated from ESXi has worked flawlessly except one. Whenever I try to import its OVA, the import errors out and the logs say “device already exists”, even though it still creates all of the VM’s virtual hard drives. I’m at a loss as to what is actually going on. Can anybody point me in the right direction?

-

I suspect it's due to a similar MAC address

Do you have a more complete log in Settings/logs?

-

@olivierlambert

I didn't see your reply, my apologies. Here is what the log says:

HTTP handler of vm.import undefined { "code": "DEVICE_ALREADY_EXISTS", "params": [ "0" ], "call": { "method": "VBD.create", "params": [ { "bootable": false, "empty": false, "mode": "RW", "other_config": {}, "qos_algorithm_params": {}, "qos_algorithm_type": "", "type": "Disk", "unpluggable": false, "userdevice": "0", "VDI": "OpaqueRef:ceb1f386-f16f-4730-a4b6-bf2a48ce0e1b", "VM": "OpaqueRef:443efe69-7025-4a33-adab-51818258af24" } ] }, "message": "DEVICE_ALREADY_EXISTS(0)", "name": "XapiError", "stack": "XapiError: DEVICE_ALREADY_EXISTS(0) at Function.wrap (/opt/xo/xo-builds/xen-orchestra-202205260849/packages/xen-api/src/_XapiError.js:16:12) at /opt/xo/xo-builds/xen-orchestra-202205260849/packages/xen-api/src/transports/json-rpc.js:37:27 at AsyncResource.runInAsyncScope (node:async_hooks:202:9) at cb (/opt/xo/xo-builds/xen-orchestra-202205260849/node_modules/bluebird/js/release/util.js:355:42) at tryCatcher (/opt/xo/xo-builds/xen-orchestra-202205260849/node_modules/bluebird/js/release/util.js:16:23) at Promise._settlePromiseFromHandler (/opt/xo/xo-builds/xen-orchestra-202205260849/node_modules/bluebird/js/release/promise.js:547:31) at Promise._settlePromise (/opt/xo/xo-builds/xen-orchestra-202205260849/node_modules/bluebird/js/release/promise.js:604:18) at Promise._settlePromise0 (/opt/xo/xo-builds/xen-orchestra-202205260849/node_modules/bluebird/js/release/promise.js:649:10) at Promise._settlePromises (/opt/xo/xo-builds/xen-orchestra-202205260849/node_modules/bluebird/js/release/promise.js:729:18) at _drainQueueStep (/opt/xo/xo-builds/xen-orchestra-202205260849/node_modules/bluebird/js/release/async.js:93:12) at _drainQueue (/opt/xo/xo-builds/xen-orchestra-202205260849/node_modules/bluebird/js/release/async.js:86:9) at Async._drainQueues (/opt/xo/xo-builds/xen-orchestra-202205260849/node_modules/bluebird/js/release/async.js:102:5) at Immediate.Async.drainQueues [as _onImmediate] (/opt/xo/xo-builds/xen-orchestra-202205260849/node_modules/bluebird/js/release/async.js:15:14) at processImmediate (node:internal/timers:466:21) at process.callbackTrampoline (node:internal/async_hooks:130:17)" }

This VM has (unfortunately) 10 hard drives and 2 NICs. I can't see anywhere in XO where I can specify the MAC address when I'm importing the VM.
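For anyone hitting the same message: the failing call in this log is XAPI's `VBD.create`, and the error parameter (`"0"`) matches the `userdevice` requested in that call. `DEVICE_ALREADY_EXISTS` means the VM already had a VBD at that position, so the import appears to have tried to attach two disks to the same slot. A minimal sketch that pulls those fields out of a pasted log line, assuming the line keeps the `HTTP handler of vm.import undefined { ... }` shape shown above (the function name `conflicting_userdevice` is hypothetical):

```python
import json


def conflicting_userdevice(log_line: str) -> dict:
    """Extract the XAPI error code, the rejected device and the requested
    userdevice from an XO 'HTTP handler of vm.import' log line.

    Assumes the line contains one JSON error object, as in the logs in this
    thread. strict=False tolerates raw line breaks inside the "stack" string,
    and raw_decode ignores any text pasted after the closing brace.
    """
    start = log_line.index("{")  # the first brace opens the error object
    error, _end = json.JSONDecoder(strict=False).raw_decode(log_line[start:])
    vbd_args = error["call"]["params"][0]  # the record passed to VBD.create
    return {
        "code": error["code"],
        "method": error["call"]["method"],
        "rejected": error["params"][0],
        "userdevice": vbd_args["userdevice"],
    }
```

If `rejected` and `userdevice` agree, the importer asked for a disk slot that was already taken on that VM. That is an inference from the shape of the log, not a confirmed root cause.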

-

So it seems there's an issue when there are this many drives. We'd like private access to this OVA to try to see what's going on.

-

@olivierlambert I sent you a PM

-

Any update on this? Just wanted to see if you found a solution. I have an OVA from ESXi with 6 disks and 3 NICs, and I'm getting basically the exact same error.

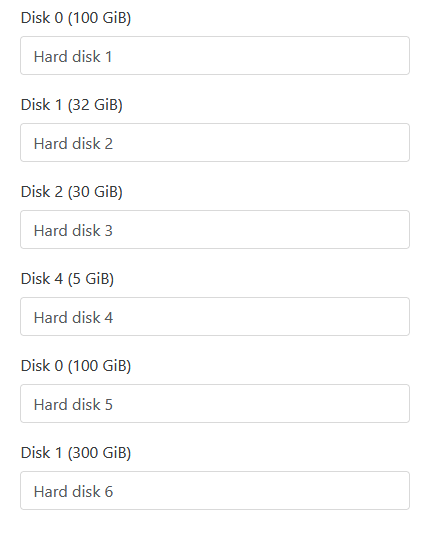

However, mine only creates 3 of the 6 requested drives. It also seems to think there are duplicate drives, or something along those lines (see image).
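One way to test the "duplicate drives" theory before importing is to inspect the OVA's `.ovf` descriptor. In an OVF 1.x descriptor (the kind VMware's tooling typically writes), each virtual disk is an `Item` whose `rasd:ResourceType` is 17, `rasd:Parent` points at its controller, and `rasd:AddressOnParent` is its slot on that controller. Disks on different controllers can legitimately share an `AddressOnParent` (for example, two disks both at 0). If an importer mapped that value straight to a single per-VM position, those disks would collide; that is an assumption about the importer, not something confirmed in this thread. A minimal sketch that lists shared positions (function names are hypothetical; OVF 2.0 descriptors may describe disks as `StorageItem` elements instead, which this does not handle):

```python
import xml.etree.ElementTree as ET
from collections import defaultdict

OVF_NS = "http://schemas.dmtf.org/ovf/envelope/1"
RASD_NS = "http://schemas.dmtf.org/wbem/wscim/1/cim-schema/2/CIM_ResourceAllocationSettingData"
DISK_RESOURCE_TYPE = "17"  # CIM ResourceType value for a disk drive


def _rasd(item, tag, default="?"):
    """Return the stripped text of a rasd:<tag> child, or a placeholder."""
    value = item.findtext(f"{{{RASD_NS}}}{tag}")
    return value.strip() if value else default


def disk_positions(ovf_xml: str) -> dict:
    """Map each AddressOnParent to the (controller, disk InstanceID) pairs using it."""
    root = ET.fromstring(ovf_xml)
    positions = defaultdict(list)
    for item in root.iter(f"{{{OVF_NS}}}Item"):
        if _rasd(item, "ResourceType") != DISK_RESOURCE_TYPE:
            continue  # skip controllers, NICs, CPUs, memory, etc.
        positions[_rasd(item, "AddressOnParent")].append(
            (_rasd(item, "Parent"), _rasd(item, "InstanceID"))
        )
    return dict(positions)


def shared_positions(ovf_xml: str) -> dict:
    """Keep only the positions claimed by more than one disk."""
    return {addr: disks for addr, disks in disk_positions(ovf_xml).items() if len(disks) > 1}
```

A non-empty result from `shared_positions` would be consistent with the symptoms described here, but it does not prove that this is what the importer trips over.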

-

Please provide the exact error you get.

-

@olivierlambert Yeah no problem!

HTTP handler of vm.import undefined { "code": "DEVICE_ALREADY_EXISTS", "params": [ "1" ], "call": { "method": "VBD.create", "params": [ { "bootable": false, "empty": false, "mode": "RW", "other_config": {}, "qos_algorithm_params": {}, "qos_algorithm_type": "", "type": "Disk", "unpluggable": false, "userdevice": "1", "VDI": "OpaqueRef:f0f82221-4a05-408d-9fab-1fbfc3c451b8", "VM": "OpaqueRef:c3c00d45-b356-49a2-9608-29e423ccaace" } ] }, "message": "DEVICE_ALREADY_EXISTS(1)", "name": "XapiError", "stack": "XapiError: DEVICE_ALREADY_EXISTS(1) at Function.wrap (/usr/local/lib/node_modules/xo-server/node_modules/xen-api/src/_XapiError.js:16:12) at /usr/local/lib/node_modules/xo-server/node_modules/xen-api/src/transports/json-rpc.js:37:27 at AsyncResource.runInAsyncScope (node:async_hooks:201:9) at cb (/usr/local/lib/node_modules/xo-server/node_modules/bluebird/js/release/util.js:355:42) at tryCatcher (/usr/local/lib/node_modules/xo-server/node_modules/bluebird/js/release/util.js:16:23) at Promise._settlePromiseFromHandler (/usr/local/lib/node_modules/xo-server/node_modules/bluebird/js/release/promise.js:547:31) at Promise._settlePromise (/usr/local/lib/node_modules/xo-server/node_modules/bluebird/js/release/promise.js:604:18) at Promise._settlePromise0 (/usr/local/lib/node_modules/xo-server/node_modules/bluebird/js/release/promise.js:649:10) at Promise._settlePromises (/usr/local/lib/node_modules/xo-server/node_modules/bluebird/js/release/promise.js:729:18) at _drainQueueStep (/usr/local/lib/node_modules/xo-server/node_modules/bluebird/js/release/async.js:93:12) at _drainQueue (/usr/local/lib/node_modules/xo-server/node_modules/bluebird/js/release/async.js:86:9) at Async._drainQueues (/usr/local/lib/node_modules/xo-server/node_modules/bluebird/js/release/async.js:102:5) at Immediate.Async.drainQueues [as _onImmediate] (/usr/local/lib/node_modules/xo-server/node_modules/bluebird/js/release/async.js:15:14) at processImmediate (node:internal/timers:466:21) at process.callbackTrampoline (node:internal/async_hooks:130:17)" }

-

@planedrop I ended up importing all the drives separately, then creating a VM and attaching the drives to it manually. It worked really well, to be honest. I remember having to mess around with it a lot. I can’t remember whether I had to convert the drives to a different format, but I’m leaning towards yes.
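When disks are imported separately and attached by hand, each VBD on the VM needs its own `userdevice` value; reusing one produces the same `DEVICE_ALREADY_EXISTS` error seen above. On a live host, XAPI's `VM.get_allowed_VBD_devices` reports which values are still free for a given VM. The sketch below only models that bookkeeping in plain Python and does not talk to XAPI; the disk labels, the function name `plan_userdevices` and the default slot limit of 16 are assumptions for illustration (the real limit depends on the host and guest).

```python
def plan_userdevices(disks, used, max_devices=16):
    """Give each disk the lowest free userdevice slot, as a string like XAPI uses.

    disks: hypothetical disk labels, in the order they should be attached
    used: userdevice values already taken on the VM
    max_devices: assumed upper bound on slots to consider
    """
    taken = set(used)
    plan = {}
    for disk in disks:
        # First slot number, counting from 0, that no VBD is using yet.
        slot = next((str(n) for n in range(max_devices) if str(n) not in taken), None)
        if slot is None:
            raise ValueError(f"no free userdevice slot left for {disk}")
        taken.add(slot)
        plan[disk] = slot
    return plan
```

For example, with slots `"0"` and `"3"` already in use, three new disks would be planned onto `"1"`, `"2"` and `"4"`.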

-

@isd94 My recollection is that to support 4 or more drives, XenTools has to be installed on the VM.

HTH,

-=Tobias

-

@isd94 Hmmmm, I'll consider giving this a shot. However, my project here is validating a special piece of software with a vendor who may require that the OVA be used as part of the setup, so it would be great if that were possible.

-

@tjkreidl Interesting, I'll do some digging to see if I can find any concrete info on this. Even if that were the case, though, it feels like the VM should still import fine and maybe just not work right after booting, right? The tools aren't even loaded until boot, so the import should still be able to create the VHDs and attach them to the VM.

-

@planedrop Once the VM is up and has XenTools installed, you should be able to add a lot of disks to it. As I recall, I ran some VMs with at least 6 or 8 VDIs. For fun, I think I added around 20 to a test VM!

-

@tjkreidl This is good to know, but it doesn't really help when I'm trying to import an OVA from a vendor.

@olivierlambert any suggestions here? I can submit an official support request if needed; I just thought it'd be better to post this on the forums first. It is for a production environment, though, and we have been paying for support, so I'm happy to go that route if needed!

I may also give the manual conversion to VHD format a shot and import those disks instead, like @isd94 ended up doing.
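An OVA is a tar archive holding the `.ovf` descriptor plus the disk images (usually `.vmdk` files when exported from VMware). A minimal sketch of the manual route, assuming `qemu-img` is installed on the machine doing the conversion and the OVA has already been extracted into the working directory: list the VMDKs inside the OVA with Python's `tarfile`, then build one `qemu-img convert` command per disk targeting the `vpc` format (qemu-img's name for VHD). The output file naming and the function names are hypothetical; the script only prints the commands so they can be reviewed before running.

```python
import shlex
import tarfile
from pathlib import PurePosixPath


def vmdk_members(ova_path):
    """Return the names of the .vmdk disk images stored in an OVA (tar) archive."""
    with tarfile.open(ova_path) as ova:
        return [m.name for m in ova.getmembers() if m.name.lower().endswith(".vmdk")]


def convert_commands(vmdk_names, out_dir="."):
    """Build one qemu-img command per disk: VMDK in, dynamic VHD ("vpc") out."""
    commands = []
    for name in vmdk_names:
        target = PurePosixPath(out_dir) / (PurePosixPath(name).stem + ".vhd")
        commands.append([
            "qemu-img", "convert",
            "-f", "vmdk",               # source format
            "-O", "vpc",                # qemu-img calls the VHD format "vpc"
            "-o", "subformat=dynamic",  # thin (dynamic) VHD rather than fixed
            name, str(target),
        ])
    return commands


if __name__ == "__main__":
    import sys

    # Usage: python ova_to_vhd.py vendor.ova  (file names are hypothetical)
    for cmd in convert_commands(vmdk_members(sys.argv[1])):
        print(shlex.join(cmd))  # review, then run after extracting the OVA
```

The resulting VHDs could then be imported as individual disks and attached to a new VM, which is roughly the workaround described earlier in this thread.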

-

@planedrop are you importing this OVA from VMware out of curiosity?

-

@olivierlambert Yes, that's correct. It's an OVA exported from VMware using their CLI-based OVF Tool (since you can't do it in the GUI anymore). We are working with a vendor to validate their platform on XCP-ng so we can hopefully ditch VMware and put everything on XCP-ng, which is where the rest of our VMs reside.

-

Would you like to participate in a very early beta test of our new capability to import VMs directly from VMware to XCP-ng through the VMware API (using Xen Orchestra)?

-

@olivierlambert I would be interested, but this is a production environment, so what are the risks? Does it require a beta version of XOA to be installed?

Thanks for the help, by the way. We aren't in a major rush, so I don't mind tinkering a bit; I just can't have anything cause stability issues.

-

Please open a ticket so we can look in more depth at what the best options would be.

-

@olivierlambert Will do! I'll try to do that today, though it might be next week; it's been a busy week at work so far lol.

Thanks!