NVIDIA Tesla P4 for vGPU and Plex encoding

-

Someone recently managed to get NVIDIA vGPU working, so it should work, but I'm not comfortable giving all the details publicly, since it's not legal to redistribute or use the proprietary packages.

In my opinion, the future is mediated devices, using VFIO or something similar. And good news: as part of our DPU work, we are building an equivalent of VFIO for Xen, so the solution might come from there.

-

@olivierlambert could you give me his contact, please? I will reach out to him privately.

-

Finally, after a week, I found the solution!

There is no problem with emu-manager.

XCP-ng does not contain the necessary `vgpu` package.

I copied `vgpu` from the Citrix ISO and now it is alive! :)

-

Ah, great!

But I think our EMU manager won't work. Can you confirm you are still using the Citrix one?

-

Steps I took to make NVIDIA vGPU work:

- Install XCP-ng 8.2.1

- Install all updates with `yum update`, then `reboot`

- Download the NVIDIA vGPU drivers for XenServer 8.2 from the NVIDIA site. Version: NVIDIA-GRID-CitrixHypervisor-8.2-510.108.03-513.91

- Unzip and install the RPM from Host-Drivers, then `reboot` again

- Download the free CitrixHypervisor-8.2.0-install-cd.iso from the Citrix site

- Open CitrixHypervisor-8.2.0-install-cd.iso with 7-Zip, then extract the `vgpu` binary from Packages -> vgpu....rpm -> vgpu....cpio -> . -> usr -> lib64 -> xen -> bin

- Upload `vgpu` to the XCP-ng host at `/usr/lib64/xen/bin` and make it executable: `chmod +x /usr/lib64/xen/bin/vgpu`

- Deploy a VM with a vGPU: it started without any problems
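If you would rather do the extract and upload steps above from a Linux shell instead of 7-Zip, here is a minimal sketch. It assumes `rpm2cpio` and `cpio` are installed, that you pass in the path to the `vgpu` RPM from the ISO's Packages directory yourself (its exact file name is not given here), and that the binary sits at `./usr/lib64/xen/bin/vgpu` inside the payload, as the 7-Zip path suggests. The function names are hypothetical helpers, not part of XCP-ng or the NVIDIA package.

```shell
#!/usr/bin/env bash
# Hypothetical helpers sketching the manual extract/upload steps; not an
# official XCP-ng or NVIDIA tool.

# Extract only ./usr/lib64/xen/bin/vgpu from the vgpu RPM into WORKDIR and
# print the path of the extracted file.
extract_vgpu_from_rpm() {
  local rpm workdir=$2
  rpm=$(readlink -f "$1") || return 1   # absolute path, since we cd below
  mkdir -p "$workdir" || return 1
  # rpm2cpio writes the RPM payload as a cpio archive; cpio -i extracts only
  # members matching the pattern, -d creates directories, -m keeps mtimes.
  ( cd "$workdir" && rpm2cpio "$rpm" | cpio -idm './usr/lib64/xen/bin/vgpu' ) || return 1
  [ -f "$workdir/usr/lib64/xen/bin/vgpu" ] || return 1
  echo "$workdir/usr/lib64/xen/bin/vgpu"
}

# Install the binary with mode 0755 so it ends up executable, which is what
# `chmod +x` achieved in the manual steps. DEST_DIR defaults to the path
# used in the post.
install_vgpu_binary() {
  local src=$1 dest_dir=${2:-/usr/lib64/xen/bin}
  [ -f "$src" ] || { echo "vgpu binary not found: $src" >&2; return 1; }
  install -m 0755 "$src" "$dest_dir/vgpu"
}
```

Usage on the host, as root: `bin=$(extract_vgpu_from_rpm /path/to/vgpu-rpm /tmp/vgpu-extract) && install_vgpu_binary "$bin"`. Using `install -m 0755` copies the file and sets the executable bits in one step.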

So I did not make any modifications to emu-manager.

My test server is far away from me, and it will take some time to download the Windows ISO to that location. Then I will check how it works in the guest OS and report back here.

-

@splastunov Will this be hampered by any licensing issues? To my understanding, NVIDIA vGPU requires a license per user per GPU to work properly. Or is this not the case on Xen?

-

@wyatt-made I need a few days to test it.

I will report back here later.

-

@wyatt-made Yeah, you need not only licenses for the hosts and any VMs running on them, but you also have to run a custom NVIDIA license manager.

-

Mediated devices will be a game changer… I'm eager to show our DPU results; that will be the start of it. Some reading on the potential: https://arccompute.com/blog/libvfio-commodity-gpu-multiplexing/

-

No luck yet...

I found 2 configs:

- `/usr/share/nvidia/vgpu/vgpuConfig.xml`

It seems that `nvidia-vgpud.service` uses this config at startup to generate the vGPU types.

You can get the list of vGPU types with the command `nvidia-smi vgpu -s`. My output is:

```
GPU 00000000:81:00.0
    GRID T4-1B
    GRID T4-2B
    GRID T4-2B4
    GRID T4-1Q
    GRID T4-2Q
    GRID T4-4Q
    GRID T4-8Q
    GRID T4-16Q
    GRID T4-1A
    GRID T4-2A
    GRID T4-4A
    GRID T4-8A
    GRID T4-16A
    GRID T4-1B4
GPU 00000000:C1:00.0
    GRID T4-1B
    GRID T4-2B
    GRID T4-2B4
    GRID T4-1Q
    GRID T4-2Q
    GRID T4-4Q
    GRID T4-8Q
    GRID T4-16Q
    GRID T4-1A
    GRID T4-2A
    GRID T4-4A
    GRID T4-8A
    GRID T4-16A
    GRID T4-1B4
```

- The set of configs is located in `/usr/share/nvidia/vgx`. Here is the individual config for each type:

```
# ls -la | grep "grid_t4"
-r--r--r-- 1 root root 530 Oct 20 09:11 grid_t4-16a.conf
-r--r--r-- 1 root root 556 Oct 20 09:11 grid_t4-16q.conf
-r--r--r-- 1 root root 529 Oct 20 09:11 grid_t4-1a.conf
-r--r--r-- 1 root root 529 Oct 20 09:11 grid_t4-1b4.conf
-r--r--r-- 1 root root 529 Oct 20 09:11 grid_t4-1b.conf
-r--r--r-- 1 root root 555 Oct 20 09:11 grid_t4-1q.conf
-r--r--r-- 1 root root 528 Oct 20 09:11 grid_t4-2a.conf
-r--r--r-- 1 root root 528 Oct 20 09:11 grid_t4-2b4.conf
-r--r--r-- 1 root root 528 Oct 20 09:11 grid_t4-2b.conf
-r--r--r-- 1 root root 554 Dec 9 15:45 grid_t4-2q.conf
-r--r--r-- 1 root root 529 Oct 20 09:11 grid_t4-4a.conf
-r--r--r-- 1 root root 555 Oct 20 09:11 grid_t4-4q.conf
-r--r--r-- 1 root root 530 Oct 20 09:11 grid_t4-8a.conf
-r--r--r-- 1 root root 556 Oct 20 09:11 grid_t4-8q.conf
```

Now I'm trying to change the pci_id of the vGPU to make the guest OS "think" that the vGPU is a Quadro RTX 5000 (based on the same chip, TU104).

I played around with the configs, but without success.

Any change to the configs prevents the VM from starting, because XCP-ng cannot create the vGPU. In the guest OS, I tried installing different drivers manually, but the device does not start.
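Since every edit to these profile files left the VM unable to start, it helps to keep pristine copies to roll back to before experimenting. A minimal sketch, assuming the `/usr/share/nvidia/vgx` directory and the `grid_*.conf` naming from the listing in this post, plus a backup directory of your choosing; the function names are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helpers: snapshot and roll back the per-type vGPU profiles
# (e.g. /usr/share/nvidia/vgx/grid_t4-*.conf).

backup_vgx_configs() {
  local src_dir=$1 backup_dir=$2
  mkdir -p "$backup_dir" || return 1
  # -a keeps mode and timestamps (and ownership when run as root);
  # -f lets cp replace read-only copies left by an earlier backup.
  cp -af "$src_dir"/grid_*.conf "$backup_dir"/
}

restore_vgx_configs() {
  local backup_dir=$1 dest_dir=$2
  # The originals are r--r--r--; -f makes cp unlink a destination it cannot
  # open for writing and create it again from the backup.
  cp -af "$backup_dir"/grid_*.conf "$dest_dir"/
}
```

For example, `backup_vgx_configs /usr/share/nvidia/vgx /root/vgx-backup` before editing, and `restore_vgx_configs /root/vgx-backup /usr/share/nvidia/vgx` when the VM no longer starts.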

So I have 2 questions:

- Is there any option to make a change in some "raw" VM config (or something like this) to change the vGPU pci_id?

  I have tried exporting the metadata to XVA format, editing it, and importing it. But after the VM starts, it changes all the IDs back...

- Is it possible to create custom vgpu_types?

  `xe vgpu-type-list` shows that all types are RO (read-only). It seems they are generated when XCP boots.
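Since the read-only types seem to be derived from what the NVIDIA host driver reports, a quick way to see what each physical GPU offers, before comparing with `xe vgpu-type-list`, is to summarize the `nvidia-smi vgpu -s` output. A minimal sketch, assuming the output consists of an unindented `GPU <bus id>` line followed by one indented `GRID ...` line per supported type; the function name is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `nvidia-smi vgpu -s` output on stdin and print
# one line per physical GPU: "<bus id> <number of supported vGPU types>".
summarize_vgpu_types() {
  awk '
    /^GPU / { gpu = $2; order[++n] = gpu; count[gpu] = 0; next }
    /GRID / { if (gpu != "") count[gpu]++ }
    END     { for (i = 1; i <= n; i++) print order[i], count[order[i]] }
  '
}

# Usage on the host:
#   nvidia-smi vgpu -s | summarize_vgpu_types
```

With the two T4s in this post, each listing 14 types, it would print one line per GPU with a count of 14.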

-

@splastunov My guess is that it's not going to be possible to do any customizing since the GPU configuration types are managed by the NVIDIA drivers and applications, which incorporate specific types associated with each GPU model. As newer releases appear, sometimes this will change (such as with the introduction of the "B" configurations some years ago).

About the only close equivalent to a "raw" designation for a VM would be passthrough to that VM, but even then, you are still going to be restricted to defining some standard GPU type.

-

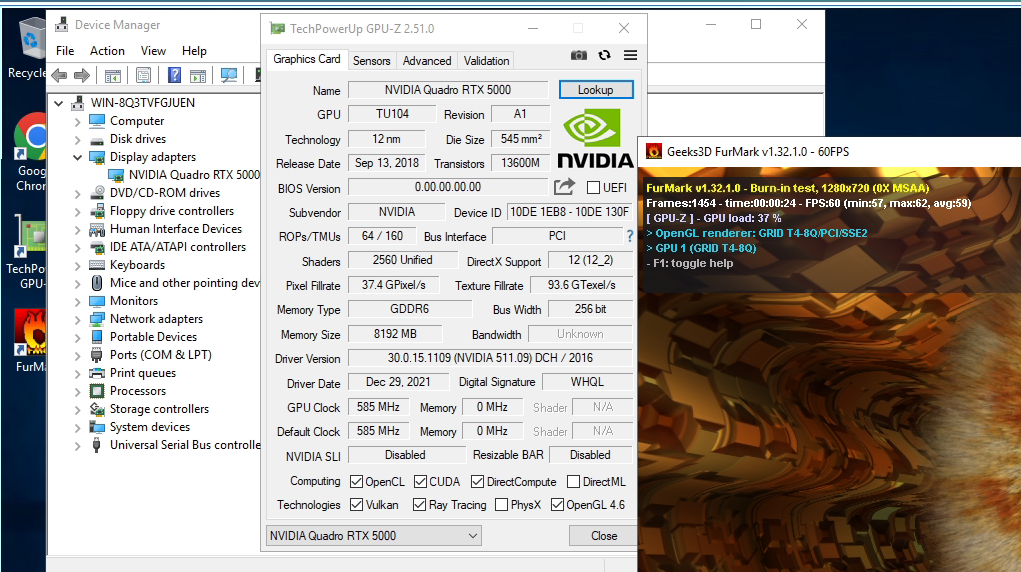

Haha, it finally works!!!

The problem was with the template I used to deploy the VM.

I first deployed a VM from the default Windows 2019 template, and it was not possible to install the GPU drivers. After that, I tried deploying the VM from the "other installation media" template, and now I can install any drivers.

To make it work with different benchmarks, I installed the Quadro RTX 5000 driver (from the consumer site).

The result is in the screenshot: about 60 FPS on average.

I think it is limited by the driver on the host.

As you can see, FurMark correctly detected the GPU as T4-8Q.

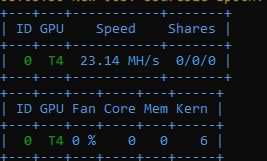

Result from an Ethereum Classic miner.

The miner detected the GPU as a T4 too.

No licenses are required :)

-

@splastunov Hmmm, not sure that will work for long for a P4 or T4 without an NVIDIA license. At some point, it will likely throttle down to a maximum of 3 FPS after the grace period expires.

Then again, you might get lucky.

-

Weird, something in the Windows template makes it fail? That's interesting information.

-


Hi everyone,

I'm also interested in using NVIDIA GRID with XCP-ng, because we have a cluster of 3 XCP-ng servers and now a new server with an NVIDIA A100 GPU. It would be great if I could use it in a new XCP-ng pool, because it's an excellent tool and we already have the know-how.

Our plan is to virtualize the A100 80 GB GPU so we can use it in various virtual machines, with "slices" of 10/20 GB, for compute tasks (AI, deep learning, etc.).

So I have two questions:

- Can the trick of copying this `vgpu` executable be dangerous when updating the XCP-ng server? Could it be overwritten, deleted or something?

- Do you plan to support NVIDIA vGPU soon? We can still use QEMU on Ubuntu or another Linux distribution with these drivers, and everything works OK, but XCP-ng is more professional than QEMU IMHO.
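Whether updates touch the hand-copied binary is not settled in this thread; `rpm -qf /usr/lib64/xen/bin/vgpu` will at least show whether any installed package claims that path. A cheap safeguard either way is to record the file's checksum once and re-check it after every `yum update`. A minimal sketch; the state-file location and the function names are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helpers: remember the checksum of the hand-copied vgpu binary
# and verify later (e.g. after `yum update`) that it is present and unchanged.

record_vgpu_checksum() {
  local bin=${1:-/usr/lib64/xen/bin/vgpu} state=${2:-/root/vgpu.sha256}
  # The state file stores the path as given, so pass an absolute path.
  sha256sum "$bin" > "$state"
}

verify_vgpu_checksum() {
  local state=${1:-/root/vgpu.sha256}
  # sha256sum -c re-reads the path stored in the state file and exits
  # non-zero if the file is missing or its contents changed.
  sha256sum -c --quiet "$state"
}
```

Run `record_vgpu_checksum` once after copying the binary, then `verify_vgpu_checksum || echo "vgpu binary missing or changed"` after each update.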

You are doing a great great job at Vates. Keep going!

Dani

-

@Dani I made a post yesterday about NVIDIA MiG support. vGPU support on XCP-ng is tricky because the proprietary piece of code that makes vGPU work can't be freely distributed. On the other hand, MiG (which is supported by many Ampere cards, such as the A100) doesn't require licensing like vGPU does and seemingly just creates PCI addresses for the card, which could, in theory, be passed through to VMs.

CC: @olivierlambert since we briefly talked about this yesterday in my thread.

-

@wyatt-made thanks a lot. What a quick response!!

I'll check your post.

My plan is to install XCP-ng on the A100 server next week and test it. I will post the results in the forum; maybe they will help @olivierlambert and the rest of the community.

Dani

-

I'd be interested to understand how MiG works and how far we are from a solution for it.

-

@splastunov is it still not asking for a license?

-

@hani it began asking for a license after one day, but without throttling.

I have switched to AMD GPUs.