Nvidia MiG Support

-

@splastunov

The license server is needed for the virtual machines to work, but the host driver has to work first. Once you have set up the hypervisor with the driver, you have to deploy the license server, which can run in a virtual machine on the same hypervisor, and then bind the virtual machines to it using tokens (it's a complicated process, by the way; I think NVIDIA made it too difficult). I've spent almost two days on this, so maybe I can't see the forest for the trees.

-

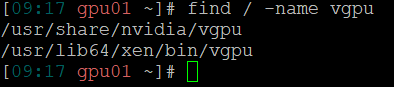

Do you have the vgpu binary file at /usr/lib64/xen/bin/vgpu?

-

@olivierlambert I don't have a commercial Citrix license, and I don't know whether an evaluation one exists.

Tomorrow I'll try to find some time to install Citrix Hypervisor 8.2 Express, which is free, although they say vGPU is only available in the Premium edition. We'll see.

-

@Dani when you have the Express version installed, please contact me in the chat; I might be able to help you with that.

-

@splastunov Yes, I do, but it doesn't work.

-

@Dani Strange. Is it executable? Did you try to follow my instructions step by step to make vGPU work?
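For the "is it executable?" question, a quick shell check like this one can answer both parts at once. It's only a sketch: check_exec is a made-up helper name, and it just tests whether the path exists and has the executable bit set; it says nothing about whether the binary actually works.

```shell
#!/bin/sh
# Hypothetical helper: report whether a file exists and is executable.
check_exec() {
    if [ ! -e "$1" ]; then
        echo "missing: $1"
    elif [ -x "$1" ]; then
        echo "executable: $1"
    else
        echo "not executable: $1"
    fi
}

# Path taken from the question earlier in the thread
check_exec /usr/lib64/xen/bin/vgpu
```

If it reports "not executable", `chmod +x` on the file would be the obvious next thing to try.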

-

@splastunov Yes, your steps are the same as mine, but I can see some differences.

The big difference is that you can see the NVIDIA GRID vGPU types in XCP-ng Center, but I can't.

I have two scenarios:

- With the Citrix drivers, I don't get any vGPU types in XCP-ng Center, so I can't assign them to virtual machines.
- With the RHEL drivers, XCP-ng Center shows vGPU types (with hex names, not commercial names), but the NVIDIA driver doesn't load (no nvidia-smi). If I try to assign one of the vGPUs to a virtual machine, it won't start and throws the error "can't rebind 0000:81:00.0 driver", which is the PCI ID of the whole card, not of the virtual GPU.

Maybe it's because of the GPU model? It doesn't work with the A100 but it does with yours? I don't know.

Looks like a dead end.

-

Now I've run another test using Citrix Hypervisor 8.2 Express edition.

Although Citrix says only the Premium edition supports NVIDIA vGPU, let's try it and see what happens.

IMPORTANT: This is for testing purposes only, because of this message in XenCenter:

"Citrix Hypervisor 8.2 has reached End of Life for express customers","Citrix Hypervisor 8.2 reached End of Life for express customers on Dec 13, 2021. You are no longer eligible for hotfixes released after this date. Please upgrade to the latest CR."

In fact, XenCenter doesn't allow you to install updates and throws a license error.

Test 6. Install Citrix Hypervisor 8.2 Express and the driver for Citrix Hypervisor "NVIDIA-GRID-CitrixHypervisor-8.2-525.105.14-525.105.17-528.89" (version 15.2 in the NVIDIA Licensing portal).

-

Install Citrix Hypervisor 8.2 Express

-

PCI device detected:

# lspci | grep -i nvidia
81:00.0 3D controller: NVIDIA Corporation Device 20b5 (rev a1)

-

xe host-param-get uuid=<uuid-of-your-server> param-name=chipset-info param-key=iommu

It returns true. OK.

-

XenCenter doesn't show the NVIDIA GPU because there is no "GPU" tab!

I think that's because this is the Express version, and the tab is only available in the Premium edition.

-

Install the Citrix driver and reboot:

rpm -iv NVIDIA-vGPU-CitrixHypervisor-8.2-525.105.14.x86_64.rpm

- List the loaded nvidia modules. i2c is missing. Bad sign.

# lsmod | grep nvidia
nvidia 56455168 19

- List the loaded vfio modules. Nothing. Bad sign.

# lsmod | grep vfio

- Check dmesg. This looks normal.

# dmesg | grep -E "NVRM|nvidia"
[ 4.490920] nvidia: module license 'NVIDIA' taints kernel.
[ 4.568618] nvidia-nvlink: Nvlink Core is being initialized, major device number 239
[ 4.570625] NVRM: PAT configuration unsupported.
[ 4.570702] nvidia 0000:81:00.0: enabling device (0000 -> 0002)
[ 4.619948] NVRM: loading NVIDIA UNIX x86_64 Kernel Module 525.105.14 Sat Mar 18 01:14:41 UTC 2023
[ 5.511797] NVRM: GPU at 0000:81:00.0 has software scheduler DISABLED with policy BEST_EFFORT.

-
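The two lsmod checks can be done in one pass by reading /proc/modules, which is where lsmod gets its data. This is a sketch under assumptions: module_loaded is a made-up helper name, and the module list (especially nvidia_vgpu_vfio and mdev) is borrowed from what a KVM-style vGPU host typically loads; it is not confirmed as the expected set for a Citrix Hypervisor or XCP-ng dom0.

```shell
#!/bin/sh
# Hypothetical helper: report whether a kernel module is currently loaded.
# /proc/modules lists one loaded module per line, with the name in column 1,
# the same information lsmod prints.
module_loaded() {
    if grep -q "^$1 " /proc/modules 2>/dev/null; then
        echo "loaded: $1"
    else
        echo "missing: $1"
    fi
}

# ASSUMPTION: module names taken from a typical KVM vGPU host; the set
# expected on a Citrix/XCP-ng dom0 may differ.
for m in nvidia nvidia_vgpu_vfio vfio vfio_iommu_type1 mdev; do
    module_loaded "$m"
done
```

Matching on the name followed by a space keeps "nvidia" from also matching "nvidia_vgpu_vfio".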

nvidia-smi

Normal output. Correct.

-

nvidia-smi -q

GPU Virtualization Mode
    Virtualization Mode : Host VGPU
    Host VGPU Mode      : SR-IOV   <-- GOOD

-

The script /usr/lib/nvidia/sriov-manage is present.

-

Enable virtual functions with /usr/lib/nvidia/sriov-manage -e ALL

If we now check dmesg | grep -E "NVRM|nvidia", we get the same errors as in test 3: probing the PCI IDs of the virtual functions fails with error -1.

Again, I think this is because /sys/class/mdev_bus doesn't exist.
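To see how far the SR-IOV step actually gets after running sriov-manage, something like the following could help. It's a sketch under assumptions: sriov_summary is a made-up helper name, and it relies on the standard Linux sysfs attributes sriov_totalvfs, sriov_numvfs and virtfnN under /sys/bus/pci/devices/<BDF>/, plus /sys/class/mdev_bus, which only exists when the kernel's mediated-device framework is present and a vendor driver has registered a parent device.

```shell
#!/bin/sh
# Hypothetical helper: summarize SR-IOV and mdev state for one PCI device.
sriov_summary() {
    dev="/sys/bus/pci/devices/$1"
    if [ ! -d "$dev" ]; then
        echo "no such device: $1"
        return 1
    fi
    # These attributes only exist on SR-IOV capable devices
    echo "total VFs:   $(cat "$dev/sriov_totalvfs" 2>/dev/null || echo n/a)"
    echo "enabled VFs: $(cat "$dev/sriov_numvfs" 2>/dev/null || echo n/a)"
    # Each enabled VF appears as a virtfnN symlink pointing at its own BDF
    for vf in "$dev"/virtfn*; do
        if [ -e "$vf" ]; then
            echo "  $(basename "$vf") -> $(basename "$(readlink "$vf")")"
        fi
    done
    # mdev-capable parent devices are listed here once registered
    if [ -d /sys/class/mdev_bus ]; then
        echo "mdev_bus: present"
    else
        echo "mdev_bus: absent"
    fi
}
```

Usage, with the A100 address from this thread: `sriov_summary 0000:81:00.0`. If the VFs are listed but mdev_bus is absent, that would be consistent with the VFIO/mdev explanation above.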

Quick recap: same problems as with XCP-ng. There are no VFIO mdev devices and no vGPU types in XenCenter, so we can't launch virtual machines with vGPUs.

Result of the test: FAIL.

-

Looks like we'll have to use Linux KVM on this server for now, which is not great for us (or for me as the BOFH), because we have another cluster running XCP-ng.

The thing that's f**king with my mind is that in other Linux distros, Ubuntu for example, everything is detected and works properly, but not in XCP-ng, which is also Linux (with modifications, I know). I think it's because of the missing VFIO support, but I don't really know.

-

It seems that A100 vGPU isn't supported yet: https://hcl.xenserver.com/gpus/?gpusupport__version=20&vendor=50

-

@olivierlambert OMG! It's true.

It would be a funny situation to pay for a Citrix Premium license to virtualize the A100, only to find out it's not compatible.

Next time I'll check the HCL first.

Thanks, Olivier.

-

I'm sure they are working on something, since it's a BIG market for them.

Let's stay tuned!

-

Hello, I honestly don't know how the Citrix vGPU stuff works, but here are a couple of thoughts on this topic:

If I understand correctly, you're saying NVIDIA uses the Linux VFIO framework to enable mediated devices that can be exported to guests. The VFIO framework isn't supported under Xen, because VFIO needs an IOMMU device managed by the Linux kernel IOMMU driver, and Xen doesn't provide or virtualize IOMMU access to dom0 (Xen manages the IOMMU itself but doesn't offer such access to guests).

Basically, to export an SR-IOV virtual function to a guest with Xen, you don't have to use VFIO: you can just assign the virtual function's PCI "BDF" ID to the guest, and normally the guest should see the device.

From what I understand, NVIDIA's user-mode toolstack (scripts and binaries) doesn't JUST create SR-IOV virtual functions; it also wants to access the VFIO/mdev framework, so the whole thing fails.

So maybe you can check whether the NVIDIA tools have an option to just create the SR-IOV functions, OR try to run VFIO in "no-iommu" mode (which doesn't require an IOMMU to be present in the Linux kernel).
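A minimal way to check the no-IOMMU option on a given dom0 is to look at the vfio module parameter in sysfs. This is a sketch and untested on XCP-ng: noiommu_status is a made-up helper name, the parameter only exists when vfio is loaded and the kernel was built with no-IOMMU support, and the earlier lsmod output suggests the dom0 kernel may not ship vfio at all. Whether NVIDIA's vGPU manager would accept a no-IOMMU VFIO setup is also unknown from this thread.

```shell
#!/bin/sh
# Hypothetical helper: report vfio's unsafe no-IOMMU mode (Y/N) if the
# module parameter is available in sysfs.
noiommu_status() {
    p=/sys/module/vfio/parameters/enable_unsafe_noiommu_mode
    if [ -r "$p" ]; then
        echo "noiommu: $(cat "$p")"
    else
        echo "noiommu: parameter unavailable (vfio not loaded or no no-IOMMU support)"
    fi
}

noiommu_status

# To try enabling it (as root, and only if vfio exists as a module):
#   modprobe vfio enable_unsafe_noiommu_mode=1
# Note: this mode taints the kernel and gives no DMA isolation.
```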

BTW, we're working on a project where we intend to use VFIO in dom0, so we're implementing an IOMMU driver in the dom0 kernel. It would be interesting to know in the future whether this can help in your case.

Hope this helps.

-
