Epyc VM to VM networking slow

-

Adding @dthenot in the loop in case it rings a bell.

-

The past couple of days have been pretty nuts, but I've dabbled with testing this and in my configuration with XCP-ng 8.3 with all currently released patches, I top out at 15Gb/s with 8 threads on Win 10. Going further to 16 threads or beyond doesn't really improve things.

Killing core boost, SMT, and setting deterministic performance in BIOS added nearly 2Gb/s on single-threaded iperf.

When running the iperf and watching htop on the XCP-ng server, I see nearly all cores running at 15-20% for the duration of the transfer. That seems excessive.

Iperf on the E3-1230v2... Single thread, 9.27Gb/s. Negligible improvement with more threads. Surprisingly, a similar hit on CPU performance. Not as bad though. 10Gbps traffic hits about 10% or so. Definitely not as bad as on the Epyc system.

I'll do more thorough testing tomorrow.

-

I've found that iperf isn't super great at scaling its performance, which might be a small factor here.

I too have similar performance figures VM<->VM on an AMD EPYC 7402P 24-Core server. About 6-8Gbit/s.

-

Today, I got my hands on an HPE ProLiant DL325 Gen10 server with an Epyc 7502 32-core (64 threads) CPU. I installed XCP-ng 8.2.1 and applied all patches with yum update. I installed 2 Debian and 2 Windows 10 VMs. Results are very similar:

Linux to Linux VM on single host: 4 Gbit/sec on single thread, max 6 Gbit/sec on multiple threads.

I have tried various amounts of vCPUs (2, 4, 8, 12, 16) and various combinations of iperf threads.

Windows to Windows VM: 3.5 Gbit/sec on single thread, and 18 Gbit/sec on multiple threads.

All this was with default BIOS settings, just changed to legacy boot.

With performance tuning in BIOS (C-states and other settings), I believe I can get 10-15% more; I will try that tomorrow.

So, I think this confirms that this is not a Supermicro-related problem, but something in the relation Xen (hypervisor?) <-> AMD CPU.

-

@olivierlambert said in Epyc VM to VM networking slow:

Also, comparing to KVM doesn't make sense at all: there's no such network/disk isolation in KVM, so you can do zero copy, which will yield much better performance (at the price of thinner isolation).

Yes, we are all aware of the KVM / Xen differences, BUT there is something important to consider here: I am getting similar results for Windows VM to VM network traffic on Prox and XCP-ng. This suggests that network/disk isolation on XCP-ng isn't what is slowing things down.

Prox/KVM Linux VM to VM network speed is the same as with Windows VMs.

The problem is the much slower Linux VM to VM network traffic on a single XCP-ng host.

-

That's exactly what I'd like to confirm with the community. If we can spot a difference between Windows guests and Linux guests, it might be interesting to find out why.

-

@nicols said in Epyc VM to VM networking slow:

Today, I got my hands on an HPE ProLiant DL325 Gen10 server with an Epyc 7502 32-core (64 threads) CPU. I installed XCP-ng 8.2.1 and applied all patches with yum update. I installed 2 Debian and 2 Windows 10 VMs. Results are very similar:

Linux to Linux VM on single host: 4 Gbit/sec on single thread, max 6 Gbit/sec on multiple threads.

I have tried various amounts of vCPUs (2, 4, 8, 12, 16) and various combinations of iperf threads.

Windows to Windows VM: 3.5 Gbit/sec on single thread, and 18 Gbit/sec on multiple threads.

All this was with default BIOS settings, just changed to legacy boot.

With performance tuning in BIOS (C-states and other settings), I believe I can get 10-15% more; I will try that tomorrow.

So, I think this confirms that this is not a Supermicro-related problem, but something in the relation Xen (hypervisor?) <-> AMD CPU.

Same hardware, VMware ESXi 8.0, Debian 12 VMs with 4 vCPU and 2GB RAM.

    root@debian-on-vmwareto:~# iperf -c 10.33.65.159
    ------------------------------------------------------------
    Client connecting to 10.33.65.159, TCP port 5001
    TCP window size: 16.0 KByte (default)
    ------------------------------------------------------------
    [ 1] local 10.33.65.160 port 59124 connected with 10.33.65.159 port 5001 (icwnd/mss/irtt=14/1448/164)
    [ ID] Interval Transfer Bandwidth
    [ 1] 0.0000-10.0094 sec 29.0 GBytes 24.9 Gbits/sec

with more threads:

    root@debian-on-vmwareto:~# iperf -c 10.33.65.159 -P4
    ------------------------------------------------------------
    Client connecting to 10.33.65.159, TCP port 5001
    TCP window size: 16.0 KByte (default)
    ------------------------------------------------------------
    [ 3] local 10.33.65.160 port 46444 connected with 10.33.65.159 port 5001 (icwnd/mss/irtt=14/1448/107)
    [ 1] local 10.33.65.160 port 46446 connected with 10.33.65.159 port 5001 (icwnd/mss/irtt=14/1448/130)
    [ 2] local 10.33.65.160 port 46442 connected with 10.33.65.159 port 5001 (icwnd/mss/irtt=14/1448/136)
    [ 4] local 10.33.65.160 port 46468 connected with 10.33.65.159 port 5001 (icwnd/mss/irtt=14/1448/74)
    [ ID] Interval Transfer Bandwidth
    [ 3] 0.0000-10.0142 sec 7.59 GBytes 6.51 Gbits/sec
    [ 1] 0.0000-10.0142 sec 15.5 GBytes 13.3 Gbits/sec
    [ 4] 0.0000-10.0136 sec 7.89 GBytes 6.77 Gbits/sec
    [ 2] 0.0000-10.0142 sec 14.7 GBytes 12.6 Gbits/sec
    [SUM] 0.0000-10.0018 sec 45.6 GBytes 39.2 Gbits/sec

Will try with Windows VMs next.

I know it is apples and oranges, but I would accept a speed difference of about 10-20%.

Here, we are talking about more than 600% difference.

-

Those are really interesting results.

How can we as a community best help find the root cause/debug this issue?

For example, is it an ovswitch issue or perhaps something to do with excessive context switches?

-

It's not OVS, it's related to the inherent copy in RAM needed by Xen to ensure the right isolation between guests (including the dom0).

However, to me what's important isn't the difference with VMware, it's the difference between hardware. Old Xeon shouldn't be faster (at equal frequency) than any EPYCs.

-

It could be a cpu/xeon specific optimisation that is very unfortunate on EPYCs. It isn't unheard of.

-

Yeah, that's why I'd like to get more data, and if I have enough, to brainstorm with some Xen dev to think if it's something that could be fixed on "our" side (software) or not (if it's purely a hardware thing)

-

@olivierlambert said in Epyc VM to VM networking slow:

I wonder about the guest kernel too (Debian 11 vs 12)

Here are my results with Debian 11 vs. Debian 12 on our EPYC 7313P 16-Core Processor, on the same host. Fresh and fully updated VMs with 4 vCPUs / 4GB RAM; XCP-ng guest tools 7.30.0-11 are installed.

All tests were run 3 times; the best result is shown.

All tests with multiple connections were run three times each with -P2/-P4/-P8/-P12/-P16; the best result is shown here:

DEBIAN11>DEBIAN11

    root@deb11-master:~# iperf3 -c 192.168.1.95
    Connecting to host 192.168.1.95, port 5201
    ------------------------------------------------
    [ ID] Interval Transfer Bitrate Retr
    [ 5] 0.00-10.00 sec 8.84 GBytes 7.60 Gbits/sec 1687 sender
    [ 5] 0.00-10.04 sec 8.84 GBytes 7.56 Gbits/sec receiver

    root@deb11-master:~# iperf3 -c 192.168.1.95 -P2
    Connecting to host 192.168.1.95, port 5201
    ------------------------------------------------------------
    [SUM] 0.00-10.00 sec 12.0 GBytes 10.3 Gbits/sec 2484 sender
    [SUM] 0.00-10.04 sec 12.0 GBytes 10.3 Gbits/sec receiver

DEBIAN12>DEBIAN12

    root@deb12master:~# iperf3 -c 192.168.1.98
    Connecting to host 192.168.1.98, port 5201
    -----------------------------------------------
    [ ID] Interval Transfer Bitrate Retr
    [ 5] 0.00-10.00 sec 5.12 GBytes 4.40 Gbits/sec 953 sender
    [ 5] 0.00-10.00 sec 5.12 GBytes 4.39 Gbits/sec receiver

    root@deb12master:~# iperf3 -c 192.168.1.98 -P4
    Connecting to host 192.168.1.98, port 5201
    -----------------------------------------------
    [SUM] 0.00-10.00 sec 3.58 GBytes 3.08 Gbits/sec 3365 sender
    [SUM] 0.00-10.00 sec 3.57 GBytes 3.07 Gbits/sec receiver

Conclusion: Debian 12 with kernel 6.1.55-1 performs worse than Debian 11 with kernel 5.10.197-1 on this EPYC host.
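To put that conclusion in numbers, here is a small sketch that computes the relative drop from the sender-side figures quoted above. The helper name `percent_drop` is just an illustration, and the multi-stream comparison mixes a `-P2` best result with a `-P4` best result, so treat it as indicative only.

```python
def percent_drop(baseline_gbps: float, observed_gbps: float) -> float:
    """Return how much lower `observed_gbps` is than `baseline_gbps`, in percent."""
    return (baseline_gbps - observed_gbps) / baseline_gbps * 100.0


# Best sender-side figures from the runs above, in Gbits/sec.
single = percent_drop(7.60, 4.40)  # Debian 11 vs Debian 12, one stream
multi = percent_drop(10.3, 3.08)   # Debian 11 (-P2) vs Debian 12 (-P4)

print(f"single stream: {single:.1f}% lower on Debian 12")
print(f"multi stream:  {multi:.1f}% lower on Debian 12")
```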

I will check now if I could perform the same test with a Windows VM.

Update

A quick test with two Windows 7 VMs, each with 2 vCPUs / 2GB RAM and the latest available Citrix guest tools installed, showed the best result with:

C:\Tools\Iperf3\iperf3.exe -c 192.168.1.108 -P8

On average, 11.3 Gbits/sec was reached.

-

So we might have something weird in Debian 12 making it a lot slower.

-

Perhaps try the Debian 12 guest with mitigations=off

-

Hello guys,

I'll be the one investigating this further, we're trying to compile a list of CPUs and their behavior. First, thank you for your reports and tests, that's already very helpful and gave us some insight already.

Setup

If some of you can help us cover more ground that would be awesome, so here is what would be an ideal test setup to get everyone on the same page:

- An AMD host, obviously

  - `yum install iperf`²

- 2 VMs on the same host, with the distribution of your choice¹

  - each with 4 cores if possible

  - 1GB of RAM should be enough if you don't have a desktop environment to load

  - iperf2²

¹: it seems some recent kernels do provide a slight boost, but in any case the performance is pretty low for such high-grade CPUs.

²: iperf3 is single-threaded: the `-P` option will establish multiple connections, but it will process all of them in a single thread, so once it reaches 100% CPU usage it won't gain much and won't help identify the scaling on such a CPU. For example, on a Ryzen 5 7600 processor we see about the same low performance, but using multiple threads does scale, which does not seem to be the case for EPYC Zen1 CPUs.

Tests

- do not disable mitigations for now: that only acts on the kernel side, there are still mitigations active in Xen, and from my testing it doesn't seem to help much and would only increase the number of result combinations

- for each test, run `xentop` on the host, and try to get an idea of the top values of each domain while the test is running

- run `iperf -s` on VM1, and let it run (no `-P X`, as this would stop after X connections are established)

- tests:

  - vm2vm 1 thread: on VM2, run `iperf -c <ip_VM1> -t 60`, note result for v2v 1 thread

  - vm2vm 4 threads: on VM2, run `iperf -c <ip_VM1> -t 60 -P4`, note result for v2v 4 threads

  - host2vm 1 thread: on host, run `iperf -c <ip_VM1> -t 60`, note result for h2v 1 thread

  - host2vm 4 threads: on host, run `iperf -c <ip_VM1> -t 60 -P4`, note result for h2v 4 threads
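For anyone who wants to script the four runs, the protocol above could be sketched as follows. This only builds the command lines and says where each one is meant to be run; it does not execute anything. The function name `build_test_matrix` and the example address are assumptions for illustration, not part of any existing tooling.

```python
def build_test_matrix(server_ip: str, duration_s: int = 60, parallel: int = 4) -> dict:
    """Map each test label to (where to run it, iperf2 client argv)."""
    single = ["iperf", "-c", server_ip, "-t", str(duration_s)]
    multi = single + [f"-P{parallel}"]
    return {
        "v2v 1 thread": ("VM2", single),
        f"v2v {parallel} threads": ("VM2", multi),
        "h2v 1 thread": ("host", single),
        f"h2v {parallel} threads": ("host", multi),
    }


# 192.0.2.10 is a documentation address standing in for <ip_VM1>.
for label, (where, argv) in build_test_matrix("192.0.2.10").items():
    print(f"{label:<14} on {where:<4}: {' '.join(argv)}")
```

`iperf -s` still has to be started by hand on VM1 beforehand, and `xentop` watched on the host during each run.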

Report template

Here is an example of report template

- Host:

  - cpu:

  - number of sockets:

  - cpu pinning: yes (detail) / no (use automated setting)

  - xcp-ng version:

  - output of `xl info -n`, especially the `cpu_topology` section, in a code block.

- VMs:

  - distrib & version

  - kernel version

- Results:

  - v2v 1 thread: throughput / cpu usage from xentop³

  - v2v 4 threads: throughput / cpu usage from xentop³

  - h2v 1 thread: throughput / cpu usage from xentop³

  - h2v 4 threads: throughput / cpu usage from xentop³

³: I note the max I see while test is running in vm-client/vm-server/host order.
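If it helps keep reports consistent, a result line can be formatted the same way as the table entries further down, i.e. throughput followed by the xentop maxima in vm-client/vm-server/host order. This is only a sketch; `format_entry` is a made-up helper, and using `N/A` for the client slot in host-to-VM runs is my assumption.

```python
from typing import Optional


def format_entry(throughput_gbps: float, client: Optional[int], server: int, dom0: int) -> str:
    """Format one result as 'X G (client/server/dom0)' with xentop CPU maxima in percent."""
    client_txt = "N/A" if client is None else str(client)
    return f"{throughput_gbps:g} G ({client_txt}/{server}/{dom0})"


# Example values only, not measurements from this post.
print("v2v 1 thread:", format_entry(5.0, 100, 150, 250))
print("h2v 1 thread:", format_entry(9.5, None, 110, 160))
```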

What was tested

Mostly for information, here are a few tests I ran which did not seem to improve performance.

- disabling the mitigations of various security issues at host and VM boot time using kernel boot parameters: `noibrs noibpb nopti nospectre_v2 spectre_v2_user=off spectre_v2=off nospectre_v1 l1tf=off nospec_store_bypass_disable no_stf_barrier mds=off mitigations=off`. Note this won't disable them at Xen level, as there are patches that enable the fixes for the related hardware with no flags to disable them.

- disabling AVX by passing `noxsave` in the kernel boot parameters, as there is a known issue where Zen CPUs avoid boosting when a core is under heavy AVX load; still no change.

- Pinning: I tried to use a single "node" in case the memory controllers are separated, I tried avoiding the "threads" on the same core, and I tried to spread the load across nodes. Although it seems to give a slight boost, it is still far from what we should be expecting from such CPUs.

- XCP-ng 8.2 and 8.3-beta1: it seems 8.3 is a tiny bit faster, but it tends to jitter a bit more, so I would not deem that relevant either.
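The pinning experiments depend on knowing which logical CPUs are "threads" of the same core. Here is a minimal sketch that groups them, assuming it is fed only the `cpu_topology` section of `xl info -n` in its usual `cpu: core socket node` layout. The sample text and the helper names are illustrative, not taken from a real host.

```python
from collections import defaultdict

# Illustrative excerpt in the layout printed under cpu_topology.
SAMPLE = """\
cpu:    core    socket     node
  0:       0        0        0
  1:       0        0        0
  2:       1        0        0
  3:       1        0        0
"""


def smt_siblings(topology_text: str) -> dict:
    """Group logical CPU ids by (socket, core)."""
    groups = defaultdict(list)
    for line in topology_text.splitlines():
        fields = line.replace(":", " ").split()
        if len(fields) != 4 or not fields[0].isdigit():
            continue  # skip the header row and anything unexpected
        cpu, core, socket, _node = map(int, fields)
        groups[(socket, core)].append(cpu)
    return dict(groups)


def one_thread_per_core(topology_text: str) -> list:
    """Pick the first logical CPU of every core, to avoid sharing a core."""
    return sorted(cpus[0] for cpus in smt_siblings(topology_text).values())


print(smt_siblings(SAMPLE))
print(one_thread_per_core(SAMPLE))
```

The resulting CPU list is what you would then hand to whatever pinning mechanism you use; that step is left out here on purpose.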

I have not tested it myself, but @nicols ran the comparison on the same machine: about 3Gbps on XCP-ng, as we all see, versus ~25Gbps single-threaded and about 40Gbps with 4 threads on VMware, and about 21.7Gbps (I assume single-threaded) on Proxmox, both of which are a lot more along the lines of what I would expect this hardware to produce.

@JamesG did test windows and debian guests and got about the same results.

Although we do get a small boost by increasing threads (or connections in the case of iperf3), it is still far from what we can see on other setups (with VMware or Proxmox).

Although Olivier's pool with a Zen4 desktop CPU does scale a lot better than EPYCs when increasing the number of threads, it is still not providing the results expected from such a powerful CPU in single thread (we do not even reach VMware single-thread performance with 4 threads).

Although @Ajmind-0's test shows a difference between Debian versions, results even on Debian 11 are still not on par with expected results.

Disabling AVX only provided an improvement on my home FX cpu, which are known to not have real "threads" and share computing unit between 2 threads of a core, so it does make sense. (this is not shown in the table)

It seems that memcpy in the glibc is not related to the issue: `dd if=/dev/zero of=/dev/null` has decent performance on these machines (1.2-1.3GBytes/s). It's worth keeping in mind that both the kernel and Xen have their own implementations, so it could play a small role in filling the ring buffer in iperf, but I feel like the libc memcpy() is not at play here.

Tests table

I'll update this table with new results, or maybe repost it in a later post.

Throughputs are in Gbit/s, noted as `G` for shorter table entries. CPU usages are for (VMclient/VMserver/dom0) in percentage as shown in `xentop`.

| user | cpu | family | market | v2v 1T | v2v 4T | h2v 1T | h2v 4T | notes |
|---|---|---|---|---|---|---|---|---|
| vates | fx8320-e | piledriver | desktop | 5.64 G (120/150/220) | 7.5 G (180/230/330) | 9.5 G (0/110/160) | 13.6 G (0/300/350) | not a zen cpu, no boost |
| vates | EPYC 7451 | Zen1 | server | 4.6 G (110/180/250) | 6.08 G (180/220/300) | 7.73 G (0/150/230) | 11.2 G (0/320/350) | no boost |
| vates | Ryzen 5 7600 | Zen4 | desktop | 9.74 G (70/80/100) | 19.7 G (190/260/300) | 19.2 G (0/110/140) | 33.9 G (0/310/350) | Olivier's pool, no boost |
| nicols | EPYC 7443 | Zen3 | server | 3.38 G (?) | | | | iperf3 |
| nicols | EPYC 7443 | Zen3 | server | 2.78 G (?) | 4.44 G (?) | | | iperf2 |
| nicols | EPYC 7502 | Zen2 | server | similar ^ | similar ^ | | | iperf2 |
| JamesG | EPYC 7302p | Zen2 | server | 6.58 G (?) | | | | iperf3 |
| Ajmind-0 | EPYC 7313P | Zen3 | server | 7.6 G (?) | 10.3 G (?) | | | iperf3, debian11 |
| Ajmind-0 | EPYC 7313P | Zen3 | server | 4.4 G (?) | 3.07 G (?) | | | iperf3, debian12 |
| vates | EPYC 9124 | Zen4 | server | 1.16 G (16/17/??⁴) | 1.35 G (20/25/??⁴) | N/A | N/A | !xcp-ng, Xen 4.18-rc + suse 15 |
| vates | EPYC 9124 | Zen4 | server | 5.70 G (100/140/200) | 10.4 G (230/250/420) | 10.7 G (0/120/200) | 15.8 G (0/320/380) | no boost |
| vates | Ryzen 9 5950x | Zen3 | desktop | 7.25 G (30/35/60) | 16.5 G (160/210/300) | 17.5 G (0/110/140) | 27.6 G (0/270/330) | no boost |

⁴: xentop on this host shows 3200% on dom0 all the time; profiling does not seem to show anything actually using CPU, but it may be related to the extremely poor performance.

last updated: 2023-11-29 16:46
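To make the scaling point easier to see, here is a quick sketch computing the v2v 4-thread over 1-thread ratio for the rows I measured with complete data. The figures are copied from the table; the `scaling` helper is only for illustration.

```python
# (cpu, v2v 1T, v2v 4T) in Gbit/s, copied from the table above.
ROWS = [
    ("fx8320-e", 5.64, 7.5),
    ("EPYC 7451", 4.6, 6.08),
    ("Ryzen 5 7600", 9.74, 19.7),
    ("EPYC 9124", 5.70, 10.4),
    ("Ryzen 9 5950x", 7.25, 16.5),
]


def scaling(one_thread: float, four_threads: float) -> float:
    """How many times faster the 4-thread run is than the 1-thread run."""
    return four_threads / one_thread


for cpu, t1, t4 in ROWS:
    print(f"{cpu:<14} {scaling(t1, t4):.2f}x")
```

A ratio of 4 would be perfect scaling. The Zen1 EPYC lands around 1.3x while the desktop Ryzen parts are at 2x or a bit above.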

All help is welcome! For those of you who already provided tests that I integrated in the table, feel free not to rerun them: it looks like following the exact protocol and providing more data won't make much of a difference, and I don't want to waste your time!

Thanks again to all of you for your insight and your patience, it looks like this is going to be a deep rabbit hole, I'll do my best to get to the bottom of this as soon as possible.


-

Heya!

Just chiming in that we (WDMAB) are keeping tabs on this thread as well as our ongoing support ticket with you guys.

Saw our result up on the list.

If we can do ANYTHING further to assist then please do tell us. We are available 24/7 to solve this issue since it is very heavily impacting our new production deployment.

Regards.

Mathias W.

-

@bleader I've been investigating this issue on my own system and came across this discussion. I know this is a somewhat old thread so I hope it's ok to contribute more data here!

Host:

- CPU: EPYC 7302p

- Number of sockets: 1

- CPU pinning: no

- XCP-NG version: 8.3 beta 2, Xen 4.17 (everything current as of the time of writing)

- Output of `xl info -n`:

    host : xcp-ng
    release : 4.19.0+1
    version : #1 SMP Wed Jan 24 17:19:11 CET 2024
    machine : x86_64
    nr_cpus : 32
    max_cpu_id : 31
    nr_nodes : 1
    cores_per_socket : 16
    threads_per_core : 2
    cpu_mhz : 3000.001
    hw_caps : 178bf3ff:7ed8320b:2e500800:244037ff:0000000f:219c91a9:00400004:00000780
    virt_caps : pv hvm hvm_directio pv_directio hap gnttab-v1 gnttab-v2
    total_memory : 130931
    free_memory : 24740
    sharing_freed_memory : 0
    sharing_used_memory : 0
    outstanding_claims : 0
    free_cpus : 0
    cpu_topology :
    cpu: core socket node
    0: 0 0 0
    1: 0 0 0
    2: 1 0 0
    3: 1 0 0
    4: 4 0 0
    5: 4 0 0
    6: 5 0 0
    7: 5 0 0
    8: 8 0 0
    9: 8 0 0
    10: 9 0 0
    11: 9 0 0
    12: 12 0 0
    13: 12 0 0
    14: 13 0 0
    15: 13 0 0
    16: 16 0 0
    17: 16 0 0
    18: 17 0 0
    19: 17 0 0
    20: 20 0 0
    21: 20 0 0
    22: 21 0 0
    23: 21 0 0
    24: 24 0 0
    25: 24 0 0
    26: 25 0 0
    27: 25 0 0
    28: 28 0 0
    29: 28 0 0
    30: 29 0 0
    31: 29 0 0
    device topology :
    device node
    No device topology data available
    numa_info :
    node: memsize memfree distances
    0: 132338 24740 10
    xen_major : 4
    xen_minor : 17
    xen_extra : .3-3
    xen_version : 4.17.3-3
    xen_caps : xen-3.0-x86_64 hvm-3.0-x86_32 hvm-3.0-x86_32p hvm-3.0-x86_64
    xen_scheduler : credit
    xen_pagesize : 4096
    platform_params : virt_start=0xffff800000000000
    xen_changeset : $Format:%H$, pq ???
    xen_commandline : dom0_mem=7568M,max:7568M watchdog ucode=scan dom0_max_vcpus=1-16 crashkernel=256M,below=4G console=vga vga=mode-0x0311
    cc_compiler : gcc (GCC) 11.2.1 20210728 (Red Hat 11.2.1-1)
    cc_compile_by : mockbuild
    cc_compile_domain : [unknown]
    cc_compile_date : Wed Feb 28 10:12:19 CET 2024
    build_id : 9a011a28e29a21a7643376b36aec959253587d42
    xend_config_format : 4

Test set 1:

Server and client were both Debian 12 (Linux 6.1.0-18-amd64 #1 SMP PREEMPT_DYNAMIC Debian 6.1.76-1 (2024-02-01) x86_64) with 4 cores.

VM to VM (1 thread):

    iperf -c 192.168.1.66 -t 60
    ------------------------------------------------------------
    Client connecting to 192.168.1.66, TCP port 5001
    TCP window size: 16.0 KByte (default)
    ------------------------------------------------------------
    [ 1] local 192.168.1.69 port 55530 connected with 192.168.1.66 port 5001 (icwnd/mss/irtt=14/1448/551)
    [ ID] Interval Transfer Bandwidth
    [ 1] 0.0000-60.0213 sec 38.2 GBytes 5.47 Gbits/sec

xentop: 100 / 150 / 250

VM to VM (4 threads):

    iperf -c 192.168.1.66 -t 60 -P4
    ------------------------------------------------------------
    Client connecting to 192.168.1.66, TCP port 5001
    TCP window size: 16.0 KByte (default)
    ------------------------------------------------------------
    [ 2] local 192.168.1.69 port 35702 connected with 192.168.1.66 port 5001 (icwnd/mss/irtt=14/1448/531)
    [ 4] local 192.168.1.69 port 35708 connected with 192.168.1.66 port 5001 (icwnd/mss/irtt=14/1448/576)
    [ 1] local 192.168.1.69 port 35714 connected with 192.168.1.66 port 5001 (icwnd/mss/irtt=14/1448/458)
    [ 3] local 192.168.1.69 port 35692 connected with 192.168.1.66 port 5001 (icwnd/mss/irtt=14/1448/744)
    [ ID] Interval Transfer Bandwidth
    [ 2] 0.0000-60.0141 sec 12.4 GBytes 1.77 Gbits/sec
    [ 1] 0.0000-60.0129 sec 13.9 GBytes 1.99 Gbits/sec
    [ 3] 0.0000-60.0141 sec 14.5 GBytes 2.07 Gbits/sec
    [ 4] 0.0000-60.0301 sec 12.2 GBytes 1.75 Gbits/sec
    [SUM] 0.0000-60.0071 sec 53.0 GBytes 7.58 Gbits/sec

xentop: 165 / 200 / 380

Host to VM (1 thread):

    iperf -c 192.168.1.66 -t 60
    ------------------------------------------------------------
    Client connecting to 192.168.1.66, TCP port 5001
    TCP window size: 297 KByte (default)
    ------------------------------------------------------------
    [ 3] local 192.168.1.1 port 37804 connected with 192.168.1.66 port 5001
    [ ID] Interval Transfer Bandwidth
    [ 3] 0.0-60.0 sec 6.58 GBytes 942 Mbits/sec

xentop: N/A / 135 / 145

Host to VM (4 threads):

    iperf -c 192.168.1.66 -t 60 -P4
    ------------------------------------------------------------
    Client connecting to 192.168.1.66, TCP port 5001
    TCP window size: 112 KByte (default)
    ------------------------------------------------------------
    [ 5] local 192.168.1.1 port 37812 connected with 192.168.1.66 port 5001
    [ 3] local 192.168.1.1 port 37808 connected with 192.168.1.66 port 5001
    [ 6] local 192.168.1.1 port 37814 connected with 192.168.1.66 port 5001
    [ 4] local 192.168.1.1 port 37810 connected with 192.168.1.66 port 5001
    [ ID] Interval Transfer Bandwidth
    [ 3] 0.0-60.0 sec 1.63 GBytes 233 Mbits/sec
    [ 6] 0.0-60.0 sec 2.08 GBytes 298 Mbits/sec
    [ 4] 0.0-60.0 sec 1.07 GBytes 154 Mbits/sec
    [ 5] 0.0-60.0 sec 1.80 GBytes 257 Mbits/sec
    [SUM] 0.0-60.0 sec 6.58 GBytes 942 Mbits/sec

xentop: N/A / 155 / 155

Test set 2:

Server: FreeBSD 14 (FreeBSD 14.0-RELEASE (GENERIC) #0 releng/14.0-n265380-f9716eee8ab4: Fri Nov 10 05:57:23 UTC 2023) with 4 cores.

Client: Debian 12 (Linux 6.1.0-18-amd64 #1 SMP PREEMPT_DYNAMIC Debian 6.1.76-1 (2024-02-01) x86_64) with 4 cores.

VM to VM (1 thread):

    iperf -c 192.168.1.64 -t 60
    ------------------------------------------------------------
    Client connecting to 192.168.1.64, TCP port 5001
    TCP window size: 16.0 KByte (default)
    ------------------------------------------------------------
    [ 1] local 192.168.1.69 port 38572 connected with 192.168.1.64 port 5001 (icwnd/mss/irtt=14/1448/905)
    [ ID] Interval Transfer Bandwidth
    [ 1] 0.0000-60.0089 sec 21.3 GBytes 3.04 Gbits/sec

xentop: 125 / 355 / 325

VM to VM (4 threads):

    iperf -c 192.168.1.64 -t 60 -P4
    ------------------------------------------------------------
    Client connecting to 192.168.1.64, TCP port 5001
    TCP window size: 16.0 KByte (default)
    ------------------------------------------------------------
    [ 3] local 192.168.1.69 port 50068 connected with 192.168.1.64 port 5001 (icwnd/mss/irtt=14/1448/753)
    [ 1] local 192.168.1.69 port 50078 connected with 192.168.1.64 port 5001 (icwnd/mss/irtt=14/1448/513)
    [ 4] local 192.168.1.69 port 50088 connected with 192.168.1.64 port 5001 (icwnd/mss/irtt=14/1448/411)
    [ 2] local 192.168.1.69 port 50070 connected with 192.168.1.64 port 5001 (icwnd/mss/irtt=14/1448/676)
    [ ID] Interval Transfer Bandwidth
    [ 4] 0.0000-60.0299 sec 9.48 GBytes 1.36 Gbits/sec
    [ 1] 0.0000-60.0299 sec 6.56 GBytes 938 Mbits/sec
    [ 3] 0.0000-60.0301 sec 11.2 GBytes 1.60 Gbits/sec
    [ 2] 0.0000-60.0293 sec 6.61 GBytes 947 Mbits/sec
    [SUM] 0.0000-60.0146 sec 33.8 GBytes 4.84 Gbits/sec

xentop: 220 / 400 / 730

Host to VM (1 thread):

    iperf -c 192.168.1.64 -t 60
    ------------------------------------------------------------
    Client connecting to 192.168.1.64, TCP port 5001
    TCP window size: 212 KByte (default)
    ------------------------------------------------------------
    [ 3] local 192.168.1.1 port 58464 connected with 192.168.1.64 port 5001
    [ ID] Interval Transfer Bandwidth
    [ 3] 0.0-60.0 sec 6.58 GBytes 941 Mbits/sec

xentop: N/A / 295 / 205

Host to VM (4 threads):

    iperf -c 192.168.1.64 -t 60 -P4
    ------------------------------------------------------------
    Client connecting to 192.168.1.64, TCP port 5001
    TCP window size: 130 KByte (default)
    ------------------------------------------------------------
    [ 5] local 192.168.1.1 port 58470 connected with 192.168.1.64 port 5001
    [ 3] local 192.168.1.1 port 58468 connected with 192.168.1.64 port 5001
    [ 4] local 192.168.1.1 port 58472 connected with 192.168.1.64 port 5001
    [ 6] local 192.168.1.1 port 58474 connected with 192.168.1.64 port 5001
    [ ID] Interval Transfer Bandwidth
    [ 5] 0.0-60.0 sec 1.73 GBytes 247 Mbits/sec
    [ 3] 0.0-60.0 sec 1.56 GBytes 224 Mbits/sec
    [ 4] 0.0-60.0 sec 1.73 GBytes 247 Mbits/sec
    [ 6] 0.0-60.0 sec 1.56 GBytes 224 Mbits/sec
    [SUM] 0.0-60.0 sec 6.58 GBytes 942 Mbits/sec

xentop: N/A / 280 / 205

Conclusion:

No special tuning on any of the VMs, just a fresh install from the netboot ISO for each respective OS.

I also don't fully understand why my host seems to be limited to 1Gb. The management interface is 1Gb, but that shouldn't matter? The other physical NIC is 10Gb SFP+, just for the sake of completeness.
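One observation on the 1Gb question: the host-to-VM figures sit almost exactly at the theoretical TCP goodput of a gigabit Ethernet link. A rough sketch of that arithmetic, assuming a standard 1500-byte MTU and TCP timestamps enabled (which matches the 1448 MSS shown in the VM-to-VM runs, though the host runs do not print it):

```python
MTU = 1500                       # assumed standard Ethernet MTU
IP_HEADER = 20                   # IPv4 header without options
TCP_HEADER = 20                  # TCP header without options
TCP_TIMESTAMPS = 12              # timestamp option, padded
ETH_OVERHEAD = 14 + 4 + 8 + 12   # header + FCS + preamble/SFD + inter-frame gap


def tcp_goodput_mbps(link_mbps: float) -> float:
    """Best-case TCP payload rate on an Ethernet link of the given speed."""
    payload = MTU - IP_HEADER - TCP_HEADER - TCP_TIMESTAMPS  # 1448 bytes
    on_wire = MTU + ETH_OVERHEAD                             # 1538 bytes per frame
    return link_mbps * payload / on_wire


streams = [233, 298, 154, 257]   # per-stream Mbits/sec from the first host-to-VM -P4 run
print(sum(streams))                      # 942
print(round(tcp_goodput_mbps(1000), 1))  # 941.5
```

That match is at least consistent with the host-to-VM traffic being capped by a 1Gb link somewhere on its path, although it does not tell me why it would take that path.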

Please let me know if there's anything at all that I can do to help with this!

-

FYI, we are discussing with AMD and another external company to find the culprit, active work is in the pipes.

-

@timewasted Thanks for sharing, as long as we haven't found a solution, it is always good to have more feedback, so thanks for that.

FreeBSD uses the same kind of network driver, but it seems to have lower performance, and not only on EPYC systems. This could be another investigation for later.

I am indeed surprised by your vm/host results, I generally get a way greater performance there in my tests. I agree the management NIC speed should not impact it at all… You said no tuning so I guess no pinning or anything, therefore I don't really see why that is right now.

-

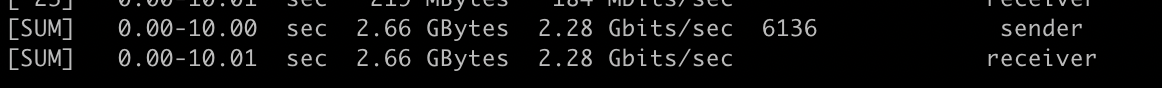

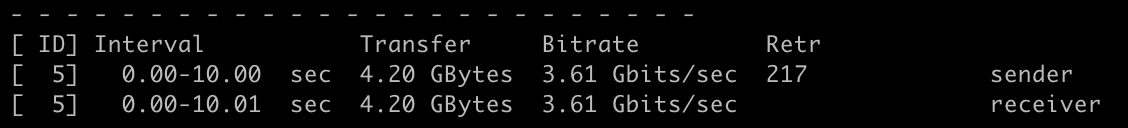

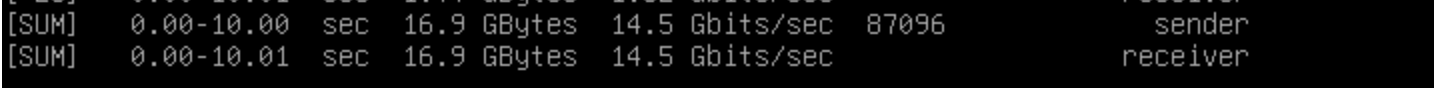

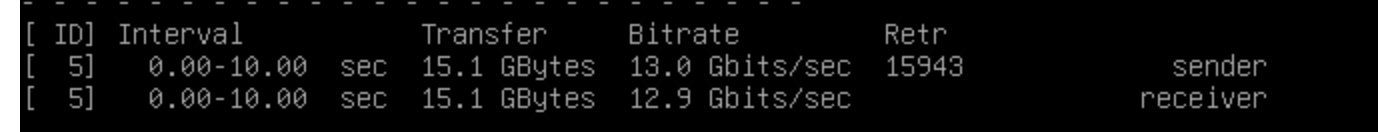

@bleader I stumbled upon this thread and this issue kept me wondering, so I did a quick test on our systems:

Running iperf3 on our HPs with AMD EPYC 7543P CPUs, debian12 to debian12 VM, I get

iperf3 -c 192.168.1.19 -P 10

iperf3 -c 192.168.1.19

Same on an HP with Intel Xeon E5-2667

iperf3 -c 192.168.1.113 -P 10

iperf3 -c 192.168.1.113

FREAKY!

Doesn't affect us because we don't have inter-VM traffic to speak of.