First SMAPIv3 driver is available in preview

-

The goal is to check that it runs OK for a while, so we're sure not to miss anything. Fio is your friend for benchmarking in a VM. Remember that it's still blktap underneath, so if you want better performance numbers, test with multiple VDIs at once.

-

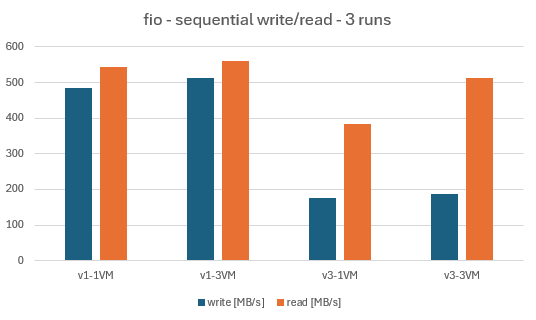

I tested SMAPIv1 on XCP 8.2.1 against SMAPIv3 on XCP 8.3b2 using the same host (an HP ProDesk 400 G6 with an i5-10500T CPU and 32 GB RAM). A 1 TB Samsung 860 EVO SSD was used as the test SR, while XCP booted from a 512 GB M.2 KIOXIA NVMe drive. Fio (fio-3.37) was compiled from source on an up-to-date Debian 12 VM (2 vCPUs, 4 GiB RAM, 32 GiB drive), which was copied twice so that three identical VMs could run fio in parallel.

After an initial fio run to create the files, a script ran three sequential write and read tests (e.g. `fio --name=fio --ioengine=libaio --randrepeat=1 --direct=1 --fallocate=none --ramp_time=10 --size=4G --iodepth=64 --loops=50 --group_reporting --numjobs=1 --rw=write --bs=1M`). The script first ran on one VM, then on three VMs in parallel. IOPS and bandwidth were averaged.
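The post doesn't include the script itself. As a rough sketch of the averaging step only, assuming each run was also given fio's `--output-format=json` option (an assumption; the command above doesn't use it) and the output saved per VM, IOPS and bandwidth could be averaged like this. fio reports `bw` in KiB/s in its JSON output; the sample numbers in the usage block are made up.

```python
import json
from statistics import mean


def extract_metrics(fio_json: str, direction: str) -> tuple[float, float]:
    """Return (IOPS, bandwidth in MiB/s) for one fio run.

    Assumes JSON produced with --output-format=json and --group_reporting,
    so the first entry of "jobs" holds the aggregated result.
    fio reports "bw" in KiB/s, hence the division by 1024.
    """
    job = json.loads(fio_json)["jobs"][0]
    stats = job[direction]  # "read" or "write"
    return float(stats["iops"]), stats["bw"] / 1024


def average_runs(outputs: list[str], direction: str) -> dict[str, float]:
    """Average IOPS and bandwidth over several runs (e.g. one per VM)."""
    metrics = [extract_metrics(out, direction) for out in outputs]
    return {
        "iops": mean(iops for iops, _ in metrics),
        "bw_mib_s": mean(bw for _, bw in metrics),
    }


if __name__ == "__main__":
    # Made-up sample outputs standing in for three VMs run in parallel.
    samples = [
        json.dumps({"jobs": [{"write": {"iops": 300.0, "bw": 307200}}]}),
        json.dumps({"jobs": [{"write": {"iops": 250.0, "bw": 256000}}]}),
        json.dumps({"jobs": [{"write": {"iops": 350.0, "bw": 358400}}]}),
    ]
    print(average_runs(samples, "write"))
```

Averaging per-VM numbers, as described above, gives a per-VM figure; summing them instead would give the aggregate throughput of the host.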

`v1-1VM` shows the results for one VM on a SMAPIv1 SR (XCP 8.2.1), while `v3-3VM` shows the results for three VMs in parallel on a SMAPIv3 SR (XCP 8.3b2). I'm not sure this approach is really valid (e.g. the host's load average went through the roof when three VMs ran fio in parallel), but it does suggest that SMAPIv3 bandwidth is not yet on par with SMAPIv1. I could be wrong, though, and this is an early preview of SMAPIv3. Looking forward to more SMAPIv3 performance results.

-

Hi,

I'm not sure I understand. What kind of SMAPIv1 SR did you compare against ZFS on v3?

-

Can you provide a link to the GitHub repo where we can find the source code of this SMAPIv3 driver?

-

-

@olivierlambert

I meant the source for this package, `xcp-ng-xapi-storage-volume-zfsvol`, so that we can see how this new driver is implemented.

-

That's inside the repo I posted

-

Has anyone tried a backup using the new driver? I created a new test pool with one of my previous hosts and created an SMAPIv3 ZFS SR. I can create a VM just fine, but when I try to add it to my existing backup job, it keeps erroring out with "stream has ended with not enough data (actual: 485, expected: 512)".

Is this expected?

-

You can only do full backup for now, not incremental.

-

@olivierlambert Since it's the first backup, it should be full, correct? Does delta backup not work at all, even with force full enabled?

-

I mean the backup feature: it only works with XVA underneath (i.e. the full backup feature, which does a full backup every time).

-

I've started using the SMAPIv3 driver too. It's working well so far. I'm keeping my VM boot disks on mdraid1, and using a ZFS mirror via SMAPIv3 for large data disks.

I have a question about backups... Is it safe to use `syncoid` to directly synchronize the ZFS volumes to an external backup? `syncoid` creates a snapshot at the start of the send process, but I also have rolling snapshots configured through Xen Orchestra. Will the `syncoid` snapshot mess up Xen Orchestra?

If this isn't safe or isn't a good idea, I'll just use rsync to back up the filesystem contents inside the VM that the volume is mounted to...

-

I have no idea, because I've never used `syncoid`. Have you asked its developer about this?

-

If I understand correctly, I would rephrase the question this way:

Does Xen Orchestra name the snapshots in a way that is unique to Xen Orchestra, and does XOA know which snapshots belong to it, or does it use the latest snapshots no matter how they are named?

@hsnyder: I don't think you can simply use ZFS snapshots without Xen snapshots, because I don't think they will be crash-consistent.

If syncoid is similar to zrepl, you have to check that it doesn't prune the ZFS snapshots created by XOA.
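To illustrate what "doesn't prune the snapshots from XOA" means in practice: a replication tool is safe in this respect if its pruning only ever considers snapshots carrying its own name prefix. Below is a hypothetical Python sketch of such a prefix-scoped pruner; the `repl_` prefix, the dataset names and the keep-newest-N rule are assumptions for illustration, not how syncoid or zrepl are actually configured. In practice the input list could come from `zfs list -H -t snapshot -o name -s creation`, which prints one snapshot name per line, oldest first.

```python
def prune_candidates(snapshots: list[str], own_prefix: str, keep: int) -> list[str]:
    """Return the snapshots a prefix-scoped pruner would destroy.

    snapshots: full names like "pool/dataset@snap", ordered oldest first.
    Only snapshots whose short name starts with own_prefix are eligible,
    so snapshots created by anything else (e.g. XO through the storage
    driver) are never returned. The newest `keep` eligible snapshots of
    each dataset are retained.
    """
    owned: dict[str, list[str]] = {}
    for full_name in snapshots:
        dataset, _, snap = full_name.partition("@")
        if snap.startswith(own_prefix):
            owned.setdefault(dataset, []).append(full_name)

    doomed: list[str] = []
    for names in owned.values():
        # names[:-keep] drops the newest `keep`; keep == 0 prunes them all.
        doomed.extend(names[:-keep] if keep > 0 else names)
    return doomed


if __name__ == "__main__":
    # Hypothetical list mixing driver-created and tool-created snapshots.
    snaps = [
        "tank/vol1@10",
        "tank/vol1@repl_a",
        "tank/vol1@11",
        "tank/vol1@repl_b",
        "tank/vol1@repl_c",
    ]
    print(prune_candidates(snaps, "repl_", keep=1))
```

The integer-named snapshots are never candidates, whatever the retention setting; a tool that pruned by age alone, without such a filter, would not have that guarantee.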

-

Question for @yann probably then

-

@rfx77 Thanks for clarifying my question, your reading of it was correct.

I've just realized that `syncoid` has an option, `--no-sync-snap`, which I think avoids creating a dedicated snapshot for the transfer and instead just transfers the pre-existing snapshots. If that's indeed what it does, it solves all potential problems, because the existing snapshots are taken by Xen Orchestra. I'll run a test to confirm this behavior and then reply again.

-

If I understand the question correctly, the requirement is that the snapshot naming conventions used by ZFS-vol and by `syncoid` don't collide.
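A collision of that kind can be checked mechanically: look at the snapshots another tool has created and flag any whose name after the `@` is a bare integer, the namespace the current ZFS-vol driver uses for volume and snapshot names. The Python sketch below is hypothetical: the dataset names are made up, the `syncoid_...` name only imitates a prefixed style and is not syncoid's documented format, and how the driver chooses its next integer is not modelled.

```python
import re

# Assumption from the thread: the ZFS-vol driver names its snapshots with
# plain integers, so any foreign snapshot named like this could clash.
INTEGER_NAME = re.compile(r"[0-9]+")


def colliding_names(foreign_snapshots: list[str]) -> list[str]:
    """Return snapshots from another tool whose short name is a bare integer."""
    return [
        full_name
        for full_name in foreign_snapshots
        if INTEGER_NAME.fullmatch(full_name.rpartition("@")[2])
    ]


def is_free(dataset: str, candidate: int, existing: list[str]) -> bool:
    """True if the driver could still create dataset@candidate without a clash."""
    return f"{dataset}@{candidate}" not in existing


if __name__ == "__main__":
    foreign = ["tank/12@syncoid_host_example", "tank/12@42"]
    print(colliding_names(foreign))
    print(is_free("tank/12", 42, foreign), is_free("tank/12", 43, foreign))
```

A tool whose snapshot names always carry a non-numeric prefix can never produce a match here, which is the property to look for in its naming convention.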

What convention is `syncoid` using? The current ZFS-vol driver just assigns a unique integer name to each volume/snapshot, and there would be an error if it attempted to create a snapshot with an integer name that another tool had already created on its own.

-

@hsnyder Hi!

I would let syncoid take a snapshot, check its name, and see whether there could be any naming conflict. If that's not the case, I would keep it as it was.

You can check whether syncoid keeps the snapshots on the target. Anyhow, I would recommend zrepl for your tasks. It's the tool used by nearly everyone who does ZFS replication. We use it extensively for many hub-and-spoke sync architectures.

-

@rfx77 Thanks for the recommendations. I looked into zrepl and it seems like a good solution as well. However, since I'm using this new ZFS beta driver in production, I've decided to do the backup at the VM filesystem level, i.e. with rsync, instead of at the ZFS level. I figure that strategy is slightly safer in the event of bugs in the driver. I know that's debatable, since it would depend on the bug, but this approach feels safer to me.

-

Hello @olivierlambert,

I am joining this topic as I have a few questions about SMAPIv3:

- Will it allow provisioning of VDIs larger than 2 TB?
- Will it enable thin provisioning on iSCSI SRs?

Currently, the blockers I encounter are related to my iSCSI storage. This is a major differentiating factor compared to other vendors, and resolving these blockers would significantly increase your market share.

Thanks!

-