PCIe NIC passing through - dropped packets, no communication

-

I'm looking for help setting up PCIe NIC passthrough to a VM. I've tried several Linux distributions on clean XCP-NG 8.2 and 8.3 installs, with different 10GbE network cards (Intel/Emulex/Mellanox).

I've used Supermicro and Gigabyte server-class motherboards with Xeon and Ryzen CPUs.

The outcome is the same in every case. The device is passed through to the VM and appears correctly, and apart from some MSI and MSI-X interrupt-related errors displayed during boot, it seems to be operational in the guest OS.

The main problem is that I can't communicate through those interfaces.

The MAC addresses are visible on the switch, but all RX packets are dropped (at least, that is what the interface counters in the guest OS report). Additionally, even though I hid the NICs from dom0, the bridge interfaces associated with those passthrough interfaces are still operational, and their MAC addresses are also visible on the switch side.

Can someone help me here?

Thanks in advance for sharing some of your wisdom.

-

@icompit Are you trying to pass this card through to the VM so there is a physical card assigned to this VM?

-

From the beginning:

- I have a bare-metal system with 2 NICs on the motherboard and 2 NICs installed in PCIe slots.

- I have installed XCP-NG on this hardware, and my goal is to use the 2 motherboard NICs for the hypervisor and pass the 2 PCIe NICs through to a VM.

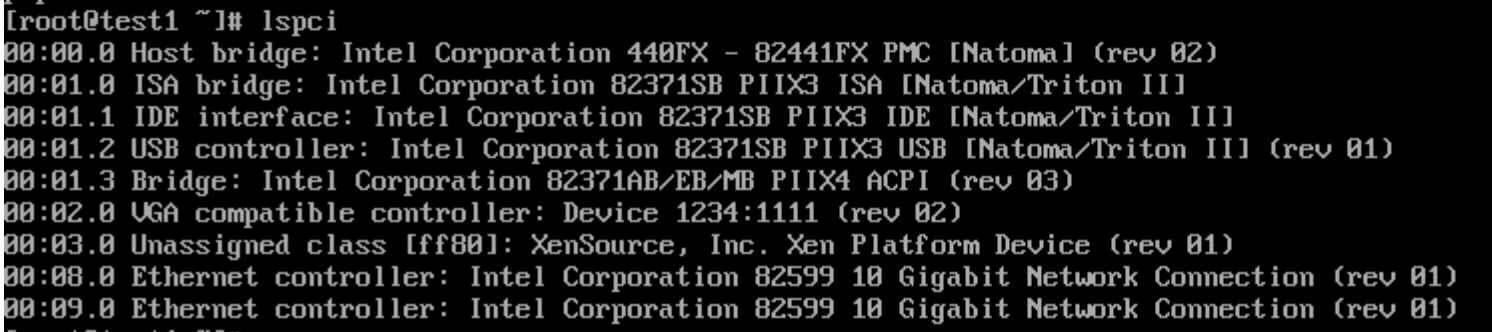

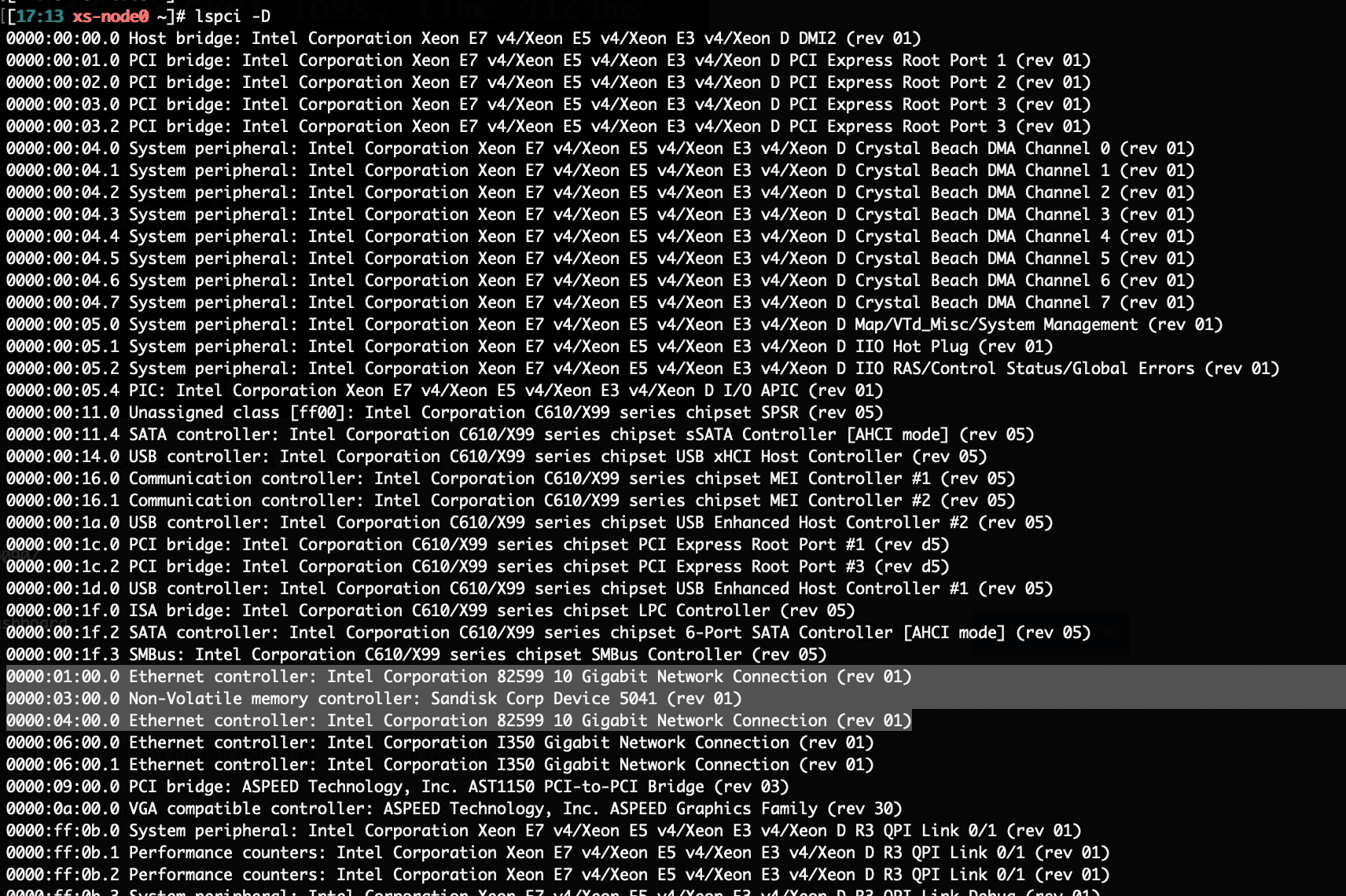

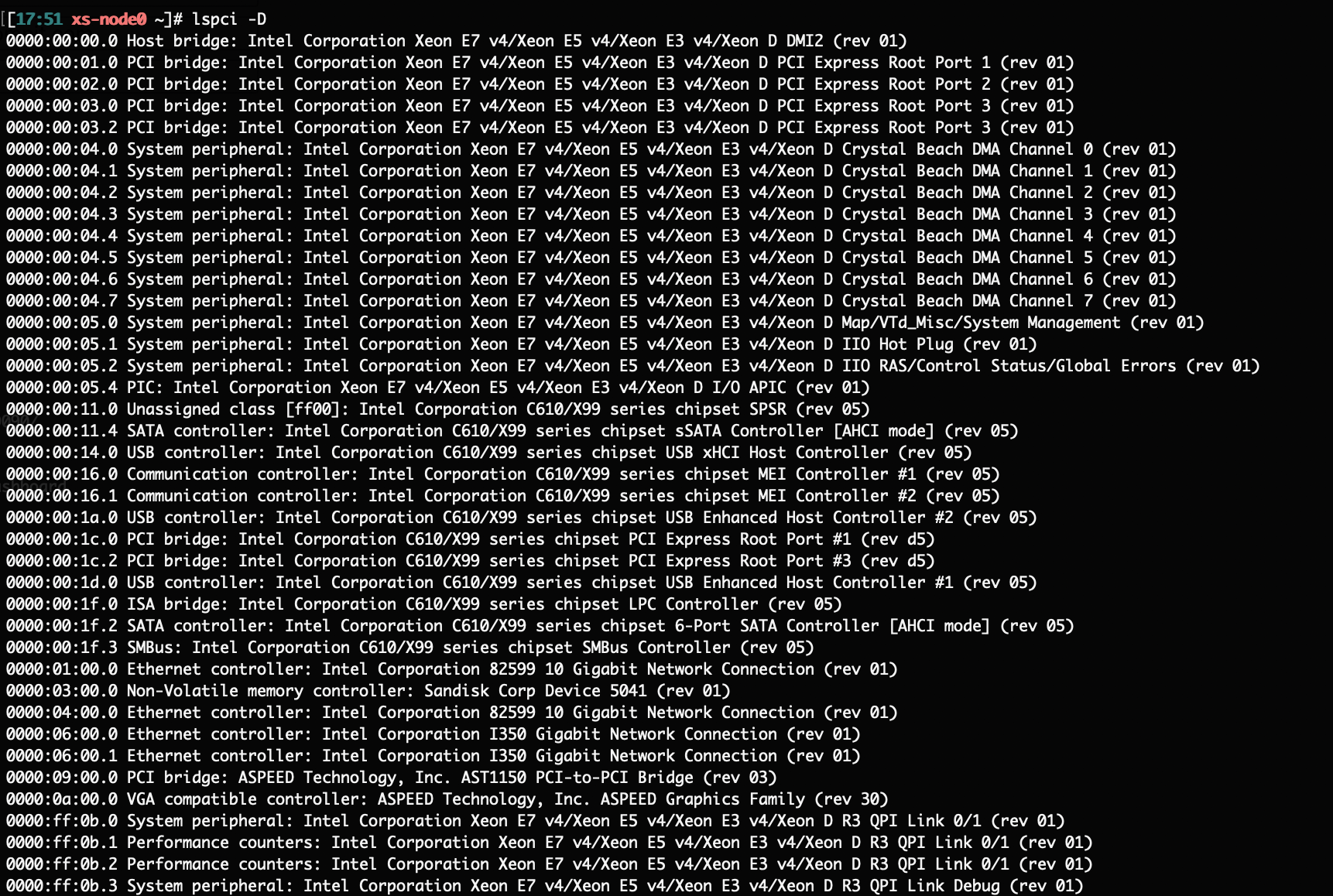

In the XCP-NG console, I use lspci -D to find the devices I need to pass through:

0000:01:00.0 Ethernet controller: Intel Corporation 82599 10 Gigabit Network Connection (rev 01)

0000:03:00.0 Non-Volatile memory controller: Sandisk Corp Device 5041 (rev 01)

0000:04:00.0 Ethernet controller: Intel Corporation 82599 10 Gigabit Network Connection (rev 01)

0000:06:00.0 Ethernet controller: Intel Corporation I350 Gigabit Network Connection (rev 01)

0000:06:00.1 Ethernet controller: Intel Corporation I350 Gigabit Network Connection (rev 01)

and with the command:

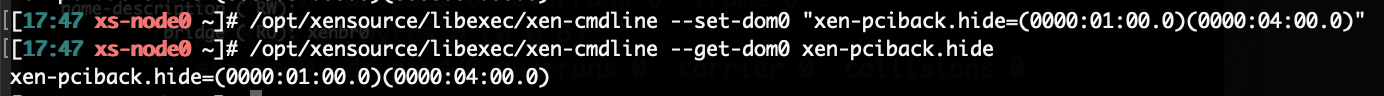

/opt/xensource/libexec/xen-cmdline --set-dom0 "xen-pciback.hide=(0000:01:00.0)(0000:04:00.0)"

I prepare them for passthrough.
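As a sketch of the two steps above, the following Python 3 helper picks the 82599 ports out of the lspci -D listing and builds the xen-pciback.hide=(...)(...) value used in the xen-cmdline call. The keyword filter and the function names are illustrative assumptions; the helper only builds the string and does not call xen-cmdline itself.

```python
import re

# Matches one line of lspci -D output, e.g.
# "0000:01:00.0 Ethernet controller: Intel Corporation 82599 ..."
LSPCI_LINE = re.compile(r"^([0-9a-fA-F]{4}:[0-9a-fA-F]{2}:[0-9a-fA-F]{2}\.[0-7])\s+(.*)$")


def find_devices(lspci_output, keyword):
    """Return the PCI addresses (domain:bus:device.function) whose description contains keyword."""
    found = []
    for line in lspci_output.splitlines():
        match = LSPCI_LINE.match(line.strip())
        if match and keyword in match.group(2):
            found.append(match.group(1))
    return found


def pciback_hide_value(addresses):
    """Build the xen-pciback.hide=(...)(...) dom0 parameter for the given addresses."""
    return "xen-pciback.hide=" + "".join("({})".format(addr) for addr in addresses)


if __name__ == "__main__":
    # Listing copied from the post above.
    sample = """\
0000:01:00.0 Ethernet controller: Intel Corporation 82599 10 Gigabit Network Connection (rev 01)
0000:03:00.0 Non-Volatile memory controller: Sandisk Corp Device 5041 (rev 01)
0000:04:00.0 Ethernet controller: Intel Corporation 82599 10 Gigabit Network Connection (rev 01)
0000:06:00.0 Ethernet controller: Intel Corporation I350 Gigabit Network Connection (rev 01)
0000:06:00.1 Ethernet controller: Intel Corporation I350 Gigabit Network Connection (rev 01)
"""
    print(pciback_hide_value(find_devices(sample, "82599")))
```

With the listing from this post it prints xen-pciback.hide=(0000:01:00.0)(0000:04:00.0), i.e. the same value passed to --set-dom0 above.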

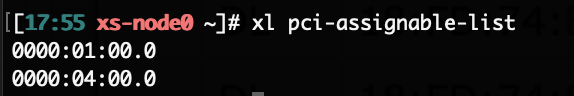

After a reboot, I check whether the devices are ready:

[00:12 xs-node0 ~]# xl pci-assignable-list

0000:01:00.0

0000:04:00.0

So from this side, everything looks OK.
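The check above can be scripted. A minimal Python sketch, assuming xl pci-assignable-list prints one PCI address per line as in this post (only the first token of each line is used, in case a Xen version prints extra fields); the function name is hypothetical.

```python
def missing_assignable(assignable_output, expected):
    """Return the expected PCI addresses that xl pci-assignable-list did NOT report."""
    # Keep only the first token of each non-empty line.
    listed = {line.split()[0] for line in assignable_output.splitlines() if line.strip()}
    return [addr for addr in expected if addr not in listed]


if __name__ == "__main__":
    # Output copied from the post above.
    output = "0000:01:00.0\n0000:04:00.0\n"
    missing = missing_assignable(output, ["0000:01:00.0", "0000:04:00.0"])
    print("all devices assignable" if not missing else "not assignable: " + ", ".join(missing))
```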

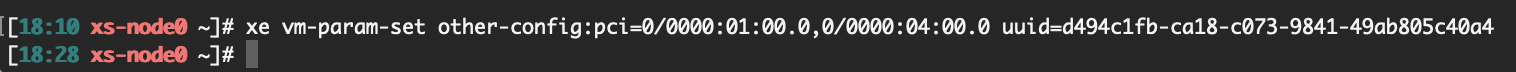

Now I add those devices to the VM with the command:

xe vm-param-set other-config:pci=0/0000:01:00.0,0/0000:04:00.0 uuid=xxxx
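For more than a couple of devices, the other-config:pci value can be generated rather than typed. This Python sketch only reproduces the format of the command above; the 0/ prefix is copied as-is from it and other prefix values are not covered here. The function names are hypothetical, and xxxx stands for the VM UUID placeholder from the post.

```python
def other_config_pci(addresses, prefix="0"):
    """Build the other-config:pci value in the form used above: 0/<addr>,0/<addr>."""
    return ",".join("{}/{}".format(prefix, addr) for addr in addresses)


def vm_param_set_args(vm_uuid, addresses):
    """Return the xe argument list for assigning the devices to a VM (not executed here)."""
    return ["xe", "vm-param-set",
            "other-config:pci=" + other_config_pci(addresses),
            "uuid=" + vm_uuid]


if __name__ == "__main__":
    args = vm_param_set_args("xxxx", ["0000:01:00.0", "0000:04:00.0"])
    # Running it for real in dom0 would be subprocess.run(args, check=True).
    print(" ".join(args))
```

It prints the same command line as above: xe vm-param-set other-config:pci=0/0000:01:00.0,0/0000:04:00.0 uuid=xxxx.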

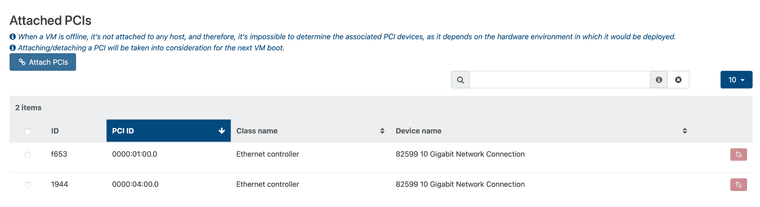

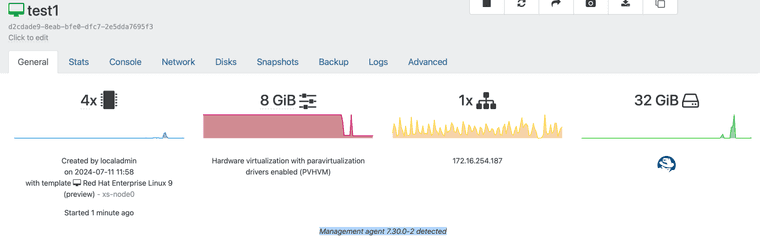

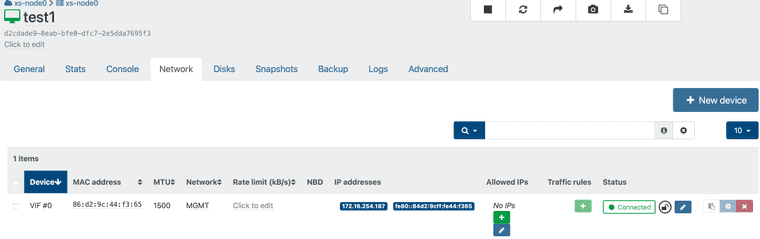

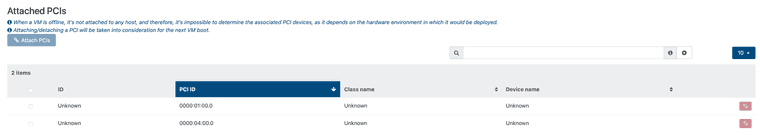

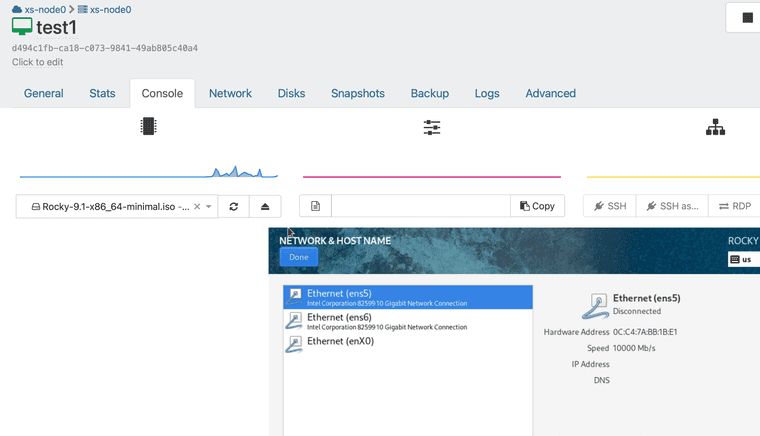

In XO, I can see that both cards are configured for the VM:

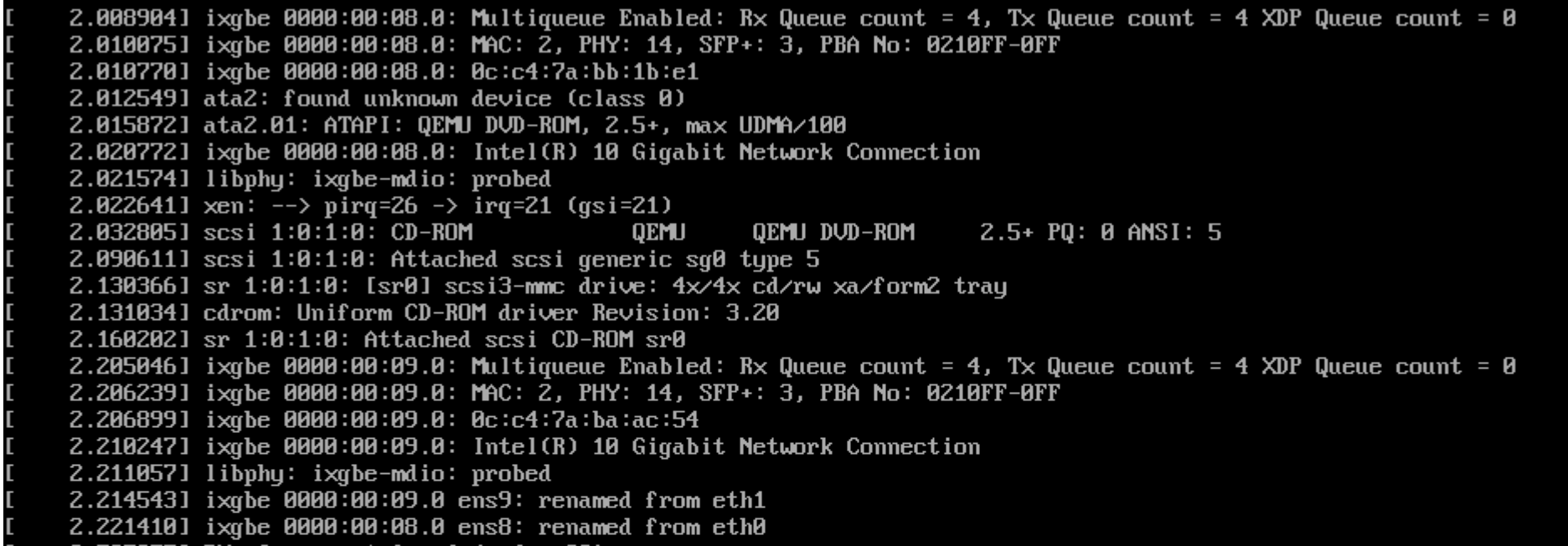

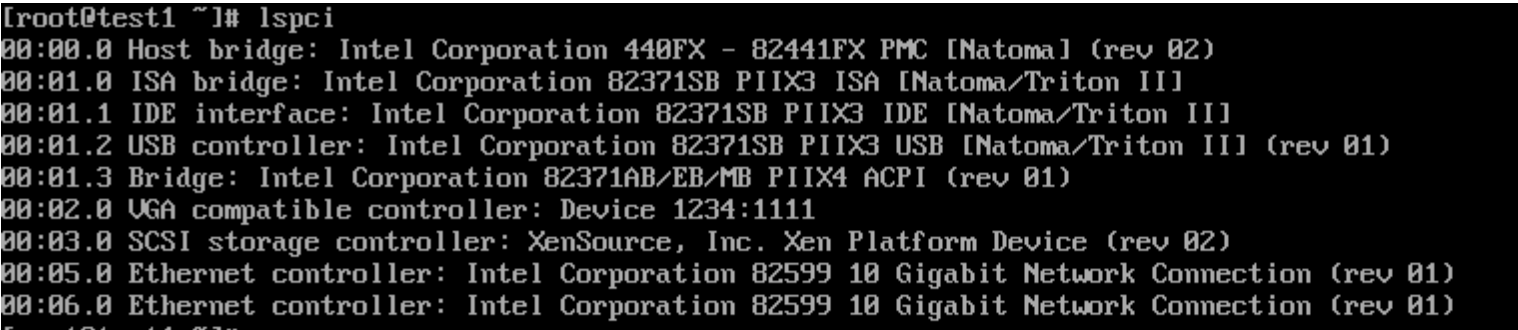

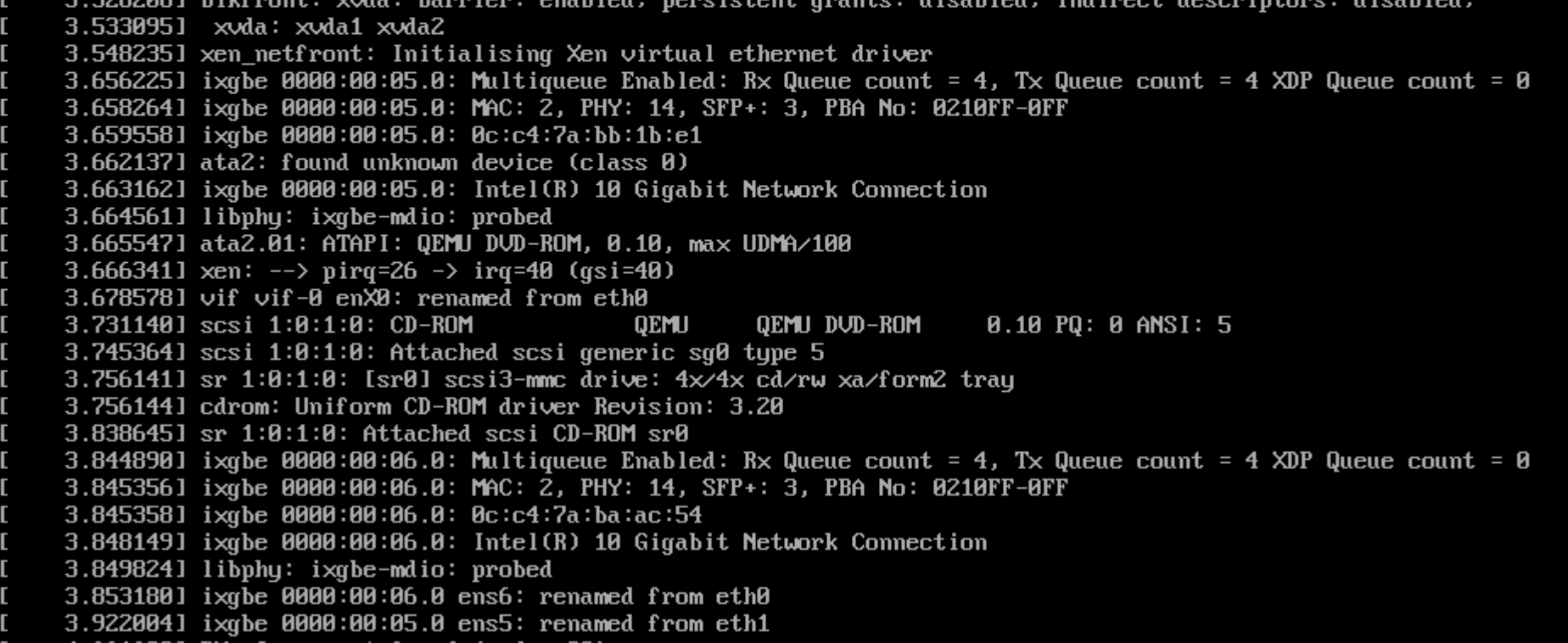

and when I start the VM, I can see the cards:

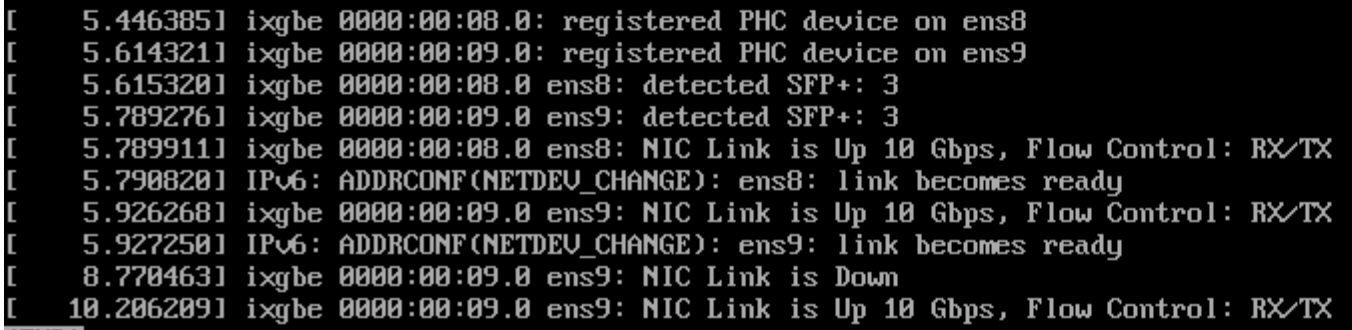

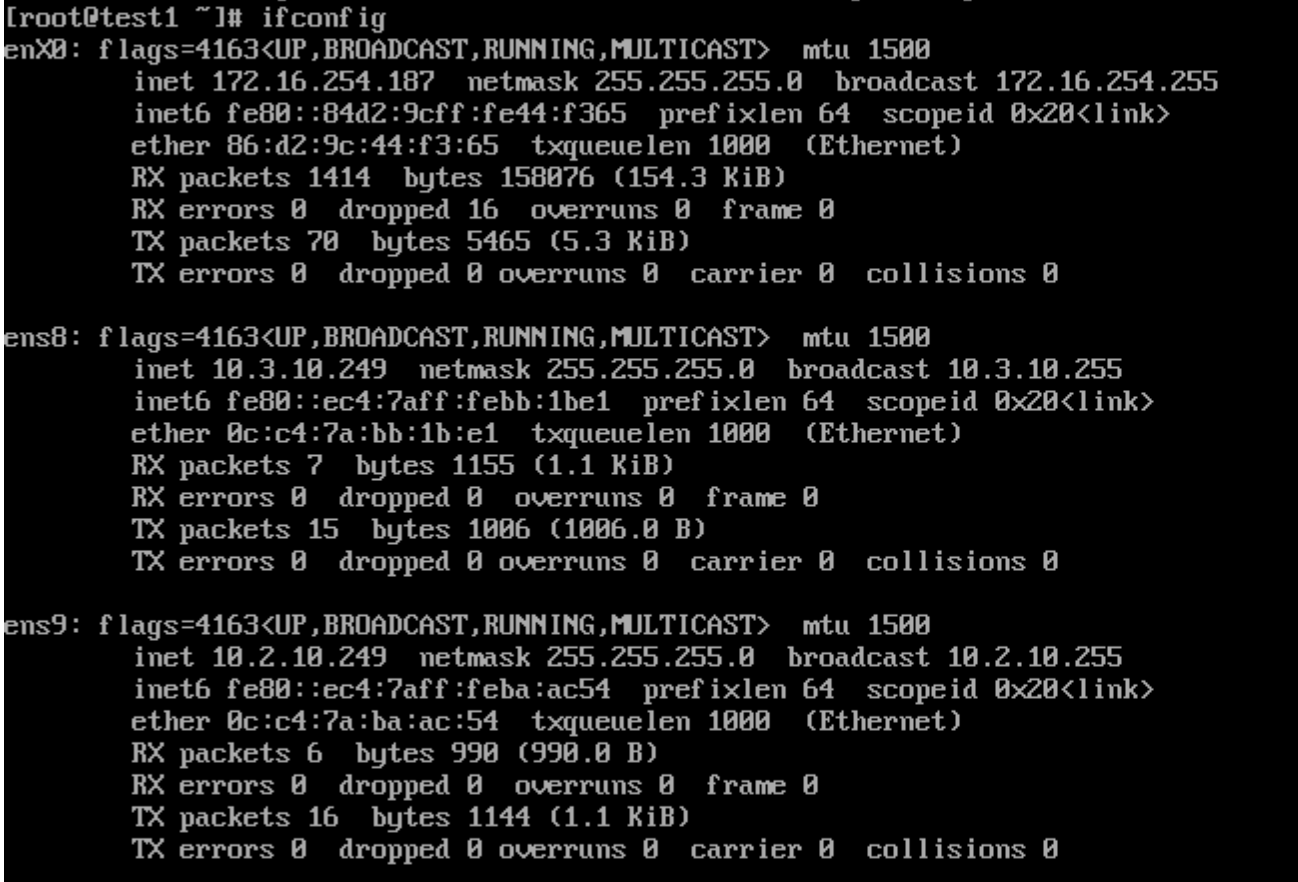

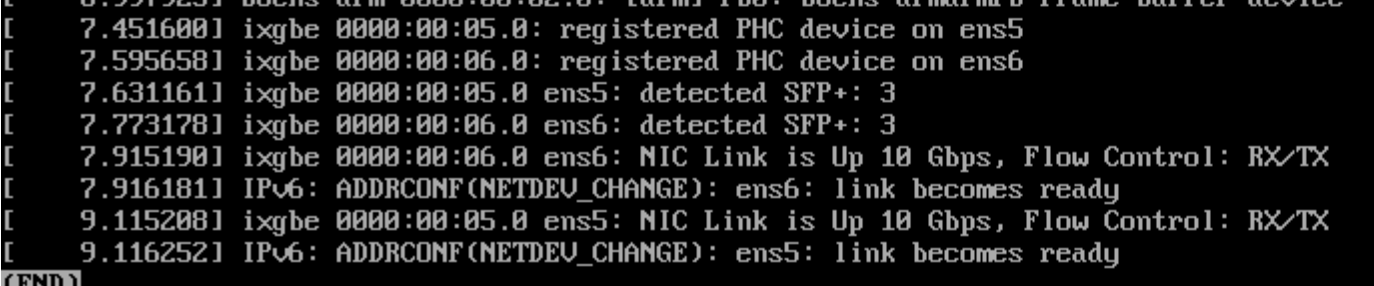

Both links are UP, and at this point everything looks OK.

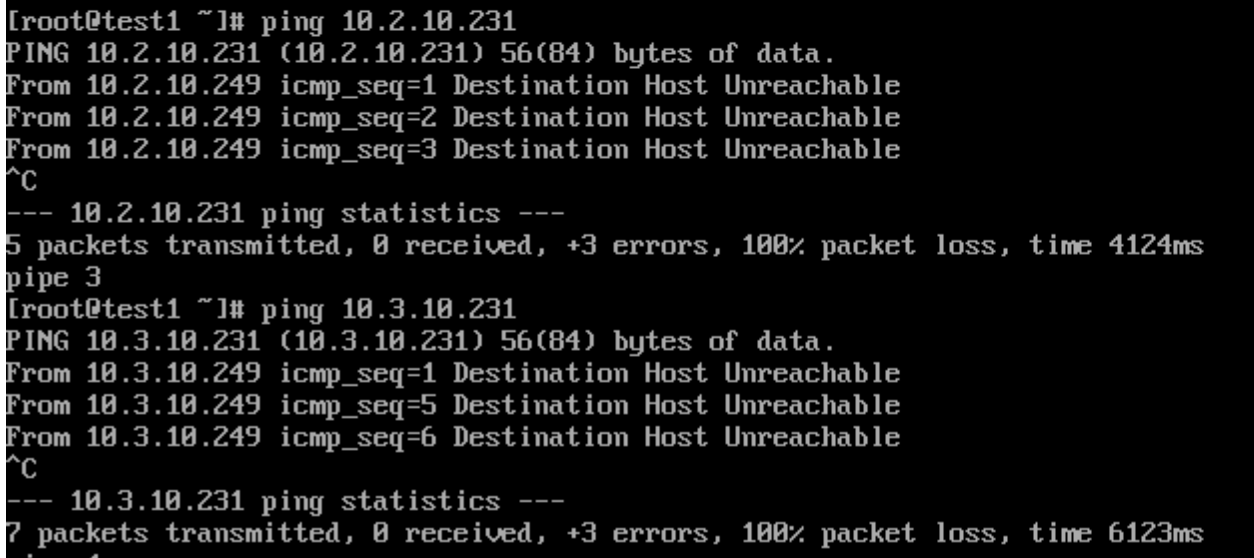

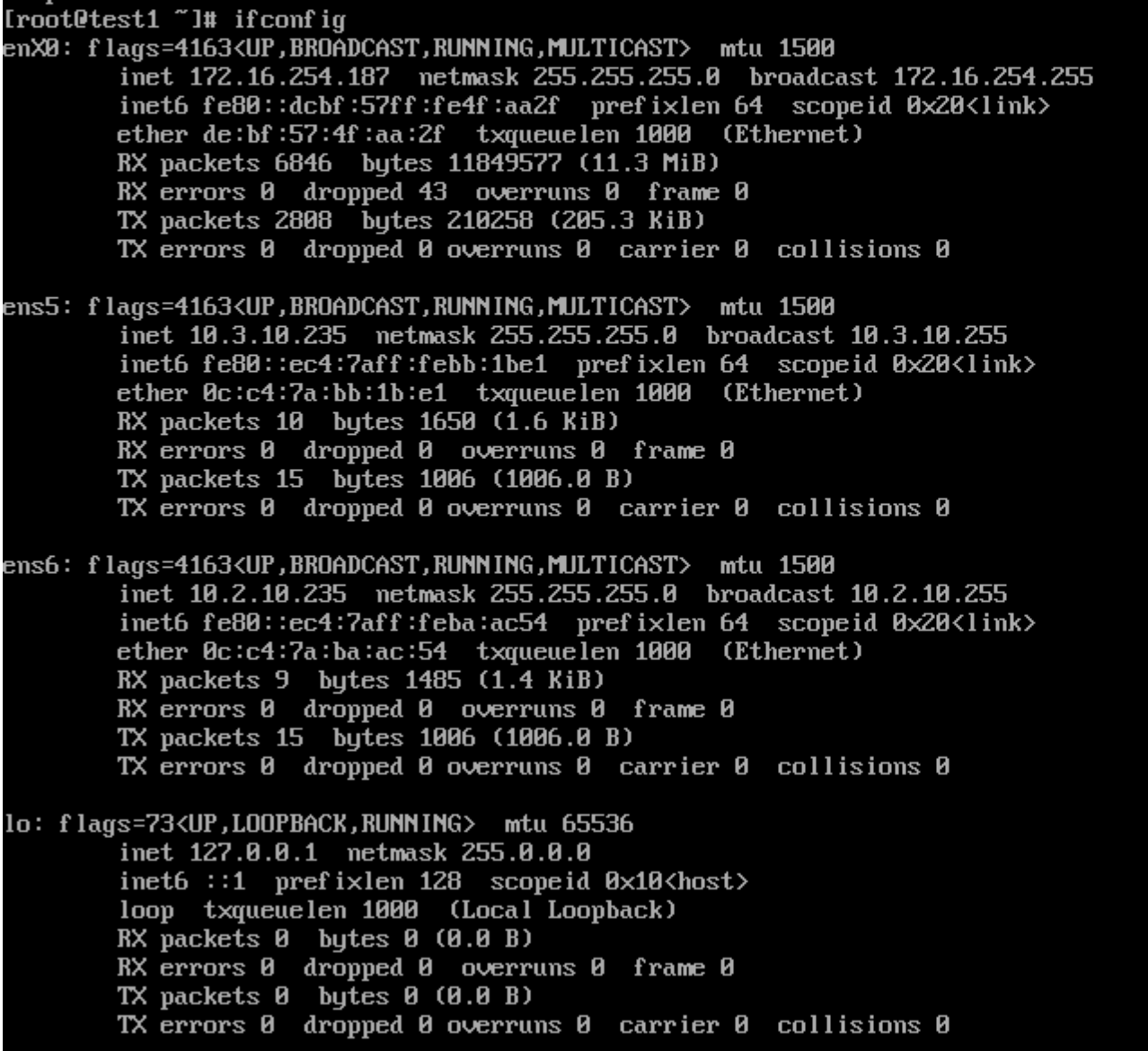

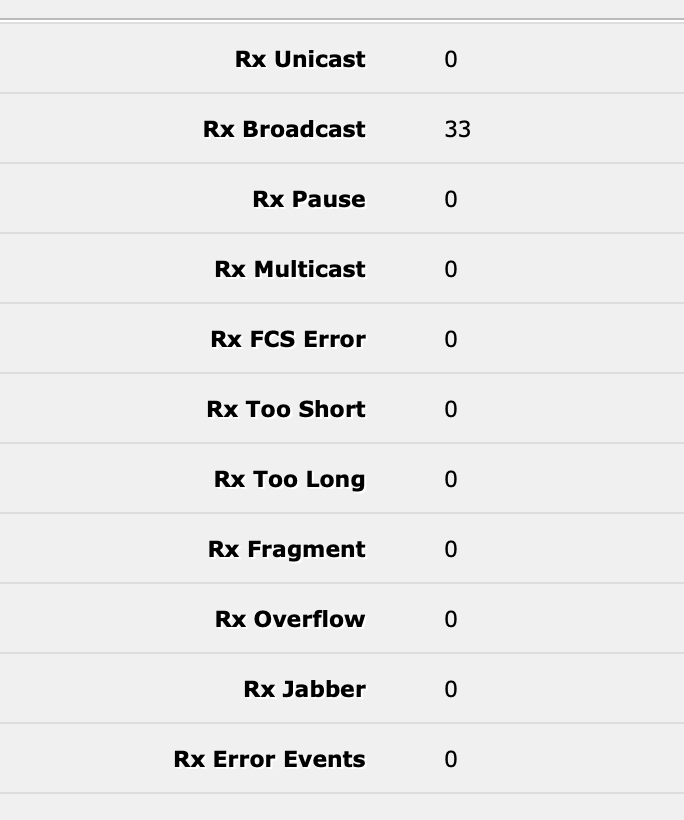

Unfortunately, when I try to ping a host on the same network the cards are connected to, I get:

When I ping, the TX counter on the VM interface increases, but the packets don't seem to leave the box, because the RX counter on the switch side doesn't increase.
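To make the TX/RX observation repeatable inside the guest, one option is to snapshot the standard Linux counters under /sys/class/net/&lt;iface&gt;/statistics/ before and after a ping run. This is a generic Linux sketch, not an XCP-NG tool; the interface name is a placeholder, and the switch-side counters still have to be read on the switch.

```python
import os

# Standard Linux per-interface counters exposed under /sys/class/net/<iface>/statistics/
COUNTERS = ("rx_packets", "rx_dropped", "tx_packets")


def read_counters(iface, sysfs_root="/sys/class/net"):
    """Read selected packet counters for one interface from sysfs."""
    stats_dir = os.path.join(sysfs_root, iface, "statistics")
    values = {}
    for name in COUNTERS:
        with open(os.path.join(stats_dir, name)) as handle:
            values[name] = int(handle.read().strip())
    return values


def counter_deltas(before, after):
    """Return after - before for every counter present in both snapshots."""
    return {name: after[name] - before[name] for name in before if name in after}
```

For example, take before = read_counters("eth1"), let the ping run for a few seconds, then print counter_deltas(before, read_counters("eth1")); "eth1" is a hypothetical name for the passed-through port in the guest. A growing tx_packets with rx_packets stuck at zero would match the symptom described here.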

I should add that management agent 7.30.0-2 is installed on the VM.

The third interface configured on this VM works OK.

-

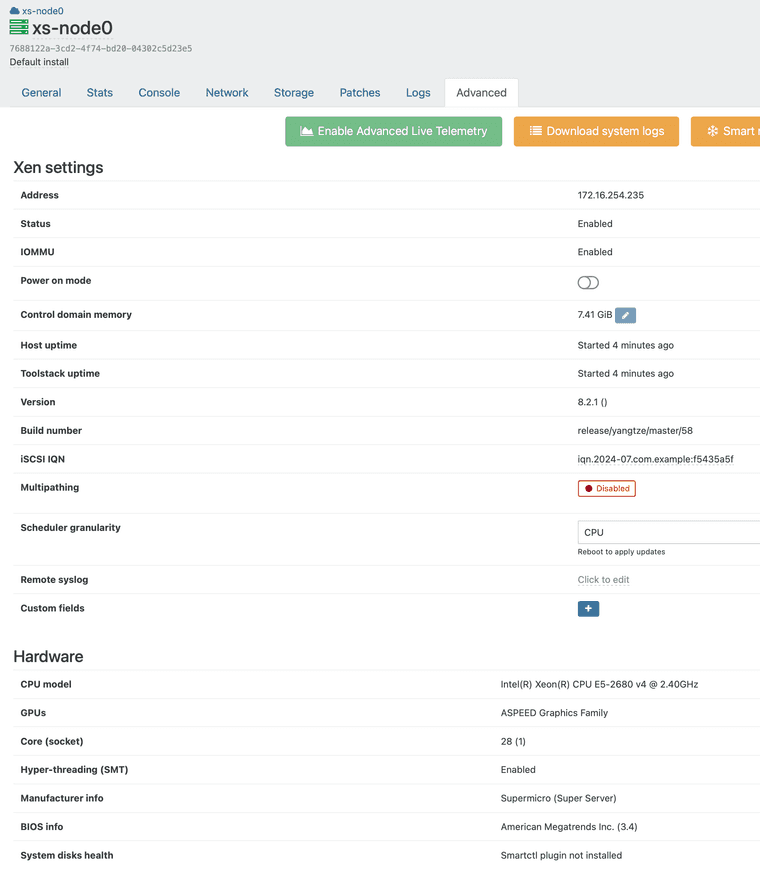

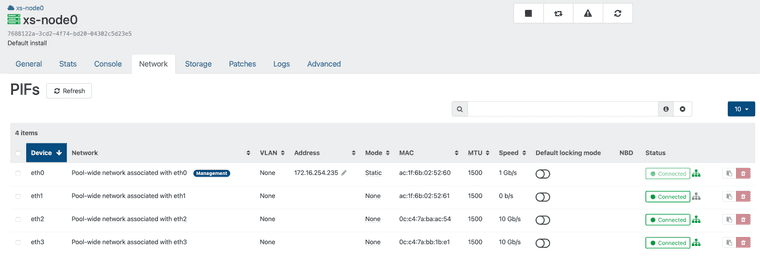

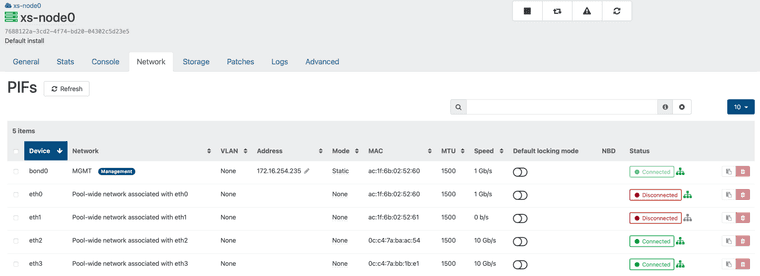

I've done a clean install of XCP-NG 8.2.1 again and applied all patches available to date, to collect all the details.

After installation:

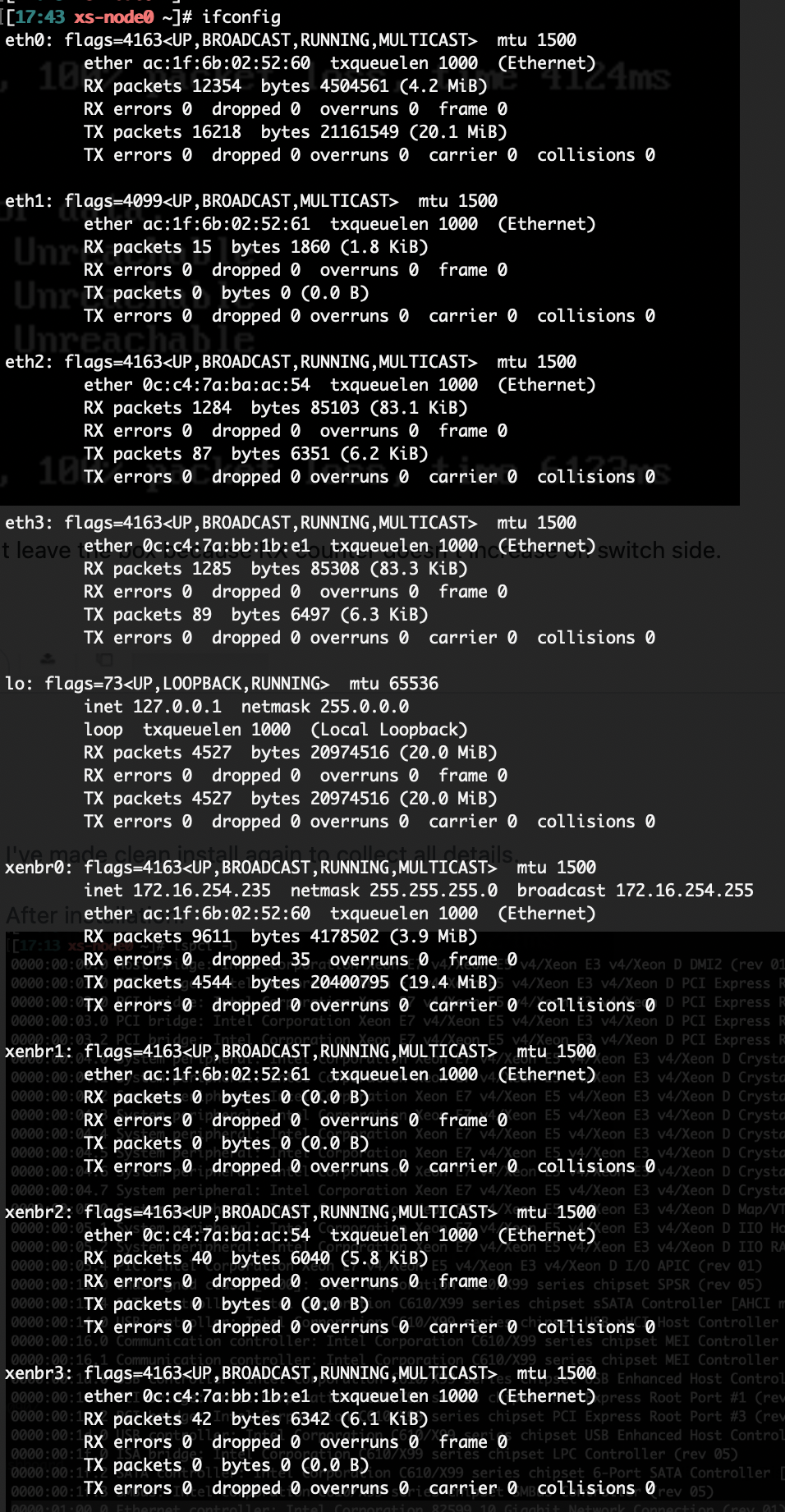

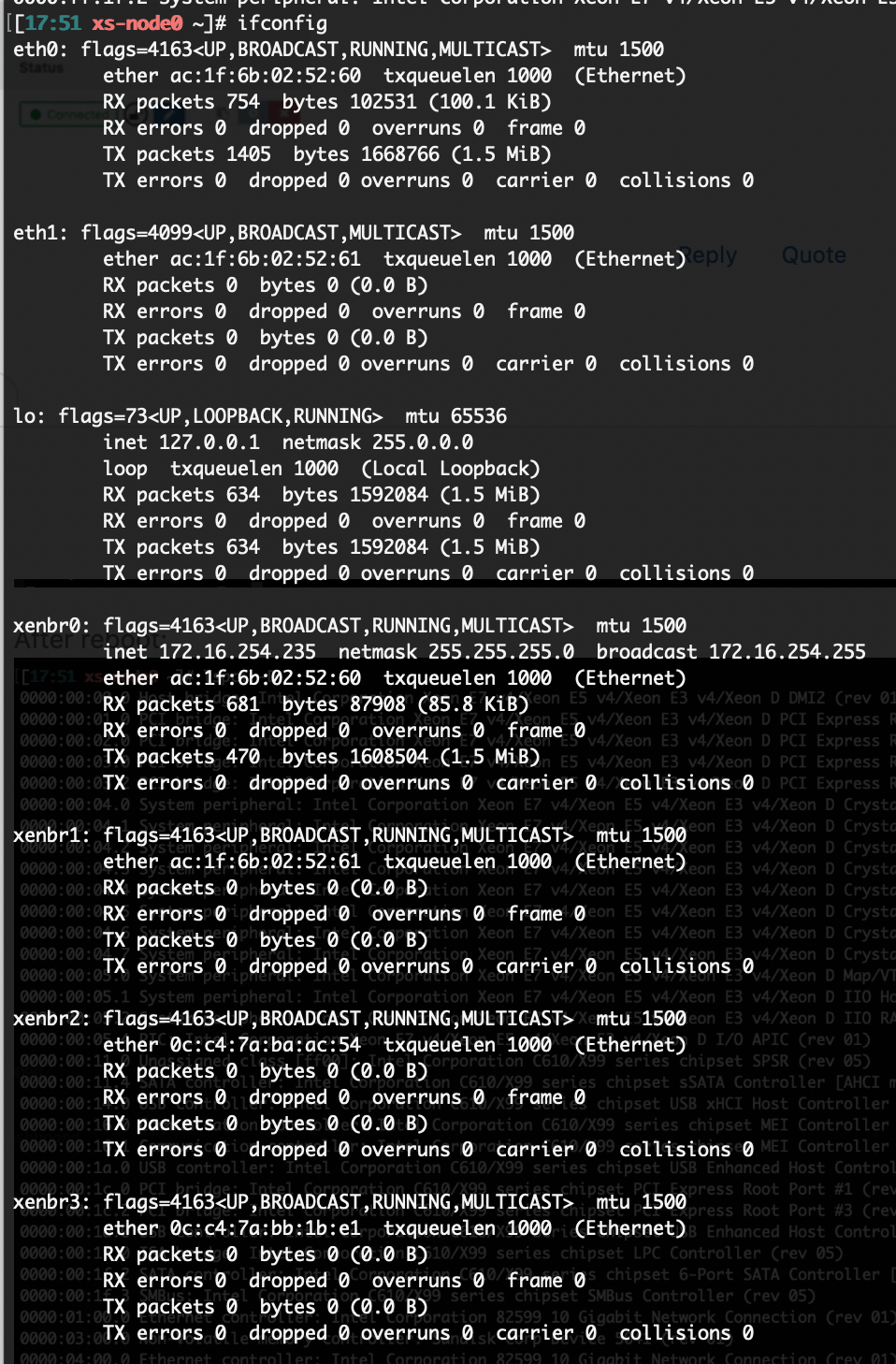

After reboot:

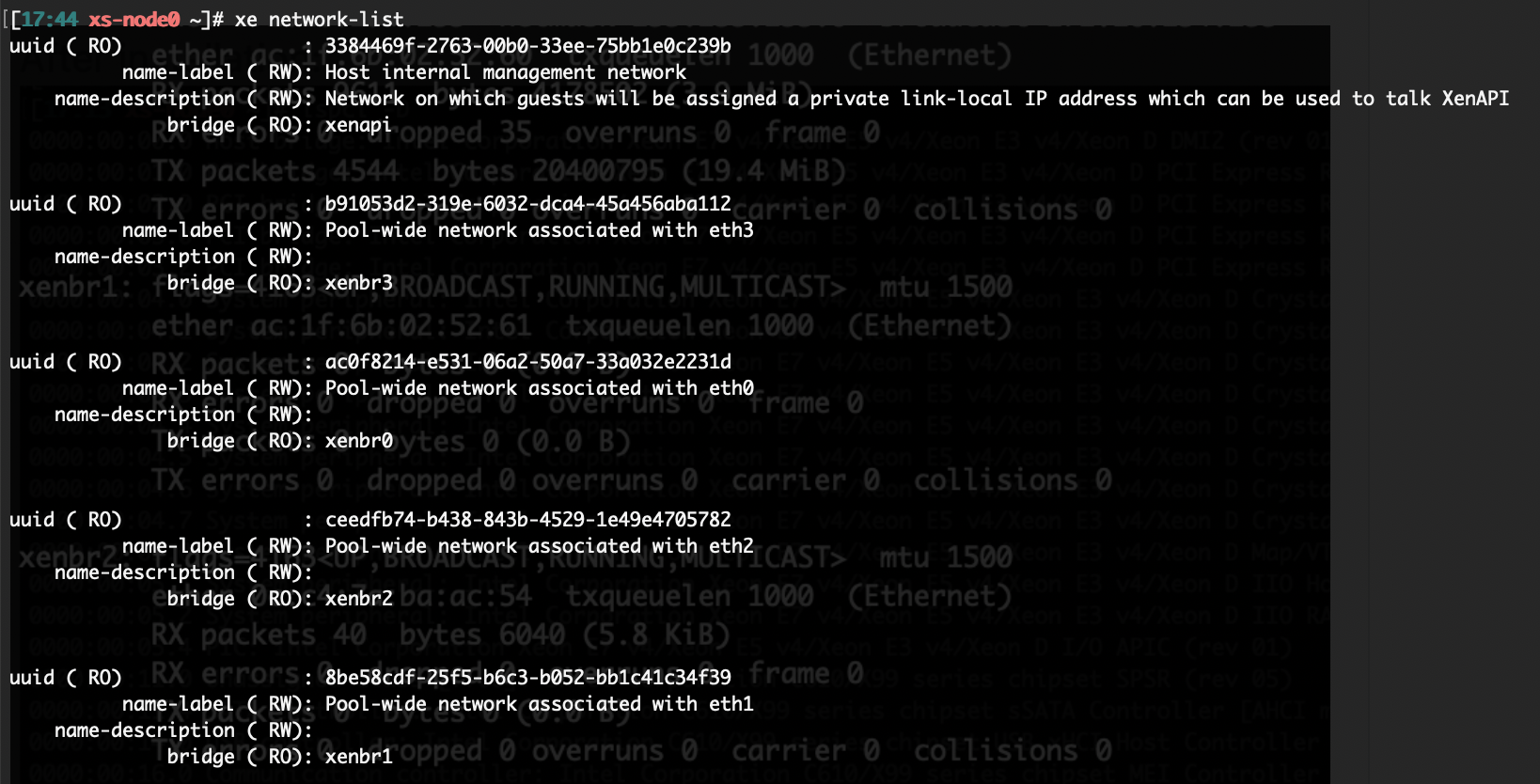

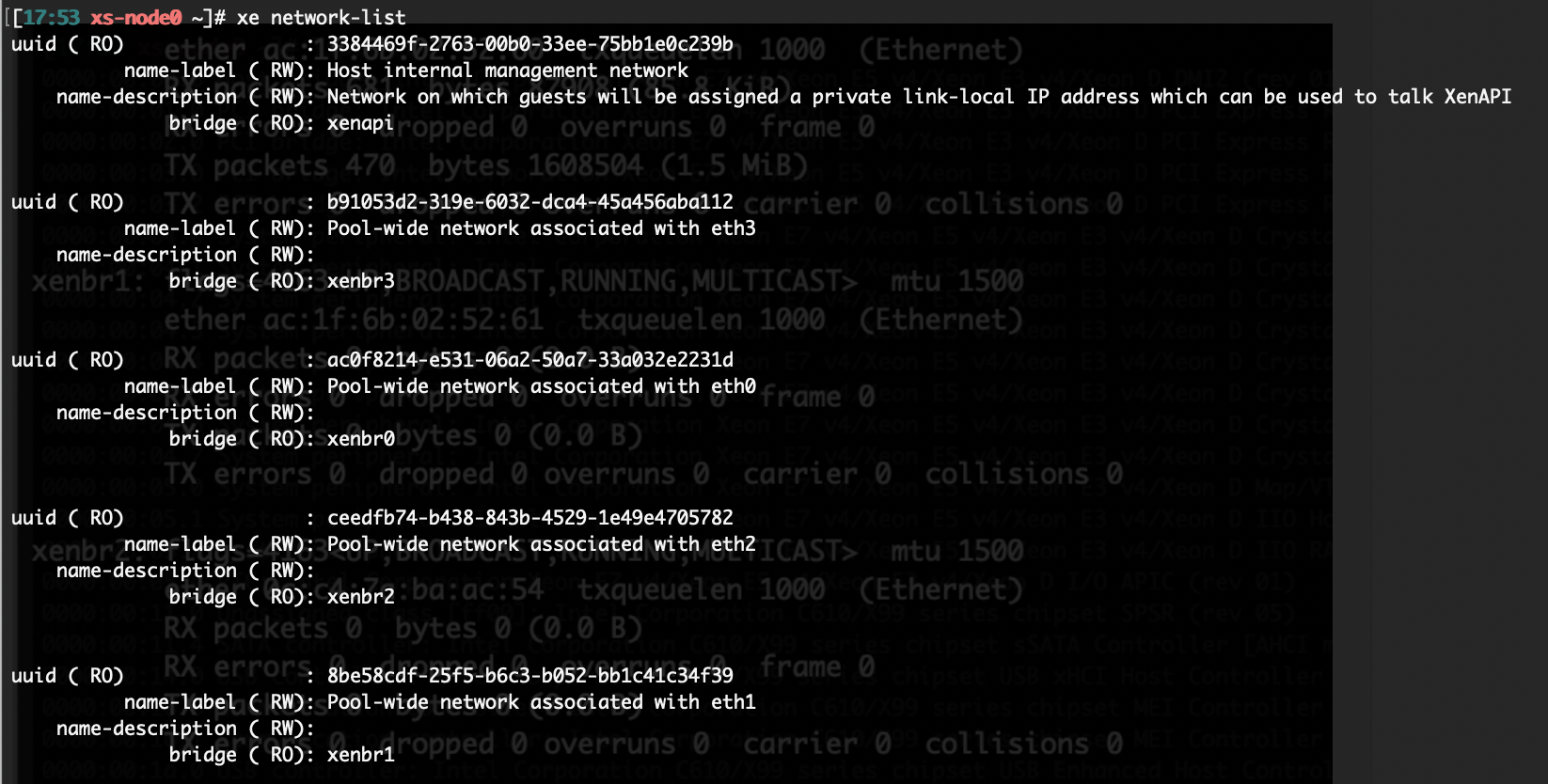

The NICs are hidden from dom0: the interfaces eth2 and eth3 are not visible, but xenbr2 and xenbr3 are still there and operational.

This seems a bit strange to me, as I thought that with those interfaces absent, both xenbr interfaces should at least be down, with no operational connection.

I see the MAC addresses of those interfaces on the switch side.
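The state of those bridges, and the absence of eth2/eth3, can be checked from dom0 by reading /sys/class/net/&lt;name&gt;/operstate. This is a generic Linux sysfs sketch under that assumption, not an XCP-NG command; note that virtual interfaces such as Open vSwitch bridge ports may report "unknown" rather than "up". The function names are hypothetical.

```python
import os


def interface_states(sysfs_root="/sys/class/net"):
    """Map each network interface name to its operstate (e.g. 'up', 'down', 'unknown')."""
    states = {}
    for name in sorted(os.listdir(sysfs_root)):
        path = os.path.join(sysfs_root, name, "operstate")
        if os.path.exists(path):
            with open(path) as handle:
                states[name] = handle.read().strip()
    return states


def report(states, names):
    """Give the operstate of each interface of interest, or 'absent' if it does not exist."""
    return {name: states.get(name, "absent") for name in names}
```

In dom0, print(report(interface_states(), ["eth2", "eth3", "xenbr2", "xenbr3"])) would show at a glance which of the four interfaces exist and in what state.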

Anyway, both interfaces are available for passthrough.

I bonded eth1 and eth2 before setting up the VM that is the target for NIC passthrough.

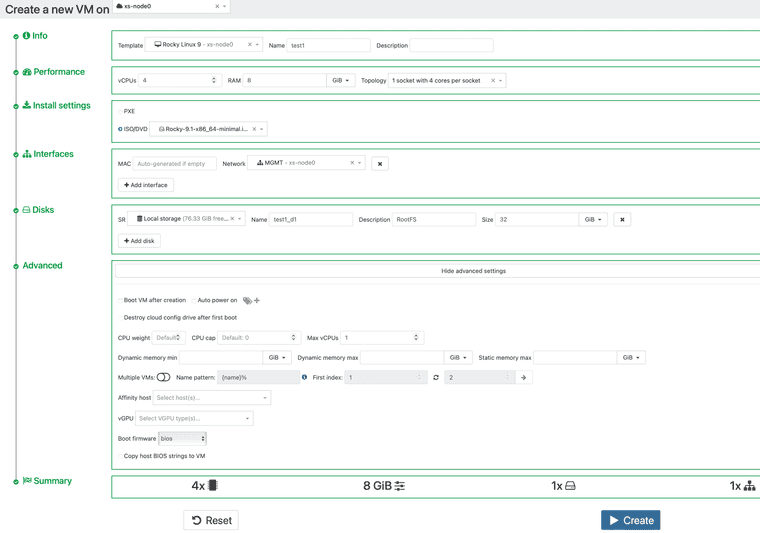

VM installation:

Before starting the VM and proceeding with the installation, I add those cards.

In XO, I see that the NICs are assigned to the VM.

During installation, the cards are available:

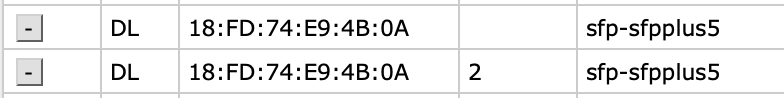

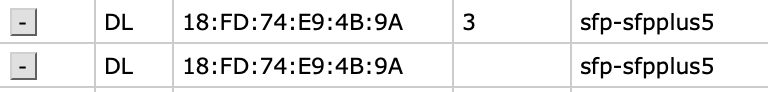

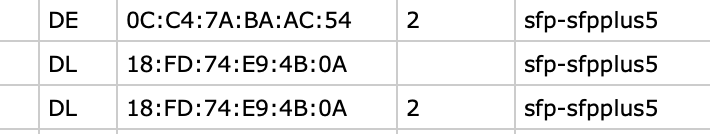

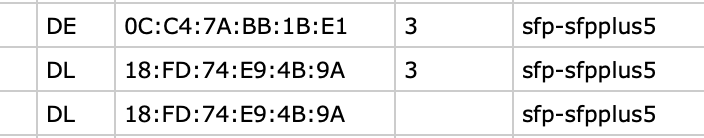

It is worth mentioning, though, that on the switch side, apart from the cards' MAC addresses, I also start to see the MAC addresses of the xenbr2 and xenbr3 interfaces.

After starting the VM:

When I start pinging a host on the network connected to the passthrough NIC, on the switch side I can see only:

No packets are received at the VM level; the counters don't increase.

Is there something I'm missing in the hypervisor configuration?

-

Problem solved by adding a kernel parameter with the command:

/opt/xensource/libexec/xen-cmdline --set-dom0 "pci=realloc=on"
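To confirm after a reboot that the parameter actually reached the dom0 kernel, one can look for it in /proc/cmdline. A minimal Python sketch, assuming the Linux convention that pci= takes comma-separated options, that realloc alone means realloc=on, and that a later realloc setting overrides an earlier one; the function names are hypothetical.

```python
def pci_options(cmdline):
    """Collect the comma-separated options of every pci= parameter on a kernel command line."""
    options = []
    for token in cmdline.split():
        key, sep, value = token.partition("=")
        if key == "pci" and sep:
            options.extend(value.split(","))
    return options


def realloc_enabled(cmdline):
    """True if the last realloc setting among the pci= options turns reallocation on."""
    enabled = False
    for opt in pci_options(cmdline):
        if opt in ("realloc", "realloc=on"):
            enabled = True
        elif opt == "realloc=off":
            enabled = False
    return enabled


if __name__ == "__main__":
    # Illustrative command line, not taken from the thread; in dom0 the real
    # input would be the contents of /proc/cmdline.
    print(realloc_enabled("root=LABEL=root-abc ro pci=realloc=on quiet"))
```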

-

@icompit said in PCIe NIC passing through - dropped packets, no communication:

--set-dom0 "pci=realloc=on"

Did you have a message in xl dmesg that put you on the right track? I'm curious about this workaround; it's the first time I've heard of it. Nice catch!

-

No, the logs from the hypervisor and from dom0 didn't lead me to this.

The guest OS generated some log messages while trying to use those NICs, and searching for them online led me to a RHEL support case where adding this kernel parameter was a confirmed solution. There was also a thread on the XCP-NG forum that mentioned this option for a passthrough device, but if I recall correctly it was about a GPU.

Anyway, this fixed it for the Intel NICs.

Still no luck with the Marvell FastLinQ NICs, but there the problem seems more related to the driver included in the guest OS kernel. I'm still trying to solve it.

I haven't tested this with the Mellanox cards yet.

I'm trying to consolidate my lab environment as much as I can to save on electricity; prices have gone crazy lately.

I'm trying to use StarWind VSAN (free license for 3 nodes and HA storage), and for that I needed to pass those NICs, together with a SATA controller and an NVMe SSD, through to the VM. Servers are so powerful today that even a single-socket server can handle quite a lot.

Today I did some tests with two LUNs presented over multipath iSCSI, and this may work quite nicely.

One StarWind VSAN instance runs on one XCP-NG node and another on the second node, communicating over dedicated NICs, with HA and replication traffic on interfaces connected directly between the StarWind nodes, without a switch.

I don't need much in terms of computing power and storage space. It might be a perfect solution for two-node cluster setups, which may fit many small customers or labs like mine.

Of course, this requires more testing, but my lab is the perfect place to experiment.

-

I quickly discussed this with the Xen developers, and they are curious about your issue. Can you run lspci -vvvv in your dom0, once while you still have the pci=realloc option and once without it, so we can see whether there is any difference regarding your NIC? This might be helpful (you can provide both outputs at https://paste.vates.tech).
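Once both captures exist, the NIC's section can be compared with a short Python sketch built on the standard difflib module. It assumes both outputs were saved as text and that, as is usual for lspci -vvvv, device sections are separated by blank lines and start with the device address (with or without the PCI domain). The function names are hypothetical.

```python
import difflib


def device_block(lspci_verbose, address):
    """Return the lines of the lspci -vvvv section for one device, or [] if not found."""
    # lspci without -D omits the "0000:" domain prefix, so accept both forms.
    short = address.split(":", 1)[1] if address.count(":") == 2 else address
    for block in lspci_verbose.split("\n\n"):
        lines = block.strip("\n").splitlines()
        if lines and (lines[0].startswith(address + " ") or lines[0].startswith(short + " ")):
            return lines
    return []


def diff_device(with_realloc, without_realloc, address):
    """Unified diff of one device's section between the two captures."""
    return list(difflib.unified_diff(
        device_block(without_realloc, address),
        device_block(with_realloc, address),
        fromfile="without pci=realloc",
        tofile="with pci=realloc",
        lineterm="",
    ))
```

For example, print("\n".join(diff_device(with_text, without_text, "0000:01:00.0"))), where with_text and without_text hold the two saved outputs, would show only the lines of the 82599 port that changed, such as BAR addresses or MSI/MSI-X capability lines.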

