@KPS

Unfortunately, no. I just replicate, and I avoid live-migrating VMs to the host that is the replication target.

Latest posts made by icompit

-

RE: DUPLICATE_MAC_SEED

-

RE: VM backup retry - status failed despite it was done on second attempt

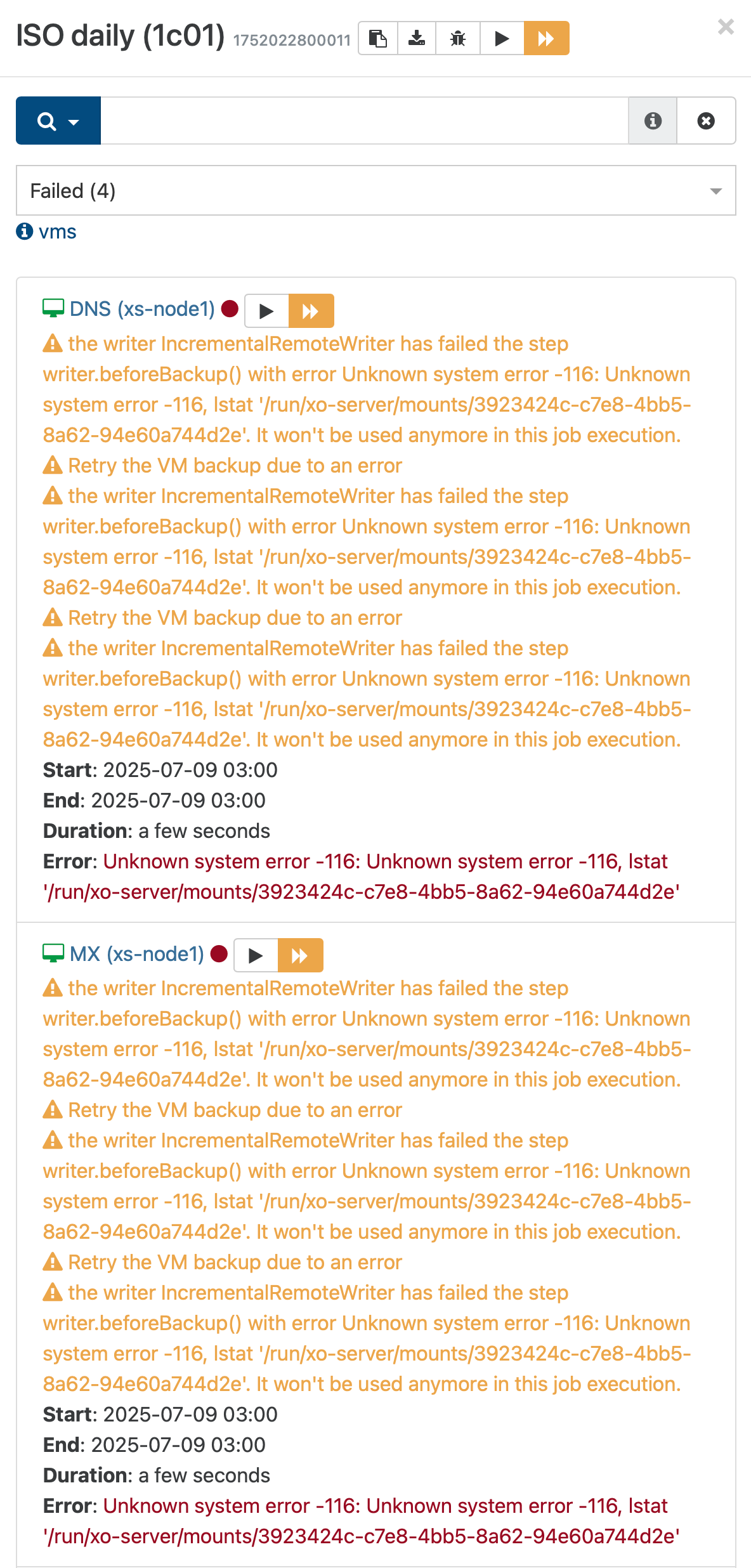

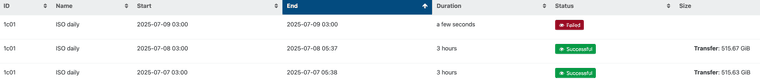

From this morning...

Yesterday everything was OK.

-

RE: VM backup retry - status failed despite it was done on second attempt

@lsouai-vates

Sure, I understand the issues might be related to changes in backup processing under the hood.

I hope my report will help identify the bugs.

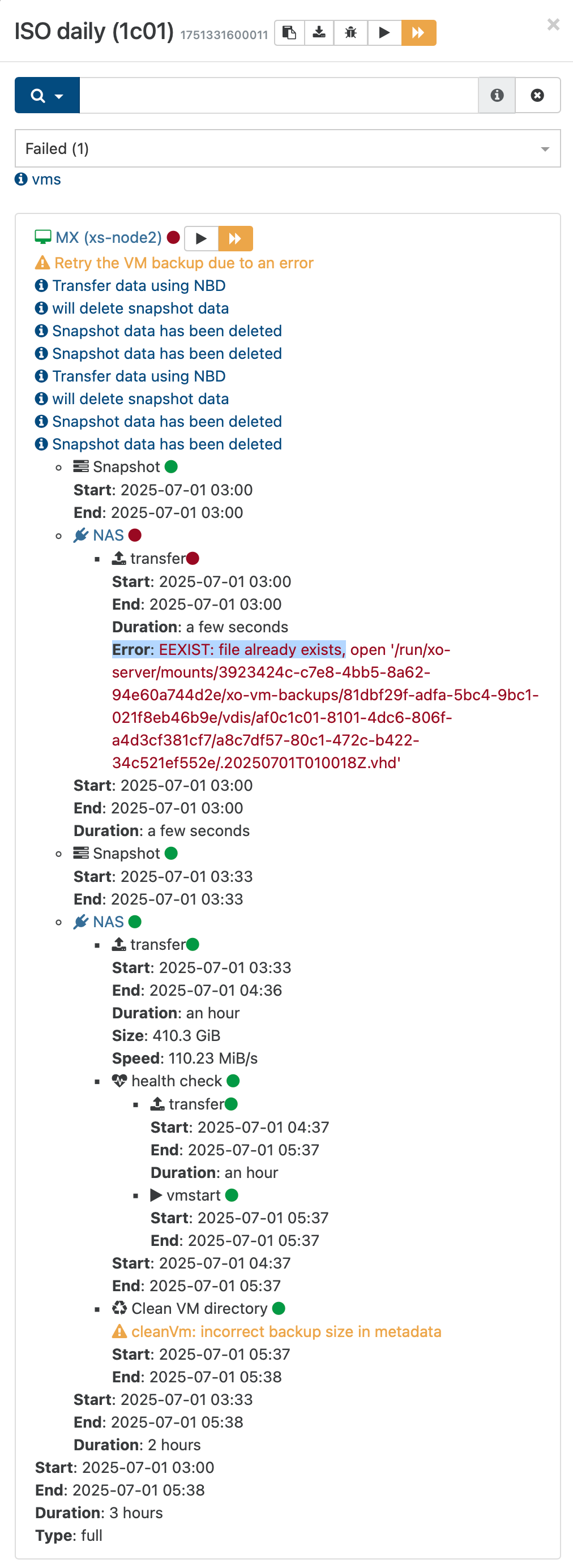

Is the EEXIST error also related to this?

-

VM backup retry - status failed despite it was done on second attempt

Hi,

I see that the changes to the backup engine are causing a lot of new errors.

- There are many "Error: EEXIST: file already exists" errors, which never happened in the past. Restarting the same backup usually just works.

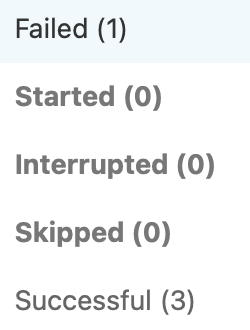

- Because of the first issue, I've added the "retry" option to each backup. Now, even when an error occurs and the second attempt succeeds, the overall status of the backup task is set to failed.
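To make the expectation in the second point concrete: when retries are enabled, the job's overall status would presumably follow the outcome of the last attempt rather than the first. The sketch below is a minimal illustration under that assumption only; the `Attempt` type and `overall_status` function are hypothetical names, not Xen Orchestra's actual code or API.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Attempt:
    # Hypothetical per-attempt record: "success" or "failure"
    status: str


def overall_status(attempts: List[Attempt]) -> str:
    """Hypothetical aggregation: with retries, the final attempt decides the outcome."""
    if not attempts:
        # Nothing has run yet
        return "pending"
    # An earlier failure that was followed by a successful retry is not fatal
    return attempts[-1].status
```

With this rule, a first attempt failing with EEXIST followed by a successful retry would be reported as `success`, which is what the job log in this post would lead one to expect.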

This is how it looks:

This backup job is an old one that has been running in my environment for months, if not years.

Backups are stored on a NAS via NFS. The other VMs processed in this job are backed up successfully.

Backup job logs attached.

2025-07-01T01_00_00.011Z - backup NG.json.txt

-

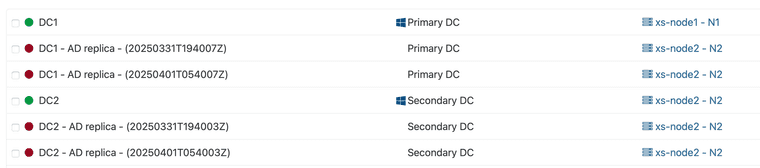

RE: DUPLICATE_MAC_SEED

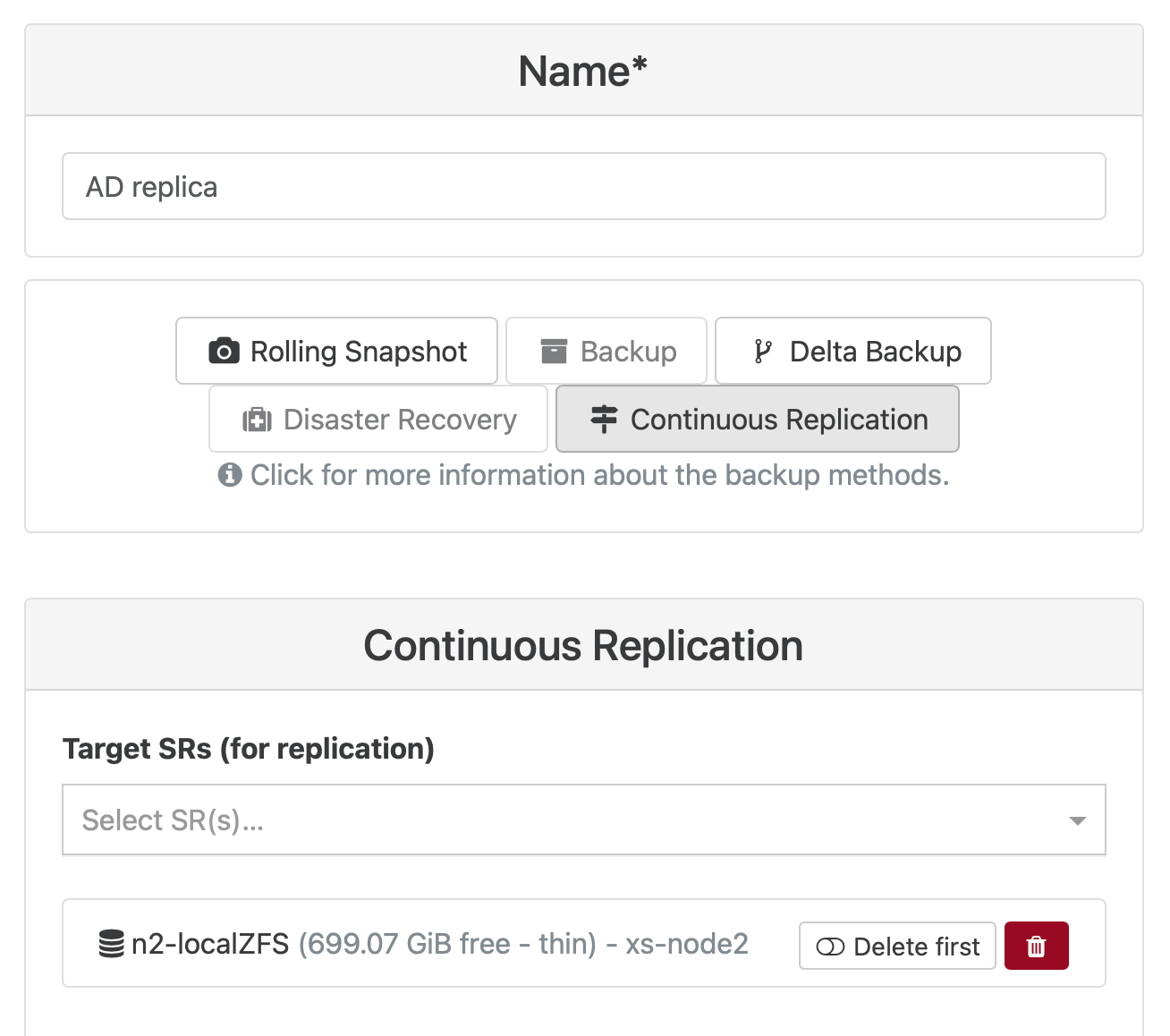

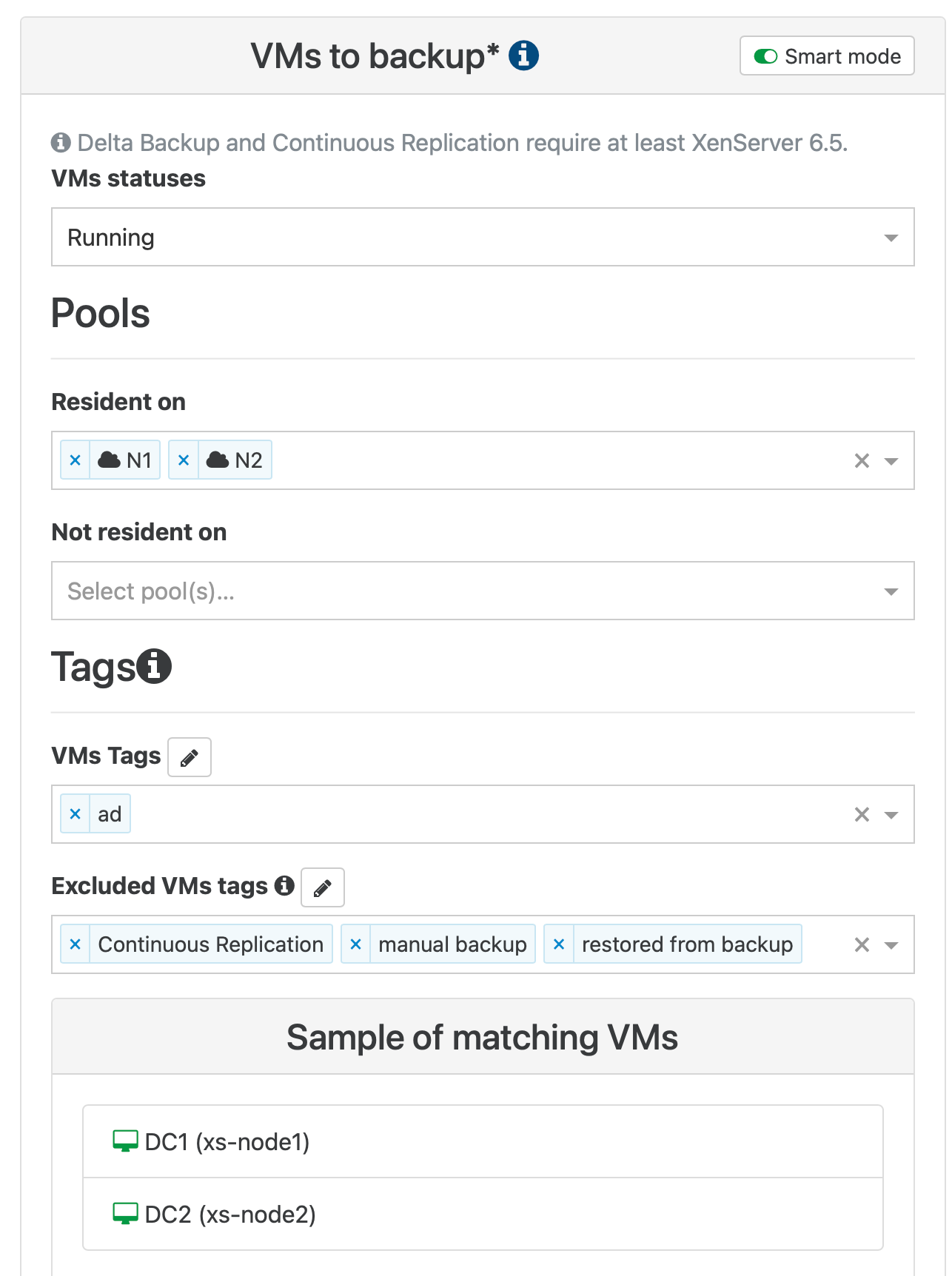

Look: I have xs-node1 and xs-node2. As you can see, DC1 runs on node1 and is replicated to a separate SR on node2 that is dedicated to replicas. DC2 runs on node2 and is also replicated to that dedicated replica SR.

Both xs-node1 and xs-node2 have only local NVMe storage for running VMs, and xs-node2 also has a ZFS pool for replicas.

When I try to migrate DC1 to the NVMe SR on xs-node2, I get this DUPLICATE_MAC_SEED error.

Only one DC1 VM is running across both xs-nodes...

-

RE: DUPLICATE_MAC_SEED

@DustinB The main reason is OS license costs... I agree that it would be easier to have a single pool.

-

DUPLICATE_MAC_SEED

I have 2 XCP-ng hosts: one is used to run VMs and the other is the target for VM replicas.

When I try to migrate a running VM to the other host, which is the target of the backup replication (so it holds the VM objects created during replication), I get a DUPLICATE_MAC_SEED error.

I need to move all running VMs to this second host to perform maintenance on the main one.

It was possible before.

Xen Orchestra, commit 6d993

Master, commit ec782

-

RE: CBT: the thread to centralize your feedback

@icompit

Xen Orchestra, commit a5967

Master, commit a5967