XCP-ng 8.3 updates announcements and testing

-

New security and maintenance update candidate

A new XSA (Xen Security Advisory) was published on the 8th of July, related to hardware vulnerabilities in several AMD CPUs. Updated microcode mitigates it, and Xen is updated to adapt to the changes in the CPU features. We are also publishing other non-urgent updates which we had in the pipeline for the next update release.

Security updates

amd-microcode:

- Update to 20250626-1 as redistributed by XenServer.

xen-*:

- Fix XSA-471: New speculative side-channel attacks have been discovered, affecting systems running all versions of Xen and AMD Fam19h CPUs (Zen3/4 microarchitectures). An attacker could infer data from other contexts. There are no current mitigations, but AMD is producing microcode to address the issue, and patches for Xen are available. These attacks, named Transitive Scheduler Attacks (TSA) by AMD, include CVE-2024-36350 (TSA-SQ) and CVE-2024-36357 (TSA-L1).

Maintenance updates

http-nbd-transfer:

- Fix missing import exceptions in log files.

- Fix a potential HA startup failure with LINSTOR.

xo-lite: update to 0.12.1

- [Charts] Fix tooltip overflow when too close to the edge

- [Host/VM/Dashboard] Fix timestamp on some charts

Test on XCP-ng 8.3

yum clean metadata --enablerepo=xcp-ng-testing
yum update --enablerepo=xcp-ng-testing
reboot

The usual update rules apply: pool coordinator first, etc.

Versions:

- amd-microcode: 20250626-1.1.xcpng8.3

- http-nbd-transfer: 1.7.0-1.xcpng8.3

- xen: 4.17.5-15.1.xcpng8.3

- xo-lite: 0.12.1-1.xcpng8.3
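After updating, it can be handy to confirm that a host really picked up the versions listed above. The following is only a sketch: `report_version` and `check_pkg` are hypothetical helper names, not part of XCP-ng, and the binary package `xen-hypervisor` is assumed here as a representative of the `xen-*` packages.

```shell
#!/bin/sh
# Hypothetical helper: compare an installed version-release string with the
# expected one. Arguments: package name, expected value, installed value.
report_version() {
    if [ "$3" = "$2" ]; then
        echo "OK $1 $3"
    else
        echo "MISMATCH $1: installed '$3', expected '$2'"
        return 1
    fi
}

# Hypothetical wrapper: ask rpm for the installed version-release of a
# package, then report. A package that is absent is reported as a mismatch.
check_pkg() {
    installed=$(rpm -q --queryformat '%{VERSION}-%{RELEASE}' "$1" 2>/dev/null) \
        || installed="not installed"
    report_version "$1" "$2" "$installed"
}
```

On a host, something like `check_pkg amd-microcode 20250626-1.1.xcpng8.3` or `check_pkg xen-hypervisor 4.17.5-15.1.xcpng8.3` (package name assumed, as noted) would print one line per package and return non-zero on a mismatch.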

What to test

Normal use and anything else you want to test.

Test window before official release of the updates

~2 days.

-

And fixes for XCP-ng 8.2 are coming but they require more work to backport the patches.

-

Tested on my usual Intel test servers (the ones I've posted about in this thread before) as well as a 2-host AMD EPYC pool (HP DL325 Gen10). Rolling pool reboot worked as expected, hosts rebooted without issue, and backups and replication appear to function as usual.

-

@gduperrey Installed and running on Intel systems, and on a Zen3 system that sees the microcode update.

-

Installed fine. Didn't get a chance to deep dive and/or test features.

-

Updates published: https://xcp-ng.org/blog/2025/07/15/july-2025-security-update-2-for-xcp-ng-8-3-lts/

Thank you for the tests!

-

@gduperrey I'm getting the following when trying to update:

yum clean metadata && yum check-update
Loaded plugins: fastestmirror
Cleaning repos: xcp-ng-base xcp-ng-updates
4 metadata files removed
3 sqlite files removed
0 metadata files removed
Loaded plugins: fastestmirror
Loading mirror speeds from cached hostfile
Excluding mirror: updates.xcp-ng.org
 * xcp-ng-base: mirrors.xcp-ng.org
Excluding mirror: updates.xcp-ng.org
 * xcp-ng-updates: mirrors.xcp-ng.org
xcp-ng-base/signature | 473 B 00:00:00
xcp-ng-base/signature | 3.0 kB 00:00:00 !!!
xcp-ng-updates/signature | 473 B 00:00:00
xcp-ng-updates/signature | 3.0 kB 00:00:00 !!!
xcp-ng-updates/primary_db FAILED
http://mirrors.xcp-ng.org/8/8.3/updates/x86_64/repodata/0a94f9d57be162987a7e5162b4471809af2ee0897e97cc24187077fb58804380-primary.sqlite.bz2: [Errno 14] HTTPS Error 404 - Not Found-:--:-- ETA
Trying other mirror.
To address this issue please refer to the below wiki article
https://wiki.centos.org/yum-errors
If above article doesn't help to resolve this issue please use https://bugs.centos.org/.
(1/2): xcp-ng-base/primary_db | 3.9 MB 00:00:02
xcp-ng-updates/primary_db FAILED
http://mirrors.xcp-ng.org/8/8.3/updates/x86_64/repodata/0a94f9d57be162987a7e5162b4471809af2ee0897e97cc24187077fb58804380-primary.sqlite.bz2: [Errno 14] HTTPS Error 404 - Not Found-:--:-- ETA
Trying other mirror.
http://mirrors.xcp-ng.org/8/8.3/updates/x86_64/repodata/0a94f9d57be162987a7e5162b4471809af2ee0897e97cc24187077fb58804380-primary.sqlite.bz2: [Errno 14] HTTPS Error 404 - Not Found
Trying other mirror.
 One of the configured repositories failed (XCP-ng Updates Repository),
 and yum doesn't have enough cached data to continue. At this point the only
 safe thing yum can do is fail. There are a few ways to work "fix" this:
     1. Contact the upstream for the repository and get them to fix the problem.
     2. Reconfigure the baseurl/etc. for the repository, to point to a working
        upstream. This is most often useful if you are using a newer
        distribution release than is supported by the repository (and the
        packages for the previous distribution release still work).
     3. Run the command with the repository temporarily disabled
            yum --disablerepo=xcp-ng-updates ...
     4. Disable the repository permanently, so yum won't use it by default. Yum
        will then just ignore the repository until you permanently enable it
        again or use --enablerepo for temporary usage:
            yum-config-manager --disable xcp-ng-updates
        or
            subscription-manager repos --disable=xcp-ng-updates
     5. Configure the failing repository to be skipped, if it is unavailable.
        Note that yum will try to contact the repo. when it runs most commands,
        so will have to try and fail each time (and thus. yum will be be much
        slower). If it is a very temporary problem though, this is often a nice
        compromise:
            yum-config-manager --save --setopt=xcp-ng-updates.skip_if_unavailable=true
failure: repodata/0a94f9d57be162987a7e5162b4471809af2ee0897e97cc24187077fb58804380-primary.sqlite.bz2 from xcp-ng-updates: [Errno 256] No more mirrors to try.
http://mirrors.xcp-ng.org/8/8.3/updates/x86_64/repodata/0a94f9d57be162987a7e5162b4471809af2ee0897e97cc24187077fb58804380-primary.sqlite.bz2: [Errno 14] HTTPS Error 404 - Not Found

Perhaps mirrors are still syncing?

-

@flakpyro Yes, it always takes a little time for the mirrors to synchronize.

I'm getting correct feedback from the main repository (https://updates.xcp-ng.org/) with the correct updates available.

-

@gduperrey I wonder why now I have to run those commands on my hosts each time for the updates to show:

yum clean all
rm -rf /var/cache/yum

Not fun

-

Uggg... Just after I upgraded 8.2.1 to 8.3.x and did updates. Guess I have more waiting for me for later today or tomorrow.

-

@flakpyro

I'm good now!

-

Updated around 43 hosts without issue.

-

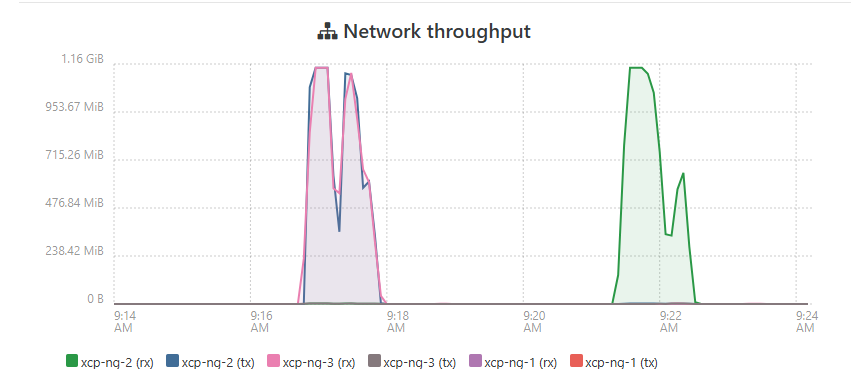

I'm doing the latest production level updates announced yesterday... My pool has never migrated VMs as fast as it is now.

That was while migrating several at a time; moving VMs back to "balanced" at the end moves them one at a time at about half that speed. This is more than double what I was able to do with the same hardware on 8.2.1. It looks like it is actually capping at the maximum 10 Gbps that the x710 cards and my switch can handle. That's never happened before, not even on my lab, which has been on v8.3 for over a year.

(note to self, might be time to upgrade those x520 cards in the lab to x710)

Next month's Windows updates might be interesting, hope this increase holds for that process too.

-

said in XCP-ng 8.3 updates announcements and testing:

@gduperrey I wonder why now I have to run those commands on my hosts each time for the updates to show:

yum clean all
rm -rf /var/cache/yum

Not fun

@gduperrey Can you explain why this is happening and how to get updates showing automatically again?

-

@manilx said in XCP-ng 8.3 updates announcements and testing:

yum clean all

I can't explain it. I can't say what's going on on your system, especially with so little information.

Personally, I run the following commands all the time:

yum clean metadata ; yum update

I very rarely need to use yum clean all, and I don't remember having to do an additional rm. And yet, I run the above commands a lot during testing campaigns.

-

@gduperrey said in XCP-ng 8.3 updates announcements and testing:

yum clean metadata ; yum update

OK, I'll try this next time.

P.S. I've updated the 2 hosts via XO all the time since the 8.3 beta, and XO always showed the available patches. It was with the previous 8.3 updates that they stopped showing and I had to run those commands.

-

@manilx We recently upgraded our Koji build system. This may have caused disruptions in this recent update release yesterday, where an XML file was generated multiple times. This has now been fixed and should not happen again. This may explain the issue encountered this time, particularly with the notification of updates via XO.

Note that yum metadata normally expires after a few hours, so things should return to normal on their own.
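For anyone curious about the expiry mentioned above: yum's `metadata_expire` option controls how long cached metadata is considered fresh, and `yum clean expire-cache` marks the cache as expired without deleting it. The sketch below only reads configuration; `show_metadata_expire` is a hypothetical helper name, and the usual file locations (`/etc/yum.conf`, `/etc/yum.repos.d/*.repo`) are assumed to apply on XCP-ng hosts.

```shell
#!/bin/sh
# Hypothetical helper: list metadata_expire settings found in the given yum
# configuration files, or say that none of them sets the option.
show_metadata_expire() {
    grep -H '^[[:space:]]*metadata_expire' "$@" 2>/dev/null \
        || echo "metadata_expire not set in the given files (yum's built-in default applies)"
}
```

On a host this could be called as `show_metadata_expire /etc/yum.conf /etc/yum.repos.d/*.repo`. If updates still do not show up, `yum clean expire-cache` followed by `yum check-update` is a lighter alternative to `yum clean all`.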

-

New update candidates for you to test!

A new batch of non-urgent updates is ready for user tests before a future collective release. Below are the details about these.

Maintenance updates

blktap:

- Fix a bad integer conversion that interrupts valid coalesce calls on large VDIs. This fixes an error that could occur on VHD coalesces, generating logs on the SMAPI side.

kernel:

- Fix compatibility issues with Minisforum MS-A2 machines. For more information, you can consult this forum post.

- Backport fix for CVE-2020-28374, a vulnerability that is unlikely to be exploitable in XCP-ng, fixed as defence-in-depth.

xapi & xen:

- Add a new /etc/xenopsd.conf.d directory, in which users can add a .conf file with configuration values for xenopsd.

- Patch Xen to support a new option that enables remapping grant tables as Writeback. This fixes a performance issue for Linux guests on AMD processors. Guests need their kernel to support the feature which enables this fix. Linux distributions that have recent enough kernels, or that apply fixes from the mainline LTS kernels, are OK; older ones are not. Some currently supported LTS distros don't have the patch yet: RHEL 8 and 9 and their derivatives are not ready. The change has no effect on older distros such as Ubuntu 20.04. See the partial list below. Windows and *BSD guests were not affected by the performance problem this change solves.

- While we are confident with this change, we decided to make it opt-in at first, so that users are conscious of the change and also know how to revert it if any side effects remain in edge cases. To enable the fix pool-wide, create a file named /etc/xenopsd.conf.d/custom.conf with the following line:

xen-platform-pci-bar-uc=false

- Then restart the toolstack on the host:

xe-toolstack-restart

- Then add the configuration and restart the toolstack on every other host of the pool.

- Then stop and start VMs so that the setting is applied to them at boot.

- In a future update, this will become the default.

- This is not the end of the road towards better performance on AMD EPYC servers, but it's significant progress!
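The opt-in steps above can be sketched as a small script. This is an illustration under stated assumptions, not an official procedure: `write_xenopsd_optin` is a hypothetical helper name, and it overwrites any existing custom.conf in the target directory, so merge by hand if you already keep settings there.

```shell
#!/bin/sh
# Hypothetical helper: write the opt-in setting into a xenopsd drop-in
# directory. The directory defaults to the one named in the announcement;
# pass another path as the first argument to try it without touching a host.
# Note: an existing custom.conf in that directory is overwritten.
write_xenopsd_optin() {
    conf_dir="${1:-/etc/xenopsd.conf.d}"
    mkdir -p "$conf_dir" || return 1
    printf '%s\n' 'xen-platform-pci-bar-uc=false' > "$conf_dir/custom.conf"
}
```

On each host of the pool, running `write_xenopsd_optin && xe-toolstack-restart` would cover the first steps; VMs then need a full stop and start for the setting to apply.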

xo-lite:

- [Host/VM/Dashboard] Fix display error due to inversion of upload and download

- [Sidebar] Updated sidebar to auto close when the screen is small

- [SearchBar] Updated query search bar to work in responsive (PR #8761)

- [Pool,Host/Dashboard] CPU provisioning considers all VMs instead of just running VMs

- For more details, we invite you to read the blog post about the latest Xen-Orchestra update.

OS support for the AMD performance workaround:

- Debian: 11 (5.10: TODO), 12 (6.1: OK)

- Ubuntu: 20.04 LTS (5.4: EOL), 22.04 LTS (5.15: SOON, HWE 6.8: OK), 24.04 LTS (6.8 & HWE 6.14: OK)

- openSUSE Leap: 15.5 (5.14: EOL), 15.6 (6.4: OK)

- SUSE Enterprise (LTSS): SLE15 SP3 - LTSS (5.3: Not upstream), SLE15 SP4/5 - LTSS (5.14: Not upstream), SLE15 SP6+ (OK)

- RHEL (+derivatives): 8 (4.19: EOL-ish?), 9 (5.14: Not upstream), 10 (6.12: OK)

- Fedora: All supported: OK (37+)

- Alpine Linux: All supported: OK (v3.18+)

- EOL = distro is EOL

- Not upstream = not covered by the Linux stable project (i.e. probably needs discussions with the distro)

- SOON: Distro needs to update its kernel

Test on XCP-ng 8.3

yum clean metadata --enablerepo=xcp-ng-testing
yum update --enablerepo=xcp-ng-testing
reboot

The usual update rules apply: pool coordinator first, etc.

Versions:

- blktap: 3.55.5-2.3.xcpng8.3

- kernel: 4.19.19-8.0.38.4.xcpng8.3

- xapi: 25.6.0-1.11.xcpng8.3

- xen: 4.17.5-15.2.xcpng8.3

- xo-lite: 0.13.1-1.xcpng8.3

What to test

Normal use and anything else you want to test.

Test window before official release of the updates

None defined, but early feedback is always better than late feedback, which is in turn better than no feedback.

-

Updated both of my test hosts.

Machine 1:

Intel Xeon E-2336

SuperMicro board.

Machine 2:

Minisforum MS-01

i9-13900H (e-cores disabled)

32 GB Ram

Using Intel X710 onboard NIC

Everything rebooted and came up fine. None of my test systems are AMD based at the moment!