I posted this because I personally found this configuration quite involved, and the permissions given earlier in the thread were insufficient to make it work when using AWS KMS for bucket encryption together with the XO-provided encryption secret.

Posts

-

RE: Backup to S3 aborted what permissions are required?

@jensolsson-se

I have backups working to S3 using IAM permissions and KMS on S3.

Right now I'm backing up a 1TB VM to S3 in an hour, which is great.

First thing: don't create the directory where the backups will be stored on S3 in advance. It will get created automatically; if it already exists, the backup fails, complaining that the directory should be empty.

Then you need the permissions:

I created them in JSON, assigned the policy to a group, and assigned that group to an IAM user to be used as a service account. Note that the key XO asks for is the access key belonging to that IAM user, not to be confused with the symmetric encryption key, which needs to be assigned to your bucket.
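The policy has three kinds of placeholder: the bucket name, the region, and the account ID plus key UUID. As a minimal sketch of how those ARNs are assembled (the function names and sample values are hypothetical, not anything XO or AWS provides):

```python
import re


def bucket_arns(bucket: str) -> list[str]:
    """Return the bucket ARN and the pattern covering every object in it."""
    return [f"arn:aws:s3:::{bucket}", f"arn:aws:s3:::{bucket}/*"]


def kms_key_arn(region: str, account_id: str, key_id: str) -> str:
    """Return the ARN of the KMS key that encrypts the bucket."""
    # AWS account IDs are 12 digits; catch a pasted alias or name early.
    if not re.fullmatch(r"\d{12}", account_id):
        raise ValueError("account_id must be the 12-digit numeric account ID")
    return f"arn:aws:kms:{region}:{account_id}:key/{key_id}"


if __name__ == "__main__":
    # Sample values are made up for illustration.
    print(bucket_arns("example-xo-backups"))
    print(kms_key_arn("eu-west-1", "123456789012",
                      "1234abcd-12ab-34cd-56ef-1234567890ab"))
```

The bucket ARN and the `/*` object ARN are both needed because listing applies to the bucket while get/put/delete apply to objects.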

    {
      "Version": "2012-10-17",
      "Statement": [
        {
          "Sid": "AllowBucketListing",
          "Effect": "Allow",
          "Action": [
            "s3:ListBucket",
            "s3:GetBucketLocation",
            "s3:ListBucketVersions"
          ],
          "Resource": [
            "arn:aws:s3:::your-bucket-name-here",
            "arn:aws:s3:::your-bucket-name-here/*"
          ]
        },
        {
          "Sid": "AllowObjectOperations",
          "Effect": "Allow",
          "Action": [
            "s3:GetObject",
            "s3:PutObject",
            "s3:DeleteObject",
            "s3:DeleteObjectVersion",
            "s3:ListBucketMultipartUploads",
            "s3:ListMultipartUploadParts",
            "s3:AbortMultipartUpload",
            "s3:GetObjectVersion",
            "kms:GenerateDataKey"
          ],
          "Resource": [
            "arn:aws:s3:::your-bucket-name-here/*",
            "arn:aws:s3:::your-bucket-name-here"
          ]
        },
        {
          "Sid": "AllowKeyAccess",
          "Effect": "Allow",
          "Action": [
            "kms:GenerateDataKey",
            "kms:Decrypt"
          ],
          "Resource": "arn:aws:kms:your-region-here:your-numeric-account-id-here:key/the-uuid-of-the-encryption-key-for-your-bucket-here"
        }
      ]
    }

-

RE: Cannot Import VMDK Through Import > Disk (migrating from ESXi, all methods not working)

@planedrop I have exactly the same issue. I've exported the disk as two files (the flat one is 800GB) and the wheel is simply spinning 24 hours later. Did you manage to resolve this?

The direct import from ESXi didn't work for me either. My biggest criticism would be that there is no feedback to indicate whether it is working or not: an orange task appears, and clicking to see the logs hangs indefinitely. I'm not sure if there is a way to upload the pair of files that make up the VMDK to the machine over SCP and then kick off the import from there.

-

RE: More than 64 vCPU on Debian11 VM and AMD EPYC

Now attempting to push this further - when I go beyond 128 CPUs on the VM configuration I am getting the following:

    vm.start
    {
      "id": "d9b39e2d-a95b-b8bf-dc5f-01d176c49c70",
      "bypassMacAddressesCheck": false,
      "force": false
    }
    {
      "code": "INTERNAL_ERROR",
      "params": [
        "xenopsd internal error: Xenctrl.Error(\"22: Invalid argument\")"
      ],
      "call": {
        "method": "VM.start",
        "params": [
          "OpaqueRef:82abc808-84b8-4bc5-9db9-2e6ef20a5e4a",
          false,
          false
        ]
      },
      "message": "INTERNAL_ERROR(xenopsd internal error: Xenctrl.Error(\"22: Invalid argument\"))",
      "name": "XapiError",
      "stack": "XapiError: INTERNAL_ERROR(xenopsd internal error: Xenctrl.Error(\"22: Invalid argument\")) at Function.wrap (file:///opt/xo/xo-builds/xen-orchestra-202401131411/packages/xen-api/_XapiError.mjs:16:12) at file:///opt/xo/xo-builds/xen-orchestra-202401131411/packages/xen-api/transports/json-rpc.mjs:35:21"
    }

-

RE: More than 64 vCPU on Debian11 VM and AMD EPYC

@olivierlambert Thanks to everyone's great advice. I've now managed a further increase of more than 20-fold by using PCI passthrough on the 3 x NVMe drives. The machine is only PCIe 3.x, but I'm still getting 10.5GB/s read on the test with fio and just over 1GB/s write.
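For context on that read figure, here is a rough back-of-envelope sketch of the PCIe 3.0 ceiling. It assumes each NVMe drive negotiates an x4 link and that reads are spread evenly across all three mirror members; neither is confirmed above. PCIe 3.0 runs at 8 GT/s per lane with 128b/130b encoding.

```python
# Back-of-envelope PCIe 3.0 bandwidth ceiling for a 3-way NVMe mirror.
GT_PER_S = 8.0          # PCIe 3.0 transfer rate per lane
ENCODING = 128 / 130    # 128b/130b line encoding overhead
LANES_PER_DRIVE = 4     # assumption: each drive has an x4 link
DRIVES = 3              # three mirrored NVMe drives


def lane_gb_per_s() -> float:
    """Usable bandwidth of one lane in GB/s (10^9 bytes)."""
    return GT_PER_S * ENCODING / 8  # bits to bytes


def drive_gb_per_s() -> float:
    """Link ceiling for a single drive."""
    return lane_gb_per_s() * LANES_PER_DRIVE


def mirror_read_ceiling() -> float:
    """Best case when reads are balanced across every mirror member."""
    return drive_gb_per_s() * DRIVES


if __name__ == "__main__":
    measured_read = 10.5  # GB/s, the fio read figure quoted above
    print(f"per drive: {drive_gb_per_s():.2f} GB/s")
    print(f"3-way mirror read ceiling: {mirror_read_ceiling():.2f} GB/s")
    print(f"measured / ceiling: {measured_read / mirror_read_ceiling():.0%}")
```

Under those assumptions the link ceiling is roughly 11.8 GB/s, so 10.5GB/s would be close to what the bus can deliver.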

My bottleneck for compiling is now once again the CPUs.

I seem to be unable to exceed 128 CPUs. I was hoping to assign more, as the host has 176, but it is struggling. At the moment my build is pinning those 128 at 100% CPU for 30 minutes, so more CPUs could potentially offer a fairly significant improvement.

Overall, I'm quite pleased to be squeezing this much performance out of some old HPE Gen 9 hardware. I may look at adding another disk to the mirror, but at some point the write penalty may outweigh the excellent read performance. I've chosen the slots so that each NVMe's PCI lanes are connected to a different host CPU.

I may try another experiment with smaller PCIe devices and bifurcation to see if I can test the upper limits of the throughput. 9 slots to play with!

-

RE: More than 64 vCPU on Debian11 VM and AMD EPYC

@olivierlambert will repeat on everything!

-

RE: More than 64 vCPU on Debian11 VM and AMD EPYC

@olivierlambert Interestingly, so far I've seen about a 40% increase in write performance and IOPS from adjusting the scheduler in dom0 by adding elevator=noop as a kernel parameter and a further 10% from repeating the same on the VM.
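To confirm which scheduler is actually active after a reboot, Linux exposes it per device in `/sys/block/<device>/queue/scheduler`, with the active one shown in square brackets. A minimal sketch for reading that (the helper names are my own, and scheduler names vary by kernel, for example `noop` or `none`):

```python
from pathlib import Path


def active_scheduler(sysfs_text: str) -> str:
    """Return the scheduler marked with [brackets] in a sysfs scheduler line."""
    for token in sysfs_text.split():
        if token.startswith("[") and token.endswith("]"):
            return token[1:-1]
    raise ValueError(f"no active scheduler found in {sysfs_text!r}")


def report(sys_block: Path = Path("/sys/block")) -> dict[str, str]:
    """Map each block device to its active scheduler."""
    result = {}
    for sched_file in sorted(sys_block.glob("*/queue/scheduler")):
        try:
            # Path looks like /sys/block/<device>/queue/scheduler
            device = sched_file.parts[-3]
            result[device] = active_scheduler(sched_file.read_text())
        except (OSError, ValueError):
            continue  # unreadable, or no bracketed entry for this device
    return result


if __name__ == "__main__":
    for device, scheduler in report().items():
        print(f"{device}: {scheduler}")
```

Running it in both dom0 and the VM shows whether the kernel parameter took effect in each place.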

I'm going to experiment next with migrating the disks so that the mirror is achieved in the VM with three separate pifs instead of in dom0. Then may try other more radical approaches like passthrough.

-

RE: More than 64 vCPU on Debian11 VM and AMD EPYC

@olivierlambert If I follow a similar approach with multiple VDIs and go RAID 1 with a 3-way mirror (integrity is critical), will I still see a similar read performance increase? I'm not so worried about the write penalty.

-

RE: More than 64 vCPU on Debian11 VM and AMD EPYC

@TodorPetkov that was very helpful. I've added acpi=off to grub and I am now able to get 128 "CPUs" running, which is double.

When I go beyond this I get the following error when attempting to start the VM:

INTERNAL_ERROR(xenopsd internal error: Xenctrl.Error("22: Invalid argument"))

    Architecture:        x86_64
    CPU op-mode(s):      32-bit, 64-bit
    Byte Order:          Little Endian
    CPU(s):              128
    On-line CPU(s) list: 0-127
    Thread(s) per core:  1
    Core(s) per socket:  32
    Socket(s):           4
    NUMA node(s):        1
    Vendor ID:           GenuineIntel
    CPU family:          6
    Model:               79
    Model name:          Intel(R) Xeon(R) CPU E7-8880 v4 @ 2.20GHz
    Stepping:            1
    CPU MHz:             2194.589
    BogoMIPS:            4389.42
    Hypervisor vendor:   Xen
    Virtualization type: full
    L1d cache:           32K
    L1i cache:           32K
    L2 cache:            256K
    L3 cache:            56320K
    NUMA node0 CPU(s):   0-127

Going to move some stuff around and try passthrough for the M.2 drives next as IOPs is now the biggest performance barrier for this particular workload.

-

RE: More than 64 vCPU on Debian11 VM and AMD EPYC

@TodorPetkov Top tip! Thank you - going to try this out

-

RE: More than 64 vCPU on Debian11 VM and AMD EPYC

@olivierlambert @POleszkiewicz Thanks to you both for all of these ideas - I will have a go at changing the kernel and moving the NVMe to pass through in the first instance. Will report back on results.

-

RE: More than 64 vCPU on Debian11 VM and AMD EPYC

I'm getting stuck with this too - on Debian 11 VM - DL 580 with 4 x Xeon E7-8880 v4 + 3 Samsung 990 Pro 4TB with RAID 1.

Effectively the XCP-ng host has 176 "cores", i.e. counting hyperthreading, but I'm only able to use 64 of them. I was also only able to configure the VM with 120 cores, as 30 cores on each of 4 sockets (the physical architecture has 4 sockets), but I think only 64 actually work.

So I'm compiling AOSP. For a clean build, the VM sticks at max CPU for 30 minutes, and I would dearly like to reduce that time, as it could be a compile after a tiny change, so progress is painfully slow. The other thing is the linking phase of this build: I'm only seeing 7000 IOPs on the last-10-minutes display. I realize this may under-read as the traffic could be quite "bursty", but with 3 mirrored Samsung 990 Pro drives I would expect more. This makes this part heavily disk bound; the overall process takes 70 minutes.
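To make the core counts concrete, here is a small sketch of the arithmetic. The host figure of 22 cores per socket is inferred from 176 logical CPUs across 4 sockets with 2 threads per core; the class name is just for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Topology:
    sockets: int
    cores_per_socket: int
    threads_per_core: int = 1

    @property
    def logical_cpus(self) -> int:
        """Number of CPUs the OS sees for this layout."""
        return self.sockets * self.cores_per_socket * self.threads_per_core


# Host: inferred as 176 / 4 sockets / 2 threads = 22 cores per socket.
host = Topology(sockets=4, cores_per_socket=22, threads_per_core=2)
# VM as configured: 30 cores on each of 4 virtual sockets.
vm = Topology(sockets=4, cores_per_socket=30)

if __name__ == "__main__":
    print(f"host logical CPUs: {host.logical_cpus}")
    print(f"VM vCPUs configured: {vm.logical_cpus}")
    print(f"host CPUs left unassigned: {host.logical_cpus - vm.logical_cpus}")
```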

-

RE: Constant refreshing of interface

Hi,

On Master, as of yesterday, I see this only when I am logged in using an IP address in the URL instead of the hostname. Either way it is SSL with a trusted certificate.

There are no console errors

Browser is Microsoft Edge on Windows

No difference in behaviour between incognito mode and normal.

Yes I see all the VMs

There is an improvement: now, if I am creating a new VM, only the selected network reverts to the default when it refreshes. Other settings, like the number of cores and RAM, stay as I set them, whereas previously these were also wiped back to default every 20 seconds or so.

Thanks

Alex

-

RE: Constant refreshing of interface

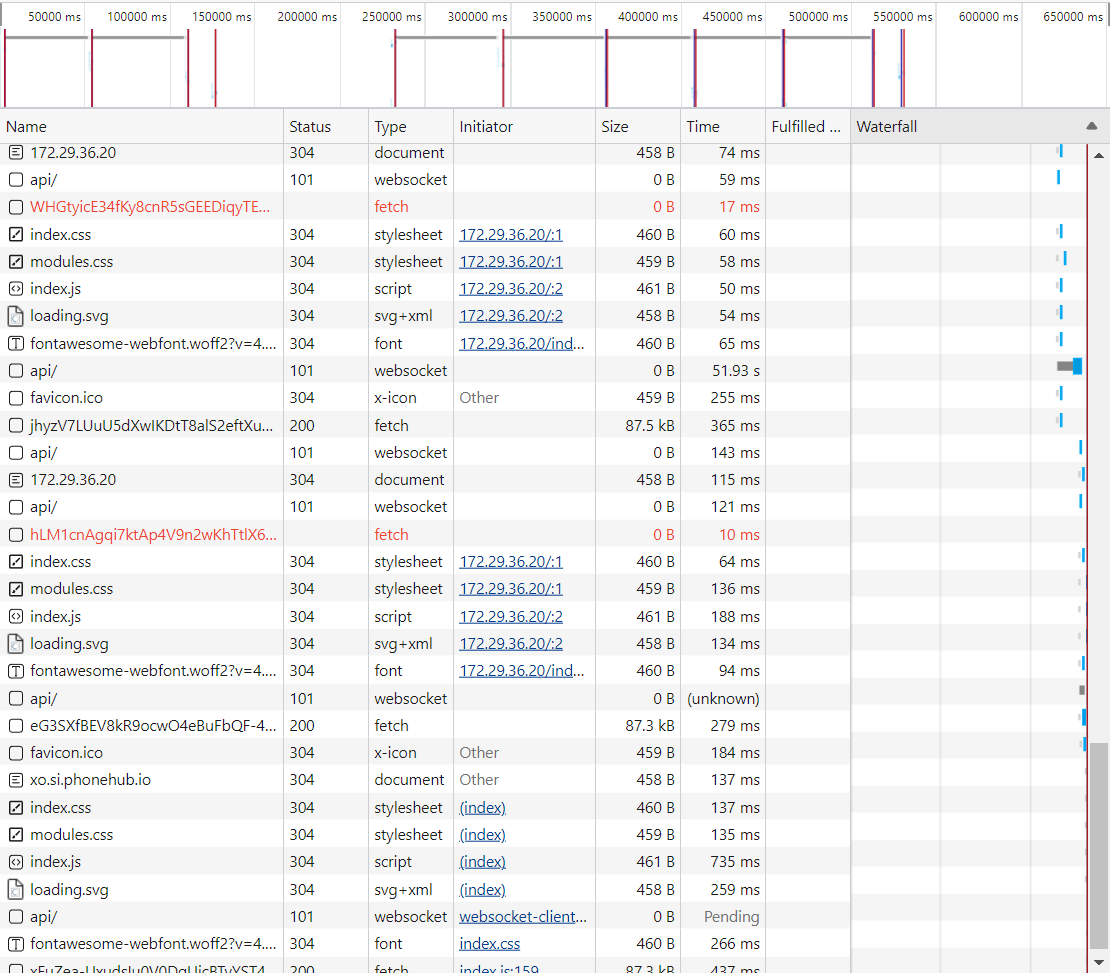

@pdonias It is something to do with this fetch; perhaps it attempts to use http instead of https. You can see at the bottom of the timeline that as soon as it went to using the name of the site it stopped refreshing.

-

RE: Constant refreshing of interface

@pdonias I think I have found something: it only happens if you access XO using an IP address. I have the server's IP listed as a subject alternative name on the cert, so it should be okay, but when I switch to using the full domain name, it stops refreshing.
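To rule out the certificate itself, the subject alternative names can be checked directly. This is a minimal sketch using Python's standard `ssl` module; the helper names, host and IP values are placeholders, and it only confirms what the cert contains, not why the UI refreshes.

```python
import ipaddress
import socket
import ssl


def fetch_cert(host: str, port: int = 443) -> dict:
    """Fetch the server certificate as the dict returned by getpeercert()."""
    context = ssl.create_default_context()
    with socket.create_connection((host, port), timeout=10) as sock:
        with context.wrap_socket(sock, server_hostname=host) as tls:
            return tls.getpeercert()


def san_entries(cert: dict) -> dict[str, list[str]]:
    """Group subjectAltName values by type, e.g. 'DNS' and 'IP Address'."""
    grouped: dict[str, list[str]] = {}
    for kind, value in cert.get("subjectAltName", ()):
        grouped.setdefault(kind, []).append(value.strip())
    return grouped


def covers_ip(cert: dict, ip: str) -> bool:
    """True if the certificate lists this IP as a subject alternative name."""
    wanted = ipaddress.ip_address(ip)
    listed = san_entries(cert).get("IP Address", [])
    return any(ipaddress.ip_address(value) == wanted for value in listed)


if __name__ == "__main__":
    # Placeholder certificate data in the shape getpeercert() returns.
    sample = {"subjectAltName": (("DNS", "xo.example.com"),
                                 ("IP Address", "192.0.2.10"))}
    print(san_entries(sample))
    print(covers_ip(sample, "192.0.2.10"))
```

Against a live server, `covers_ip(fetch_cert("xo.example.com"), "192.0.2.10")` would do the same check with the real certificate.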

-

RE: Constant refreshing of interface

@olivierlambert Nice, no small task! Your company seems to take a very similar approach to ours in terms of just building difficult stuff.

-

RE: Constant refreshing of interface

@pdonias In terms of the good behaviour - it is good that content is up to date all the time on the view but my interface seems to be using a brutal approach to this, glad to hear that this is not considered normal.

-

RE: Constant refreshing of interface

@pdonias Hi - yes exactly that - it is like I clicked refresh, so if you are creating a new VM the form gets wiped out for example, just before you complete it.

-

RE: Constant refreshing of interface

@olivierlambert Thanks, when I was pausing and unpausing 15 or so VMs to allow me to meddle with a NAS it took a couple of clicks on several of the machines before the pause took effect. Also incidentally when unpausing this is distinct from starting so I was unable to easily select all to continue. But I must say that this pause feature is excellent.

I'm not sure if it's related, but getting the storage to migrate back from NAS to local also seems to take a few tries before it takes effect. Not sure if this is also caused by the same interface issue.

On the reloading - this is good behaviour in most cases but could do with a little more targeting, so perhaps not updating bits of the DOM which perform actions like forms unless the underlying resource has changed to a state which would render the action invalid.