I'm running XO af5d8 and have been following the delta backup issues over the last week or so. This is a homelab, so I cleared the decks and started with a fresh backup last night: new remote, and all old backups and snapshots cleared.

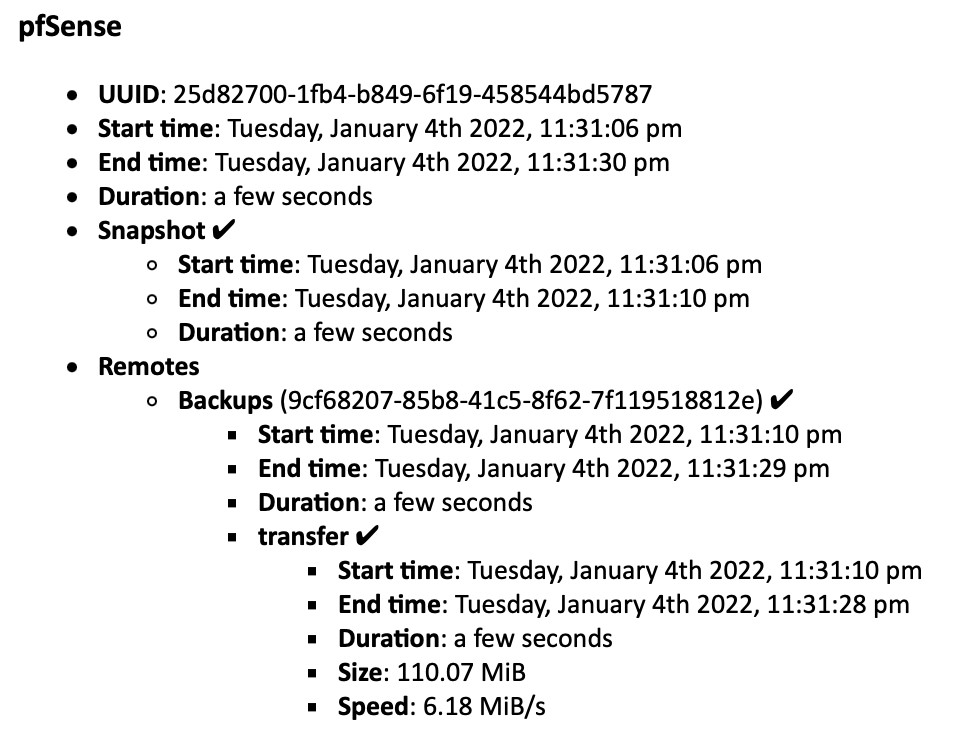

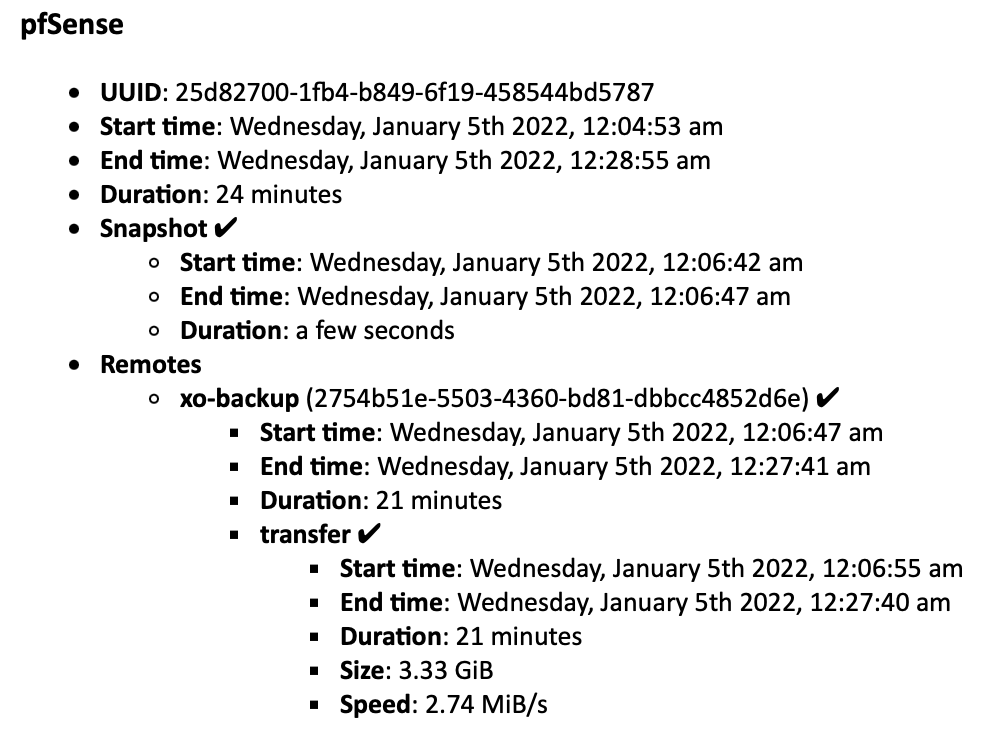

I'm trying to understand transfer sizes. I've configured a nightly delta backup job, and as you'd expect, the first backup was a full (given I'd started with a clean slate). I've got two remotes configured for this job: a local NFS share and a Backblaze S3 bucket:

- The e-mailed backup report states 258.3GiB transferred.
- The XO backup log on the backup overview screen shows 129.15GiB transferred.
- The output of `du -sh` on the NFS share shows 39GiB in the folder.
- The S3 bucket size according to Backblaze is 46.7GiB.
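For what it's worth, the e-mailed figure is exactly twice the overview figure. My guess (not something I've confirmed anywhere) is that the e-mail report counts the transfer once per remote, since this job writes to two. A quick sanity check of that arithmetic using the numbers above:

```python
# Figures reported for the first (full) run of the nightly delta job.
emailed_report_gib = 258.3   # e-mailed backup report
overview_log_gib = 129.15    # XO backup overview log
nfs_du_gib = 39.0            # `du -sh` on the NFS share
b2_bucket_gib = 46.7         # Backblaze bucket size
remotes = 2                  # local NFS share + Backblaze S3 bucket

# Unconfirmed guess: the e-mailed figure counts one transfer per remote.
per_remote_gib = emailed_report_gib / remotes
print(f"emailed / remotes = {per_remote_gib:.2f} GiB")

# Fraction of the reported per-remote transfer that each remote actually holds.
for name, stored in (("NFS", nfs_du_gib), ("Backblaze", b2_bucket_gib)):
    share = stored / overview_log_gib
    print(f"{name}: {stored} GiB stored = {share:.0%} of reported transfer")
```

If that guess holds, the 129.15GiB per-remote figure is still roughly three times what either remote actually holds, which is the part I can't explain.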

I'm confused!

Equally confusing is what I see when I look into the detail of one of my container VMs, cont-01:

- It has a reported transfer size of 59.18GiB in the XO backup overview screen logs.
- It has 3.8GiB of disk usage according to `df -h`.
- The associated VHD file on disk reports 189MiB from `du -h`.
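One thing I haven't ruled out: by default `du` reports the space a file actually occupies on disk rather than its apparent size, so a sparse VHD can look far smaller to `du -h` than to `ls -l`. Below is a rough sketch for comparing the two for every `.vhd` under the backup folder. It assumes a Linux client, where `st_blocks` counts 512-byte units; `size_report` and `mib` are just hypothetical helpers I made up for this, and `/mnt/backups` is a placeholder rather than my actual mount point:

```python
import os
import sys


def size_report(root: str) -> list[tuple[str, int, int]]:
    """Hypothetical helper: (path, apparent_bytes, allocated_bytes) per .vhd under root."""
    rows = []
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            if not name.endswith(".vhd"):
                continue
            path = os.path.join(dirpath, name)
            st = os.stat(path)
            # st_size is the apparent (logical) size, as shown by `ls -l`.
            # st_blocks counts 512-byte blocks actually allocated (on Linux),
            # which is roughly what plain `du` reports.
            rows.append((path, st.st_size, st.st_blocks * 512))
    return rows


def mib(n: int) -> str:
    """Hypothetical helper: format a byte count in MiB."""
    return f"{n / 2**20:,.1f} MiB"


if __name__ == "__main__":
    # Placeholder path: pass the backup folder on the NFS mount as the first argument.
    root = sys.argv[1] if len(sys.argv) > 1 else "/mnt/backups"
    for path, apparent, allocated in size_report(root):
        print(f"{path}: apparent {mib(apparent)}, allocated {mib(allocated)}")
```

Saved as, say, `sizes.py`, it would be run as `python3 sizes.py /path/to/backup/folder`. A large gap between the two columns would at least show whether sparseness accounts for some of the difference.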

I'm missing something here. How do I make sense of this, and what is causing the disparity in sizes? Why would a VM that seemingly holds at most about 4GB of data report a transfer of nearly 60GB, when the amount of data actually stored on the remotes is clearly a fraction of that?

Is this a bug, a misunderstanding by me (almost certainly) or something else?

Cheers,

Andrew