Disclaimer - I am not a professional; some parts of this guide may be incorrect and may negatively affect your XCP-ng host. Always ensure you have backups. Do not test on your production hosts - this is for homelabs only. (It is highly recommended to spin up a nested XCP-ng host if you wish to test this.)

I hope it helps someone

The goal of this post is to help XCP-ng homelab users change the default TianoCore logo to something more aesthetically pleasing. This guide leverages Docker, so you will need to ensure you have that running on your system.
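
Before starting, it can save some head-scratching to confirm the tools this guide uses on your main machine are actually installed. Here's a minimal sketch of such a check; `missing_tools` is just a helper name I made up, and the tool list is simply the commands used on the main machine later in this guide:

```shell
# Print the name of every tool from the argument list that is not on PATH.
# (missing_tools is a hypothetical helper, not part of xcp-ng-build-env.)
missing_tools() {
    for tool in "$@"; do
        if ! command -v "$tool" >/dev/null 2>&1; then
            echo "$tool"
        fi
    done
}

# Commands this guide runs on the main machine.
missing=$(missing_tools docker wget unzip scp ssh)
if [ -n "$missing" ]; then
    echo "Install these before continuing:" $missing
else
    echo "All required tools found."
fi
```

Note this only checks that the commands exist; it doesn't tell you whether the Docker daemon is running or whether your user is allowed to talk to it.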

On your machine, let's create a new working directory called 'tianocore' - the name doesn't really matter.

mkdir tianocore

cd tianocore

Now we're in our working directory, let's grab the xcp-ng-build-env repo from here: https://github.com/xcp-ng/xcp-ng-build-env (note that instead of a git clone, I'm downloading the zip archive over https with wget), after which we can extract the contents and remove the file we just downloaded:

wget https://github.com/xcp-ng/xcp-ng-build-env/archive/refs/heads/master.zip

unzip master.zip

rm master.zip

This should create a folder called "xcp-ng-build-env-master" - let's move into that directory:

cd xcp-ng-build-env-master

Run the build.sh script and make sure to specify your version. This will build a Docker image that contains all the tools we need to create an updated RPM with the new logo:

./build.sh 8.2

NOTE: if you get any errors trying to run the script, please check your docker config.

Once the above script has finished, you should be back at the command line.

We are now ready to start the container. Run the following, making sure to modify the -b flag for your build version:

./run.py -b=8.2 --rm --volume=./data:/data

This will create a folder called 'data' and pass it through to the container, where it can be accessed as /data. If this works correctly, you should now have a terminal inside the container, and it should look similar to this:

[builder@0c8bf329eeee ~]$>

If you open another terminal, and check the xcp-ng-build-env-master folder, you should see a new 'data' folder - try placing a file in it.

Now, go back to your other terminal inside the container; if you then do an "ls /data", you should be able to see the file you copied across!

Great! We're getting there...

Now, let's get the edk2 source RPM from here: https://koji.xcp-ng.org/buildinfo?buildID=2495 - make sure you get the correct version (i.e. in this guide, we're getting the version for XCP-ng 8.2). This source RPM contains the TianoCore UEFI logo which we want to change:

wget https://koji.xcp-ng.org/kojifiles/packages/edk2/20180522git4b8552d/1.4.6.xcpng8.2/src/edk2-20180522git4b8552d-1.4.6.xcpng8.2.src.rpm

We now have our source RPM. We need to extract it, make the changes, pack it back up and then recompile, so let's get to it! Use "rpm2cpio" to extract the RPM:

rpm2cpio edk2-20180522git4b8552d-1.4.6.xcpng8.2.src.rpm | cpio -idmv

You will see this create a bunch of .patch files, an edk2.spec file, and a .tar.gz file named after the edk2 version (edk2-20180522git4b8552d.tar.gz).

We now need to extract the .tar.gz file to a folder:

tar -xvf edk2-20180522git4b8552d.tar.gz

Now if we do an "ls" we can see we have extracted the .tar.gz file into a folder (again, with the same name, but missing the .tar.gz suffix).

The UEFI logo is hiding in the new folder under "MdeModulePkg/Logo" and is called "Logo.bmp".

At this stage, you need to find and download the new UEFI logo you want, and ensure it's converted into a .bmp bitmap file. Transparency doesn't seem to be supported, but you can use up to a 24-bit .bmp file for full colour! Name the file "Logo.bmp".
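
If you're not sure whether your image editor really saved a 24-bit bitmap, you can peek at the file header. The sketch below assumes the common Windows BMP layout (the file starts with the bytes "BM" and the bits-per-pixel value is a little-endian 16-bit number at byte offset 28); `bmp_bits_per_pixel` is a helper name I made up for illustration:

```shell
# Print the bits-per-pixel value from a BMP header, or fail if the
# file does not start with the "BM" signature.
# Assumes the standard Windows BITMAPINFOHEADER layout.
bmp_bits_per_pixel() {
    bmp_file=$1
    # A BMP file starts with the two ASCII bytes "BM".
    if [ "$(head -c 2 "$bmp_file")" != "BM" ]; then
        echo "not a BMP file: $bmp_file" >&2
        return 1
    fi
    # Read the two bytes at offset 28 as unsigned decimals (low byte first).
    set -- $(od -An -tu1 -j28 -N2 "$bmp_file")
    echo $(( $1 + $2 * 256 ))
}

# Only runs if a Logo.bmp exists in the current directory.
if [ -f Logo.bmp ]; then
    bmp_bits_per_pixel Logo.bmp
fi
```

If this prints 32, the file most likely has an alpha channel, so you may want to re-export it as 24-bit.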

In your other terminal, copy the new .bmp file to the tianocore/xcp-ng-build-env-master/data folder. Then, from the container, it should be accessible via /data/Logo.bmp

From within the container, copy the Logo.bmp from our /data/ folder over to the edk2-20180522git4b8552d/MdeModulePkg/Logo/ folder:

cp /data/Logo.bmp edk2-20180522git4b8552d/MdeModulePkg/Logo/Logo.bmp

Now that we have copied in our new image, we need to tar up the folder:

tar -czf edk2-20180522git4b8552d.tar.gz edk2-20180522git4b8552d

(NOTE: this should overwrite the 'old' .tar.gz file in the same directory with the same name)
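
If you want to be sure the new tarball really contains your logo and not the original one, you can compare the copy inside the archive against /data/Logo.bmp. This is only a sketch; `tarball_logo_matches` is a made-up helper name, and it relies on tar's -O flag (extract to stdout), which GNU tar and bsdtar both support:

```shell
# Succeed only if the logo stored inside the tarball is byte-identical
# to the given file. Arguments: tarball, new logo file, path of the
# logo inside the archive.
tarball_logo_matches() {
    tarball=$1
    new_logo=$2
    logo_path=$3
    # -O writes the extracted member to stdout; cmp -s compares silently.
    tar -xzOf "$tarball" "$logo_path" | cmp -s - "$new_logo"
}

# Only runs when both files from this guide are present.
if [ -f edk2-20180522git4b8552d.tar.gz ] && [ -f /data/Logo.bmp ]; then
    if tarball_logo_matches edk2-20180522git4b8552d.tar.gz /data/Logo.bmp \
        edk2-20180522git4b8552d/MdeModulePkg/Logo/Logo.bmp; then
        echo "tarball contains the new logo"
    else
        echo "tarball has a different logo"
    fi
fi
```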

Now, in our current directory, we need to create 2 folders, one called "SOURCES" and one called "SPECS"

mkdir SOURCES

mkdir SPECS

We now need to move all of our .patch files, as well as the modified .tar.gz file, into the SOURCES folder:

mv *.patch SOURCES/

mv *.tar.gz SOURCES/

And finally, we move our edk2.spec file into the SPECS folder:

mv *.spec SPECS/
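
Before moving on, a quick sanity check of the folder layout can catch a missed file. A rough sketch follows; `check_rpm_layout` is a hypothetical helper, and "exactly one spec file, at least one tarball" is just my reading of what the steps above should leave behind:

```shell
# Report whether a directory looks like the layout built above:
# exactly one .spec file in SPECS/ and at least one .tar.gz in SOURCES/.
check_rpm_layout() {
    topdir=$1
    spec_count=$(find "$topdir/SPECS" -maxdepth 1 -name '*.spec' 2>/dev/null | wc -l | tr -d ' ')
    tar_count=$(find "$topdir/SOURCES" -maxdepth 1 -name '*.tar.gz' 2>/dev/null | wc -l | tr -d ' ')
    if [ "$spec_count" -eq 1 ] && [ "$tar_count" -ge 1 ]; then
        echo "layout ok"
    else
        echo "layout problem: $spec_count spec file(s), $tar_count tarball(s)"
        return 1
    fi
}

# Check the current directory (the one holding SOURCES and SPECS).
check_rpm_layout "$(pwd)" || echo "fix the layout before running rpmbuild"
```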

NOTE: If you want to, at this stage you can clean up the directory by removing the edk2 folder and the original source RPM with the following:

rm -r edk2-20180522git4b8552d/

rm edk2-20180522git4b8552d-1.4.6.xcpng8.2.src.rpm

We're almost ready to compile, but first, we need to make sure we have all the build dependencies installed:

sudo yum-builddep SPECS/*.spec -y

We should now be ready to re-compile. The build creates several new folders, but the one we really care about is "RPMS", which should hold the new .rpm package after we run the following command:

rpmbuild -ba SPECS/*.spec --define "_topdir $(pwd)"

Once the above command has finished compiling (and exited with code 0), running "ls" shows our newly created RPMS folder. Running "ls RPMS" shows a subdirectory called "x86_64", and "ls RPMS/x86_64/" shows our new RPM - edk2-20180522git4b8552d-1.4.6.xcpng8.2.x86_64.rpm - WOOHOO!
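
If your version numbers differ from mine, the file name will differ too, so it can be easier to list whatever the build produced rather than guess the name. A small sketch, where `list_built_rpms` is a made-up helper name:

```shell
# List every .rpm file under the RPMS folder of the given build
# directory, sorted by path. Prints nothing if RPMS does not exist.
list_built_rpms() {
    [ -d "$1/RPMS" ] || return 0
    find "$1/RPMS" -type f -name '*.rpm' | sort
}

# Run from the directory passed to rpmbuild as _topdir.
list_built_rpms .
```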

Let's copy that new RPM to our data folder so we can access it from our main machine:

cp RPMS/x86_64/edk2-20180522git4b8552d-1.4.6.xcpng8.2.x86_64.rpm /data/

Now if you go to the terminal on your main machine, and check the tianocore/xcp-ng-build-env-master/data folder, you can see the .rpm!

Now we have the file on our main machine, we need to copy it over to the XCP-ng host with scp (run this from the directory that contains the tianocore folder):

scp tianocore/xcp-ng-build-env-master/data/edk2-20180522git4b8552d-1.4.6.xcpng8.2.x86_64.rpm root@<xcp-ng-host-ip>:/tmp

Once the file has copied to the host, ssh to it:

ssh root@<xcp-ng-host-ip>

Once on the host, cd to the /tmp directory and you should be able to see your .rpm file that was copied over with scp as above.
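
Optionally, you can confirm the file survived the copy intact by comparing checksums on both machines. This sketch assumes sha256sum (GNU coreutils) is available on both sides; `file_sha256` is a helper name I made up:

```shell
# Print only the SHA-256 digest of a file, without the file name.
# Assumes sha256sum from GNU coreutils is installed.
file_sha256() {
    sha256sum "$1" | cut -d ' ' -f 1
}

# Only runs if the RPM from this guide is in the current directory.
rpm_file=edk2-20180522git4b8552d-1.4.6.xcpng8.2.x86_64.rpm
if [ -f "$rpm_file" ]; then
    file_sha256 "$rpm_file"
fi
```

Run it once in the data folder on your main machine and once in /tmp on the host; the two digests should be identical.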

Now, to actually apply the new package, we need to tell rpm to upgrade the package with --replacefiles and --replacepkgs, so it reinstalls over the existing package of the same version and our new logo takes effect:

rpm -U --replacefiles --replacepkgs edk2-20180522git4b8552d-1.4.6.xcpng8.2.x86_64.rpm

Once that has run (should be really really quick), head to your XOA, select the host and restart the toolstack (or just run "xe-toolstack-restart" from your ssh session).

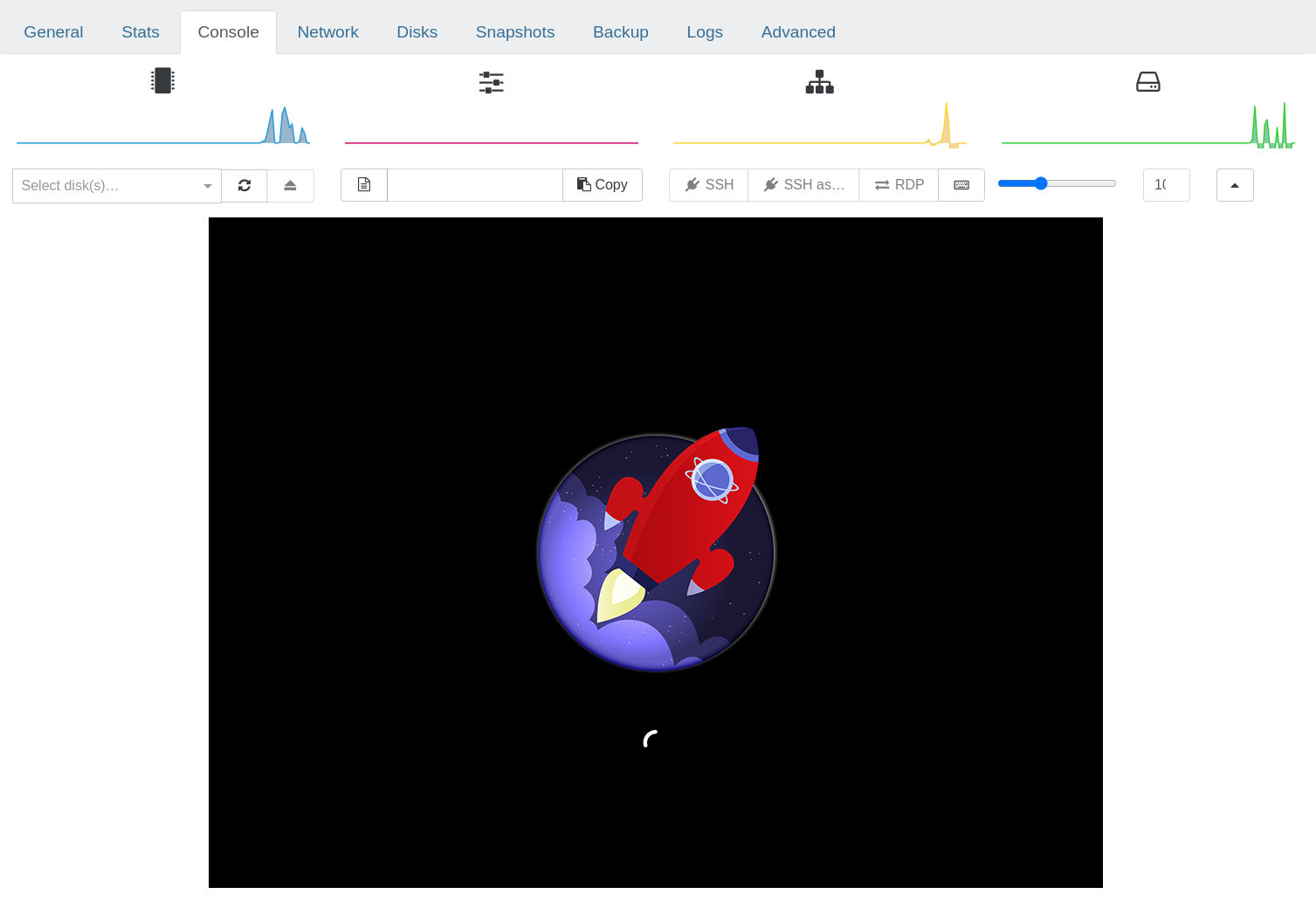

Once the toolstack has restarted, try launching a new UEFI VM and you should be greeted with the new Logo! 🥳