@stormi

It seems to be good here!

@olivierlambert When changes are made (like an IP change in the VM), they are now reported instantly by XO.

I can now go back to my work on integrating a BGP daemon to announce local IPs to the network (I started working on the xe-daemon last year but never had the time to finish it, and a few months later I saw your blog post about moving it to Rust).

Thank you!

PS: I'm using these two scripts to list the driver versions of all interfaces across our servers:

```
$ cat get_network_drivers_info.sh
#!/bin/bash
format="| %-13.13s | %-20.20s | %-20.20s | %-10.10s | %-7.7s | %-10.10s | %-30.30s | %-s \n"
printf "${format}" "date" "hostname" "OS" "interface" "driver" "version" "firmware" "yum"
printf "${format}" "----------------------------" "----------------------------" "----------------------------" "----------------------------" "----------------------------" "----------------------------" "----------------------------" "----------------------------"
if [ $# -gt 0 ]; then
    # Hosts passed as arguments, kept in the order given.
    servers=("$@")
else
    # All host addresses from host.json, except 192.168.124.9.
    servers=($(jq -r '.[] | .address' host.json | grep -Ev '^192\.168\.124\.9$'))
fi
for line in "${servers[@]}"; do
    # Copy the per-host template, run it remotely, report hosts that fail.
    scp get_network_drivers_info.sh.tpl "${line}":/tmp/get_network_drivers_info.sh > /dev/null 2>&1
    if ! ssh -n "${line}" bash /tmp/get_network_drivers_info.sh 2> /dev/null; then
        echo "${line} fail" >&2
    fi
done
```

```
$ cat get_network_drivers_info.sh.tpl
#!/bin/bash
format="| %-13.13s | %-20.20s | %-20.20s | %-10.10s | %-7.7s | %-10.10s | %-30.30s | %-s \n"
d=$(date '+%Y%m%d-%H%M')
name=$(hostname)
# OS version and installed ixgbe packages are per host: compute them once.
os_version=$(lsb_release -d | awk '{$1=""} 1' | sed 's/XenServer/XS/; s/ (xenenterprise)//; s/release //')
if command -v yum > /dev/null 2>&1; then
    packages=$(yum list installed | awk '/ixgbe/ {print $1"@"$2}' | tr '\n' ',')
else
    packages="NA"
fi
# Interfaces whose /sys/class/net entry links to a PCI device (physical NICs).
for interface in $(ls -l /sys/class/net/ | awk '/\/pci/ {print $9}'); do
    version=$(ethtool -i "${interface}" | awk '/^version:/ {$1=""; print}')
    firmware=$(ethtool -i "${interface}" | awk '/^firmware-version:/ {$1=""; print}')
    driver=$(ethtool -i "${interface}" | awk '/^driver:/ {$1=""; print}')
    printf "${format}" "${d}" "${name}" "${os_version}" "${interface}" "${driver}" "${version}" "${firmware}" "${packages}"
done
```
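The template calls `ethtool -i` three times per interface. A minimal sketch of parsing a single call instead, assuming the usual `key: value` layout of `ethtool -i` output; `parse_ethtool_info` is a hypothetical helper name, not part of the scripts above:

```bash
#!/bin/bash
# Hypothetical helper: parse the output of ONE `ethtool -i IFACE` call
# (read from stdin) and print "driver|version|firmware".
parse_ethtool_info() {
    awk -F': ' '
        $1 == "driver"           {driver = $2}
        $1 == "version"          {version = $2}
        $1 == "firmware-version" {firmware = $2}
        END {printf "%s|%s|%s\n", driver, version, firmware}'
}

# Possible use inside the template loop (sketch):
#   IFS='|' read -r driver version firmware < <(ethtool -i "${interface}" | parse_ethtool_info)
```

Reading the output once also avoids the leading space that `{$1=""; print}` leaves in each value.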

PS: the `host.json` file is generated with `xo-cli --list-objects type=host`.

@stormi Hello, a few weeks later I can confirm that the problem is solved here by using intel-ixgbe.x86_64@5.5.2-2.1.xcpng8.1 or intel-ixgbe.x86_64@5.5.2-2.1.xcpng8.2.

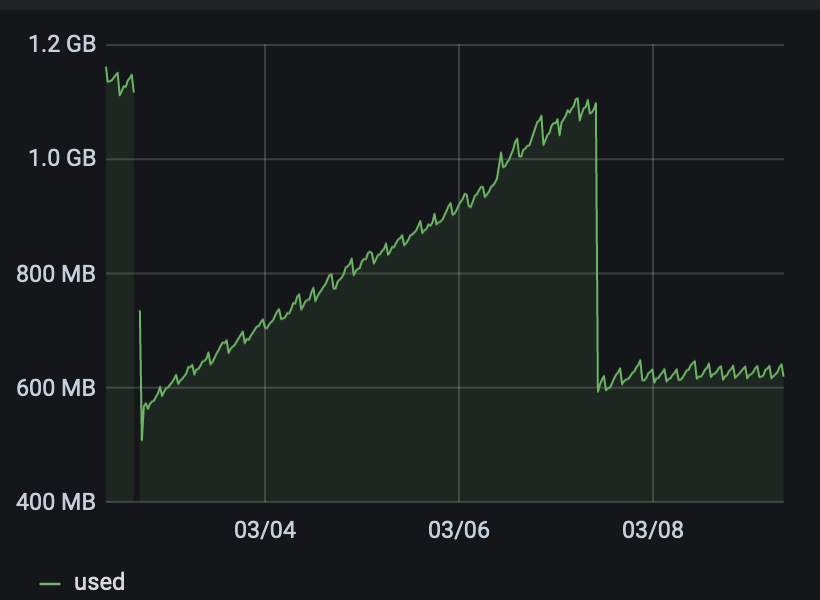

@stormi I have installed intel-ixgbe 5.5.2-2.1.xcpng8.2 on my server s0267. Let's wait a few days to check whether the memory leak is solved by this patch.
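To follow dom0 memory over those days, something like the following could be run periodically (for example from cron) on the server. It is only a sketch: the helper names, the log path and the choice of `MemFree` and `Slab` as leak indicators are assumptions, not what was used in this thread.

```bash
#!/bin/bash
# Hypothetical memory logger; helper names, log path and fields are assumptions.

# Print the value in kB of one /proc/meminfo field (e.g. MemFree, Slab).
# Returns non-zero if the field is missing.
meminfo_kb() {
    local field="$1" file="${2:-/proc/meminfo}"
    awk -v f="${field}:" '$1 == f {print $2; found = 1} END {exit (found ? 0 : 1)}' "$file"
}

# Append one "<date> <MemFree kB> <Slab kB>" line to the given log file.
log_memory_sample() {
    local log="$1" meminfo="${2:-/proc/meminfo}" free slab
    free=$(meminfo_kb MemFree "$meminfo") || return 1
    slab=$(meminfo_kb Slab "$meminfo") || return 1
    printf '%s %s %s\n' "$(date '+%Y%m%d-%H%M')" "$free" "$slab" >> "$log"
}
```

Usage: source the file, then call `log_memory_sample /var/log/memlog.txt` (hypothetical path) from a cron job.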

@stormi

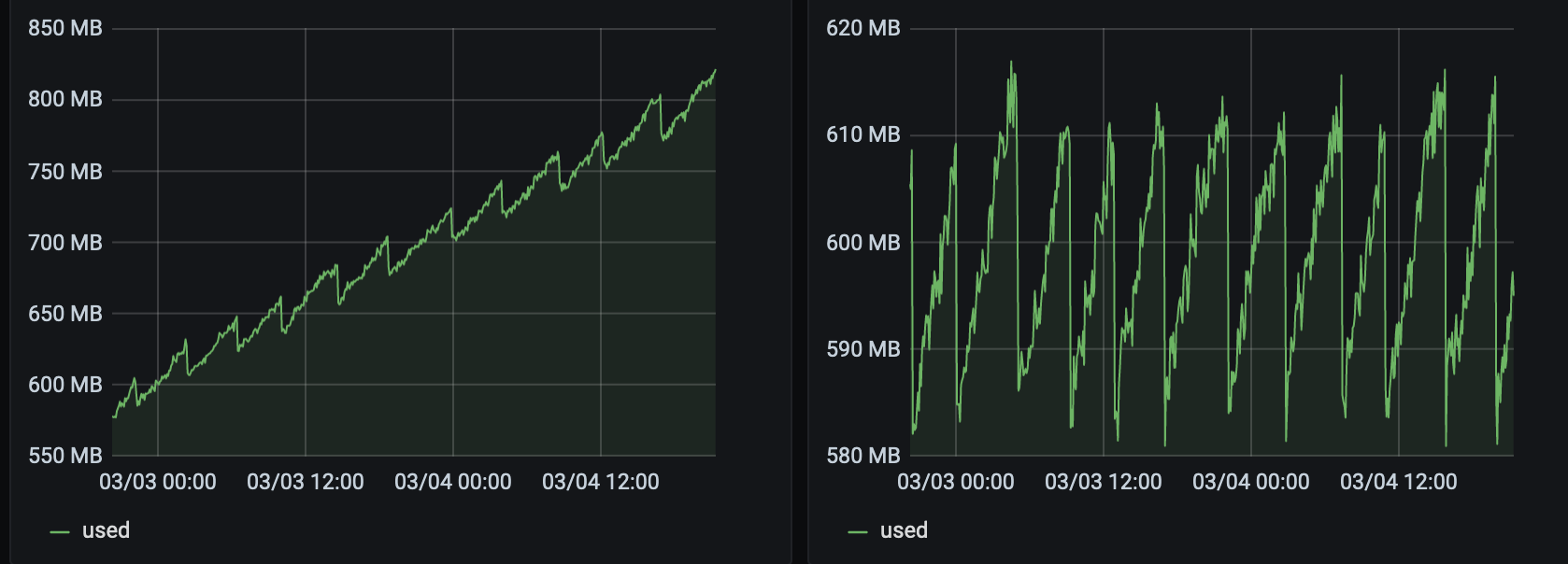

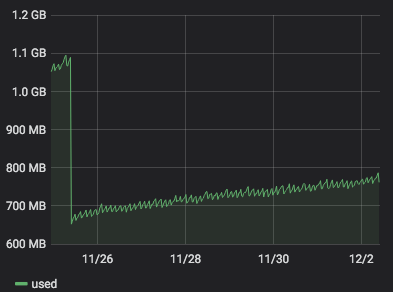

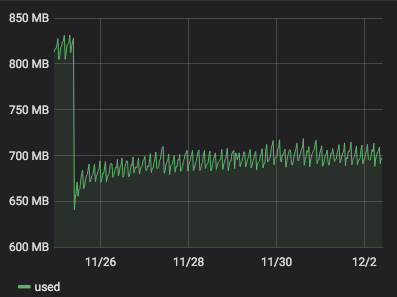

The two servers have been reinstalled with an up-to-date 8.2. Each hosts two VMs doing the same thing (~100 Mb/s of netdata streams).

The right one has 5.9.4-1.xcpng8.2, the left one has 5.5.2-2.xcpng8.2.

The patch seems to be OK for me.

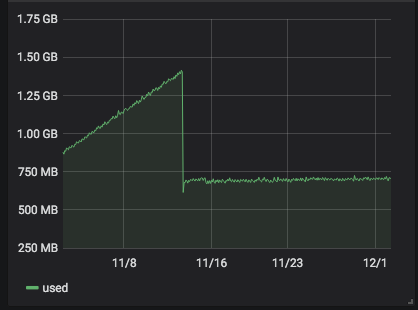

server 266 with alt-kernel: still no problem.

server 268 with 4.19.19-6.0.10.1.xcpng8.1: the problem started a few days ago, after several stable days.

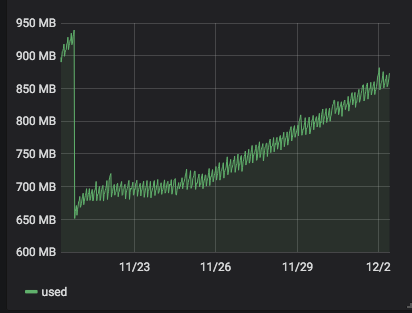

server 272 with 4.19.19-6.0.11.1.0.1.patch53disabled.xcpng8.1:


server 273 with 4.19.19-6.0.11.1.0.1.patch62disabled.xcpng8.1:

It seems that 4.19.19-6.0.11.1.0.1.patch62disabled.xcpng8.1 is more stable than 4.19.19-6.0.11.1.0.1.patch53disabled.xcpng8.1, but it is a bit early to be sure.

@stormi As for the kernel-4.19.19-6.0.10.1.xcpng8.1 test, I'm not sure it solves the problem, because I still see a small memory increase. We have to wait a bit longer.
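To put a number on a "small memory increase", a sketch that compares the first and last samples of a log. It assumes a hypothetical log format of one `<date> <MemFree kB> <Slab kB>` line per sample; `memory_trend` is an illustrative name.

```bash
#!/bin/bash
# Hypothetical helper: summarise a memory log made of
# "<date> <MemFree kB> <Slab kB>" lines as the change between
# the first and the last sample. Fails if there are fewer than 2 samples.
memory_trend() {
    awk 'NR == 1 {free0 = $2; slab0 = $3}
         {free1 = $2; slab1 = $3}
         END {
             if (NR < 2) exit 1
             printf "samples=%d MemFree_delta_kB=%d Slab_delta_kB=%d\n", NR, free1 - free0, slab1 - slab0
         }' "$1"
}
```

Comparing only the endpoints is a rough signal; a steady trend across many samples says more than a single delta.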

@stormi I have installed the two kernels:

```
272 ~]# yum list installed kernel | grep kernel
kernel.x86_64 4.19.19-6.0.11.1.0.1.patch53disabled.xcpng8.1
273 ~]# yum list installed kernel | grep kernel
kernel.x86_64 4.19.19-6.0.11.1.0.1.patch62disabled.xcpng8.1
```

I have removed the modification in /etc/modprobe.d/dist.conf on server 273.

Now we have to wait a little.

@stormi @r1

Four days later, I get:

`search extra built-in weak-updates override updates`: problem still present.

@stormi I have a server with only `search extra built-in weak-updates override updates`. We will see if it is better.
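Assuming the `search` line above is a depmod.d(5) `search` directive, changing it only affects which `ixgbe.ko` is picked once `depmod -a` has regenerated the module index, and the running driver only changes after a reload or reboot. A sketch to check which file and version would be loaded, and which version is loaded now; `module_source` is a hypothetical helper name:

```bash
#!/bin/bash
# Hypothetical helper: show which module file modprobe would load for a
# module (default: ixgbe), its version, and the loaded version if exposed.
# Run "depmod -a" first after editing the depmod search order.
module_source() {
    local mod="${1:-ixgbe}" file version
    if ! command -v modinfo > /dev/null 2>&1; then
        echo "modinfo not available" >&2
        return 2
    fi
    file=$(modinfo -n "$mod" 2> /dev/null) || { echo "${mod}: module not found" >&2; return 1; }
    version=$(modinfo -F version "$mod" 2> /dev/null)
    printf 'on disk: %s %s %s\n' "$mod" "${version:-unknown}" "$file"
    # /sys/module/<name>/version only exists if the module declares a version.
    if [ -r "/sys/module/${mod}/version" ]; then
        printf 'loaded:  %s %s\n' "$mod" "$(cat "/sys/module/${mod}/version")"
    fi
}
```

For example, `module_source ixgbe` should show whether the `ixgbe.ko` chosen on disk comes from the expected directory.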

@r1 Installed, and it works:

```
# dmesg | grep kmem
[ 6.181218] kmemleak: Kernel memory leak detector initialized
[ 6.181223] kmemleak: Automatic memory scanning thread started
```

I will check the leaks tomorrow.
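That check can be scripted through the kmemleak debugfs file. A minimal sketch, assuming debugfs is mounted at `/sys/kernel/debug` and the script runs as root; the function names and the `KMEMLEAK_CTL` override are hypothetical:

```bash
#!/bin/bash
# Hypothetical helpers around the kmemleak debugfs interface.

# Force an immediate scan, then save the current report to the given file.
# KMEMLEAK_CTL is a hypothetical override of the control file path.
kmemleak_snapshot() {
    local ctl="${KMEMLEAK_CTL:-/sys/kernel/debug/kmemleak}" out="$1"
    echo scan > "$ctl" || return 1
    cat "$ctl" > "$out"
}

# Count suspected leaks in a saved report: each record starts with
# "unreferenced object 0x... (size N):". Prints 0 if there are none.
count_leaks() {
    grep -c '^unreferenced object' "$1"
}
```

Saving one report per day and comparing `count_leaks` over time shows whether the number of unreferenced objects keeps growing.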

Hello,

We have the same problem here on multiple servers.

We also have 10G interfaces. We use a local ext SR.

```
(::bbxl0271) (2 running) [08:43 bbxl0271 ~]# lsmod | sort -k 2 -n -r
ipv6 548864 313 nf_nat_ipv6
sunrpc 413696 18 lockd,nfsv3,nfs_acl,nfs
ixgbe 380928 0
fscache 380928 1 nfs
nfs 307200 2 nfsv3
libata 274432 2 libahci,ahci
xhci_hcd 258048 1 xhci_pci
scsi_mod 253952 15 fcoe,scsi_dh_emc,sd_mod,dm_multipath,scsi_dh_alua,scsi_transport_fc,usb_storage,libfc,bnx2fc,uas,megaraid_sas,libata,sg,scsi_dh_rdac,scsi_dh_hp_sw
aesni_intel 200704 0
megaraid_sas 167936 4
nf_conntrack 163840 6 xt_conntrack,nf_nat,nf_nat_ipv6,nf_nat_ipv4,openvswitch,nf_conncount
bnx2fc 159744 0
dm_mod 151552 5 dm_multipath
openvswitch 147456 12
libfc 147456 3 fcoe,bnx2fc,libfcoe
hid 122880 2 usbhid,hid_generic
mei 114688 1 mei_me
lockd 110592 2 nfsv3,nfs
cnic 81920 1 bnx2fc
libfcoe 77824 2 fcoe,bnx2fc
usb_storage 73728 1 uas
scsi_transport_fc 69632 3 fcoe,libfc,bnx2fc
ipmi_si 65536 0
ipmi_msghandler 61440 2 ipmi_devintf,ipmi_si
usbhid 57344 0
sd_mod 53248 5
tun 49152 0
nfsv3 49152 1
x_tables 45056 6 xt_conntrack,iptable_filter,xt_multiport,xt_tcpudp,ipt_REJECT,ip_tables
mei_me 45056 0
sg 40960 0
libahci 40960 1 ahci
ahci 40960 0
8021q 40960 0
nf_nat 36864 3 nf_nat_ipv6,nf_nat_ipv4,openvswitch
fcoe 32768 0
dm_multipath 32768 0
uas 28672 0
lpc_ich 28672 0
ip_tables 28672 2 iptable_filter
i2c_i801 28672 0
cryptd 28672 3 crypto_simd,ghash_clmulni_intel,aesni_intel
uio 20480 1 cnic
scsi_dh_alua 20480 0
nf_defrag_ipv6 20480 2 nf_conntrack,openvswitch
mrp 20480 1 8021q
ipmi_devintf 20480 0
aes_x86_64 20480 1 aesni_intel
acpi_power_meter 20480 0
xt_tcpudp 16384 9
xt_multiport 16384 1
xt_conntrack 16384 5
xhci_pci 16384 0
stp 16384 1 garp
skx_edac 16384 0
scsi_dh_rdac 16384 0
scsi_dh_hp_sw 16384 0
scsi_dh_emc 16384 0
pcbc 16384 0
nsh 16384 1 openvswitch
nfs_acl 16384 1 nfsv3
nf_reject_ipv4 16384 1 ipt_REJECT
nf_nat_ipv6 16384 1 openvswitch
nf_nat_ipv4 16384 1 openvswitch
nf_defrag_ipv4 16384 1 nf_conntrack
nf_conncount 16384 1 openvswitch
llc 16384 2 stp,garp
libcrc32c 16384 3 nf_conntrack,nf_nat,openvswitch
ipt_REJECT 16384 3
iptable_filter 16384 1
intel_rapl_perf 16384 0
intel_powerclamp 16384 0
hid_generic 16384 0
grace 16384 1 lockd
glue_helper 16384 1 aesni_intel
ghash_clmulni_intel 16384 0
garp 16384 1 8021q
crypto_simd 16384 1 aesni_intel
crct10dif_pclmul 16384 0
crc_ccitt 16384 1 ipv6
crc32_pclmul 16384 0
```

I'll install the kmemleak kernel on one server today.

@tcorp8310 For information, you can use the `xe template-export template-uuid= filename=` command to export a template to a file.
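If only the template's name is known, a small wrapper can look up its UUID first. A sketch, assuming exactly one template carries that `name-label`; `export_template_by_name` is a hypothetical helper, and only the `xe template-list` and `xe template-export` commands are real:

```bash
#!/bin/bash
# Hypothetical helper: export the template whose name-label is $1 to file $2.
# Refuses to guess when no template or several templates match the name.
export_template_by_name() {
    local name="$1" file="$2" uuid
    uuid=$(xe template-list name-label="$name" --minimal) || return 1
    if [ -z "$uuid" ]; then
        echo "no template named '${name}'" >&2
        return 1
    fi
    case "$uuid" in
        *,*) echo "several templates named '${name}': ${uuid}" >&2; return 1 ;;
    esac
    xe template-export template-uuid="$uuid" filename="$file"
}
```

Usage: `export_template_by_name "My template" /mnt/backup/my-template.xva` (example name and path).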