@olivierlambert Yes, direct access is fine.

Posts

-

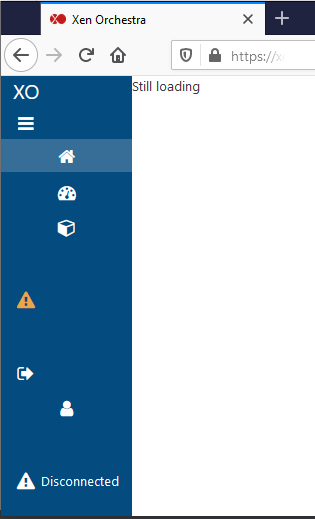

XOce "Still Loading"

My Nginx RP .conf

    location / {
        proxy_pass https://192.168.1.200:443;
        proxy_set_header Host $host;
        proxy_set_header X-Real-IP $remote_addr;
        proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
        proxy_set_header X-Forwarded-Proto $scheme;
        proxy_set_header Connection "upgrade";
        proxy_set_header Upgrade $http_upgrade;
        proxy_http_version 1.1;
        proxy_redirect default;
        proxy_read_timeout 1800;
    }

Yes, I used the https://xen-orchestra.com/docs/reverse_proxy.html instructions. No, it's not working right. Please help.
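One common cause of a stuck "Still Loading" screen behind a reverse proxy is the WebSocket upgrade not reaching the backend. This is a guess, not something confirmed for this setup. A minimal sketch for checking it follows. The helper name `ws_status`, the `XO_HOST` variable, and the `/api/` path are assumptions made for illustration.

```shell
#!/bin/sh
# Classify the status line of an HTTP response (hypothetical helper).
ws_status() {
    case "$1" in
        HTTP/*" 101"*) echo "upgrade-ok" ;;   # proxy passed the WebSocket upgrade
        HTTP/*)        echo "no-upgrade" ;;   # answered, but did not switch protocols
        *)             echo "unknown" ;;      # empty or malformed input
    esac
}

# Live usage (assumptions: XO_HOST is the proxy's host name, /api/ is the WebSocket path):
# line=$(curl -sk --http1.1 --max-time 5 -o /dev/null -D - \
#     -H "Connection: Upgrade" -H "Upgrade: websocket" \
#     -H "Sec-WebSocket-Version: 13" \
#     -H "Sec-WebSocket-Key: dGhlIHNhbXBsZSBub25jZQ==" \
#     "https://$XO_HOST/api/" | head -n 1)
# ws_status "$line"
```

If the proxied request reports no-upgrade while the same request sent straight to the backend reports upgrade-ok, the proxy configuration is the likely place to look.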

-

RE: vGPU - which graphics card supported?

I am running a WX7100 under XCP-ng 7.6. It only allows full GPU passthrough, so you can run multiple VMs on the server, but only one at a time can be assigned the GPU. I have tested it to work properly with Server 2016 Std, Server 2019 Std, and Win10 Pro. Currently I have it crunching BOINC under Win10 Pro and here are the stats.... BOINCstats for Norby

-

RE: XCP-ng Windows Management Agent

I did try that, but it still did not want to recognize and load the PV drivers properly. The VM is still limping along with the Realtek drivers, and it is performing noticeably slowly. Oddly, others upgraded without a hitch.

-

RE: XCP-ng 7.5 - MegaRAID SAS 9240-8i hang/reboot issue.

@r1 I have since replaced the LSI 9240-8i in this machine with an LSI 9210-8i. The server has been running stable for a few months. I took the LSI 9240-8i and put it in a new build and installed XCP-ng on it, and it is running stable too. You can see the specs in my profile. What this boils down to, I believe, is that the hardware drivers don't want to play nice together. Both are running the same version of XCP-ng (and are in the same pool just for kicks).

-

RE: XCP-ng Windows Management Agent

1, Upgrade Pool Master First.

- Disable HA for Pool

- Shuffle all running VMs to another server in Pool.

- Place server in Maintenance mode+, designate new Pool Master

- Mount XCP-ng iso in Virtual Media drive on Server

- Reboot Server to Virtual Media

- Follow Upgrade steps

- Unmount Virtual Media and reboot server.

- Designate as Pool Master

2, Upgrade Subsequent servers in Pool

- Shuffle all running VMs to another server in Pool.

- Place server in Maintenance mode+

- Mount XCP-ng iso in Virtual Media drive on Server

- Reboot Server to Virtual Media

- Follow Upgrade steps

- Unmount Virtual Media and reboot server.

3, Repeat part 2 for all remaining servers in the Pool.

- Enable HA for Pool

+ = I manually shuffle the VMs before placing the server in Maintenance Mode. The automation does OK for a couple of VMs, but when there are a half-dozen in play it gets a little wonky and fails partway through. You wind up attempting two or three times and restarting the toolstack a few times before they are all "automatically" moved. Manually I can move two or three at once to differing servers and get done a bit quicker and with less of a headache.
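The order of operations above can be sketched as a dry run that only prints the xe commands and executes nothing. The function name and the UUID placeholders are hypothetical. The sketch uses `xe host-evacuate` where the steps above move VMs by hand, and it leaves out the pool-master hand-off.

```shell
#!/bin/sh
# Dry run only: prints the order of operations, executes nothing.
# Host UUIDs and the heartbeat SR UUID are placeholders supplied by the caller.
plan_rolling_upgrade() {
    sr=$1; shift                      # first argument: heartbeat SR UUID
    echo "xe pool-ha-disable"
    for host in "$@"; do              # remaining arguments: hosts, pool master first
        echo "xe host-disable uuid=$host"
        echo "xe host-evacuate uuid=$host"
        echo "# boot $host from the XCP-ng ISO, upgrade, remove media, reboot"
        echo "xe host-enable uuid=$host"
    done
    echo "xe pool-ha-enable heartbeat-sr-uuids=$sr"
}

plan_rolling_upgrade SR-UUID MASTER-UUID HOST2-UUID HOST3-UUID
```

Printing the plan first makes it easy to review the host order before touching a production pool.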

I had 3 servers (Xen1, 2 and 3, we'll call them) in this pool running approximately 20 VMs under XS 7.1. Between the remaining two, I was able to keep them running while any particular server was being upgraded to XCP-ng 7.6. When I upgraded Xen1, Xen2 and Xen3 ran all the VMs from a shared SR. When it came time to upgrade Xen2, I shuffled all the sites off the server onto Xen1. Xen3 wasn't messed with. Then I upgraded Xen3 and all the sites on it went to Xen2. After I was done with Xen3, I balanced the VMs out across the pool. It seemed to go smoothly, but shortly after I was done, I noticed alarms going off for one of the VMs (VM2, we'll call it). VM1, 2, 3 and 4 are all Windows VMs. VMs 1, 2, and 3 are Server 2012 R2; VM4 is a Windows 10 Ent virtual workstation I use to do all this stuff with. VM4 actually floats around from pool to pool depending on where I need it and what for. During this process it was running from local storage on Xen2 or Xen3. The bulk of the rest of the VMs are Linux-based, and they all chose to play nice too.

So when I investigated the alarm, it turned out the VM had detected new hardware, unloaded the PV drivers, and loaded the QEMU, Realtek, and so on. The other VMs (1, 3 and 4) did not react the same way and all still have their PV optimizations even after subsequent reboots. (I couldn't live migrate the VMs; I had to shut them down, move them, and reboot them until all servers in the pool were on the same version.)

In an attempt to resolve the issue...

- I tried installing the Citrix drivers (all other VMs are using Citrix drivers carried over from XS 7.1). That didn't work.

- I found RC2 and the directions to uninstall the Citrix drivers and install RC2. I uninstalled, cleaned as directed (your directions fail to mention that a service needs to be disabled in order to delete the last xen file from System32), and installed RC2. That didn't work.

- I double-checked the files and Device Manager, and ran c:\Program Files\xcp-ng\XenTools\InstallAgent.exe DEFAULT (cmd as Admin). I got the notice to reboot and followed through. On reboot another notice popped up, but it still wasn't loading.

- I rebooted 3 more times (as was mentioned) and still no luck.

- I found RC3 and followed the same process with RC3. Still no luck.

- Now another SysAdmin is working with me and we've tried cleansing the system as prescribed and installing manually. I even found a how-to from a Veeam issue with similar symptoms (https://forums.veeam.com/veeam-agent-for-windows-f33/veeam-bare-metal-recovery-on-xenserver-with-pv-nic-drivers-t48304.html) so I made an edit to a couple registry keys and still no luck.

As of right now, we're still poking around the one copy, while the other copy goes "Time Traveling." (see Previous post).

-

RE: XCP-ng Windows Management Agent

So I've been testing XCP-ng since the 7.5 release first came out. It has been my experience the PV drivers are automatically detected and install from Windows. (I have created new windows VMs and they come up already optimized.)

Recently I have been upgrading our servers from XS 7.1 to XCP-ng 7.6. The vast majority took the upgrade like a textbook and are fine. VMs running either the previous Citrix or the Windows Update drivers are up and optimized without a need to install these packages. EXCEPT (there's always an exception, right?) I have a Windows Server 2012 R2 VM and it doesn't want to play nice. After the hypervisors were upgraded and the shuffling was all said and done, this machine will not load the PV drivers. I've tried the "clean your system" how-to, the manual install, drivers RC2 and RC3, even harvested the Citrix drivers in a somewhat creative way, and they'll all install, but Windows insists on loading the Realtek driver for the NIC and other "less than optimized" drivers. Like Kirk in ST IV, "I'm going to attempt time travel" to save this whale, by exporting the VM and then importing it into an older version of XS where I can try to install the guest utils properly. Xen won't let you simply "move" to an older version of the server.

The odd part is, I have an essentially identical VM which went through the upgrade process the same way and it's fine. I also have a Windows 10 Enterprise VM that survived fine too. This one, for some odd reason, isn't.

I have two copies of this server running and I'm trying different solutions concurrently. I'm open to other suggestions.

-

RE: XCP-ng 7.5 - MegaRAID SAS 9240-8i hang/reboot issue.

@mpyusko BTW.... Yes, it did "repair" the SR, but it did not rebuild the array. It is functioning off one drive only. The other is now out of sync. Not a perfect transition, but it's nice to know my data isn't lost by simply swapping adapters. Clearly I'll need to build a software array to rectify the issue.

-

RE: XCP-ng 7.5 - MegaRAID SAS 9240-8i hang/reboot issue.

I just received an LSI SAS-9211 I ordered. I have the exact same controller running in a production environment for a web-hosting company. Similar architecture (HP DL380 G6) and it operates flawlessly with XS 7.1, so I figured I would try one in this machine. Initially I wanted the 9240 for its hardware-based RAID6. However, with ZFS support rolling out in the new versions of XS/XCP-ng, there are greater gains to be had there. So opting for a different JBOD-mode controller was a fair choice.

Interesting things to note.

- Both brand new LSI cards

- they each use different drivers.

- 9240 supports a broad range of RAID levels 0,1,10,5,6,etc and JBOD

- 9211 supports RAID levels 0,1,10, 1E/10E and JBOD

- My system was originally configured for two drives in a 1TB RAID1 volume and 4 drives in a 9TB RAID5 volume.

I removed the 9240 and installed the 9211, making sure port 0 = 0 and 1 = 1. I then booted the system and entered the LSI setup. Unlike the 9240, there was no option to import existing arrays. Rather than destroy everything on the 1TB array (the 9TB was still empty), I opted to just boot straight to XCP-ng 7.6 without any changes. The last time I shut down the server I detached all the storage volumes from the VMs. (A quick trick I learned... detach volumes, export VMs - only takes a couple minutes and a few KB - then dd the flash drive to a backup flash. Upgrade Xen, reattach drives. Keeps you from risking your data, especially on critical machines.) When I booted this time, all the SRs were broken, but a quick repair brought them back, and when I reattached the volumes, the VMs booted. Remember this was originally a hardware-level array. I'm still trying to peek into the array to see if both drives are functioning, but it appears healthy so far.
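The "dd the flash drive to a backup" step mentioned above could look roughly like the sketch below, which copies a source to an image and then verifies the copy byte for byte. The function name `backup_flash` and the paths are hypothetical. `/dev/sdX` is a placeholder to be replaced with the real device.

```shell
#!/bin/sh
# Copy a boot flash drive (or any file) to an image and verify the copy.
# Both paths are caller-supplied; /dev/sdX below is a placeholder, not a real device.
backup_flash() {
    src=$1; dst=$2
    dd if="$src" of="$dst" bs=1048576 2>/dev/null || return 1
    sync                              # flush buffers before comparing
    cmp -s "$src" "$dst"              # exit status 0 only if byte-identical
}

# Example (as root, with the flash drive unmounted):
# backup_flash /dev/sdX /mnt/backup/xcp-boot.img && echo "backup verified"
```

Verifying with cmp costs a second read of the device, but it confirms that the image is a byte-for-byte copy before the upgrade starts.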

The big question is will the system still generate the same issue? Well, one is a megaraid controller and the other isn't so they use different drivers. Here is the output...

    [root@vincent ~]# lspci |grep LSI
    07:00.0 Serial Attached SCSI controller: LSI Logic / Symbios Logic SAS2008 PCI-Express Fusion-MPT SAS-2 [Falcon] (rev 03)
    [root@vincent ~]# lspci -vv -s 07:00.0
    07:00.0 Serial Attached SCSI controller: LSI Logic / Symbios Logic SAS2008 PCI-Express Fusion-MPT SAS-2 [Falcon] (rev 03)
        Subsystem: LSI Logic / Symbios Logic Device 3020
        Control: I/O+ Mem+ BusMaster+ SpecCycle- MemWINV- VGASnoop- ParErr- Stepping- SERR- FastB2B- DisINTx+
        Status: Cap+ 66MHz- UDF- FastB2B- ParErr- DEVSEL=fast >TAbort- <TAbort- <MAbort- >SERR- <PERR- INTx-
        Latency: 0, Cache Line Size: 64 bytes
        Interrupt: pin A routed to IRQ 40
        Region 0: I/O ports at ec00 [size=256]
        Region 1: Memory at df2bc000 (64-bit, non-prefetchable) [size=16K]
        Region 3: Memory at df2c0000 (64-bit, non-prefetchable) [size=256K]
        Expansion ROM at df200000 [disabled] [size=512K]
        Capabilities: [50] Power Management version 3
            Flags: PMEClk- DSI- D1+ D2+ AuxCurrent=0mA PME(D0-,D1-,D2-,D3hot-,D3cold-)
            Status: D0 NoSoftRst+ PME-Enable- DSel=0 DScale=0 PME-
        Capabilities: [68] Express (v2) Endpoint, MSI 00
            DevCap: MaxPayload 4096 bytes, PhantFunc 0, Latency L0s <64ns, L1 <1us
                ExtTag+ AttnBtn- AttnInd- PwrInd- RBE+ FLReset+
            DevCtl: Report errors: Correctable- Non-Fatal+ Fatal+ Unsupported+
                RlxdOrd+ ExtTag- PhantFunc- AuxPwr- NoSnoop+ FLReset-
                MaxPayload 256 bytes, MaxReadReq 512 bytes
            DevSta: CorrErr- UncorrErr- FatalErr- UnsuppReq- AuxPwr- TransPend-
            LnkCap: Port #0, Speed 5GT/s, Width x8, ASPM L0s, Exit Latency L0s <64ns, L1 <1us
                ClockPM- Surprise- LLActRep- BwNot-
            LnkCtl: ASPM Disabled; RCB 64 bytes Disabled- CommClk+
                ExtSynch- ClockPM- AutWidDis- BWInt- AutBWInt-
            LnkSta: Speed 5GT/s, Width x8, TrErr- Train- SlotClk+ DLActive- BWMgmt- ABWMgmt-
            DevCap2: Completion Timeout: Range BC, TimeoutDis+, LTR-, OBFF Not Supported
            DevCtl2: Completion Timeout: 65ms to 210ms, TimeoutDis-, LTR-, OBFF Disabled
            LnkCtl2: Target Link Speed: 5GT/s, EnterCompliance- SpeedDis-
                Transmit Margin: Normal Operating Range, EnterModifiedCompliance- ComplianceSOS-
                Compliance De-emphasis: -6dB
            LnkSta2: Current De-emphasis Level: -6dB, EqualizationComplete-, EqualizationPhase1-
                EqualizationPhase2-, EqualizationPhase3-, LinkEqualizationRequest-
        Capabilities: [d0] Vital Product Data
    pcilib: sysfs_read_vpd: read failed: Input/output error
            Not readable
        Capabilities: [a8] MSI: Enable- Count=1/1 Maskable- 64bit+
            Address: 0000000000000000 Data: 0000
        Capabilities: [c0] MSI-X: Enable+ Count=15 Masked-
            Vector table: BAR=1 offset=00002000
            PBA: BAR=1 offset=00003800
        Capabilities: [100 v1] Advanced Error Reporting
            UESta: DLP- SDES- TLP- FCP- CmpltTO- CmpltAbrt- UnxCmplt- RxOF- MalfTLP- ECRC- UnsupReq- ACSViol-
            UEMsk: DLP- SDES- TLP- FCP- CmpltTO- CmpltAbrt+ UnxCmplt+ RxOF- MalfTLP- ECRC- UnsupReq- ACSViol-
            UESvrt: DLP+ SDES+ TLP+ FCP+ CmpltTO+ CmpltAbrt- UnxCmplt- RxOF+ MalfTLP+ ECRC+ UnsupReq- ACSViol-
            CESta: RxErr- BadTLP- BadDLLP- Rollover- Timeout- NonFatalErr-
            CEMsk: RxErr+ BadTLP+ BadDLLP+ Rollover+ Timeout+ NonFatalErr+
            AERCap: First Error Pointer: 00, GenCap+ CGenEn- ChkCap+ ChkEn-
        Capabilities: [138 v1] Power Budgeting <?>
        Capabilities: [150 v1] Single Root I/O Virtualization (SR-IOV)
            IOVCap: Migration-, Interrupt Message Number: 000
            IOVCtl: Enable- Migration- Interrupt- MSE- ARIHierarchy+
            IOVSta: Migration-
            Initial VFs: 16, Total VFs: 16, Number of VFs: 0, Function Dependency Link: 00
            VF offset: 1, stride: 1, Device ID: 0072
            Supported Page Size: 00000553, System Page Size: 00000001
            Region 0: Memory at 0000000000000000 (64-bit, non-prefetchable)
            Region 2: Memory at 0000000000000000 (64-bit, non-prefetchable)
            VF Migration: offset: 00000000, BIR: 0
        Capabilities: [190 v1] Alternative Routing-ID Interpretation (ARI)
            ARICap: MFVC- ACS-, Next Function: 0
            ARICtl: MFVC- ACS-, Function Group: 0
        Kernel driver in use: mpt3sas
    [root@vincent ~]#

And specifically the module in use...

    [root@vincent ~]# modinfo mpt3sas |grep -i version
    version: 22.00.00.00
    srcversion: 80624A1362CD953ED59AF65
    vermagic: 4.4.0+10 SMP mod_unload modversions
    [root@vincent ~]#

(Yes, my server is named after the robot in The Black Hole.)
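When comparing driver versions across several hosts or distros, it can help to pull out just the version field. A minimal sketch, assuming modinfo output arrives on stdin; the function name `module_version` is made up for illustration. On a live host, `modinfo -F version mpt3sas` returns the same field directly.

```shell
#!/bin/sh
# Pull the "version:" field out of modinfo output read from stdin.
module_version() {
    awk '$1 == "version:" { print $2; exit }'
}

# Live usage on a host: modinfo mpt3sas | module_version
# Sample taken from the output above:
printf '%s\n' \
    'version:        22.00.00.00' \
    'srcversion:     80624A1362CD953ED59AF65' \
    'vermagic:       4.4.0+10 SMP mod_unload modversions' | module_version
```

The exact match on the first field keeps srcversion and vermagic out of the result, which a plain grep for "version" would not.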

-

RE: XCP-ng 7.5 - MegaRAID SAS 9240-8i hang/reboot issue.

@olivierlambert It will be. Kali with 4.15 and Debian with 4.9 both do not exhibit the issue. However Xenserver and XCP-ng both do. I'd be interested to compare their compiler settings as to what they do and do not include.

-

RE: XCP-ng 7.5 - MegaRAID SAS 9240-8i hang/reboot issue.

Debian Stretch reports:

    # modinfo megaraid_sas | grep version
    version: 06.811.02.00-rc1
    srcversion: 64B34706678212A7A9CC1B1
    vermagic: 4.9.0-7-amd64 SMP mod_unload modversions

and it completed successfully.

Unfortunately, the NIC drivers are not configured for this boot flash, so I can't copy and paste the console output.

-

RE: XCP-ng 7.5 - MegaRAID SAS 9240-8i hang/reboot issue.

Yes, you are understanding correctly. I have root, console, iDRAC, KVM and physical access to the machine.

The SEL reports:

    Normal 0.000202 Mon Oct 15 2018 03:24:03 An OEM diagnostic event has occurred.
    Normal 0.000201 Mon Oct 15 2018 03:24:03 An OEM diagnostic event has occurred.
    Normal 0.000200 Mon Oct 15 2018 03:24:03 An OEM diagnostic event has occurred.
    Normal 0.000199 Mon Oct 15 2018 03:24:03 An OEM diagnostic event has occurred.
    Non-Recoverable 0.000198 Mon Oct 15 2018 03:24:03 CPU 1 machine check detected.
    Normal 0.000197 Mon Oct 15 2018 03:24:00 An OEM diagnostic event has occurred.
    Critical 0.000196 Mon Oct 15 2018 03:24:00 A bus fatal error was detected on a component at bus 0 device 9 function 0.
    Critical 0.000195 Mon Oct 15 2018 03:23:59 A bus fatal error was detected on a component at slot 3.

Please note, I have tried changing slots; the same issue occurs, and the SEL reports accordingly. The kern.log does not have any applicable output, neither in "normal" mode nor in "safe mode". The same applies to dmesg. I'm running tail -f on both files. If there is any output, it's not being logged or displayed.
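To pick the actual faults out of the "OEM diagnostic event" noise, the SEL can be filtered by severity. A minimal sketch, assuming the log has been saved as text with one entry per line and the severity in the first column. The function name `sel_errors` is hypothetical.

```shell
#!/bin/sh
# Keep only SEL lines whose severity is Critical or Non-Recoverable (reads stdin).
sel_errors() {
    grep -E '^(Critical|Non-Recoverable)[[:space:]]'
}

# Sample entries taken from the SEL above, one per line:
printf '%s\n' \
    'Normal 0.000199 Mon Oct 15 2018 03:24:03 An OEM diagnostic event has occurred.' \
    'Non-Recoverable 0.000198 Mon Oct 15 2018 03:24:03 CPU 1 machine check detected.' \
    'Critical 0.000195 Mon Oct 15 2018 03:23:59 A bus fatal error was detected on a component at slot 3.' \
    | sel_errors
```

On the sample this keeps the machine check and the bus fatal error and drops the diagnostic entry.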

Under "safe mode" the output is:

    # lspci -vv -s 07:00.0
    07:00.0 RAID bus controller: LSI Logic / Symbios Logic MegaRAID SAS 2008 [Falcon] (rev 03)
        Subsystem: LSI Logic / Symbios Logic MegaRAID SAS 9240-8i
        Control: I/O+ Mem+ BusMaster+ SpecCycle- MemWINV- VGASnoop- ParErr- Stepping- SERR- FastB2B- DisINTx+
        Status: Cap+ 66MHz- UDF- FastB2B- ParErr- DEVSEL=fast >TAbort- <TAbort- <MAbort- >SERR- <PERR- INTx-
        Latency: 0, Cache Line Size: 64 bytes
        Interrupt: pin A routed to IRQ 40
        Region 0: I/O ports at ec00 [size=256]
        Region 1: Memory at df2bc000 (64-bit, non-prefetchable) [size=16K]
        Region 3: Memory at df2c0000 (64-bit, non-prefetchable) [size=256K]
        Expansion ROM at df200000 [disabled] [size=256K]
        Capabilities: [50] Power Management version 3
            Flags: PMEClk- DSI- D1+ D2+ AuxCurrent=0mA PME(D0-,D1-,D2-,D3hot-,D3cold-)
            Status: D0 NoSoftRst+ PME-Enable- DSel=0 DScale=0 PME-
        Capabilities: [68] Express (v2) Endpoint, MSI 00
            DevCap: MaxPayload 4096 bytes, PhantFunc 0, Latency L0s <64ns, L1 <1us
                ExtTag+ AttnBtn- AttnInd- PwrInd- RBE+ FLReset+
            DevCtl: Report errors: Correctable- Non-Fatal+ Fatal+ Unsupported+
                RlxdOrd+ ExtTag- PhantFunc- AuxPwr- NoSnoop+ FLReset-
                MaxPayload 256 bytes, MaxReadReq 512 bytes
            DevSta: CorrErr- UncorrErr- FatalErr- UnsuppReq- AuxPwr- TransPend-
            LnkCap: Port #0, Speed 5GT/s, Width x8, ASPM L0s, Exit Latency L0s <64ns, L1 <1us
                ClockPM- Surprise- LLActRep- BwNot-
            LnkCtl: ASPM Disabled; RCB 64 bytes Disabled- CommClk+
                ExtSynch- ClockPM- AutWidDis- BWInt- AutBWInt-
            LnkSta: Speed 5GT/s, Width x8, TrErr- Train- SlotClk+ DLActive- BWMgmt- ABWMgmt-
            DevCap2: Completion Timeout: Range BC, TimeoutDis+, LTR-, OBFF Not Supported
            DevCtl2: Completion Timeout: 65ms to 210ms, TimeoutDis-, LTR-, OBFF Disabled
            LnkCtl2: Target Link Speed: 5GT/s, EnterCompliance- SpeedDis-
                Transmit Margin: Normal Operating Range, EnterModifiedCompliance- ComplianceSOS-
                Compliance De-emphasis: -6dB
            LnkSta2: Current De-emphasis Level: -6dB, EqualizationComplete-, EqualizationPhase1-
                EqualizationPhase2-, EqualizationPhase3-, LinkEqualizationRequest-

I really don't feel I should be having this issue since it is all mainstream, enterprise hardware. The only thing "odd" about this server is I pulled out the PERC 6/i controller and installed a brand new LSI controller because my drives exceed the 2TB limit of the PERC. Even when idle in Maintenance Mode, it will still randomly reboot with the same SEL output. This makes it too unstable to run for production, or even a dev environment. It could be minutes, hours, or days between random reboots, probably due to the kernel accessing the controller for some health check or something. In Maintenance Mode, there are no VMs running, just XCP-ng, and that's it. The system is on a conditioned power source with battery backup, so I am ruling out dips and spikes. The iDRAC also reports on power quality, usage, and health. Everything is good. As I said before, this does not happen under Kali. I probably have other boot flashes for other OSes and distros I can try. But the fact is, if it was hardware related, then it would never be stable.

-

RE: XCP-ng 7.5 - MegaRAID SAS 9240-8i hang/reboot issue.

Just got to it again....

    ***** ahci Version Info *****
    version: 3.0
    srcversion: 35F0A9078B4BB938E54A1E7
    vermagic: 4.4.0+10 SMP mod_unload modversions
    ***** megaraid_sas Version Info *****
    version: 07.703.05.00
    srcversion: 2A8AB66F9A16F0542FC2173
    vermagic: 4.4.0+10 SMP mod_unload modversions

lspci -v output

    [root@vincent nfs]# lspci -v -s 07:00.0
    07:00.0 RAID bus controller: LSI Logic / Symbios Logic MegaRAID SAS 2008 [Falcon] (rev 03)
        Subsystem: LSI Logic / Symbios Logic MegaRAID SAS 9240-8i
        Flags: bus master, fast devsel, latency 0, IRQ 40
        I/O ports at ec00 [size=256]
        Memory at df2bc000 (64-bit, non-prefetchable) [size=16K]
        Memory at df2c0000 (64-bit, non-prefetchable) [size=256K]
        Expansion ROM at df200000 [disabled] [size=256K]
        Capabilities: [50] Power Management version 3
        Capabilities: [68] Express Endpoint, MSI 00
        Capabilities: [d0] Vital Product Data
        Capabilities: [a8] MSI: Enable- Count=1/1 Maskable- 64bit+
        Capabilities: [c0] MSI-X: Enable+ Count=15 Masked-
        Capabilities: [100] Advanced Error Reporting
        Capabilities: [138] Power Budgeting <?>
        Capabilities: [150] Single Root I/O Virtualization (SR-IOV)
        Capabilities: [190] Alternative Routing-ID Interpretation (ARI)
        Kernel driver in use: megaraid_sas
    [root@vincent nfs]#

lspci -vv output

    [root@vincent nfs]# lspci -vv -s 07:00.0
    07:00.0 RAID bus controller: LSI Logic / Symbios Logic MegaRAID SAS 2008 [Falcon] (rev 03)
        Subsystem: LSI Logic / Symbios Logic MegaRAID SAS 9240-8i
        Control: I/O+ Mem+ BusMaster+ SpecCycle- MemWINV- VGASnoop- ParErr- Stepping- SERR- FastB2B- DisINTx+
        Status: Cap+ 66MHz- UDF- FastB2B- ParErr- DEVSEL=fast >TAbort- <TAbort- <MAbort- >SERR- <PERR- INTx-
        Latency: 0, Cache Line Size: 64 bytes
        Interrupt: pin A routed to IRQ 40
        Region 0: I/O ports at ec00 [size=256]
        Region 1: Memory at df2bc000 (64-bit, non-prefetchable) [size=16K]
        Region 3: Memory at df2c0000 (64-bit, non-prefetchable) [size=256K]
        Expansion ROM at df200000 [disabled] [size=256K]
        Capabilities: [50] Power Management version 3
            Flags: PMEClk- DSI- D1+ D2+ AuxCurrent=0mA PME(D0-,D1-,D2-,D3hot-,D3cold-)
            Status: D0 NoSoftRst+ PME-Enable- DSel=0 DScale=0 PME-
        Capabilities: [68] Express (v2) Endpoint, MSI 00
            DevCap: MaxPayload 4096 bytes, PhantFunc 0, Latency L0s <64ns, L1 <1us
                ExtTag+ AttnBtn- AttnInd- PwrInd- RBE+ FLReset+
            DevCtl: Report errors: Correctable- Non-Fatal+ Fatal+ Unsupported+
                RlxdOrd+ ExtTag- PhantFunc- AuxPwr- NoSnoop+ FLReset-
                MaxPayload 256 bytes, MaxReadReq 512 bytes
            DevSta: CorrErr- UncorrErr- FatalErr- UnsuppReq- AuxPwr- TransPend-
            LnkCap: Port #0, Speed 5GT/s, Width x8, ASPM L0s, Exit Latency L0s <64ns, L1 <1us
                ClockPM- Surprise- LLActRep- BwNot-
            LnkCtl: ASPM Disabled; RCB 64 bytes Disabled- CommClk+
                ExtSynch- ClockPM- AutWidDis- BWInt- AutBWInt-
            LnkSta: Speed 5GT/s, Width x8, TrErr- Train- SlotClk+ DLActive- BWMgmt- ABWMgmt-
            DevCap2: Completion Timeout: Range BC, TimeoutDis+, LTR-, OBFF Not Supported
            DevCtl2: Completion Timeout: 65ms to 210ms, TimeoutDis-, LTR-, OBFF Disabled
            LnkCtl2: Target Link Speed: 5GT/s, EnterCompliance- SpeedDis-
                Transmit Margin: Normal Operating Range, EnterModifiedCompliance- ComplianceSOS-
                Compliance De-emphasis: -6dB
            LnkSta2: Current De-emphasis Level: -6dB, EqualizationComplete-, EqualizationPhase1-
                EqualizationPhase2-, EqualizationPhase3-, LinkEqualizationRequest-

And then same result. Ugh.

-

RE: XCP-ng 7.5 - MegaRAID SAS 9240-8i hang/reboot issue.

@r1 said in XCP-ng 7.5 - MegaRAID SAS 9240-8i hang/reboot issue.:

Can you post ls -lh and md5sum output of it?

    -rw-r--r-- 1 root root 40K Oct 5 13:28 megaraid_sas-07.703.05.00-1.x86_64.rpm
    e1e232eab5d90308144bf3c47665cedd megaraid_sas-07.703.05.00-1.x86_64.rpm

-

RE: XCP-ng 7.5 - MegaRAID SAS 9240-8i hang/reboot issue.

@r1 said in XCP-ng 7.5 - MegaRAID SAS 9240-8i hang/reboot issue.:

@mpyusko Please get the driver from link and

[root@xcp-ng-rjv ~]# yum install megaraid_sas-07.703.05.00-1.x86_64.rpm

[root@xcp-ng-rjv ~]# rmmod megaraid_sas

[root@xcp-ng-rjv ~]# modprobe megaraid_sas

I did what you requested....

    [root@vincent Downloads]# yum install megaraid_sas-07.703.05.00-1.x86_64.rpm
    Loaded plugins: fastestmirror
    Cannot open: megaraid_sas-07.703.05.00-1.x86_64.rpm. Skipping.
    Error: Nothing to do
    [root@vincent Downloads]# rpm -Uhv megaraid_sas-07.703.05.00-1.x86_64.rpm
    error: megaraid_sas-07.703.05.00-1.x86_64.rpm: not an rpm package (or package manifest):
    [root@vincent Downloads]#

-
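Regarding the "not an rpm package" error in the post above: when both yum and rpm reject a download, one possibility is that the file is something else entirely, such as an HTML page saved in place of the package. That is a guess, and the thread does not confirm it. A minimal sketch for checking the file's leading bytes against the RPM magic number (ed ab ee db) follows. The function name `is_rpm` is hypothetical.

```shell
#!/bin/sh
# Return success if the file starts with the RPM lead magic bytes ed ab ee db.
is_rpm() {
    magic=$(od -An -tx1 -N4 "$1" 2>/dev/null | tr -d ' \n')
    [ "$magic" = "edabeedb" ]
}

# Example: show the start of the file if it is not an RPM.
# f=megaraid_sas-07.703.05.00-1.x86_64.rpm
# is_rpm "$f" || head -c 200 "$f"
```

If the first bytes turn out to be readable text, downloading the file again with a direct link is the next thing to try.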

RE: XCP-ng 7.5 - MegaRAID SAS 9240-8i hang/reboot issue.

For the record...

@mpyusko said in XCP-ng 7.5 - MegaRAID SAS 9240-8i hang/reboot issue.:

Same thing happens in XS 7.6 "upgrading" from XS 7.5. Interestingly enough, post-upgrade, the upper-left corner says XenServer 7.5 but the stats field and XenCenter report 7.6.

Same thing happens in a clean installation of XS 7.6 too.

XS 7.5

    ***** megaraid_sas Version Info *****
    version: 07.701.18.00-rc1
    srcversion: 550B32DFFACE241631510C5
    vermagic: 4.4.0+10 SMP mod_unload modversions

XS 7.6

    ***** megaraid_sas Version Info *****
    version: 07.701.18.00-rc1
    srcversion: 550B32DFFACE241631510C5
    vermagic: 4.4.0+10 SMP mod_unload modversions

-

RE: XCP-ng 7.6 beta

I have not tested the 7.6 beta yet; however, when I just did an upgrade from XS 7.5 to XS 7.6, the upper-left corner retained XS 7.5 versioning, while the stats field and XenCenter/XCP-ng Center displayed 7.6. You may want to look into it.

-

RE: XCP-ng 7.5 - MegaRAID SAS 9240-8i hang/reboot issue.

Same thing happens in XS 7.6 "upgrading" from XS 7.5. Interestingly enough, post-upgrade, the upper-left corner says XenServer 7.5 but the stats field and XenCenter report 7.6.

Same thing happens in a clean installation of XS 7.6 too.

-

RE: XCP-ng 7.5 - MegaRAID SAS 9240-8i hang/reboot issue.

@olivierlambert said in XCP-ng 7.5 - MegaRAID SAS 9240-8i hang/reboot issue.:

- Same issue with XenServer 7.5?

YES! (Just got around to testing it.)