@AbyssMoon I run it on Windows 10, but I think it's weird that it worked before and now it doesn't. Do you have any other, newer Windows version available to try it on?

Posts

-

RE: EOL: XCP-ng Center has come to an end (New Maintainer!) posted in News

-

RE: EOL: XCP-ng Center has come to an end (New Maintainer!)posted in News

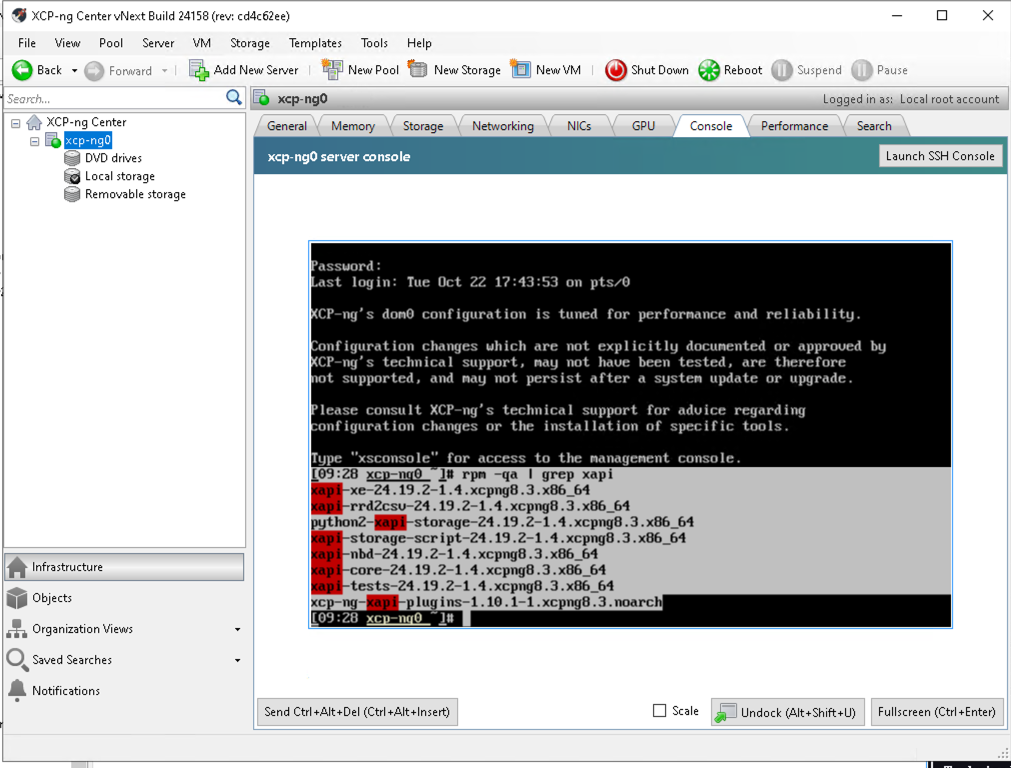

I just downloaded it myself from same link and tried, look on the screenshot, versions are matching, it works to me. I think you must have some different problem, maybe firewall?

-

RE: EOL: XCP-ng Center has come to an end (New Maintainer!)posted in News

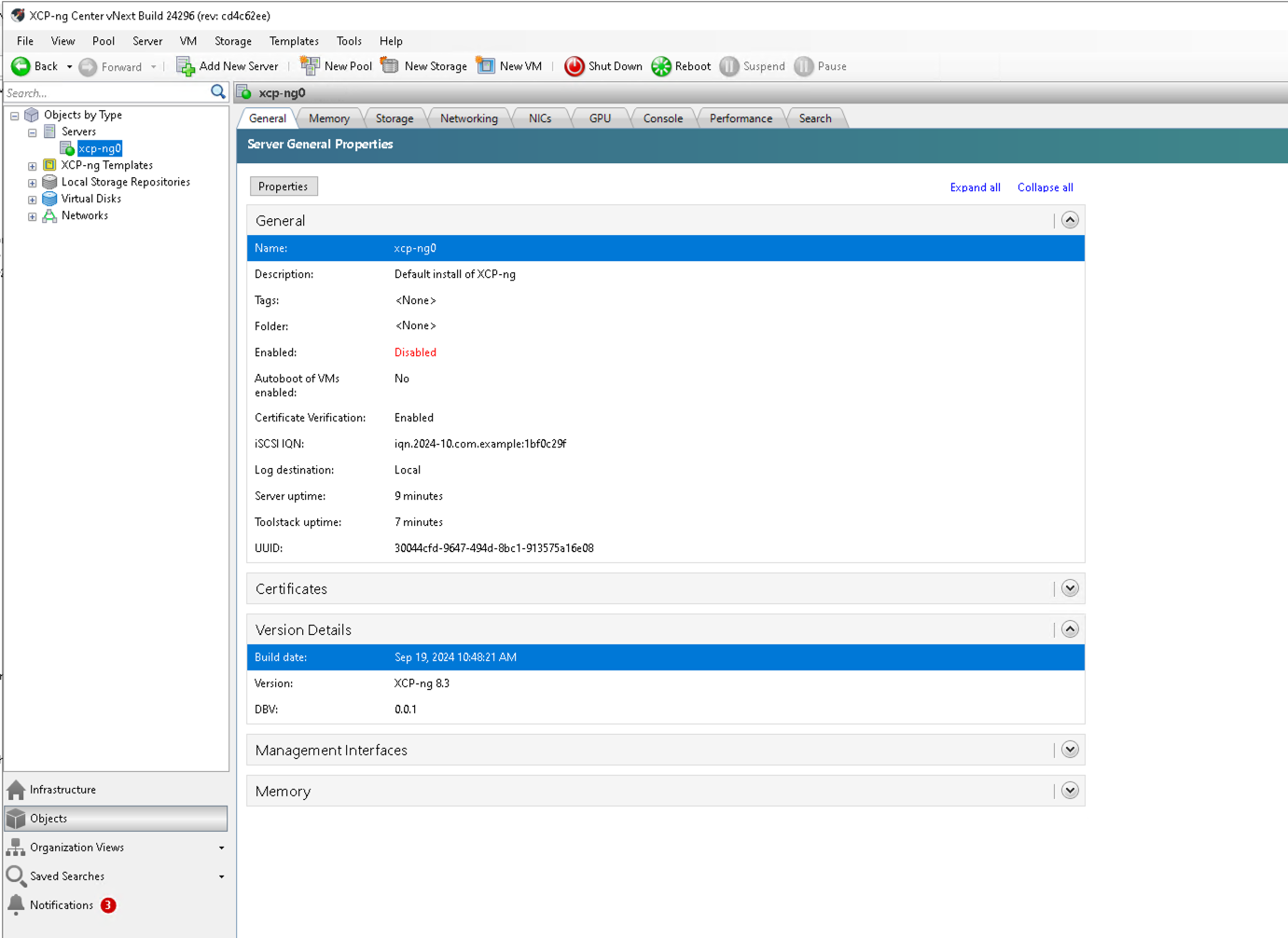

@AbyssMoon yes I did, but you clearly use different version of XCP-ng admin, not my build. Compare build numbers.

-

RE: EOL: XCP-ng Center has come to an end (New Maintainer!) posted in News

@olivierlambert BTW, due to the inactivity of the "new maintainer", I think I could probably volunteer to become another co-maintainer, but I can't promise to be overly active either.

-

RE: EOL: XCP-ng Center has come to an end (New Maintainer!) posted in News

@O_V_K Does that version I posted not work?

-

RE: EOL: XCP-ng Center has come to an end (New Maintainer!) posted in News

@Seneken the build I shared here works with the latest XCP-ng. The current maintainer on GitHub just didn't make a release for the latest codebase, but the master branch on GitHub works with the latest version just fine.

-

RE: EOL: XCP-ng Center has come to an end (New Maintainer!) posted in News

@manilx yes, because the fix was made 2 weeks ago (https://github.com/xcp-ng/xenadmin/commit/cd4c62ee48a2288a01c8b902df4eba0293b27b8e) but the latest download is from January. The version I shared is built from the latest HEAD of the repository, so it's newer than what you had.

-

RE: EOL: XCP-ng Center has come to an end (New Maintainer!) posted in News

@manilx here is the latest development version, compiled using VS 2022, that works for me with 8.3 - https://cloud.bena.rocks/s/kEWmsmg8ABtQJZe

-

RE: EOL: XCP-ng Center has come to an end (New Maintainer!) posted in News

I take it back

@manilx I just tried the latest version of XCP-ng Center (from the development branch) and it works with XCP-ng 8.3 beta2 just fine. The fix is already implemented. What doesn't work for you? Are you just missing a built version?

-

RE: EOL: XCP-ng Center has come to an end (New Maintainer!) posted in News

@manilx do we know what changed in 8.3 that caused this "incompatibility"? It looks like some kind of easter egg from Citrix that Vates incorporated from their upstream by accident?

-

RE: EOL: XCP-ng Center has come to an end (New Maintainer!) posted in News

Hello, @michael-manley do you have any plans for builds that work on macOS and Linux desktops? I tried and managed to compile this in VS for macOS, but it doesn't even launch (it crashes on start with a segmentation fault).

It would be really nice to be able to use XenCenter without also requiring Windows.

-

RE: what is kernel-alt? posted in Development

Hello, I can confirm that the patches I made bring significant stability improvements. I again ran into kernel crashes related to CEPH, but only on xcp-ng hosts that aren't patched. For example, this is one of the bugs I am hitting on the unpatched kernel:

    [Fri Oct 13 11:10:32 2023] libceph: osd1 up
    [Fri Oct 13 11:10:34 2023] libceph: osd1 up
    [Fri Oct 13 11:10:39 2023] libceph: osd7 up
    [Fri Oct 13 11:10:40 2023] libceph: osd7 up
    [Fri Oct 13 11:10:41 2023] WARNING: CPU: 6 PID: 32615 at net/ceph/osd_client.c:554 request_reinit+0x128/0x150 [libceph]
    [Fri Oct 13 11:10:41 2023] Modules linked in: btrfs xor zstd_compress lzo_compress raid6_pq zstd_decompress xxhash rbd tun ebtable_filter ebtables ceph libceph rpcsec_gss_krb5 nfsv4 nfs fscache bnx2fc(O) cnic(O) uio fcoe libfcoe libfc scsi_transport_fc bonding bridge 8021q garp mrp stp llc dm_multipath ipt_REJECT nf_reject_ipv4 xt_tcpudp xt_multiport xt_conntrack nf_conntrack nf_defrag_ipv6 nf_defrag_ipv4 libcrc32c iptable_filter intel_powerclamp crct10dif_pclmul crc32_pclmul ghash_clmulni_intel pcbc dm_mod aesni_intel aes_x86_64 crypto_simd cryptd glue_helper sg ipmi_si ipmi_devintf ipmi_msghandler video backlight acpi_power_meter nfsd auth_rpcgss oid_registry nfs_acl lockd grace sunrpc ip_tables x_tables rndis_host cdc_ether usbnet mii hid_generic usbhid hid raid1 md_mod sd_mod ahci libahci xhci_pci igb(O) libata
    [Fri Oct 13 11:10:41 2023] ixgbe(O) xhci_hcd scsi_dh_rdac scsi_dh_hp_sw scsi_dh_emc scsi_dh_alua scsi_mod ipv6 crc_ccitt
    [Fri Oct 13 11:10:41 2023] CPU: 6 PID: 32615 Comm: kworker/6:19 Tainted: G W O 4.19.0+1 #1
    [Fri Oct 13 11:10:41 2023] Hardware name: Supermicro Super Server/X12STH-LN4F, BIOS 1.2 06/23/2022
    [Fri Oct 13 11:10:41 2023] Workqueue: ceph-msgr ceph_con_workfn [libceph]
    [Fri Oct 13 11:10:41 2023] RIP: e030:request_reinit+0x128/0x150 [libceph]
    [Fri Oct 13 11:10:41 2023] Code: 5d 41 5e 41 5f c3 48 89 f9 48 c7 c2 b1 77 83 c0 48 c7 c6 96 ad 83 c0 48 c7 c7 98 5b 85 c0 31 c0 e8 ed a8 b9 c0 e9 37 ff ff ff <0f> 0b e9 41 ff ff ff 0f 0b e9 60 ff ff ff 0f 0b 0f 1f 84 00 00 00
    [Fri Oct 13 11:10:41 2023] RSP: e02b:ffffc90045b67b88 EFLAGS: 00010202
    [Fri Oct 13 11:10:41 2023] RAX: 0000000000000002 RBX: ffff8881c6704f00 RCX: ffff8881f27a10e0
    [Fri Oct 13 11:10:41 2023] RDX: ffffffff00000002 RSI: ffff8881c7e97448 RDI: ffff8881c7d5b780
    [Fri Oct 13 11:10:41 2023] RBP: ffff8881c6704700 R08: ffff8881c7e97450 R09: ffff8881c7e97450
    [Fri Oct 13 11:10:41 2023] R10: 0000000000000000 R11: 0000000000000000 R12: ffff8881c7d5b780
    [Fri Oct 13 11:10:41 2023] R13: fffffffffffffffe R14: 0000000000000000 R15: 0000000000000001
    [Fri Oct 13 11:10:41 2023] FS: 0000000000000000(0000) GS:ffff8881f2780000(0000) knlGS:0000000000000000
    [Fri Oct 13 11:10:41 2023] CS: e033 DS: 0000 ES: 0000 CR0: 0000000080050033
    [Fri Oct 13 11:10:41 2023] CR2: 00007f7ef7bf2000 CR3: 0000000136dbc000 CR4: 0000000000040660
    [Fri Oct 13 11:10:41 2023] Call Trace:
    [Fri Oct 13 11:10:41 2023] send_linger+0x55/0x200 [libceph]
    [Fri Oct 13 11:10:41 2023] ceph_osdc_handle_map+0x4e7/0x6b0 [libceph]
    [Fri Oct 13 11:10:41 2023] dispatch+0x2ff/0xbc0 [libceph]
    [Fri Oct 13 11:10:41 2023] ? read_partial_message+0x265/0x810 [libceph]
    [Fri Oct 13 11:10:41 2023] ? ceph_tcp_recvmsg+0x6f/0xa0 [libceph]
    [Fri Oct 13 11:10:41 2023] ceph_con_workfn+0xa51/0x24f0 [libceph]
    [Fri Oct 13 11:10:41 2023] ? xen_hypercall_xen_version+0xa/0x20
    [Fri Oct 13 11:10:41 2023] ? xen_hypercall_xen_version+0xa/0x20
    [Fri Oct 13 11:10:41 2023] ? __switch_to_asm+0x34/0x70
    [Fri Oct 13 11:10:41 2023] ? xen_force_evtchn_callback+0x9/0x10
    [Fri Oct 13 11:10:41 2023] ? check_events+0x12/0x20
    [Fri Oct 13 11:10:41 2023] process_one_work+0x165/0x370
    [Fri Oct 13 11:10:41 2023] worker_thread+0x49/0x3e0
    [Fri Oct 13 11:10:41 2023] kthread+0xf8/0x130
    [Fri Oct 13 11:10:41 2023] ? rescuer_thread+0x310/0x310
    [Fri Oct 13 11:10:41 2023] ? kthread_bind+0x10/0x10
    [Fri Oct 13 11:10:41 2023] ret_from_fork+0x1f/0x40
    [Fri Oct 13 11:10:41 2023] ---[ end trace 1ac50e4ca0f4e449 ]---
    [Fri Oct 13 11:10:50 2023] libceph: osd4 up
    [Fri Oct 13 11:10:50 2023] libceph: osd4 up
    [Fri Oct 13 11:10:51 2023] WARNING: CPU: 11 PID: 3500 at net/ceph/osd_client.c:554 request_reinit+0x128/0x150 [libceph]
    [Fri Oct 13 11:10:51 2023] Modules linked in: btrfs xor zstd_compress lzo_compress raid6_pq zstd_decompress xxhash rbd tun ebtable_filter ebtables ceph libceph rpcsec_gss_krb5 nfsv4 nfs fscache bnx2fc(O) cnic(O) uio fcoe libfcoe libfc scsi_transport_fc bonding bridge 8021q garp mrp stp llc dm_multipath ipt_REJECT nf_reject_ipv4 xt_tcpudp xt_multiport xt_conntrack nf_conntrack nf_defrag_ipv6 nf_defrag_ipv4 libcrc32c iptable_filter intel_powerclamp crct10dif_pclmul crc32_pclmul ghash_clmulni_intel pcbc dm_mod aesni_intel aes_x86_64 crypto_simd cryptd glue_helper sg ipmi_si ipmi_devintf ipmi_msghandler video backlight acpi_power_meter nfsd auth_rpcgss oid_registry nfs_acl lockd grace sunrpc ip_tables x_tables rndis_host cdc_ether usbnet mii hid_generic usbhid hid raid1 md_mod sd_mod ahci libahci xhci_pci igb(O) libata
    [Fri Oct 13 11:10:51 2023] ixgbe(O) xhci_hcd scsi_dh_rdac scsi_dh_hp_sw scsi_dh_emc scsi_dh_alua scsi_mod ipv6 crc_ccitt
    [Fri Oct 13 11:10:51 2023] CPU: 11 PID: 3500 Comm: kworker/11:16 Tainted: G W O 4.19.0+1 #1
    [Fri Oct 13 11:10:51 2023] Hardware name: Supermicro Super Server/X12STH-LN4F, BIOS 1.2 06/23/2022
    [Fri Oct 13 11:10:51 2023] Workqueue: ceph-msgr ceph_con_workfn [libceph]
    [Fri Oct 13 11:10:51 2023] RIP: e030:request_reinit+0x128/0x150 [libceph]
    [Fri Oct 13 11:10:51 2023] Code: 5d 41 5e 41 5f c3 48 89 f9 48 c7 c2 b1 77 83 c0 48 c7 c6 96 ad 83 c0 48 c7 c7 98 5b 85 c0 31 c0 e8 ed a8 b9 c0 e9 37 ff ff ff <0f> 0b e9 41 ff ff ff 0f 0b e9 60 ff ff ff 0f 0b 0f 1f 84 00 00 00
    [Fri Oct 13 11:10:51 2023] RSP: e02b:ffffc900461d7b88 EFLAGS: 00010202
    [Fri Oct 13 11:10:51 2023] RAX: 0000000000000002 RBX: ffff8881c62b6d00 RCX: 0000000000000000
    [Fri Oct 13 11:10:51 2023] RDX: ffff8881c59c0740 RSI: ffff888137a50200 RDI: ffff8881c59c04a0
    [Fri Oct 13 11:10:51 2023] RBP: ffff8881c62b6b00 R08: ffff8881f17c2e00 R09: ffff8881f162ba00
    [Fri Oct 13 11:10:51 2023] R10: 0000000000000000 R11: 000000000000cb1b R12: ffff8881c59c04a0
    [Fri Oct 13 11:10:51 2023] R13: fffffffffffffffe R14: 0000000000000000 R15: 0000000000000001
    [Fri Oct 13 11:10:51 2023] FS: 0000000000000000(0000) GS:ffff8881f28c0000(0000) knlGS:0000000000000000
    [Fri Oct 13 11:10:51 2023] CS: e033 DS: 0000 ES: 0000 CR0: 0000000080050033
    [Fri Oct 13 11:10:51 2023] CR2: 00007fa6188896c8 CR3: 00000001b7014000 CR4: 0000000000040660
    [Fri Oct 13 11:10:51 2023] Call Trace:
    [Fri Oct 13 11:10:51 2023] send_linger+0x55/0x200 [libceph]
    [Fri Oct 13 11:10:51 2023] ceph_osdc_handle_map+0x4e7/0x6b0 [libceph]
    [Fri Oct 13 11:10:51 2023] dispatch+0x2ff/0xbc0 [libceph]
    [Fri Oct 13 11:10:51 2023] ? read_partial_message+0x265/0x810 [libceph]
    [Fri Oct 13 11:10:51 2023] ? ceph_tcp_recvmsg+0x6f/0xa0 [libceph]
    [Fri Oct 13 11:10:51 2023] ceph_con_workfn+0xa51/0x24f0 [libceph]
    [Fri Oct 13 11:10:51 2023] ? check_preempt_curr+0x84/0x90
    [Fri Oct 13 11:10:51 2023] ? ttwu_do_wakeup+0x19/0x140
    [Fri Oct 13 11:10:51 2023] process_one_work+0x165/0x370
    [Fri Oct 13 11:10:51 2023] worker_thread+0x49/0x3e0
    [Fri Oct 13 11:10:51 2023] kthread+0xf8/0x130
    [Fri Oct 13 11:10:51 2023] ? rescuer_thread+0x310/0x310
    [Fri Oct 13 11:10:51 2023] ? kthread_bind+0x10/0x10
    [Fri Oct 13 11:10:51 2023] ret_from_fork+0x1f/0x40
    [Fri Oct 13 11:10:51 2023] ---[ end trace 1ac50e4ca0f4e44a ]---
    [Fri Oct 13 11:11:00 2023] rbd: rbd1: no lock owners detected
    [Fri Oct 13 11:11:07 2023] rbd: rbd1: no lock owners detected

Patched hosts don't see any of these; their logs just say:

    [Fri Oct 13 11:11:47 2023] libceph: osd1 up
    [Fri Oct 13 11:11:54 2023] libceph: osd7 up
    [Fri Oct 13 11:12:05 2023] libceph: osd4 up

And this is only the less severe crash. I sometimes face crashes that make CEPH completely inaccessible on unpatched hypervisors, requiring a host reboot.

I strongly recommend these patches to anyone who is using CEPH with xcp-ng.
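To compare patched and unpatched hosts more systematically, the warnings can be counted per source location. The following is only a minimal sketch: it assumes the log was captured as text in the same format as the excerpt above (for example from `dmesg -T`), and the function name `count_warnings` is made up for illustration.

```python
import re
from collections import Counter

# Matches kernel WARNING headers such as:
#   WARNING: CPU: 6 PID: 32615 at net/ceph/osd_client.c:554 request_reinit+0x128/0x150 [libceph]
WARNING_RE = re.compile(
    r"WARNING: CPU: \d+ PID: \d+ at (?P<location>\S+:\d+) (?P<symbol>[^\s+]+)\+"
)


def count_warnings(log_text):
    """Count kernel WARNING entries, keyed by (source location, function name).

    Hypothetical helper: it only looks at WARNING header lines and ignores
    the register dump and call trace that follow each of them.
    """
    counts = Counter()
    for match in WARNING_RE.finditer(log_text):
        counts[(match.group("location"), match.group("symbol"))] += 1
    return counts
```

Run against the unpatched host's log this should report the `request_reinit` warning once per trace, while a patched host's log should produce an empty counter.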

-

RE: what is kernel-alt? posted in Development

Great, anyway, here is the patch I made - https://github.com/xcp-ng-rpms/kernel/pull/9

I am still testing it, but it compiles and works just fine on the XCP-ng lab I have. It's going to take a while until I can confirm whether it fixes the issues I was encountering, since they were rather rare.

-

what is kernel-alt? posted in Development

Hello,

Because of a number of bugs and issues in current dom0 kernel, I am currently backporting ceph code from kernel 4.19.295 to https://github.com/xcp-ng-rpms/kernel - it works great, but while I was upgrading the kernel on my test cluster, I noticed there is a package kernel-alt which contains 4.19.265

What is that kernel? Is it stable? Does it contain all usual patches? Which repo does it come from?

-

RE: CEPHFS - why not CEPH RBD as SR? posted in Development

Hello, just a follow-up: I figured out a probable fix for the performance issues. (The locking issue seems to have disappeared on its own. I suspect it happened only because of the upgrade process, as the pool contained a mix of 8.0 and 8.2 hosts.)

It was caused by very slow (several seconds) execution of basic LVM commands - pvs / lvs etc. took many seconds. When run with debug options, they turned out to spend an excessive amount of time scanning iSCSI volumes in /dev/mapper, as well as the individual LVs that were also presented in /dev/mapper as if they were PVs. LVM subsequently ignored them, but there were (in my case) hundreds of LVs, and each had to be opened to check its metadata and size.
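For anyone who wants to check whether they are affected, a rough way to measure this is to time the commands a few times. This is just a sketch under my own assumptions: `time_command` is a made-up helper, and it measures wall-clock time only, it does not tell you where the time is spent.

```python
import subprocess
import time


def time_command(argv, runs=3):
    """Run a command several times and return each run's wall-clock time in seconds.

    Hypothetical helper: output is discarded and the exit status is ignored,
    because only the duration is of interest here.
    """
    durations = []
    for _ in range(runs):
        start = time.perf_counter()
        subprocess.run(
            argv,
            stdout=subprocess.DEVNULL,
            stderr=subprocess.DEVNULL,
            check=False,
        )
        durations.append(time.perf_counter() - start)
    return durations
```

On a host, something like `time_command(["pvs"])` or `time_command(["lvs"])` run as root would give numbers to compare before and after any configuration change.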

After modifying /etc/lvm/master/lvm.conf by adding this:

    # /dev/sd.* is there to avoid scanning RAW disks that are used via UDEV for storage backend
    filter = [ "r|/dev/sd.*|", "r|/dev/mapper/.*|" ]

Performance of LVM commands improved from ~5 seconds to less than 0.1 second, and the issue with slow startup / shutdown / snapshot of VMs (sometimes they took almost 10 minutes) was resolved.

Of course this filter needs to be adjusted based on specific needs of the given situation. In my case both /dev/sd* as well as /dev/mapper devices are NEVER used by LVM backed SRs, so it was safe to ignore them for me. (all my LVM SRs are from /dev/rbd/)
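Before changing the filter on a real host, it can help to check which device paths a given filter would reject. The sketch below is only an approximation of how LVM evaluates the `filter` setting, assuming the documented behaviour that the first matching pattern decides and that a path matching no pattern is accepted. It evaluates a single path name, ignores device aliases, and uses Python's regex engine rather than LVM's own, so treat the result as a hint. The function names and the example device paths are made up for illustration.

```python
import re


def parse_filter(patterns):
    """Turn LVM-style filter strings like 'r|/dev/sd.*|' into (action, regex) pairs.

    The first character is the action ('a' accept, 'r' reject), the second
    is the delimiter, and the pattern must end with that same delimiter.
    """
    rules = []
    for pattern in patterns:
        if len(pattern) < 3 or pattern[0] not in "ar" or pattern[-1] != pattern[1]:
            raise ValueError(f"unsupported filter pattern: {pattern!r}")
        rules.append((pattern[0], re.compile(pattern[2:-1])))
    return rules


def is_accepted(path, rules):
    """Return True if the path would be scanned: first matching rule wins."""
    for action, regex in rules:
        if regex.search(path):  # patterns are unanchored unless they use ^ or $
            return action == "a"
    return True  # no rule matched, so the device is accepted


# The filter from the post above
rules = parse_filter(["r|/dev/sd.*|", "r|/dev/mapper/.*|"])
for device in ["/dev/sda", "/dev/mapper/example-lv", "/dev/rbd/pool/image"]:
    print(device, "accepted" if is_accepted(device, rules) else "rejected")
```

With this filter only the /dev/rbd/ path stays accepted, which matches the setup described here where all LVM SRs live on RBD devices.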

-

RE: CEPHFS - why not CEPH RBD as SR? posted in Development

OK, on another note, one more concept that others might find interesting in the future, and that would be very nice to have supported in XCP-ng 9 or newer versions, is the ability to run user-provided containers in some docker-like environment. I know it's considered very bad practice to run anything extra in dom0, but I noticed that CEPH OSDs (the daemons responsible for managing the actual storage units like HDDs or SSDs in CEPH) benefit from being as close to the hardware as possible.

In the hyperconverged setup I am working with, this means that ideally OSDs should have as direct access to the storage hardware as possible, which is unfortunately possible only in a few ways:

- Fake "passthrough" via udev SR (that's what I do now - not great, not terrible, still a lot of "emulation" and overhead from TAP mechanism and many hypercalls needed to access the HW, wasting the CPU and other resources)

- Real passthrough via PCI-E (VT-d) passthrough - this works great in theory, but since you are giving direct access to the storage controller, you need a dedicated one (HBA card) just for the VM that runs OSDs - works fast but requires expensive extra hardware and is complex to set up.

- Utilize the fact that dom0 has direct access to HW and run OSDs directly in dom0 - that's where a container engine like docker would be handy.

The last option is the cheapest way to get real direct HW access while sharing the storage controller with dom0, in an environment that is at least a little bit isolated and doesn't taint the dom0 runtime too much. Unfortunately, my experiments with this have failed so far for a couple of reasons:

- Too old kernel with some modules missing (Docker needs a network bridge kernel module to work properly, which is not available unless you turn on bridge network backend)

- Too old runtime (based on CentOS 7)

If this kind of containerization were supported by default, it would make it possible to host these "pluggable" software-defined storage solutions directly in dom0, utilizing HW much more efficiently.
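As for the missing kernel module mentioned above, a quick way to see whether a module is currently loaded is to look at /proc/modules. This is a minimal sketch with made-up helper names. It only detects loadable modules that are loaded right now, so a driver compiled into the kernel would not show up, and "overlay" in the comment is just an illustrative second name, not something I verified Docker needs on this kernel.

```python
def loaded_modules(proc_modules_text):
    """Return the set of module names from /proc/modules-formatted text.

    Each non-empty line starts with the module name, followed by its size,
    reference count and dependencies.
    """
    return {
        line.split()[0]
        for line in proc_modules_text.splitlines()
        if line.strip()
    }


def missing_modules(required, proc_modules_text):
    """Return the required module names that are not currently loaded, sorted."""
    return sorted(set(required) - loaded_modules(proc_modules_text))


# Example (run on the host itself):
#   with open("/proc/modules") as handle:
#       print(missing_modules(["bridge", "overlay"], handle.read()))
```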

-

RE: CEPHFS - why not CEPH RBD as SR? posted in Development

@olivierlambert great! Is there any repository where I can find those patches? Maybe I can try to port them into a newer kernel and try if it resolves some of the problems I am seeing. Thanks

-

RE: CEPHFS - why not CEPH RBD as SR? posted in Development

OK, BTW, is there any reason we are using such an ancient kernel? I am fairly sure that newer kernel versions address a large number of bugs that were identified in the libceph kernel implementation over time. Or is there any guide on how to build a custom XCP-ng dom0 kernel from a newer upstream? (I understand - no support, no warranty etc.)

-

RE: CEPHFS - why not CEPH RBD as SR? posted in Development

I will experiment with it in my lab, but I was hoping I could stick with the shared LVM concept, because it's really easy to manage and work with.