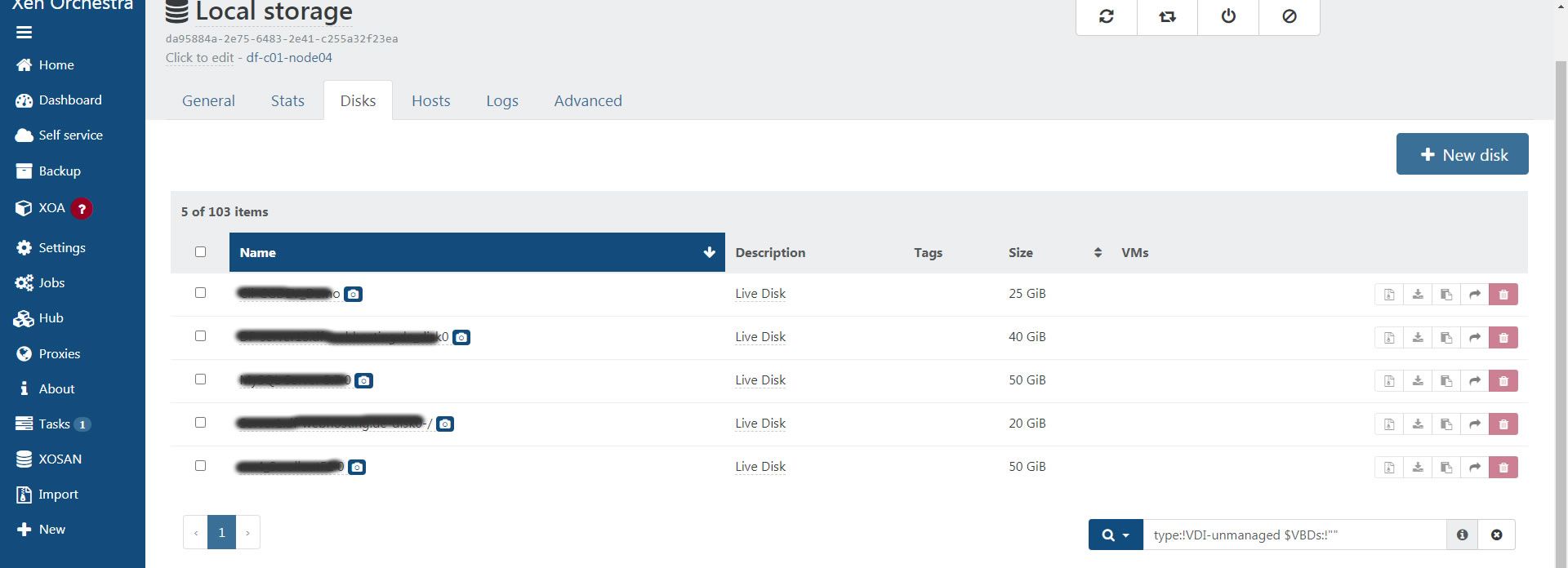

How can I determine which VM is causing this problem? The host log keeps repeating messages like these:

Nov 1 13:05:00 df-c01-node04 qemu-dm-25[29890]: 29890@1730462700.830066:xen_platform_log xen platform: XENVIF|__AllocatePages: fail1 (c0000017)

Nov 1 13:05:00 df-c01-node04 qemu-dm-25[29890]: 29890@1730462700.830198:xen_platform_log xen platform: XENVIF|ReceiverPacketCtor: fail1 (c0000017)

Nov 1 13:05:00 df-c01-node04 qemu-dm-25[29890]: 29890@1730462700.830311:xen_platform_log xen platform: XENBUS|CacheCreateObject: fail2

Nov 1 13:05:00 df-c01-node04 qemu-dm-25[29890]: 29890@1730462700.830458:xen_platform_log xen platform: XENBUS|CacheCreateObject: fail1 (c0000017)

Nov 1 13:05:00 df-c01-node04 qemu-dm-25[29890]: 29890@1730462700.830605:xen_platform_log xen platform: XENVIF|__AllocatePages: fail1 (c0000017)

Nov 1 13:05:00 df-c01-node04 qemu-dm-25[29890]: 29890@1730462700.830712:xen_platform_log xen platform: XENVIF|ReceiverPacketCtor: fail1 (c0000017)

Nov 1 13:05:00 df-c01-node04 qemu-dm-25[29890]: 29890@1730462700.830797:xen_platform_log xen platform: XENBUS|CacheCreateObject: fail2

Nov 1 13:05:00 df-c01-node04 qemu-dm-25[29890]: 29890@1730462700.830885:xen_platform_log xen platform: XENBUS|CacheCreateObject: fail1 (c0000017)

Nov 1 13:05:00 df-c01-node04 qemu-dm-25[29890]: 29890@1730462700.831006:xen_platform_log xen platform: XENVIF|__AllocatePages: fail1 (c0000017)

Nov 1 13:05:00 df-c01-node04 qemu-dm-25[29890]: 29890@1730462700.831094:xen_platform_log xen platform: XENVIF|ReceiverPacketCtor: fail1 (c0000017)

Nov 1 13:05:00 df-c01-node04 qemu-dm-25[29890]: 29890@1730462700.831185:xen_platform_log xen platform: XENBUS|CacheCreateObject: fail2

Nov 1 13:05:00 df-c01-node04 qemu-dm-25[29890]: 29890@1730462700.831260:xen_platform_log xen platform: XENBUS|CacheCreateObject: fail1 (c0000017)

Nov 1 13:05:00 df-c01-node04 qemu-dm-25[29890]: 29890@1730462700.831333:xen_platform_log xen platform: XENVIF|__AllocatePages: fail1 (c0000017)

Nov 1 13:05:00 df-c01-node04 qemu-dm-25[29890]: 29890@1730462700.831407:xen_platform_log xen platform: XENVIF|ReceiverPacketCtor: fail1 (c0000017)

Nov 1 13:05:00 df-c01-node04 qemu-dm-25[29890]: 29890@1730462700.831478:xen_platform_log xen platform: XENBUS|CacheCreateObject: fail2

Nov 1 13:05:00 df-c01-node04 qemu-dm-25[29890]: 29890@1730462700.831551:xen_platform_log xen platform: XENBUS|CacheCreateObject: fail1 (c0000017)

Nov 1 13:05:00 df-c01-node04 qemu-dm-25[29890]: 29890@1730462700.884264:xen_platform_log xen platform: XENVIF|__AllocatePages: fail1 (c0000017)

Nov 1 13:05:00 df-c01-node04 qemu-dm-25[29890]: 29890@1730462700.884519:xen_platform_log xen platform: XENVIF|ReceiverPacketCtor: fail1 (c0000017)

Nov 1 13:05:00 df-c01-node04 qemu-dm-25[29890]: 29890@1730462700.884615:xen_platform_log xen platform: XENBUS|CacheCreateObject: fail2

Nov 1 13:05:00 df-c01-node04 qemu-dm-25[29890]: 29890@1730462700.884721:xen_platform_log xen platform: XENBUS|CacheCreateObject: fail1 (c0000017)

Nov 1 13:05:00 df-c01-node04 qemu-dm-25[29890]: 29890@1730462700.923891:xen_platform_log xen platform: XENVIF|__AllocatePages: fail1 (c0000017)

Nov 1 13:05:00 df-c01-node04 qemu-dm-25[29890]: 29890@1730462700.924054:xen_platform_log xen platform: XENVIF|ReceiverPacketCtor: fail1 (c0000017)

Nov 1 13:05:00 df-c01-node04 qemu-dm-25[29890]: 29890@1730462700.924207:xen_platform_log xen platform: XENBUS|CacheCreateObject: fail2

Nov 1 13:05:00 df-c01-node04 qemu-dm-25[29890]: 29890@1730462700.924319:xen_platform_log xen platform: XENBUS|CacheCreateObject: fail1 (c0000017)

Nov 1 13:05:00 df-c01-node04 qemu-dm-25[29890]: 29890@1730462700.924505:xen_platform_log xen platform: XENVIF|__AllocatePages: fail1 (c0000017)

Nov 1 13:05:00 df-c01-node04 qemu-dm-25[29890]: 29890@1730462700.924675:xen_platform_log xen platform: XENVIF|ReceiverPacketCtor: fail1 (c0000017)

Nov 1 13:05:00 df-c01-node04 qemu-dm-25[29890]: 29890@1730462700.924803:xen_platform_log xen platform: XENBUS|CacheCreateObject: fail2

Nov 1 13:05:00 df-c01-node04 qemu-dm-25[29890]: 29890@1730462700.924913:xen_platform_log xen platform: XENBUS|CacheCreateObject: fail1 (c0000017)

Nov 1 13:05:00 df-c01-node04 qemu-dm-25[29890]: 29890@1730462700.927026:xen_platform_log xen platform: XENVIF|__AllocatePages: fail1 (c0000017)

Nov 1 13:05:00 df-c01-node04 qemu-dm-25[29890]: 29890@1730462700.927185:xen_platform_log xen platform: XENVIF|ReceiverPacketCtor: fail1 (c0000017)

Nov 1 13:05:00 df-c01-node04 qemu-dm-25[29890]: 29890@1730462700.927303:xen_platform_log xen platform: XENBUS|CacheCreateObject: fail2

Nov 1 13:05:00 df-c01-node04 qemu-dm-25[29890]: 29890@1730462700.927436:xen_platform_log xen platform: XENBUS|CacheCreateObject: fail1 (c0000017)

Nov 1 13:05:00 df-c01-node04 qemu-dm-25[29890]: 29890@1730462700.927657:xen_platform_log xen platform: XENVIF|__AllocatePages: fail1 (c0000017)

Nov 1 13:05:00 df-c01-node04 qemu-dm-25[29890]: 29890@1730462700.927772:xen_platform_log xen platform: XENVIF|ReceiverPacketCtor: fail1 (c0000017)

Nov 1 13:05:00 df-c01-node04 qemu-dm-25[29890]: 29890@1730462700.927903:xen_platform_log xen platform: XENBUS|CacheCreateObject: fail2

Nov 1 13:05:00 df-c01-node04 qemu-dm-25[29890]: 29890@1730462700.927993:xen_platform_log xen platform: XENBUS|CacheCreateObject: fail1 (c0000017)

Nov 1 13:05:00 df-c01-node04 qemu-dm-25[29890]: 29890@1730462700.928136:xen_platform_log xen platform: XENVIF|__AllocatePages: fail1 (c0000017)

Nov 1 13:05:00 df-c01-node04 qemu-dm-25[29890]: 29890@1730462700.928255:xen_platform_log xen platform: XENVIF|ReceiverPacketCtor: fail1 (c0000017)

Nov 1 13:05:00 df-c01-node04 qemu-dm-25[29890]: 29890@1730462700.928396:xen_platform_log xen platform: XENBUS|CacheCreateObject: fail2

Nov 1 13:05:00 df-c01-node04 qemu-dm-25[29890]: 29890@1730462700.928479:xen_platform_log xen platform: XENBUS|CacheCreateObject: fail1 (c0000017)

Nov 1 13:05:00 df-c01-node04 qemu-dm-25[29890]: 29890@1730462700.932786:xen_platform_log xen platform: XENVIF|__AllocatePages: fail1 (c0000017)

Nov 1 13:05:00 df-c01-node04 qemu-dm-25[29890]: 29890@1730462700.932906:xen_platform_log xen platform: XENVIF|ReceiverPacketCtor: fail1 (c0000017)
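One way to narrow this down from the log alone is to group the messages by the syslog tag of the process that emitted them. The sketch below does that in Python. It assumes that the number in `qemu-dm-25` is the Xen domain ID of the guest served by that device-model process; that is an assumption on my part, not something the log states. If it holds, comparing that number with the ID column of `xl list` on the host should point to the VM. The `summarize` helper and the `sample` list are illustrative names, and the sample lines are copied from the excerpt above.

```python
import re
from collections import Counter

# Syslog tag, e.g. "qemu-dm-25[29890]:" -> suffix 25 (assumed domain ID), PID 29890.
TAG = re.compile(r"qemu-dm-(\d+)\[(\d+)\]:")
# Driver message, e.g. "XENVIF|__AllocatePages: fail1 (c0000017)".
MSG = re.compile(r"xen platform: (\w+)\|(\w+): (fail\d+)")


def summarize(lines):
    """Count failure messages per (tag suffix, PID) and per driver function."""
    per_process = Counter()
    per_function = Counter()
    for line in lines:
        tag = TAG.search(line)
        msg = MSG.search(line)
        if not (tag and msg):
            continue  # skip lines that are not xen_platform_log failures
        per_process[(int(tag.group(1)), int(tag.group(2)))] += 1
        per_function[f"{msg.group(1)}|{msg.group(2)}"] += 1
    return per_process, per_function


# Four lines taken from the log excerpt in this post.
sample = [
    "Nov 1 13:05:00 df-c01-node04 qemu-dm-25[29890]: 29890@1730462700.830066:xen_platform_log xen platform: XENVIF|__AllocatePages: fail1 (c0000017)",
    "Nov 1 13:05:00 df-c01-node04 qemu-dm-25[29890]: 29890@1730462700.830198:xen_platform_log xen platform: XENVIF|ReceiverPacketCtor: fail1 (c0000017)",
    "Nov 1 13:05:00 df-c01-node04 qemu-dm-25[29890]: 29890@1730462700.830311:xen_platform_log xen platform: XENBUS|CacheCreateObject: fail2",
    "Nov 1 13:05:00 df-c01-node04 qemu-dm-25[29890]: 29890@1730462700.830458:xen_platform_log xen platform: XENBUS|CacheCreateObject: fail1 (c0000017)",
]

per_process, per_function = summarize(sample)
for (suffix, pid), count in per_process.most_common():
    print(f"qemu-dm-{suffix} (PID {pid}): {count} failure messages")
for name, count in per_function.most_common():
    print(f"  {name}: {count}")
```

To run it against the full log, replace `sample` with the lines of the host's log file, for example `open(path).read().splitlines()` with `path` set to wherever the host writes these messages.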