@stormi After the informed change, the backup occurred with 100% success, on no disk in the coalesce chain.

We're migrating another cluster to XCP-ng 8!

Thanks for the support, quick return and attention.

@stormi After the informed change, the backup occurred with 100% success, on no disk in the coalesce chain.

We're migrating another cluster to XCP-ng 8!

Thanks for the support, quick return and attention.

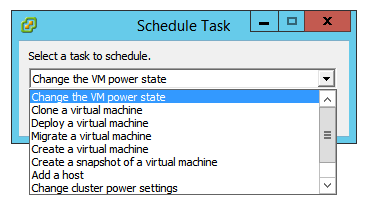

If possible, create in the XPC-ng Center the option of Schedule Tasks, such as VMWare ... the options are simple but make the administrator's life easier.

If you can also report to Citrix ...

@olivierlambert Even updating the host from 8.0 to 8.2 (with last update level) and after cluster and NFS migration, the problem persists.

We updated the virtualization agent on the virtual server to the latest available version from Citrix and we were able to back it up for a few weeks...but the problem reoccurred, again only for the same server.

Are there any logs I can paste to help identify this failure?

@olivierlambert Is there any difference between "traditional Backup" and Export VM performed by Xen Orchestra?

Even changing the cluster virtual server, the problem still occurs. However, the Export operation works normally.

@olivierlambert But any reason why this issue only occurs for this VM? Even cloning the VM, the problem happens with the clone... even changing the NFS Server, the problem happens... Let's try moving it to a new cluster.

@_danielgurgel Here is complete log of the operation.

vm.copy

{

"vm": "54676579-2328-d137-1002-0f32920eab23",

"sr": "50c59b18-5b5c-2eed-8c82-b8f7fdc8e9b5",

"name": "VM_NAME"

}

{

"call": {

"method": "VM.destroy",

"params": [

"OpaqueRef:fc032b38-d8d7-43ab-983c-f54bc9dc6f85"

]

},

"message": "operation timed out",

"name": "TimeoutError",

"stack": "TimeoutError: operation timed out

at Promise.call (/opt/xen-orchestra/node_modules/promise-toolbox/timeout.js:13:16)

at Xapi._call (/opt/xen-orchestra/packages/xen-api/src/index.js:644:37)

at /opt/xen-orchestra/packages/xen-api/src/index.js:722:21

at loopResolver (/opt/xen-orchestra/node_modules/promise-toolbox/retry.js:94:23)

at Promise._execute (/opt/xen-orchestra/node_modules/bluebird/js/release/debuggability.js:384:9)

at Promise._resolveFromExecutor (/opt/xen-orchestra/node_modules/bluebird/js/release/promise.js:518:18)

at new Promise (/opt/xen-orchestra/node_modules/bluebird/js/release/promise.js:103:10)

at loop (/opt/xen-orchestra/node_modules/promise-toolbox/retry.js:98:12)

at retry (/opt/xen-orchestra/node_modules/promise-toolbox/retry.js:101:10)

at Xapi._sessionCall (/opt/xen-orchestra/packages/xen-api/src/index.js:713:20)

at Xapi.call (/opt/xen-orchestra/packages/xen-api/src/index.js:247:14)

at loopResolver (/opt/xen-orchestra/node_modules/promise-toolbox/retry.js:94:23)

at Promise._execute (/opt/xen-orchestra/node_modules/bluebird/js/release/debuggability.js:384:9)

at Promise._resolveFromExecutor (/opt/xen-orchestra/node_modules/bluebird/js/release/promise.js:518:18)

at new Promise (/opt/xen-orchestra/node_modules/bluebird/js/release/promise.js:103:10)

at loop (/opt/xen-orchestra/node_modules/promise-toolbox/retry.js:98:12)

at Xapi.retry (/opt/xen-orchestra/node_modules/promise-toolbox/retry.js:101:10)

at Xapi.call (/opt/xen-orchestra/node_modules/promise-toolbox/retry.js:119:18)

at Xapi.destroy (/opt/xen-orchestra/@xen-orchestra/xapi/src/vm.js:324:16)

at Xapi._copyVm (file:///opt/xen-orchestra/packages/xo-server/src/xapi/index.mjs:322:9)

at Xapi.copyVm (file:///opt/xen-orchestra/packages/xo-server/src/xapi/index.mjs:337:7)

at Api.callApiMethod (file:///opt/xen-orchestra/packages/xo-server/src/xo-mixins/api.mjs:304:20)"

}

We are getting backup error on only 1 server in our pool. We've already swapped NFS storage and done a FULL CLONE of the VM for testing, but we're still failing (all other servers work fine in the backup operation to the same NFS Server).

I have not found anything related to this error and the snapshot operations are working correctly. Any tips to solve this problem?

transfer

Start: Jul 27, 2021, 08:50:02 AM

End: Jul 27, 2021, 09:39:49 AM

Duration: an hour

Error: HTTP connection abruptly closed

Start: Jul 27, 2021, 08:50:02 AM

End: Jul 27, 2021, 09:39:49 AM

Duration: an hour

Error: HTTP connection abruptly closed

Start: Jul 27, 2021, 08:49:33 AM

End: Jul 27, 2021, 09:44:45 AM

Duration: an hour

Error: all targets have failed, step: writer.run()

Type: full

@olivierlambert said in XCP-ng 8.2.0 RC now available!:

So the IQN was correctly "saved" during the upgrade, it sounds correct to me. Is that host in a pool? Can you check for the other hosts?

All others are correct and with other guests running.

I'll do some testing by removing the iSCSI connections altogether and replugging the host that has been updated.

If you have any tips on how to solve it, i appreciate your help.

@olivierlambert said in XCP-ng 8.2.0 RC now available!:

xe host-list uuid=<host uuid> params=other-config

[13:01 CLI220 ~]# xe host-list uuid=e1d9e417-961b-43f4-8750-39300e197692 params=other-config

other-config (MRW) : agent_start_time: 1604332891.; boot_time: 1604323953.; iscsi_iqn: iqn.2020-11.com.svm:cli219; rpm_patch_installation_time: 1604320091.898; last_blob_sync_time: 1570986114.22; multipathing: true; MAINTENANCE_MODE_EVACUATED_VMS_SUSPENDED: ; MAINTENANCE_MODE_EVACUATED_VMS_HALTED: ; MAINTENANCE_MODE_EVACUATED_VMS_MIGRATED: ; multipathhandle: dmp

[13:02 CLI219 ~]# cat /etc/iscsi/initiatorname.iscsi

InitiatorName=iqn.2020-11.com.svm:cli219

InitiatorAlias=CLI219

Reconnection via iSCSI is failing. Upgrade pool from 8.0 to 8.2 (Starting with the Master host).

Nov 2 10:36:59 CLI219 SM: [10134] Warning: vdi_[de]activate present for dummy

Nov 2 10:37:00 CLI219 SM: [10200] Setting LVM_DEVICE to /dev/disk/by-scsid/36005076d0281000108000000000000d7

Nov 2 10:37:00 CLI219 SM: [10200] Setting LVM_DEVICE to /dev/disk/by-scsid/36005076d0281000108000000000000d7

Nov 2 10:37:00 CLI219 SM: [10200] Raising exception [97, Unable to retrieve the host configuration ISCSI IQN parameter]

Nov 2 10:37:00 CLI219 SM: [10200] ***** LVHD over iSCSI: EXCEPTION <class 'SR.SROSError'>, Unable to retrieve the host configuration ISCSI IQN parameter

Nov 2 10:37:00 CLI219 SM: [10200] File "/opt/xensource/sm/SRCommand.py", line 376, in run

Nov 2 10:37:00 CLI219 SM: [10200] sr = driver(cmd, cmd.sr_uuid)

Nov 2 10:37:00 CLI219 SM: [10200] File "/opt/xensource/sm/SR.py", line 147, in __init__

Nov 2 10:37:00 CLI219 SM: [10200] self.load(sr_uuid)

Nov 2 10:37:00 CLI219 SM: [10200] File "/opt/xensource/sm/LVMoISCSISR", line 86, in load

Nov 2 10:37:00 CLI219 SM: [10200] iscsi = BaseISCSI.BaseISCSISR(self.original_srcmd, sr_uuid)

Nov 2 10:37:00 CLI219 SM: [10200] File "/opt/xensource/sm/SR.py", line 147, in __init__

Nov 2 10:37:00 CLI219 SM: [10200] self.load(sr_uuid)

Nov 2 10:37:00 CLI219 SM: [10200] File "/opt/xensource/sm/BaseISCSI.py", line 150, in load

Nov 2 10:37:00 CLI219 SM: [10200] raise xs_errors.XenError('ConfigISCSIIQNMissing')

Nov 2 10:37:00 CLI219 SM: [10200]