
Best posts made by blackliner

-

RE: How to increase XOA-memory with XOA

-

RE: How to increase XOA-memory with XOA

Instead of forcing a reboot when changing the CPU or memory resources, the new settings could be "saved" and applied at the next possible time, e.g. when there is a reboot.

Latest posts made by blackliner

-

RE: Sdn controller and physical network

@nikade How do you "pair" the XCP-ng SDN with your routing setup?

-

RE: Sdn controller and physical network

> Most folks don't really have an L3 router beyond their firewall appliance

Ouch, you just excluded all enterprise customers.

But I guess they all use the commercial side of XCP-ng and don't read these forums.

-

RE: How to increase XOA-memory with XOA

@olivierlambert But that doesn't sound like a problem. As long as the UI does a good job of making the pending change visible, what's the harm? Others do it in a similar fashion.

-

RE: How to increase XOA-memory with XOA

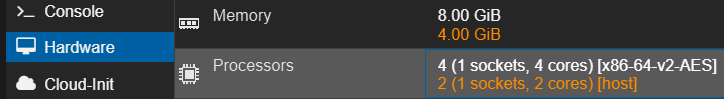

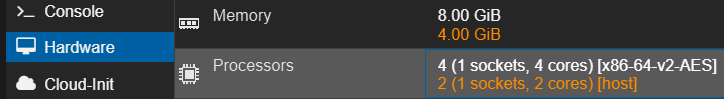

@olivierlambert said in How to increase XOA-memory with XOA:

"can't apply now, would you like to do the change by shutdown/start the VM?"

This, 100%! Because then the workflow looks like this:

- UI - change the resources

- UI - trigger a shutdown

- UI - go to XO Lite and start the VM

instead of

- UI - change the resources, get prompted for a halt+boot, press OK, and end up with a chicken-and-egg problem (XOA has to halt itself, so nothing is left to boot it again) plus an error message shortly before the UI goes dark

- UI - go to XO Lite, figure out what is going on, and realize you can't change resources in XO Lite

- CLI - SSH to the pool master

- CLI - run the command to increase the resources

- CLI - start the VM (I hope you copied the UUID, because this command does not take VM names)
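For reference, the CLI steps above could be scripted roughly like this. It's a minimal Python sketch, assuming it runs on the pool master with `xe` available, the VM is already halted, and you are increasing (not decreasing) resources. Pinning all four memory limits to one value, the `build_resize_commands` helper, and the dry-run default are my own illustrative choices, not an XCP-ng tool.

```python
"""Sketch: resize a halted VM with xe and start it again.

Assumptions: runs on the pool master where `xe` is on the PATH, the VM is
already halted, and resources are being increased.
"""
import subprocess
import sys

GIB = 1024 ** 3


def build_resize_commands(vm_uuid: str, memory_gib: int, vcpus: int) -> list[list[str]]:
    """Return the xe invocations, in order, as argv lists (hypothetical helper)."""
    mem = str(memory_gib * GIB)  # plain byte count
    return [
        # Pin all four limits to one value so that
        # static-min <= dynamic-min <= dynamic-max <= static-max holds trivially.
        ["xe", "vm-memory-limits-set", f"uuid={vm_uuid}",
         f"static-min={mem}", f"dynamic-min={mem}",
         f"dynamic-max={mem}", f"static-max={mem}"],
        # When increasing, raise VCPUs-max first: VCPUs-at-startup may not exceed it.
        ["xe", "vm-param-set", f"uuid={vm_uuid}", f"VCPUs-max={vcpus}"],
        ["xe", "vm-param-set", f"uuid={vm_uuid}", f"VCPUs-at-startup={vcpus}"],
        # Address the VM by UUID, as in the manual workflow.
        ["xe", "vm-start", f"uuid={vm_uuid}"],
    ]


def run(commands: list[list[str]], dry_run: bool = True) -> None:
    """Print each command; execute it only when dry_run is False."""
    for argv in commands:
        print(" ".join(argv))
        if not dry_run:
            subprocess.run(argv, check=True)  # stop at the first failing step


if __name__ == "__main__" and len(sys.argv) >= 4:
    # Usage: python resize_vm.py <vm-uuid> <memory-gib> <vcpus> [--apply]
    uuid, mem_gib, cpus = sys.argv[1], int(sys.argv[2]), int(sys.argv[3])
    run(build_resize_commands(uuid, mem_gib, cpus), dry_run="--apply" not in sys.argv)
```

Run it without `--apply` first to print the commands; the UUID can be looked up with `xe vm-list name-label=<name> params=uuid`.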

-

RE: How to increase XOA-memory with XOA

Instead of forcing a reboot when changing the CPU or memory resources, the new settings could be "saved" and applied at the next possible time, e.g. when there is a reboot.

-

RE: How to increase XOA-memory with XOA

Could XCP-ng support this use case by accepting new resource quotas and applying them on the next reboot?
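To make the "save now, apply on next reboot" idea concrete, here is a hypothetical Python model of the semantics I have in mind. `Resources`, `VmConfig`, and its methods are invented names for illustration; this is not an XCP-ng or Xen Orchestra API.

```python
"""Hypothetical model of pending VM resource changes applied at next boot.

Not an XCP-ng/XO API: class and method names are invented for illustration.
"""
from dataclasses import dataclass, replace
from typing import Optional


@dataclass(frozen=True)
class Resources:
    memory_gib: int
    vcpus: int


@dataclass
class VmConfig:
    active: Resources                    # what the running VM uses right now
    pending: Optional[Resources] = None  # saved change, applied at next boot

    def request_change(self, memory_gib: Optional[int] = None,
                       vcpus: Optional[int] = None) -> None:
        # Build on an existing pending change so successive edits accumulate.
        base = self.pending or self.active
        self.pending = replace(
            base,
            memory_gib=memory_gib if memory_gib is not None else base.memory_gib,
            vcpus=vcpus if vcpus is not None else base.vcpus,
        )

    def has_pending_change(self) -> bool:
        # What a UI could check to show a "pending change" badge.
        return self.pending is not None and self.pending != self.active

    def on_boot(self) -> Resources:
        # On the next (re)boot, promote the pending change to active.
        if self.pending is not None:
            self.active, self.pending = self.pending, None
        return self.active
```

The running VM is never touched by `request_change`; only `on_boot` swaps the values, which is the behavior the proposal asks for.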

-

RE: Alternative to AWS EC2 Autoscaling groups

@AtaxyaNetwork Hi!

We use this for self-hosted GitHub Actions or Buildkite CI runners.

- Managed runners are usually 5-20x more expensive

- We need stateful runners (vs ephemeral) for our Bazel workloads to run optimally

- During busy hours we want to scale up our fleet, and overnight, when no code is being developed, we scale down to 0. This is achieved (more or less) through an AWS Lambda function that sets the scale value based on the CI job queue.
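The queue-based scaling rule from the last bullet could be approximated by a small pure function like the one below. This is a hypothetical sketch: the parameter names, the jobs-per-runner ratio, and the min/max clamp are assumptions, since the post doesn't say exactly how the Lambda maps the queue to a scale value.

```python
"""Sketch: derive the desired runner count from the CI job queue.

Assumptions (hypothetical): each runner takes `jobs_per_runner` queued jobs,
and the result is clamped to [min_runners, max_runners].
"""
import math


def desired_runners(queued_jobs: int, busy_runners: int, jobs_per_runner: int = 1,
                    min_runners: int = 0, max_runners: int = 50) -> int:
    if jobs_per_runner < 1:
        raise ValueError("jobs_per_runner must be >= 1")
    # Keep every runner that is executing a job, so scaling in never
    # kills in-flight work on stateful runners, then add capacity for the queue.
    needed = busy_runners + math.ceil(queued_jobs / jobs_per_runner)
    return max(min_runners, min(max_runners, needed))
```

With an empty queue and no busy runners (overnight) this returns `min_runners`, i.e. 0 by default.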

What would scaling down look like with your proposed Terraform-based solution?

-

Alternative to AWS EC2 Autoscaling groups

We have a few hundred EC2 instances that are managed by Autoscaling Groups. For those who don't know what this is, Autoscaling Groups let you define a VM template (a launch template in AWS jargon) and instance types, and then easily scale from 0 to any number of EC2 instances. They all use the same template, follow lifecycle hooks (what to do before shutting down), and can even scale automatically (on a schedule, or using a Lambda function).

I now want to leverage our own hardware to accomplish something similar, and XCP-ng is one contender. Any ideas how I could accomplish something similar with XCP-ng? Thanks!
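For context, the core of what I'd want boils down to a reconcile step like the hypothetical Python sketch below: one template, a desired count, and a hook that runs before a VM is removed. `ScalingGroup` and the `launch`/`terminate`/`before_terminate` callables are placeholders I made up; on XCP-ng they might wrap XenAPI or `xe` calls, but that wiring is an assumption left out here.

```python
"""Hypothetical 'autoscaling group' reconcile step for a self-hosted pool.

Mirrors the ASG idea: one VM template, a desired count, and a lifecycle hook
before removal. launch/terminate are placeholders (e.g. XenAPI or xe wrappers).
"""
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class ScalingGroup:
    template: str                                              # VM template to clone
    launch: Callable[[str], str]                               # template -> new VM id
    terminate: Callable[[str], None]                           # VM id -> None
    before_terminate: Callable[[str], None] = lambda vm: None  # lifecycle hook
    members: List[str] = field(default_factory=list)

    def reconcile(self, desired: int) -> None:
        if desired < 0:
            raise ValueError("desired capacity must be >= 0")
        # Scale out: create new members from the template.
        while len(self.members) < desired:
            self.members.append(self.launch(self.template))
        # Scale in: remove the most recently launched VM first (an arbitrary
        # choice here), running the hook before each removal.
        while len(self.members) > desired:
            vm = self.members.pop()
            self.before_terminate(vm)
            self.terminate(vm)
```

Scaling to 0 is just `reconcile(0)`; a scheduler or queue-driven function would decide the `desired` value.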