@doogie06

Register the CLI: xo-cli --register http://url login

Then remove all tasks: xo-cli rest del tasks
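For anyone who wants to script the two steps above, here is a minimal sketch. It only wraps the two commands from this post; the function names and the dry-run default are my own additions for illustration, and `http://url` and `login` are the same placeholders as above.

```python
import shlex
import subprocess


def clear_tasks_commands(xo_url, user):
    """Build the two xo-cli invocations from the post as argv lists."""
    return [
        ["xo-cli", "--register", xo_url, user],  # step 1: register the CLI
        ["xo-cli", "rest", "del", "tasks"],      # step 2: remove all tasks
    ]


def run(commands, dry_run=True):
    """Print each command; execute it only when dry_run is False."""
    for argv in commands:
        print(shlex.join(argv))
        if not dry_run:
            # check=True stops at the first failing command.
            subprocess.run(argv, check=True)


# Placeholders from the post; replace them with a real XO URL and user.
commands = clear_tasks_commands("http://url", "login")
run(commands)  # dry run: only prints the commands
```

Calling `run(commands, dry_run=False)` would actually execute them, so check the printed commands first.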

Tristis Oris

@Tristis Oris

Best posts made by Tristis Oris

-

RE: Clearing Failed XO Tasks

-

RE: New Rust Xen guest tools

The problem with the broken package on RHEL 8-9 looks resolved now. I ran multiple tests.

-

RE: New Rust Xen guest tools

@DustinB said in New Rust Xen guest tools:

systemctl enable xe

yep, found them.

Full steps for RHEL:

wget https://gitlab.com/xen-project/xen-guest-agent/-/jobs/6041608360/artifacts/raw/RPMS/x86_64/xen-guest-agent-0.4.0-0.fc37.x86_64.rpm
rpm -i xen-guest-agent*
yum remove -y xe-guest-utilities-latest
systemctl enable xen-guest-agent.service --now

-

RE: New Rust Xen guest tools

@chrisfonte the new tools will remove the old ones during install.

-

RE: Can not create a storage for shared iso

@ckargitest that was fixed a few weeks ago. Are you on the latest commit?

-

RE: Pool is connected but Unknown pool

@julien-f @olivierlambert

2371109b6fea26c15df28caed132be2108a0d88e

Fixed now, thank you.

-

RE: Rolling Pool Update - not possible to resume a failed RPU

@olivierlambert During RPU, yes. I mean a manual update in case of failure.

Latest posts made by Tristis Oris

-

RE: CloudConfigDrive removal

Ok, my syntax is outdated.

resize_rootfs: true
growpart:
  mode: auto
  devices: ['/dev/xvda3']
  ignore_growroot_disabled: false
runcmd:
  - pvresize /dev/xvda3
  - lvextend -r -l +100%FREE /dev/ubuntu-vg/ubuntu-lv || true

This one works.
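One detail that may be worth checking in my earlier attempt: `devices: ['/, /dev/xvda']` has both paths inside a single pair of quotes, so it reads as one list entry, not two. The sketch below shows that. It assumes Python's literal parser is an acceptable stand-in for a YAML parser on this simple quoted list, and the helper names are made up for illustration. Whether this alone explains the failed resize is a guess, since the working config also targets the partition `/dev/xvda3` and adds the `pvresize` and `lvextend` steps.

```python
import ast


def parse_flow_list(text):
    """Parse a simple flow list of quoted strings, e.g. "['/dev/xvda3']".

    Assumption: for a list of single-quoted strings like this one, the
    Python literal syntax and the YAML flow syntax give the same entries.
    """
    value = ast.literal_eval(text)
    if not isinstance(value, list) or not all(isinstance(v, str) for v in value):
        raise ValueError("expected a list of strings")
    return value


def suspicious_devices(devices):
    """Return entries containing a comma or a space.

    Such an entry usually means two device paths were merged into one string.
    """
    return [d for d in devices if "," in d or " " in d]


earlier = parse_flow_list("['/, /dev/xvda']")  # value from the earlier attempt
working = parse_flow_list("['/dev/xvda3']")    # value from the working config

print(len(earlier), suspicious_devices(earlier))
print(len(working), suspicious_devices(working))
```

The earlier value comes back as a single entry that gets flagged, while the working value is one clean device path.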

final_message doesn't support any macro like %(uptime) or %(UPTIME).

-

Unable to copy template

I can't copy a VM template to some pools, but it still works with others.

Yes, different updates are installed on each pool.

vm.copy
{
  "vm": "548c201e-59a8-bb5e-86ca-00e8b8d48fd7",
  "sr": "82764645-babd-fa0e-35c7-8c0534be3275",
  "name": "Ubuntu 24.04.3 [tuned]"
}
{
  "code": "IMPORT_INCOMPATIBLE_VERSION",
  "params": [],
  "url": "",
  "task": {
    "uuid": "2951db7e-0584-1319-4fc8-2b9c369a79a0",
    "name_label": "[XO] VM import",
    "name_description": "",
    "allowed_operations": [],
    "current_operations": {},
    "created": "20260211T10:20:31Z",
    "finished": "20260211T10:20:31Z",
    "status": "failure",
    "resident_on": "OpaqueRef:3570b538-189d-6a16-fe61-f6d73cc545dc",
    "progress": 1,
    "type": "<none/>",
    "result": "",
    "error_info": [
      "IMPORT_INCOMPATIBLE_VERSION"
    ],
    "other_config": {},
    "subtask_of": "OpaqueRef:NULL",
    "subtasks": [],
    "backtrace": "(((process xapi)(filename string.ml)(line 128))((process xapi)(filename string.ml)(line 132))((process xapi)(filename ocaml/libs/log/debug.ml)(line 218))((process xapi)(filename ocaml/xapi/import.ml)(line 2373))((process xapi)(filename ocaml/xapi/server_helpers.ml)(line 74)))"
  },
  "pool_master": "3fa65753-db21-4925-8302-24d93f352b08",
  "SR": "82764645-babd-fa0e-35c7-8c0534be3275",
  "message": "IMPORT_INCOMPATIBLE_VERSION()",
  "name": "XapiError",
  "stack": "XapiError: IMPORT_INCOMPATIBLE_VERSION()
    at XapiError.wrap (file:///opt/xo/xo-builds/xen-orchestra-202602091212/packages/xen-api/_XapiError.mjs:16:12)
    at default (file:///opt/xo/xo-builds/xen-orchestra-202602091212/packages/xen-api/_getTaskResult.mjs:13:29)
    at Xapi._addRecordToCache (file:///opt/xo/xo-builds/xen-orchestra-202602091212/packages/xen-api/index.mjs:1078:24)
    at file:///opt/xo/xo-builds/xen-orchestra-202602091212/packages/xen-api/index.mjs:1112:14
    at Array.forEach (<anonymous>)
    at Xapi._processEvents (file:///opt/xo/xo-builds/xen-orchestra-202602091212/packages/xen-api/index.mjs:1102:12)
    at Xapi._watchEvents (file:///opt/xo/xo-builds/xen-orchestra-202602091212/packages/xen-api/index.mjs:1275:14)"
}

-

RE: CloudConfigDrive removal

I also tried to auto-extend the drive, but that is the only thing that doesn't work. The package update runs without issue.

# nope
resize_rootfs: true
growpart:
  mode: auto
  devices: ['/, /dev/xvda']
  ignore_growroot_disabled: false

# ok
package_update: true
package_upgrade: true

-

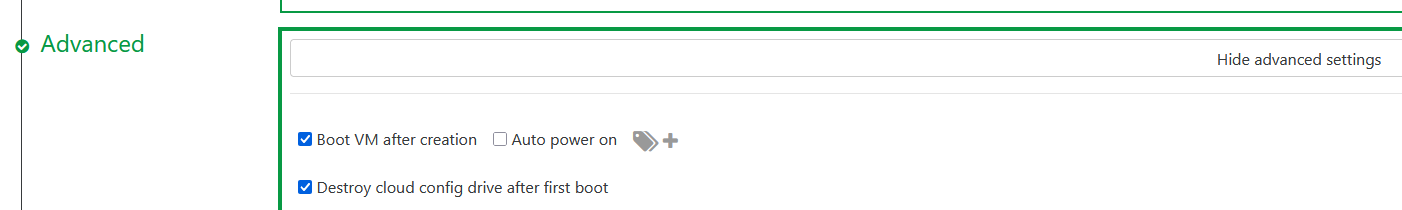

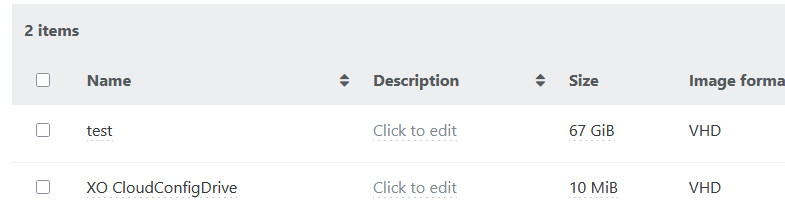

CloudConfigDrive removal

I'm trying to understand how this button is supposed to work.

There is only one task in the .conf:

# Base
hostname: {name}%

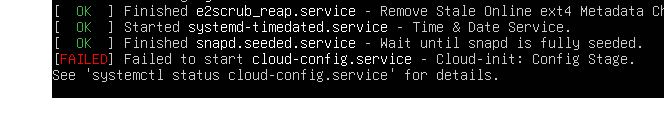

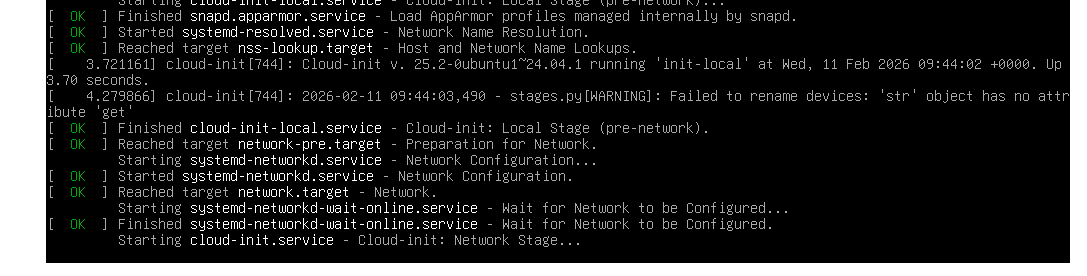

sudo journalctl -u cloud-config -f
Feb 11 12:40:14 clonme systemd[1]: Starting cloud-config.service - Cloud-init: Config Stage...
Feb 11 12:40:15 test123 cloud-init[1037]: Cloud-init v. 25.2-0ubuntu1~24.04.1 running 'modules:config' at Wed, 11 Feb 2026 09:40:15 +0000. Up 8.85 seconds.
Feb 11 12:40:15 test123 systemd[1]: cloud-config.service: Main process exited, code=exited, status=1/FAILURE
Feb 11 12:40:15 test123 systemd[1]: cloud-config.service: Failed with result 'exit-code'.
Feb 11 12:40:15 test123 systemd[1]: Failed to start cloud-config.service - Cloud-init: Config Stage.

The old hostname shows up in the log, but all changes from the config are applied to the host.

Next run

systemctl status cloud-config
● cloud-config.service - Cloud-init: Config Stage
     Loaded: loaded (/usr/lib/systemd/system/cloud-config.service; enabled; preset: enabled)
     Active: active (exited) since Wed 2026-02-11 12:44:06 MSK; 3min 0s ago
    Process: 787 ExecStart=/usr/bin/cloud-init modules --mode=config (code=exited, status=0/SUCCESS)
   Main PID: 787 (code=exited, status=0/SUCCESS)
        CPU: 362ms

Feb 11 12:44:06 test123 systemd[1]: Starting cloud-config.service - Cloud-init: Config Stage...
Feb 11 12:44:06 test123 cloud-init[936]: Cloud-init v. 25.2-0ubuntu1~24.04.1 running 'modules:config' at Wed, 11 Feb 2026 09:44:06 +0000. Up 7.42 seconds.
Feb 11 12:44:06 test123 systemd[1]: Finished cloud-config.service - Cloud-init: Config Stage.

I shut the VM down a few times, but the drive was still not removed.

After the 3rd cycle the drive disappeared.

When I tested it with a forced poweroff, I got an error and the VM was stuck in the grey state until the next poweroff.

But after this, the drive had been removed.

vm.stop
{
  "id": "102e48dd-d6f6-c8a6-7f10-e449fb43dcb2",
  "force": true,
  "bypassBlockedOperation": false
}
{
  "code": "INTERNAL_ERROR",
  "params": [
    "Object with type VM and id 102e48dd-d6f6-c8a6-7f10-e449fb43dcb2/vbd.xvdb does not exist in xenopsd"
  ],
  "task": {
    "uuid": "a345de27-ac17-ab29-c66b-2afd629d724e",
    "name_label": "Async.VM.hard_shutdown",
    "name_description": "",
    "allowed_operations": [],
    "current_operations": {},
    "created": "20260211T09:33:50Z",
    "finished": "20260211T09:33:54Z",
    "status": "failure",
    "resident_on": "OpaqueRef:b31541df-b7c1-27fc-85d0-36a5c1d94242",
    "progress": 1,
    "type": "<none/>",
    "result": "",
    "error_info": [
      "INTERNAL_ERROR",
      "Object with type VM and id 102e48dd-d6f6-c8a6-7f10-e449fb43dcb2/vbd.xvdb does not exist in xenopsd"
    ],
    "other_config": {},
    "subtask_of": "OpaqueRef:NULL",
    "subtasks": [],
    "backtrace": "(((process xapi)(filename ocaml/xapi-client/client.ml)(line 7))((process xapi)(filename ocaml/xapi-client/client.ml)(line 19))((process xapi)(filename ocaml/xapi-client/client.ml)(line 7874))((process xapi)(filename ocaml/libs/xapi-stdext/lib/xapi-stdext-pervasives/pervasiveext.ml)(line 24))((process xapi)(filename ocaml/libs/xapi-stdext/lib/xapi-stdext-pervasives/pervasiveext.ml)(line 39))((process xapi)(filename ocaml/xapi/message_forwarding.ml)(line 144))((process xapi)(filename ocaml/libs/xapi-stdext/lib/xapi-stdext-pervasives/pervasiveext.ml)(line 24))((process xapi)(filename ocaml/libs/xapi-stdext/lib/xapi-stdext-pervasives/pervasiveext.ml)(line 39))((process xapi)(filename ocaml/xapi/message_forwarding.ml)(line 2169))((process xapi)(filename ocaml/xapi/rbac.ml)(line 229))((process xapi)(filename ocaml/xapi/rbac.ml)(line 239))((process xapi)(filename ocaml/xapi/server_helpers.ml)(line 78)))"
  },
  "message": "INTERNAL_ERROR(Object with type VM and id 102e48dd-d6f6-c8a6-7f10-e449fb43dcb2/vbd.xvdb does not exist in xenopsd)",
  "name": "XapiError",
  "stack": "XapiError: INTERNAL_ERROR(Object with type VM and id 102e48dd-d6f6-c8a6-7f10-e449fb43dcb2/vbd.xvdb does not exist in xenopsd)
    at XapiError.wrap (file:///opt/xo/xo-builds/xen-orchestra-202602091212/packages/xen-api/_XapiError.mjs:16:12)
    at default (file:///opt/xo/xo-builds/xen-orchestra-202602091212/packages/xen-api/_getTaskResult.mjs:13:29)
    at Xapi._addRecordToCache (file:///opt/xo/xo-builds/xen-orchestra-202602091212/packages/xen-api/index.mjs:1078:24)
    at file:///opt/xo/xo-builds/xen-orchestra-202602091212/packages/xen-api/index.mjs:1112:14
    at Array.forEach (<anonymous>)
    at Xapi._processEvents (file:///opt/xo/xo-builds/xen-orchestra-202602091212/packages/xen-api/index.mjs:1102:12)
    at Xapi._watchEvents (file:///opt/xo/xo-builds/xen-orchestra-202602091212/packages/xen-api/index.mjs:1275:14)"
}

-

RE: New Rust Xen guest tools

Not sure if this is an issue. The agent itself is working.

Welcome to Ubuntu 24.04.3 LTS (GNU/Linux 6.14.0-37-generic x86_64)

systemctl status xen-guest-agent
● xen-guest-agent.service - Xen guest agent
     Loaded: loaded (/usr/lib/systemd/system/xen-guest-agent.service; enabled; preset: enabled)
     Active: active (running) since Mon 2026-02-09 14:28:41 MSK; 36min ago
   Main PID: 959 (xen-guest-agent)
      Tasks: 17 (limit: 19041)
     Memory: 4.8M (peak: 6.7M)
        CPU: 76ms
     CGroup: /system.slice/xen-guest-agent.service
             └─959 /usr/sbin/xen-guest-agent

Feb 09 14:28:41 oris systemd[1]: Started xen-guest-agent.service - Xen guest agent.
Feb 09 14:28:41 oris xen-guest-agent[959]: cannot parse yet os version Custom("24.04")

Welcome to Ubuntu 24.04.3 LTS (GNU/Linux 6.17.0-14-generic x86_64)

systemctl status xen-guest-agent
● xen-guest-agent.service - Xen guest agent
     Loaded: loaded (/usr/lib/systemd/system/xen-guest-agent.service; enabled; preset: enabled)
     Active: active (running) since Mon 2026-02-09 15:04:31 MSK; 1s ago
   Main PID: 9058 (xen-guest-agent)
      Tasks: 17 (limit: 76999)
     Memory: 2.5M (peak: 4.7M)
        CPU: 32ms
     CGroup: /system.slice/xen-guest-agent.service
             └─9058 /usr/sbin/xen-guest-agent

Feb 09 15:04:31 k3s xen-guest-agent[9058]: Specified IFLA_INET6_CONF NLA attribute holds more(most likely new kernel) data which is unknown to netlink-packet-route crate, expecting 236, got 240
Feb 09 15:04:31 k3s xen-guest-agent[9058]: Specified IFLA_INET6_CONF NLA attribute holds more(most likely new kernel) data which is unknown to netlink-packet-route crate, expecting 236, got 240
Feb 09 15:04:31 k3s xen-guest-agent[9058]: Specified IFLA_INET6_CONF NLA attribute holds more(most likely new kernel) data which is unknown to netlink-packet-route crate, expecting 236, got 240
Feb 09 15:04:31 k3s xen-guest-agent[9058]: Specified IFLA_INET6_CONF NLA attribute holds more(most likely new kernel) data which is unknown to netlink-packet-route crate, expecting 236, got 240
Feb 09 15:04:31 k3s xen-guest-agent[9058]: Specified IFLA_INET6_CONF NLA attribute holds more(most likely new kernel) data which is unknown to netlink-packet-route crate, expecting 236, got 240
Feb 09 15:04:31 k3s xen-guest-agent[9058]: Specified IFLA_INET6_CONF NLA attribute holds more(most likely new kernel) data which is unknown to netlink-packet-route crate, expecting 236, got 240
Feb 09 15:04:31 k3s xen-guest-agent[9058]: Specified IFLA_INET6_CONF NLA attribute holds more(most likely new kernel) data which is unknown to netlink-packet-route crate, expecting 236, got 240
Feb 09 15:04:31 k3s xen-guest-agent[9058]: Specified IFLA_INET6_CONF NLA attribute holds more(most likely new kernel) data which is unknown to netlink-packet-route crate, expecting 236, got 240
Feb 09 15:04:31 k3s xen-guest-agent[9058]: Specified IFLA_INET6_CONF NLA attribute holds more(most likely new kernel) data which is unknown to netlink-packet-route crate, expecting 236, got 240
Feb 09 15:04:31 k3s xen-guest-agent[9058]: Specified IFLA_INET6_CONF NLA attribute holds more(most likely new kernel) data which is unknown to netlink-packet-route crate, expecting 236, got 240

-

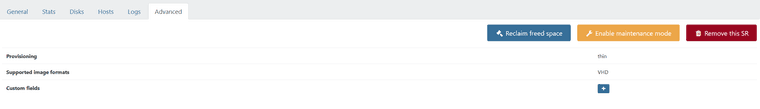

RE: Unable to remove non-existent SR

@Danp damn, I forgot about this button :) Now it works, thanks.