@benapetr Nice, great.

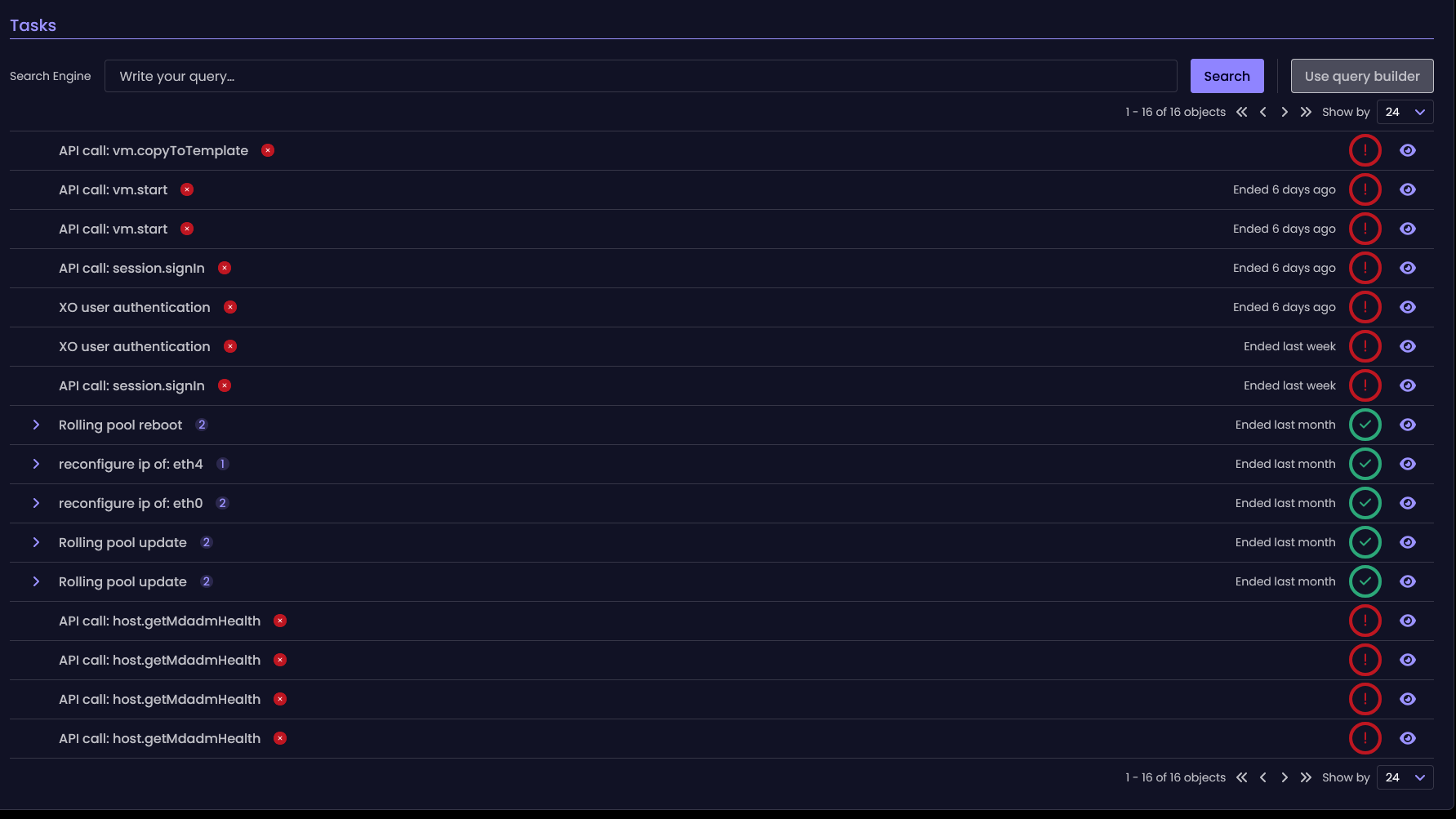

How about implementing a way to launch CR backup VMs?

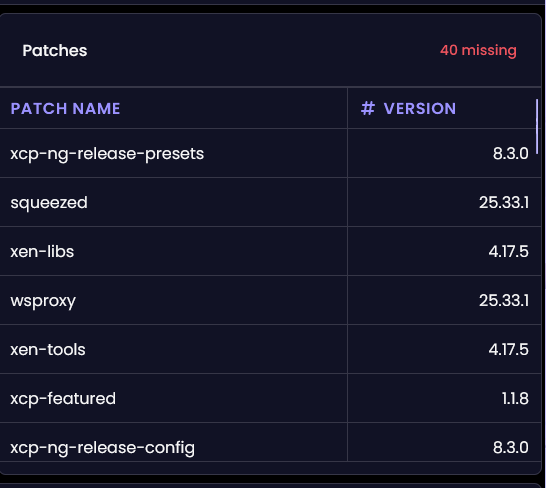

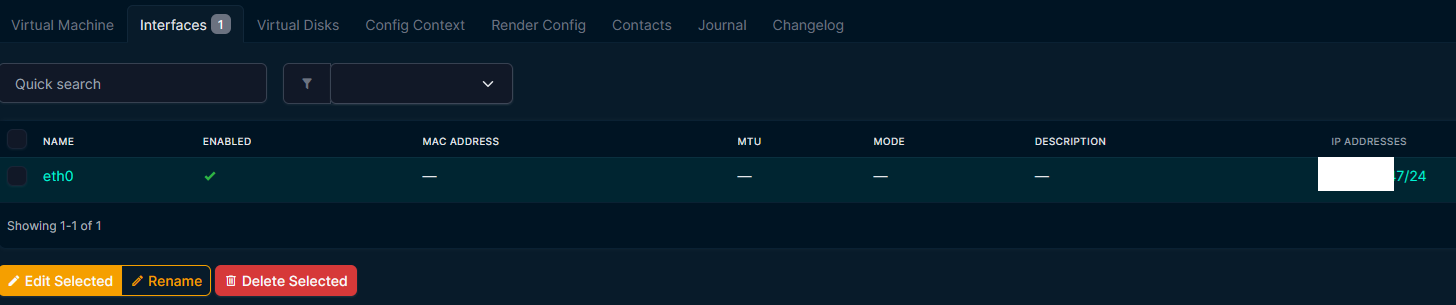

https://xcp-ng.org/forum/assets/uploads/files/1769679350079-677dfcbb-211b-452f-ad8f-db59e2860579-изображение.png

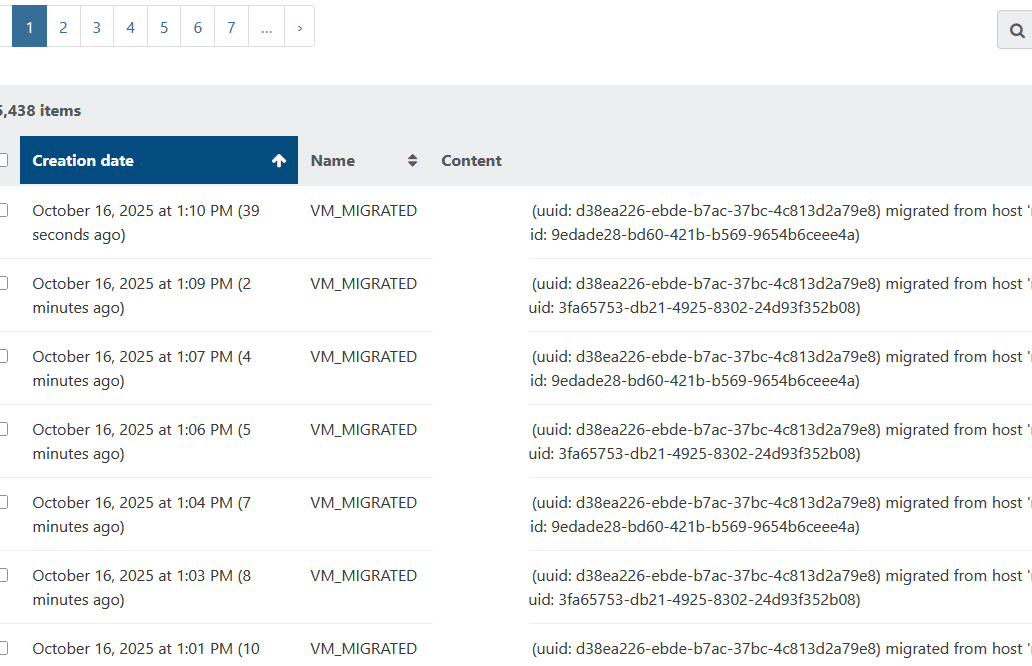

https://xcp-ng.org/forum/assets/uploads/files/1769679584572-3e39d799-a2fd-4cb0-8e2e-6b9c4312a2e6-изображение.png