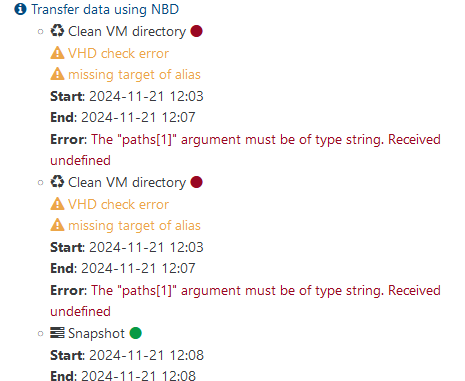

@stephane-m-dev It happened again for one VM:

```json
    {
      "data": {
        "type": "VM",
        "id": "316e7303-c9c9-9bb6-04ef-83948ee1b19e",
        "name_label": "name"
      },
      "id": "1732299284886",
      "message": "backup VM",
      "start": 1732299284886,
      "status": "failure",
      "tasks": [
        {
          "id": "1732299284997",
          "message": "clean-vm",
          "start": 1732299284997,
          "status": "failure",
          "warnings": [
            {
              "data": {
                "path": "/xo-vm-backups/316e7303-c9c9-9bb6-04ef-83948ee1b19e/vdis/90d0b5ca-9364-4011-adc4-b8c74a534da9/53843891-126f-4f0c-b645-8e8aa0a41b36/20241101T181520Z.alias.vhd",
                "error": {
                  "generatedMessage": true,
                  "code": "ERR_ASSERTION",
                  "actual": false,
                  "expected": true,
                  "operator": "=="
                }
              },
              "message": "VHD check error"
            },
            {
              "data": {
                "alias": "/xo-vm-backups/316e7303-c9c9-9bb6-04ef-83948ee1b19e/vdis/90d0b5ca-9364-4011-adc4-b8c74a534da9/53843891-126f-4f0c-b645-8e8aa0a41b36/20241101T181520Z.alias.vhd"
              },
              "message": "missing target of alias"
            }
          ],
          "end": 1732299341663,
          "result": {
            "code": "ERR_INVALID_ARG_TYPE",
            "message": "The \"paths[1]\" argument must be of type string. Received undefined",
            "name": "TypeError",
            "stack": "TypeError [ERR_INVALID_ARG_TYPE]: The \"paths[1]\" argument must be of type string. Received undefined\n at resolve (node:path:1169:7)\n at normalize (/opt/xo/xo-builds/xen-orchestra-202411191133/@xen-orchestra/fs/dist/path.js:21:27)\n at NfsHandler.__unlink (/opt/xo/xo-builds/xen-orchestra-202411191133/@xen-orchestra/fs/dist/abstract.js:412:32)\n at NfsHandler.unlink (/opt/xo/xo-builds/xen-orchestra-202411191133/node_modules/limit-concurrency-decorator/index.js:97:24)\n at checkAliases (file:///opt/xo/xo-builds/xen-orchestra-202411191133/@xen-orchestra/backups/_cleanVm.mjs:132:25)\n at async Array.<anonymous> (file:///opt/xo/xo-builds/xen-orchestra-202411191133/@xen-orchestra/backups/_cleanVm.mjs:284:5)\n at async Promise.all (index 1)\n at async RemoteAdapter.cleanVm (file:///opt/xo/xo-builds/xen-orchestra-202411191133/@xen-orchestra/backups/_cleanVm.mjs:283:3)"
          }
        },
        {
          "id": "1732299285125",
          "message": "clean-vm",
          "start": 1732299285125,
          "status": "failure",
          "warnings": [
            {
              "data": {
                "path": "/xo-vm-backups/316e7303-c9c9-9bb6-04ef-83948ee1b19e/vdis/90d0b5ca-9364-4011-adc4-b8c74a534da9/53843891-126f-4f0c-b645-8e8aa0a41b36/20241101T181520Z.alias.vhd",
                "error": {
                  "generatedMessage": true,
                  "code": "ERR_ASSERTION",
                  "actual": false,
                  "expected": true,
                  "operator": "=="
                }
              },
              "message": "VHD check error"
            },
            {
              "data": {
                "alias": "/xo-vm-backups/316e7303-c9c9-9bb6-04ef-83948ee1b19e/vdis/90d0b5ca-9364-4011-adc4-b8c74a534da9/53843891-126f-4f0c-b645-8e8aa0a41b36/20241101T181520Z.alias.vhd"
              },
              "message": "missing target of alias"
            }
          ],
          "end": 1732299343111,
          "result": {
            "code": "ERR_INVALID_ARG_TYPE",
            "message": "The \"paths[1]\" argument must be of type string. Received undefined",
            "name": "TypeError",
            "stack": "TypeError [ERR_INVALID_ARG_TYPE]: The \"paths[1]\" argument must be of type string. Received undefined\n at resolve (node:path:1169:7)\n at normalize (/opt/xo/xo-builds/xen-orchestra-202411191133/@xen-orchestra/fs/dist/path.js:21:27)\n at NfsHandler.__unlink (/opt/xo/xo-builds/xen-orchestra-202411191133/@xen-orchestra/fs/dist/abstract.js:412:32)\n at NfsHandler.unlink (/opt/xo/xo-builds/xen-orchestra-202411191133/node_modules/limit-concurrency-decorator/index.js:97:24)\n at checkAliases (file:///opt/xo/xo-builds/xen-orchestra-202411191133/@xen-orchestra/backups/_cleanVm.mjs:132:25)\n at async Array.<anonymous> (file:///opt/xo/xo-builds/xen-orchestra-202411191133/@xen-orchestra/backups/_cleanVm.mjs:284:5)\n at async Promise.all (index 3)\n at async RemoteAdapter.cleanVm (file:///opt/xo/xo-builds/xen-orchestra-202411191133/@xen-orchestra/backups/_cleanVm.mjs:283:3)"
          }
        },
        {
          "id": "1732299343953",
          "message": "snapshot",
          "start": 1732299343953,
          "status": "success",
          "end": 1732299346495,
          "result": "ee646d05-83b2-31d8-e54b-0d3b0cf7df1d"
        },
        {
          "data": {
            "id": "4b6d24a3-0b1e-48d5-aac2-a06e3a8ee485",
            "isFull": false,
            "type": "remote"
          },
          "id": "1732299346495:0",
          "message": "export",
          "start": 1732299346495,
          "status": "success",
          "tasks": [
            {
              "id": "1732299353253",
              "message": "transfer",
              "start": 1732299353253,
              "status": "success",
              "end": 1732299450434,
              "result": {
                "size": 9674571776
              }
            },
            {
              "id": "1732299501828:0",
              "message": "clean-vm",
              "start": 1732299501828,
              "status": "success",
              "warnings": [
                {
                  "data": {
                    "parent": "/xo-vm-backups/316e7303-c9c9-9bb6-04ef-83948ee1b19e/vdis/90d0b5ca-9364-4011-adc4-b8c74a534da9/53843891-126f-4f0c-b645-8e8aa0a41b36/20241101T181520Z.alias.vhd",
                    "child": "/xo-vm-backups/316e7303-c9c9-9bb6-04ef-83948ee1b19e/vdis/90d0b5ca-9364-4011-adc4-b8c74a534da9/53843891-126f-4f0c-b645-8e8aa0a41b36/20241102T180758Z.alias.vhd"
                  },
                  "message": "parent VHD is missing"
                },
                {
                  "data": {
                    "parent": "/xo-vm-backups/316e7303-c9c9-9bb6-04ef-83948ee1b19e/vdis/90d0b5ca-9364-4011-adc4-b8c74a534da9/53843891-126f-4f0c-b645-8e8aa0a41b36/20241102T180758Z.alias.vhd",
                    "child": "/xo-vm-backups/316e7303-c9c9-9bb6-04ef-83948ee1b19e/vdis/90d0b5ca-9364-4011-adc4-b8c74a534da9/53843891-126f-4f0c-b645-8e8aa0a41b36/20241103T180648Z.alias.vhd"
                  },
                  "message": "parent VHD is missing"
                },
                {
                  "data": {
                    "parent": "/xo-vm-backups/316e7303-c9c9-9bb6-04ef-83948ee1b19e/vdis/90d0b5ca-9364-4011-adc4-b8c74a534da9/53843891-126f-4f0c-b645-8e8aa0a41b36/20241103T180648Z.alias.vhd",
                    "child": "/xo-vm-backups/316e7303-c9c9-9bb6-04ef-83948ee1b19e/vdis/90d0b5ca-9364-4011-adc4-b8c74a534da9/53843891-126f-4f0c-b645-8e8aa0a41b36/20241104T180802Z.alias.vhd"
                  },
                  "message": "parent VHD is missing"
                },
                {
                  "data": {
                    "parent": "/xo-vm-backups/316e7303-c9c9-9bb6-04ef-83948ee1b19e/vdis/90d0b5ca-9364-4011-adc4-b8c74a534da9/53843891-126f-4f0c-b645-8e8aa0a41b36/20241104T180802Z.alias.vhd",
                    "child": "/xo-vm-backups/316e7303-c9c9-9bb6-04ef-83948ee1b19e/vdis/90d0b5ca-9364-4011-adc4-b8c74a534da9/53843891-126f-4f0c-b645-8e8aa0a41b36/20241105T181019Z.alias.vhd"
                  },
                  "message": "parent VHD is missing"
                },
                {
                  "data": {
                    "backup": "/xo-vm-backups/316e7303-c9c9-9bb6-04ef-83948ee1b19e/20241104T180802Z.json",
                    "missingVhds": [
                      "/xo-vm-backups/316e7303-c9c9-9bb6-04ef-83948ee1b19e/vdis/90d0b5ca-9364-4011-adc4-b8c74a534da9/53843891-126f-4f0c-b645-8e8aa0a41b36/20241104T180802Z.alias.vhd"
                    ]
                  },
                  "message": "some VHDs linked to the backup are missing"
                },
                {
                  "data": {
                    "backup": "/xo-vm-backups/316e7303-c9c9-9bb6-04ef-83948ee1b19e/20241102T180758Z.json",
                    "missingVhds": [
                      "/xo-vm-backups/316e7303-c9c9-9bb6-04ef-83948ee1b19e/vdis/90d0b5ca-9364-4011-adc4-b8c74a534da9/53843891-126f-4f0c-b645-8e8aa0a41b36/20241102T180758Z.alias.vhd"
                    ]
                  },
                  "message": "some VHDs linked to the backup are missing"
                },
                {
                  "data": {
                    "backup": "/xo-vm-backups/316e7303-c9c9-9bb6-04ef-83948ee1b19e/20241103T180648Z.json",
                    "missingVhds": [
                      "/xo-vm-backups/316e7303-c9c9-9bb6-04ef-83948ee1b19e/vdis/90d0b5ca-9364-4011-adc4-b8c74a534da9/53843891-126f-4f0c-b645-8e8aa0a41b36/20241103T180648Z.alias.vhd"
                    ]
                  },
                  "message": "some VHDs linked to the backup are missing"
                },
                {
                  "data": {
                    "backup": "/xo-vm-backups/316e7303-c9c9-9bb6-04ef-83948ee1b19e/20241105T181019Z.json",
                    "missingVhds": [
                      "/xo-vm-backups/316e7303-c9c9-9bb6-04ef-83948ee1b19e/vdis/90d0b5ca-9364-4011-adc4-b8c74a534da9/53843891-126f-4f0c-b645-8e8aa0a41b36/20241105T181019Z.alias.vhd"
                    ]
                  },
                  "message": "some VHDs linked to the backup are missing"
                }
              ],
              "end": 1732299518747,
              "result": {
                "merge": false
              }
            }
          ],
          "end": 1732299518760
        },
        {
          "data": {
            "id": "8da40b08-636f-450d-af15-3264b9692e1f",
            "isFull": false,
            "type": "remote"
          },
          "id": "1732299346496",
          "message": "export",
          "start": 1732299346496,
          "status": "success",
          "tasks": [
            {
              "id": "1732299353244",
              "message": "transfer",
              "start": 1732299353244,
              "status": "success",
              "end": 1732299450546,
              "result": {
                "size": 9674571776
              }
            },
            {
              "id": "1732299451765",
              "message": "clean-vm",
              "start": 1732299451765,
              "status": "success",
              "warnings": [
                {
                  "data": {
                    "parent": "/xo-vm-backups/316e7303-c9c9-9bb6-04ef-83948ee1b19e/vdis/90d0b5ca-9364-4011-adc4-b8c74a534da9/53843891-126f-4f0c-b645-8e8aa0a41b36/20241101T181520Z.alias.vhd",
                    "child": "/xo-vm-backups/316e7303-c9c9-9bb6-04ef-83948ee1b19e/vdis/90d0b5ca-9364-4011-adc4-b8c74a534da9/53843891-126f-4f0c-b645-8e8aa0a41b36/20241102T180758Z.alias.vhd"
                  },
                  "message": "parent VHD is missing"
                },
                {
                  "data": {
                    "parent": "/xo-vm-backups/316e7303-c9c9-9bb6-04ef-83948ee1b19e/vdis/90d0b5ca-9364-4011-adc4-b8c74a534da9/53843891-126f-4f0c-b645-8e8aa0a41b36/20241102T180758Z.alias.vhd",
                    "child": "/xo-vm-backups/316e7303-c9c9-9bb6-04ef-83948ee1b19e/vdis/90d0b5ca-9364-4011-adc4-b8c74a534da9/53843891-126f-4f0c-b645-8e8aa0a41b36/20241103T180648Z.alias.vhd"
                  },
                  "message": "parent VHD is missing"
                },
                {
                  "data": {
                    "parent": "/xo-vm-backups/316e7303-c9c9-9bb6-04ef-83948ee1b19e/vdis/90d0b5ca-9364-4011-adc4-b8c74a534da9/53843891-126f-4f0c-b645-8e8aa0a41b36/20241103T180648Z.alias.vhd",
                    "child": "/xo-vm-backups/316e7303-c9c9-9bb6-04ef-83948ee1b19e/vdis/90d0b5ca-9364-4011-adc4-b8c74a534da9/53843891-126f-4f0c-b645-8e8aa0a41b36/20241104T180802Z.alias.vhd"
                  },
                  "message": "parent VHD is missing"
                },
                {
                  "data": {
                    "parent": "/xo-vm-backups/316e7303-c9c9-9bb6-04ef-83948ee1b19e/vdis/90d0b5ca-9364-4011-adc4-b8c74a534da9/53843891-126f-4f0c-b645-8e8aa0a41b36/20241104T180802Z.alias.vhd",
                    "child": "/xo-vm-backups/316e7303-c9c9-9bb6-04ef-83948ee1b19e/vdis/90d0b5ca-9364-4011-adc4-b8c74a534da9/53843891-126f-4f0c-b645-8e8aa0a41b36/20241105T181019Z.alias.vhd"
                  },
                  "message": "parent VHD is missing"
                },
                {
                  "data": {
                    "backup": "/xo-vm-backups/316e7303-c9c9-9bb6-04ef-83948ee1b19e/20241103T180648Z.json",
                    "missingVhds": [
                      "/xo-vm-backups/316e7303-c9c9-9bb6-04ef-83948ee1b19e/vdis/90d0b5ca-9364-4011-adc4-b8c74a534da9/53843891-126f-4f0c-b645-8e8aa0a41b36/20241103T180648Z.alias.vhd"
                    ]
                  },
                  "message": "some VHDs linked to the backup are missing"
                },
                {
                  "data": {
                    "backup": "/xo-vm-backups/316e7303-c9c9-9bb6-04ef-83948ee1b19e/20241104T180802Z.json",
                    "missingVhds": [
                      "/xo-vm-backups/316e7303-c9c9-9bb6-04ef-83948ee1b19e/vdis/90d0b5ca-9364-4011-adc4-b8c74a534da9/53843891-126f-4f0c-b645-8e8aa0a41b36/20241104T180802Z.alias.vhd"
                    ]
                  },
                  "message": "some VHDs linked to the backup are missing"
                },
                {
                  "data": {
                    "backup": "/xo-vm-backups/316e7303-c9c9-9bb6-04ef-83948ee1b19e/20241102T180758Z.json",
                    "missingVhds": [
                      "/xo-vm-backups/316e7303-c9c9-9bb6-04ef-83948ee1b19e/vdis/90d0b5ca-9364-4011-adc4-b8c74a534da9/53843891-126f-4f0c-b645-8e8aa0a41b36/20241102T180758Z.alias.vhd"
                    ]
                  },
                  "message": "some VHDs linked to the backup are missing"
                },
                {
                  "data": {
                    "backup": "/xo-vm-backups/316e7303-c9c9-9bb6-04ef-83948ee1b19e/20241105T181019Z.json",
                    "missingVhds": [
                      "/xo-vm-backups/316e7303-c9c9-9bb6-04ef-83948ee1b19e/vdis/90d0b5ca-9364-4011-adc4-b8c74a534da9/53843891-126f-4f0c-b645-8e8aa0a41b36/20241105T181019Z.alias.vhd"
                    ]
                  },
                  "message": "some VHDs linked to the backup are missing"
                }
              ],
              "end": 1732299501791,
              "result": {
                "merge": false
              }
            }
          ],
          "end": 1732299501828
        }
      ],
      "infos": [
        {
          "message": "Transfer data using NBD"
        }
      ],
      "end": 1732299518760
    }
  ],
  "end": 1732299518761
}
```
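
For what it's worth, the `clean-vm` result can be reproduced in plain Node.js. This is only a sketch under an assumption taken from the stack trace, not from reading the XO source: that `normalize()` in `@xen-orchestra/fs` calls `path.resolve('/', path)`, so when `checkAliases` tries to unlink an alias whose target could not be found, the missing target arrives as `undefined` in `paths[1]`. `captureResolveError` and `unlinkIfDefined` are hypothetical helpers for illustration, not Xen Orchestra APIs.

```javascript
// Sketch reproducing the TypeError from the clean-vm stack trace.
// ASSUMPTION: @xen-orchestra/fs normalize() calls path.resolve('/', p),
// so an undefined p is reported as paths[1].
const path = require('node:path')

// Hypothetical helper: returns the error thrown by resolve(), or null.
function captureResolveError(target) {
  try {
    path.posix.resolve('/', target)
    return null
  } catch (error) {
    return error
  }
}

const err = captureResolveError(undefined)
console.log(err.name, err.code) // TypeError ERR_INVALID_ARG_TYPE
console.log(err.message)
// The "paths[1]" argument must be of type string. Received undefined

// Hypothetical guard (not XO code): skip the unlink when the alias target
// is not a string instead of letting path.resolve() throw.
async function unlinkIfDefined(handler, target) {
  if (typeof target !== 'string') {
    return false
  }
  await handler.unlink(target)
  return true
}
```

If that assumption holds, a `typeof target === 'string'` check before the unlink would leave only the existing "missing target of alias" warning instead of failing the whole `clean-vm` task.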