Yay! Santa came early this year!!

Latest posts made by ScarfAntennae

-

RE: Gather CPU utilization of host as variable for prometheus exporter

-

RE: Is it possible yet to select a specific network adapter for Backups?

Is this possible now? I can only see the management NIC.

-

RE: Gather CPU utilization of host as variable for prometheus exporter

I was about to link Mike's work here, but first wanted to say how desperately we need a metrics system that is exposed from a centralized location. There's no way in hell I'm giving a Docker host credentials for the hypervisor it resides on (and all the other hypervisors). On the other hand, if the metrics were exposed only via XO, with restrictions on where they could be accessed from, now that would be a solid implementation.

-

Just an idea :) - (SATA controller passthrough)

Hey, since I'm running TrueNAS virtualized, I wanted to pass the SATA controller through to it.

This led me on a journey of updating to 8.3, just so I could do it through Xen Orchestra, which I did. After I toggled the controller, the system restarted and all of a sudden XCP-ng didn't want to boot. It was stuck in the dracut emergency shell.

I had absolutely no idea why, until I realized the system itself is installed on a SATA SSD, and not on an M.2 drive.

I had to manually change the grub config for it to be able to boot to the main config again, which was a bit stressful.

Anyway, my idea is to add a small popup warning, or something similar, saying that this can happen if XCP-ng is installed on a SATA drive. It would prevent future headaches for homelabbers such as myself, though in reality it could happen to anyone.

-

RE: Xen Orchestra Prometheus Backup Metrics?

Good things take time.

Your team is doing god's work.

Remember to stay healthy, in both mind and body!

-

RE: Xen Orchestra Prometheus Backup Metrics?

Got it, thanks!

I'm certain many, if not all, enterprise users would be interested in such a feature.

-

RE: Xen Orchestra Prometheus Backup Metrics?

@olivierlambert That's where my question comes in

Any plans on having these exposed for monitoring in Grafana?

-

RE: Xen Orchestra Prometheus Backup Metrics?

@olivierlambert Could you confirm please if netdata provides such metrics? ^

-

RE: Xen Orchestra Prometheus Backup Metrics?

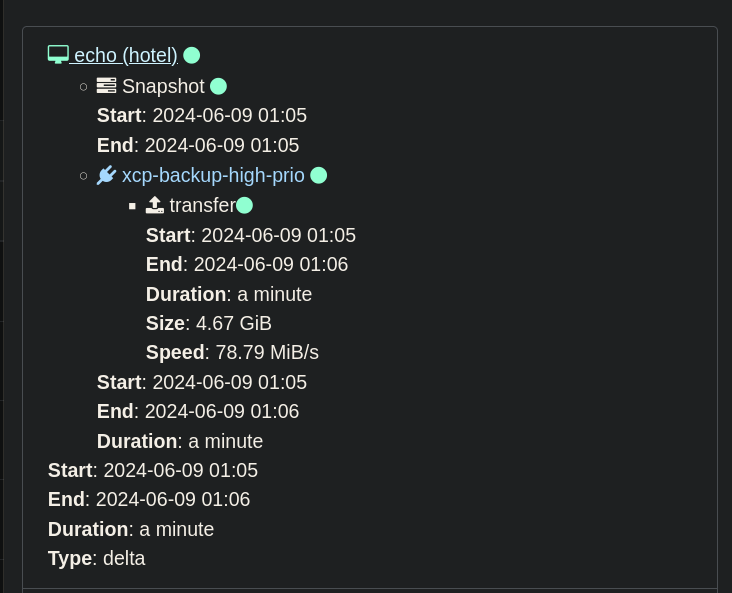

@olivierlambert Just to confirm, I want to look at the size of daily backups created by Xen Orchestra, and the time/speed it took for them to complete, basically this page, but on a graph:

I'm not sure that is something netdata can offer?
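
To make the request a bit more concrete, here is a rough sketch of what per-job backup metrics could look like in the Prometheus text format, which Grafana can then graph. Everything in it is an assumption for illustration: the `BackupRun` record, the `to_prometheus_text` helper, and the `xo_backup_*` metric names are made up, and none of them is an existing Xen Orchestra or netdata API.

```python
from dataclasses import dataclass


@dataclass
class BackupRun:
    """One finished backup run (hypothetical record, not an XO type)."""
    job: str
    size_bytes: int
    duration_seconds: float


def _speed(run):
    # Average transfer speed; 0 when the duration is missing or zero.
    if run.duration_seconds <= 0:
        return 0.0
    return run.size_bytes / run.duration_seconds


def to_prometheus_text(runs):
    """Render runs as Prometheus text-format gauges, one family per metric.

    Metric names are invented for this sketch. Job names are assumed to
    contain no quotes, backslashes, or newlines (no label escaping is done).
    """
    metrics = [
        ("xo_backup_size_bytes", lambda r: r.size_bytes),
        ("xo_backup_duration_seconds", lambda r: r.duration_seconds),
        ("xo_backup_speed_bytes_per_second", _speed),
    ]
    lines = []
    for name, value_of in metrics:
        lines.append(f"# TYPE {name} gauge")
        for run in runs:
            lines.append(f'{name}{{job="{run.job}"}} {value_of(run)}')
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    sample = [BackupRun(job="nightly", size_bytes=1000, duration_seconds=10.0)]
    print(to_prometheus_text(sample), end="")
```

Served from a single, access-restricted endpoint, output like this would cover size, duration, and speed per backup job without handing hypervisor credentials to the monitoring host.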