@olivierlambert Any other suggestions? Time sync via the guest tools, for example?

Our client can't have Internet access at all.

Latest posts made by topsecret


RE: VM time sync with Dom0


VM time sync with Dom0

Hello.

How can I sync a VM's time with the XCP-ng host? My VM doesn't have Internet access, so NTP sync can't work in this case.

RE: leaf-coalesce: EXCEPTION. " Unexpected bump in size"

I powered off one VM with two 2 TB disks; the overall coalesce took about 3-4 hours.

The running VM still has a VDI to coalesce. I found this process: "/usr/bin/vhd-util coalesce --debug -n /dev/VG_XenStorage-de024eb7-ce14-5487-e229-7ca321b103a2/VHD-b5d6ab41-50dc-4116-a23c-e453b93ce161"

Can I run another instance of it in parallel with the existing coalesce process?

RE: leaf-coalesce: EXCEPTION. " Unexpected bump in size"

@ronan-a This cluster is based on Huawei CH121 V5 servers with Intel(R) Xeon(R) Gold 5120T CPU @ 2.20GHz and 320 GB RAM, and Intel(R) Xeon(R) Gold 6138T CPU @ 2.00GHz and 512 GB RAM. According to Xen Orchestra, more than half of the RAM and CPU on each server is free. The SAS storage has about 45% free capacity (17 TB).

The problem disks are about 2 TB in size.

We can't stop the virtual machines because that would affect production services.

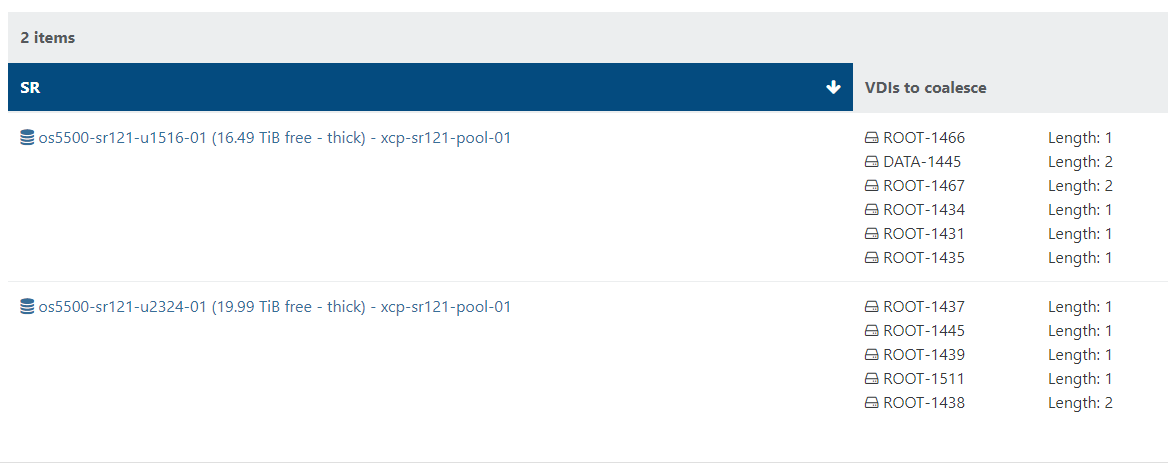

leaf-coalesce: EXCEPTION. " Unexpected bump in size"

I'm using XCP-ng 8.2.0, and I have several unhealthy VDIs after removing snapshots.

In /var/log/SMlog I see the following:

Aug 16 14:23:04 xcp-sr121-u0112-s1 SM: [13443] lock: released /var/lock/sm/lvm-e8b61db5-e776-9b83-c051-01823799be22/91026479-cc7d-4ed2-9062-1a53e54c748c

Aug 16 14:23:04 xcp-sr121-u0112-s1 SM: [13443] ['/usr/bin/vhd-util', 'query', '--debug', '-s', '-n', '/dev/VG_XenStorage-e8b61db5-e776-9b83-c051-01823799be22/VHD-91026479-cc7d-4ed2-9062-1a53e54c748c']

Aug 16 14:23:04 xcp-sr121-u0112-s1 SM: [13443] pread SUCCESS

Aug 16 14:23:04 xcp-sr121-u0112-s1 SMGC: [13443] No progress, attempt: 3

Aug 16 14:23:04 xcp-sr121-u0112-s1 SMGC: [13443] Aborted coalesce

Aug 16 14:23:04 xcp-sr121-u0112-s1 SMGC: [13443] Iteration: 1 -- Initial size 50564555264 --> Final size 42630242816

Aug 16 14:23:04 xcp-sr121-u0112-s1 SMGC: [13443] Iteration: 2 -- Initial size 42630242816 --> Final size 46032163328

Aug 16 14:23:04 xcp-sr121-u0112-s1 SMGC: [13443] Iteration: 3 -- Initial size 46032163328 --> Final size 47671136768

Aug 16 14:23:04 xcp-sr121-u0112-s1 SMGC: [13443] Iteration: 4 -- Initial size 47671136768 --> Final size 53155394048

Aug 16 14:23:04 xcp-sr121-u0112-s1 SMGC: [13443] Unexpected bump in size, compared to minimum acheived

Aug 16 14:23:04 xcp-sr121-u0112-s1 SMGC: [13443] Starting size was 50564555264

Aug 16 14:23:04 xcp-sr121-u0112-s1 SMGC: [13443] Final size was 53155394048

Aug 16 14:23:04 xcp-sr121-u0112-s1 SMGC: [13443] Minimum size acheived was 42630242816

Aug 16 14:23:04 xcp-sr121-u0112-s1 SMGC: [13443] Removed leaf-coalesce from 91026479VHD

Aug 16 14:23:04 xcp-sr121-u0112-s1 SMGC: [13443] ~~~~~~~~~~~~~~~~~~~~*

Aug 16 14:23:04 xcp-sr121-u0112-s1 SMGC: [13443] ***********************

Aug 16 14:23:04 xcp-sr121-u0112-s1 SMGC: [13443] * E X C E P T I O N *

Aug 16 14:23:04 xcp-sr121-u0112-s1 SMGC: [13443] ***********************

Aug 16 14:23:04 xcp-sr121-u0112-s1 SMGC: [13443] leaf-coalesce: EXCEPTION <class 'util.SMException'>, VDI 91026479-cc7d-4ed2-9062-1a53e54c748c could not be coalesced

Aug 16 14:23:04 xcp-sr121-u0112-s1 SMGC: [13443] File "/opt/xensource/sm/cleanup.py", line 1774, in coalesceLeaf

Aug 16 14:23:04 xcp-sr121-u0112-s1 SMGC: [13443] self._coalesceLeaf(vdi)

Aug 16 14:23:04 xcp-sr121-u0112-s1 SMGC: [13443] File "/opt/xensource/sm/cleanup.py", line 2049, in _coalesceLeaf

Aug 16 14:23:04 xcp-sr121-u0112-s1 SMGC: [13443] .format(uuid=vdi.uuid))

Aug 16 14:23:04 xcp-sr121-u0112-s1 SMGC: [13443]

Aug 16 14:23:04 xcp-sr121-u0112-s1 SMGC: [13443] ~~~~~~~~~~~~~~~~~~~~*

Aug 16 14:23:04 xcp-sr121-u0112-s1 SMGC: [13443] Leaf-coalesce failed on 91026479VHD, skipping

I changed the following in /opt/xensource/sm/cleanup.py:

LIVE_LEAF_COALESCE_MAX_SIZE from "20 * 1024 * 1024" (20 MiB) to "16384 * 1024 * 1024" (16 GiB)

LIVE_LEAF_COALESCE_TIMEOUT from 10 to 400

MAX_ITERATIONS_NO_PROGRESS from 3 to 6

MAX_ITERATIONS from 10 to 20

But I still can't get rid of the "VDIs to coalesce".
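The iteration history in the SMlog output can be replayed to see which check ended the leaf-coalesce. Below is a minimal Python sketch, not the actual logic in cleanup.py. It assumes the GC counts a "no progress" iteration whenever the VHD grows during a pass, and that after a grace iteration it aborts when the current size exceeds a tolerance over the minimum size achieved so far. The names MAX_INCREASE_FROM_MINIMUM and GRACE_ITERATIONS and their values (1.2 and 1) are assumptions for illustration; the other two limits use the default values quoted above.

```python
"""Replay the SMGC iteration sizes from the log through a simplified
abort check. A sketch only: the thresholds are assumptions, not the
values or logic confirmed from cleanup.py."""

# (initial size, final size) in bytes per iteration, copied from the SMlog lines
ITERATIONS = [
    (50564555264, 42630242816),
    (42630242816, 46032163328),
    (46032163328, 47671136768),
    (47671136768, 53155394048),
]

# Default limits quoted in the post
MAX_ITERATIONS = 10
MAX_ITERATIONS_NO_PROGRESS = 3
# Hypothetical values, assumed for this sketch
MAX_INCREASE_FROM_MINIMUM = 1.2  # tolerated growth over the minimum size seen
GRACE_ITERATIONS = 1             # iterations before the size check applies


def replay(iterations):
    """Return (iteration, reason) for the first abort, or None if none fires."""
    min_size = float("inf")
    no_progress = 0
    for its, (init_size, final_size) in enumerate(iterations, start=1):
        # Track the smallest VHD size observed so far
        min_size = min(min_size, init_size, final_size)
        if final_size > init_size:
            no_progress += 1  # the VHD grew during this pass
        if its > MAX_ITERATIONS:
            return its, "max iterations exceeded"
        if no_progress > MAX_ITERATIONS_NO_PROGRESS:
            return its, "no progress"
        limit = MAX_INCREASE_FROM_MINIMUM * min_size
        if its > GRACE_ITERATIONS and final_size > limit:
            return its, "unexpected bump in size"
    return None


if __name__ == "__main__":
    print(replay(ITERATIONS))
```

With these assumed values the sketch stops at iteration 4 with "unexpected bump in size": 53155394048 is above 1.2 × 42630242816 ≈ 51156291379, while the no-progress counter only reaches 3, which matches "No progress, attempt: 3" in the log. If the real tracker behaves like this sketch, raising MAX_ITERATIONS_NO_PROGRESS and MAX_ITERATIONS alone would not change this particular outcome.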

Thanks!