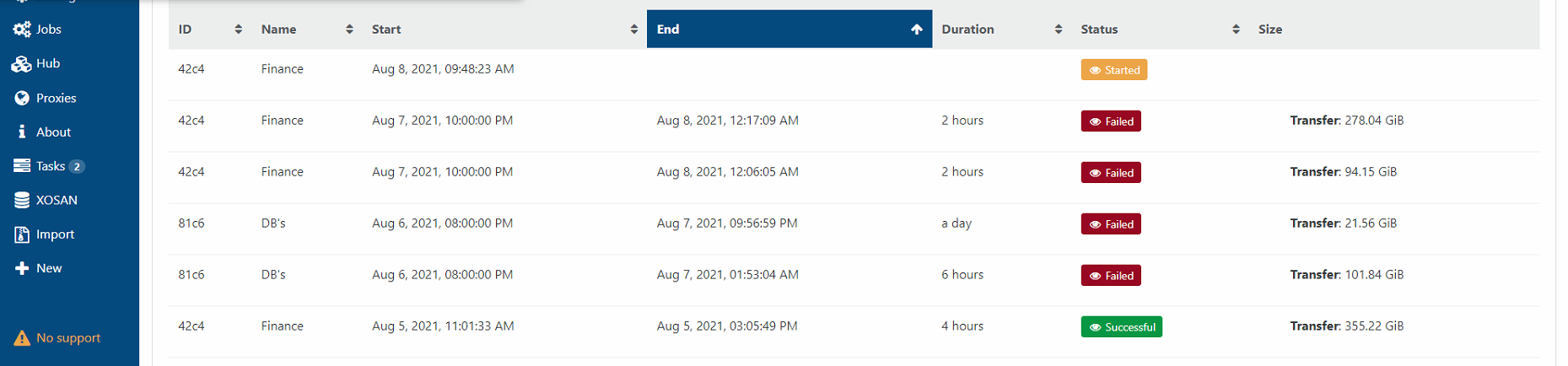

Backup fails after last update

-

All my backups are failing with 'Lock file is already being held'.

They run at night and fail.

When I start them manually, they work fine... Please help.

Joe

```json
{
  "data": { "mode": "full", "reportWhen": "failure" },
  "id": "1627495200006",
  "jobId": "d6eb656d-b0cc-4dc6-b9ef-18b6ca58b838",
  "jobName": "Domain",
  "message": "backup",
  "scheduleId": "d7fe0858-fc02-46f5-9626-bc380df1f993",
  "start": 1627495200006,
  "status": "failure",
  "infos": [
    {
      "data": {
        "vms": [
          "ca171dfc-261a-4ae8-0f3d-857b8e31a0e7",
          "3d523a78-1da6-39e6-b7ca-cec9ef3833f6",
          "4203343e-3904-6d71-e207-23a9d7985112",
          "4e1499d4-c27c-413d-6678-34a684951fc9",
          "68e574cb-1f7b-fb49-3c1a-4e4d4db0239f"
        ]
      },
      "message": "vms"
    }
  ],
  "tasks": [
    {
      "data": { "type": "VM", "id": "ca171dfc-261a-4ae8-0f3d-857b8e31a0e7" },
      "id": "1627495200896",
      "message": "backup VM",
      "start": 1627495200896,
      "status": "success",
      "tasks": [
        { "id": "1627495207124", "message": "snapshot", "start": 1627495207124, "status": "success", "end": 1627495266667, "result": "41d02d2b-9de4-88d8-43c8-53fc17c1cbfd" },
        {
          "data": { "id": "f2ad79cd-6bbe-47ad-908f-bfef50d0400c", "type": "remote", "isFull": true },
          "id": "1627495266715",
          "message": "export",
          "start": 1627495266715,
          "status": "success",
          "tasks": [
            { "id": "1627495267668", "message": "transfer", "start": 1627495267668, "status": "success", "end": 1627503631984, "result": { "size": 128382542336 } }
          ],
          "end": 1627503633193
        }
      ],
      "end": 1627503635631
    },
    {
      "data": { "type": "VM", "id": "3d523a78-1da6-39e6-b7ca-cec9ef3833f6" },
      "id": "1627495200913",
      "message": "backup VM",
      "start": 1627495200913,
      "status": "success",
      "tasks": [
        { "id": "1627495207126", "message": "snapshot", "start": 1627495207126, "status": "success", "end": 1627495220878, "result": "5db04304-7f98-d580-b054-90f34cdbfa81" },
        {
          "data": { "id": "f2ad79cd-6bbe-47ad-908f-bfef50d0400c", "type": "remote", "isFull": true },
          "id": "1627495220927",
          "message": "export",
          "start": 1627495220927,
          "status": "success",
          "tasks": [
            { "id": "1627495220956", "message": "transfer", "start": 1627495220956, "status": "success", "end": 1627497418929, "result": { "size": 29895727616 } }
          ],
          "end": 1627497419120
        }
      ],
      "end": 1627497421876
    },
    {
      "data": { "type": "VM", "id": "4203343e-3904-6d71-e207-23a9d7985112" },
      "id": "1627497421876:0",
      "message": "backup VM",
      "start": 1627497421876,
      "status": "failure",
      "end": 1627497421901,
      "result": {
        "code": "ELOCKED",
        "file": "/mnt/Domain/xo-vm-backups/4203343e-3904-6d71-e207-23a9d7985112",
        "message": "Lock file is already being held",
        "name": "Error",
        "stack": "Error: Lock file is already being held\n at /opt/xen-orchestra/node_modules/proper-lockfile/lib/lockfile.js:68:47\n at callback (/opt/xen-orchestra/node_modules/graceful-fs/polyfills.js:299:20)\n at FSReqCallback.oncomplete (fs.js:193:5)\n at FSReqCallback.callbackTrampoline (internal/async_hooks.js:134:14)"
      }
    },
    {
      "data": { "type": "VM", "id": "4e1499d4-c27c-413d-6678-34a684951fc9" },
      "id": "1627497421901:0",
      "message": "backup VM",
      "start": 1627497421901,
      "status": "failure",
      "end": 1627497421917,
      "result": {
        "code": "ELOCKED",
        "file": "/mnt/Domain/xo-vm-backups/4e1499d4-c27c-413d-6678-34a684951fc9",
        "message": "Lock file is already being held",
        "name": "Error",
        "stack": "Error: Lock file is already being held\n at /opt/xen-orchestra/node_modules/proper-lockfile/lib/lockfile.js:68:47\n at callback (/opt/xen-orchestra/node_modules/graceful-fs/polyfills.js:299:20)\n at FSReqCallback.oncomplete (fs.js:193:5)\n at FSReqCallback.callbackTrampoline (internal/async_hooks.js:134:14)"
      }
    },
    {
      "data": { "type": "VM", "id": "68e574cb-1f7b-fb49-3c1a-4e4d4db0239f" },
      "id": "1627497421918",
      "message": "backup VM",
      "start": 1627497421918,
      "status": "success",
      "tasks": [
        { "id": "1627497421964", "message": "snapshot", "start": 1627497421964, "status": "success", "end": 1627497429017, "result": "a6af0609-80f7-4f7a-e47f-6fbbbd2c5454" },
        {
          "data": { "id": "f2ad79cd-6bbe-47ad-908f-bfef50d0400c", "type": "remote", "isFull": true },
          "id": "1627497429132",
          "message": "export",
          "start": 1627497429132,
          "status": "success",
          "tasks": [
            { "id": "1627497429621", "message": "transfer", "start": 1627497429621, "status": "success", "end": 1627500681632, "result": { "size": 38368017408 } }
          ],
          "end": 1627500682070
        }
      ],
      "end": 1627500684793
    }
  ],
  "end": 1627503635631
}
```

-

Ping @julien-f

-

@joearnon It means that there is already another job working on this VM at the same time.
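
For context on what the error means: the stack trace points at the proper-lockfile module, which, as far as I know from its README, takes a lock by atomically creating a `<file>.lock` directory with `mkdir`, so a second caller that tries while the first still holds the lock is refused with `ELOCKED`. The following is a simplified Python sketch of that idea, for illustration only and not Xen Orchestra's code. The names `acquire`, `release` and `LockedError` are hypothetical, and the sketch skips the stale-lock detection and refresh that the real library performs.

```python
import os
import tempfile


class LockedError(Exception):
    """Raised when the lock is already held (mirrors the ELOCKED code in the log)."""

    code = "ELOCKED"


def acquire(path: str) -> str:
    """Take an exclusive lock on `path` by creating the directory `<path>.lock`.

    os.mkdir either creates the directory or fails because it already exists,
    so only one caller can hold the lock at a time.
    """
    lock_dir = path + ".lock"
    try:
        os.mkdir(lock_dir)
    except FileExistsError:
        raise LockedError("Lock file is already being held") from None
    return lock_dir


def release(lock_dir: str) -> None:
    """Drop the lock so the next caller can take it."""
    os.rmdir(lock_dir)


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as base:
        vm_dir = os.path.join(base, "vm")  # stands in for a VM backup directory
        first = acquire(vm_dir)  # the first job gets the lock
        try:
            acquire(vm_dir)  # a second, concurrent job is refused
        except LockedError as err:
            print(err.code, err)
        release(first)
        second = acquire(vm_dir)  # once released, the lock can be taken again
        release(second)
```

The point of the illustration is that the lock is only refused while someone else holds it, which is why a job that runs alone (for example when started manually) would not hit this error.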

-

@julien-f Well, there is no other job on these VMs, and it happens with all my predefined jobs. Only the scheduled jobs have stopped working since the last update. I did not change anything in the jobs, and they worked fine for the past 4 months. I only updated Xen Orchestra, and then it started.

-

@joearnon So these 2 particular VMs fail every time? And when you start the job manually, it works fine? Maybe try changing the schedule to test?

-

@tony No, it's all my jobs. This is just an example of a job that fails. I can give you all my jobs, but you'll see the same error.

If I run the jobs manually, even all together, everything works fine. But it's annoying and disturbing.

-

@joearnon Are you sure they all failed? From the log you posted, only 2 out of 5 failed; the other 3 backed up just fine.
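
To check this kind of count across several logs, a small script can group the per-VM `backup VM` tasks by `status`. This is a minimal sketch that only assumes the field names visible in the log above (`tasks`, `message`, `data.id`, `status`, `start`, `end`, `result.code`; timestamps are milliseconds since the epoch). `LOG` is a hand-trimmed copy of the posted log, and `summarize` and `load_log` are hypothetical helper names.

```python
from __future__ import annotations

import json

# Hand-trimmed copy of the "Domain" job log above: only the per-VM fields used here.
LOG = {
    "jobName": "Domain",
    "tasks": [
        {"message": "backup VM", "data": {"id": "ca171dfc-261a-4ae8-0f3d-857b8e31a0e7"},
         "start": 1627495200896, "end": 1627503635631, "status": "success"},
        {"message": "backup VM", "data": {"id": "3d523a78-1da6-39e6-b7ca-cec9ef3833f6"},
         "start": 1627495200913, "end": 1627497421876, "status": "success"},
        {"message": "backup VM", "data": {"id": "4203343e-3904-6d71-e207-23a9d7985112"},
         "start": 1627497421876, "end": 1627497421901, "status": "failure",
         "result": {"code": "ELOCKED"}},
        {"message": "backup VM", "data": {"id": "4e1499d4-c27c-413d-6678-34a684951fc9"},
         "start": 1627497421901, "end": 1627497421917, "status": "failure",
         "result": {"code": "ELOCKED"}},
        {"message": "backup VM", "data": {"id": "68e574cb-1f7b-fb49-3c1a-4e4d4db0239f"},
         "start": 1627497421918, "end": 1627500684793, "status": "success"},
    ],
}


def load_log(path: str) -> dict:
    """Read a job log that was saved to a JSON file (the path is up to you)."""
    with open(path, encoding="utf-8") as f:
        return json.load(f)


def summarize(log: dict) -> dict[str, list[tuple[str, float, str | None]]]:
    """Group the per-VM tasks of a backup log by status.

    Each entry is (VM id, duration in seconds, error code or None).
    """
    groups: dict[str, list[tuple[str, float, str | None]]] = {}
    for task in log.get("tasks", []):
        if task.get("message") != "backup VM":
            continue  # skip anything that is not a per-VM task
        seconds = (task["end"] - task["start"]) / 1000  # log timestamps are in ms
        result = task.get("result")
        code = result.get("code") if isinstance(result, dict) else None
        groups.setdefault(task["status"], []).append((task["data"]["id"], seconds, code))
    return groups


if __name__ == "__main__":
    for status, vms in summarize(LOG).items():
        print(f"{status}: {len(vms)}")
        for vm, seconds, code in vms:
            print(f"  {vm}  {seconds:10.3f}s  {code or ''}")
```

On the posted log this gives 3 successes and 2 `ELOCKED` failures. Both failed VMs ended within a few milliseconds of starting, which would fit the lock being refused up front rather than a transfer breaking partway through.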

-

@tony The job failed, not all the VMs in it. Some jobs have one VM fail, some have 4 VMs fail.

-

@joearnon So are the VMs that failed always the same ones or are they random? Are they all on the same SR?

-

@tony Random VMs, not on the same SR.

-

@joearnon Hmm... if they are random VMs, then it probably isn't a scheduling issue, since that would normally cause the same set of VMs to be locked. Not sure what's going on... Maybe you can disable all but one backup job to see if that changes anything.

-

@joearnon Can you post the logs of both backup instances of the same job that ran, so we can see whether they differ and in which way?

You can also try reconfiguring the backup job to use only a single tag parameter, to see if that is the issue.
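
One way to read the request for logs of both instances of the same job: if the same job were started twice at night, both runs would try to lock the same VM backup directories, and their time ranges would overlap. The sketch below checks for that, assuming you copy each run's `jobId`, `id`, `start` and `end` (milliseconds since the epoch, as in the log above) into it by hand. `Run` and `overlapping_runs` are hypothetical names, not part of Xen Orchestra, and the second run in the example is made up purely for illustration.

```python
from itertools import combinations
from typing import List, NamedTuple, Optional, Tuple


class Run(NamedTuple):
    job_id: str
    run_id: str
    start: int  # ms since the epoch
    end: Optional[int]  # None if the run has not finished


def overlapping_runs(runs: List[Run]) -> List[Tuple[Run, Run]]:
    """Return pairs of runs of the same job whose time ranges overlap."""
    pairs = []
    ordered = sorted(runs, key=lambda r: r.start)
    # combinations keeps the sorted order, so `a` never starts after `b`.
    for a, b in combinations(ordered, 2):
        if a.job_id != b.job_id:
            continue
        a_end = a.end if a.end is not None else float("inf")
        if b.start < a_end:  # b started before a finished
            pairs.append((a, b))
    return pairs


if __name__ == "__main__":
    runs = [
        # The run posted above (jobId, id, start, end copied from the log).
        Run("d6eb656d-b0cc-4dc6-b9ef-18b6ca58b838", "1627495200006",
            1627495200006, 1627503635631),
        # Hypothetical second run of the same job, made up for illustration.
        Run("d6eb656d-b0cc-4dc6-b9ef-18b6ca58b838", "hypothetical-run",
            1627495200500, 1627500000000),
    ]
    for a, b in overlapping_runs(runs):
        print(f"job {a.job_id}: run {a.run_id} overlaps run {b.run_id}")
```

If the real logs show no overlapping pair for the same job, that would argue against the "started twice" explanation.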