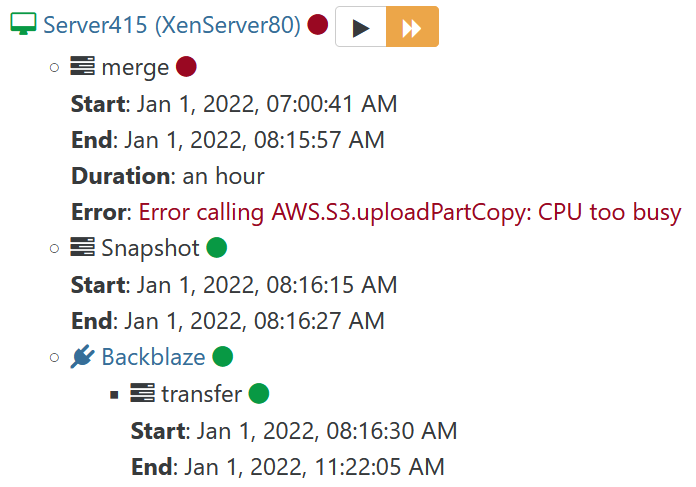

Delta Backups Failing: AWS.S3.uploadPartCopy: CPU too busy

-

Hi,

Is the following error from XCP-ng or from Backblaze servers?

Thx,

SW

-

Hmm, never heard of this one. Any idea, @florent?

-

@olivierlambert I've never heard of this one either. I'll check whether it's a Backblaze or an XO message.

Since it uses uploadPartCopy, it should happen during a full (XVA) backup.

-

There isn't much documentation online, but I found this thread (https://www.reddit.com/r/backblaze/comments/bvufz0/servers_are_often_too_busy_is_this_normal_b2/) saying we should retry when this error occurs.

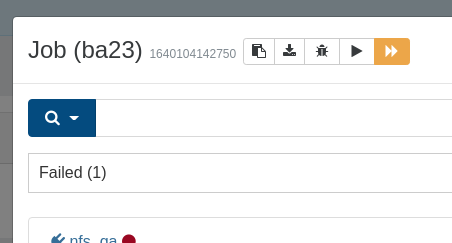

Can you copy the full log of this backup? It would be easier if we could get a machine-readable code (probably an HTTP code like 50x), as explained here: https://www.backblaze.com/blog/b2-503-500-server-error/

You can get it by clicking the second icon from the left in your job report.

-

@florent, thank you! I tried clicking the report bug button, but it returned an error saying the URL was too long, so I've attached the backup log: backup_log.txt

-

Thank you, @stevewest15.

The log doesn't contain the information I was looking for, but I think we'll have to handle B2's 500 errors with a retry. These errors are uncommon on S3, but on B2 they are part of the design.

-
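A minimal sketch of the retry approach described above, written in Python for illustration only; it is not XO's actual upload code (XO is written in JavaScript). It assumes B2's "CPU too busy" reaches the client as HTTP 500 or 503, and `HttpError`, `with_retry` and `flaky_upload_part` are hypothetical names. Only those two statuses are retried, with capped exponential backoff and random jitter so that many parallel part uploads don't all retry at the same instant; any other error is passed straight to the caller.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")

# Assumption: B2's "CPU too busy" reaches the client as HTTP 500 or 503.
RETRYABLE_STATUSES = {500, 503}


class HttpError(Exception):
    """Hypothetical error type carrying the HTTP status of a failed request."""

    def __init__(self, status: int, message: str = "") -> None:
        super().__init__(f"HTTP {status}: {message}")
        self.status = status


def with_retry(
    operation: Callable[[], T],
    max_attempts: int = 5,
    base_delay: float = 1.0,
    max_delay: float = 30.0,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call `operation`, retrying retryable HTTP errors with exponential backoff."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    attempt = 1
    while True:
        try:
            return operation()
        except HttpError as err:
            # Non-retryable statuses, or running out of attempts, go to the caller.
            if err.status not in RETRYABLE_STATUSES or attempt >= max_attempts:
                raise
            # Full jitter: wait a random time up to base_delay * 2^(attempt - 1), capped.
            delay = min(max_delay, base_delay * 2 ** (attempt - 1))
            sleep(random.uniform(0, delay))
            attempt += 1


# Usage: a hypothetical part upload that is "too busy" twice, then succeeds.
calls = {"n": 0}


def flaky_upload_part() -> str:
    calls["n"] += 1
    if calls["n"] < 3:
        raise HttpError(500, "CPU too busy")
    return "etag-123"


print(with_retry(flaky_upload_part, sleep=lambda _: None))  # etag-123
```

Injecting `sleep` keeps the backoff testable without real waiting; in production the default `time.sleep` is used.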

@florent, thank you for your help on this! I'm wondering whether we should rely on B2 for our offsite disaster recovery. Other than testing the backups made to B2, is there a way XO ensures that the backups are valid and that all the data made it to the S3 buckets?

Thank You,

SW

-

@stevewest15 I don't have any advice on the reliability of B2.

Their design requires us to make a minor modification to our upload service. During a full XVA backup to an S3-like service, each part is uploaded along with its hash, which is used to check its integrity.
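A minimal sketch of what per-part integrity checking looks like, in Python for illustration. The post doesn't say which hash XO sends, so this assumes the standard S3 `Content-MD5` header (the base64-encoded MD5 digest of the part body); S3 recomputes the digest on arrival and rejects a mismatching part with a `BadDigest` error. `PART_SIZE`, `iter_parts` and `server_accepts` are hypothetical names, and `server_accepts` only simulates the server-side check.

```python
import base64
import hashlib
import io
from typing import BinaryIO, Iterator, Tuple

# Hypothetical part size; S3 requires parts of at least 5 MiB except the last one.
PART_SIZE = 5 * 1024 * 1024


def content_md5(data: bytes) -> str:
    """Base64-encoded MD5 digest, the format S3 expects in the Content-MD5 header."""
    return base64.b64encode(hashlib.md5(data).digest()).decode("ascii")


def iter_parts(
    stream: BinaryIO, part_size: int = PART_SIZE
) -> Iterator[Tuple[int, bytes, str]]:
    """Yield (part_number, data, content_md5) for each consecutive part of the stream."""
    part_number = 1
    while True:
        chunk = stream.read(part_size)
        if not chunk:  # empty read means end of stream
            break
        yield part_number, chunk, content_md5(chunk)
        part_number += 1


def server_accepts(received: bytes, declared_md5: str) -> bool:
    """Simulated server-side check: recompute the digest of the bytes that arrived."""
    return content_md5(received) == declared_md5


# Usage: 12 bytes split into 5-byte parts gives parts of 5, 5 and 2 bytes.
for number, chunk, digest in iter_parts(io.BytesIO(b"x" * 12), part_size=5):
    print(number, len(chunk), server_accepts(chunk, digest))

# A part corrupted in transit no longer matches its declared digest.
print(server_accepts(b"corrupted", content_md5(b"original")))  # False
```

A per-part digest only proves that each part arrived intact; it does not show that the backup as a whole can be restored, which is why test restores remain useful.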
