VDI_IO_ERROR Continuous Replication on clean install.

-

Thanks for your reply @olivierlambert.

-

Are the following logs normal? When I run CR to this server's SSD, it fails in under 5 minutes. CR from SATA disk to SATA disk works well. When I copy with other software such as xackup, the migration succeeds.

```
Dec 16 16:03:09 megaman SM: [19074] sr_update {'sr_uuid': '0c89eabe-f70c-a8df-d86e-8ebfa93acbd2', 'subtask_of': 'DummyRef:|1bd73082-34e9-4f14-bcef-fd6af84372d7|SR.stat', 'args': [], 'host_ref': 'OpaqueRe$
Dec 16 16:03:39 megaman SM: [19252] sr_update {'sr_uuid': '0c89eabe-f70c-a8df-d86e-8ebfa93acbd2', 'subtask_of': 'DummyRef:|7120995b-0b5f-4ffb-8615-0aee36bce30f|SR.stat', 'args': [], 'host_ref': 'OpaqueRe$
Dec 16 16:04:09 megaman SM: [19427] sr_update {'sr_uuid': '0c89eabe-f70c-a8df-d86e-8ebfa93acbd2', 'subtask_of': 'DummyRef:|e5077e90-da43-4aba-a3ab-4cb37a4bfa5c|SR.stat', 'args': [], 'host_ref': 'OpaqueRe$
Dec 16 16:04:39 megaman SM: [19603] sr_update {'sr_uuid': '0c89eabe-f70c-a8df-d86e-8ebfa93acbd2', 'subtask_of': 'DummyRef:|f325d88f-bd39-4759-b070-645cbbb9d225|SR.stat', 'args': [], 'host_ref': 'OpaqueRe$
Dec 16 16:05:09 megaman SM: [19915] sr_update {'sr_uuid': '0c89eabe-f70c-a8df-d86e-8ebfa93acbd2', 'subtask_of': 'DummyRef:|fd6270d5-e090-432c-bd1c-7c5837b7fd24|SR.stat', 'args': [], 'host_ref': 'OpaqueRe$
Dec 16 16:05:39 megaman SM: [20108] sr_update {'sr_uuid': '0c89eabe-f70c-a8df-d86e-8ebfa93acbd2', 'subtask_of': 'DummyRef:|ee983899-4c6f-49cf-a960-4bf7e4d6cd25|SR.stat', 'args': [], 'host_ref': 'OpaqueRe$
Dec 16 16:06:09 megaman SM: [20290] sr_update {'sr_uuid': '0c89eabe-f70c-a8df-d86e-8ebfa93acbd2', 'subtask_of': 'DummyRef:|d275ee38-1bac-4917-b929-e91553274e11|SR.stat', 'args': [], 'host_ref': 'OpaqueRe$
Dec 16 16:06:39 megaman SM: [20478] sr_update {'sr_uuid': '0c89eabe-f70c-a8df-d86e-8ebfa93acbd2', 'subtask_of': 'DummyRef:|cbf822ed-f23a-444e-b34e-9c4192c6a09a|SR.stat', 'args': [], 'host_ref': 'OpaqueRe$
Dec 16 16:07:09 megaman SM: [20658] sr_update {'sr_uuid': '0c89eabe-f70c-a8df-d86e-8ebfa93acbd2', 'subtask_of': 'DummyRef:|9f48a062-b351-4d50-bf2b-d8de31ee6d53|SR.stat', 'args': [], 'host_ref': 'OpaqueRe$
Dec 16 16:07:39 megaman SM: [20842] sr_update {'sr_uuid': '0c89eabe-f70c-a8df-d86e-8ebfa93acbd2', 'subtask_of': 'DummyRef:|e0ae9a2b-2f19-46ec-90e0-89e017cfc3ae|SR.stat', 'args': [], 'host_ref': 'OpaqueRe$
Dec 16 16:08:09 megaman SM: [21062] sr_update {'sr_uuid': '0c89eabe-f70c-a8df-d86e-8ebfa93acbd2', 'subtask_of': 'DummyRef:|4123612d-f2d7-4a8d-b500-6bc8e1aadb7d|SR.stat', 'args': [], 'host_ref': 'OpaqueRe$
Dec 16 16:08:27 megaman SM: [21308] lock: opening lock file /var/lock/sm/14e167a3-8cff-a180-4108-9d72795811d1/sr
Dec 16 16:08:27 megaman SM: [21308] lock: acquired /var/lock/sm/14e167a3-8cff-a180-4108-9d72795811d1/sr
Dec 16 16:08:27 megaman SM: [21308] ['/usr/sbin/td-util', 'query', 'vhd', '-vpfb', '/var/run/sr-mount/14e167a3-8cff-a180-4108-9d72795811d1/f3449a8c-a6fb-4fac-83b0-e7770bce5480.vhd']
Dec 16 16:08:27 megaman SM: [21308] pread SUCCESS
Dec 16 16:08:27 megaman SM: [21308] vdi_snapshot {'sr_uuid': '14e167a3-8cff-a180-4108-9d72795811d1', 'subtask_of': 'DummyRef:|c89e8410-e7de-4495-ab2b-fee217285d01|VDI.snapshot', 'vdi_ref': 'OpaqueRef:a55$
Dec 16 16:08:27 megaman SM: [21308] Pause request for f3449a8c-a6fb-4fac-83b0-e7770bce5480
Dec 16 16:08:27 megaman SM: [21308] Calling tap-pause on host OpaqueRef:4f79dd6c-0bc5-42b8-bfc6-092fe7e291c5
Dec 16 16:08:27 megaman SM: [21341] lock: opening lock file /var/lock/sm/f3449a8c-a6fb-4fac-83b0-e7770bce5480/vdi
Dec 16 16:08:27 megaman SM: [21341] lock: acquired /var/lock/sm/f3449a8c-a6fb-4fac-83b0-e7770bce5480/vdi
Dec 16 16:08:27 megaman SM: [21341] Pause for f3449a8c-a6fb-4fac-83b0-e7770bce5480
Dec 16 16:08:27 megaman SM: [21341] Calling tap pause with minor 2
Dec 16 16:08:27 megaman SM: [21341] ['/usr/sbin/tap-ctl', 'pause', '-p', '26926', '-m', '2']
Dec 16 16:08:27 megaman SM: [21341] = 0
Dec 16 16:08:27 megaman SM: [21341] lock: released /var/lock/sm/f3449a8c-a6fb-4fac-83b0-e7770bce5480/vdi
Dec 16 16:08:27 megaman SM: [21308] FileVDI._snapshot for f3449a8c-a6fb-4fac-83b0-e7770bce5480 (type 2)
```

-

Yes, I think I'm dumb. Waiting for you to look at the logs I haven't provided.

I see nothing interesting except one recurring message: "Failed to lock /var/lock/sm/.nil/lvm on first attempt, blocked by PID 25021".
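To check whether that lock message really is the only recurring warning, and how often it fires, one could count it across the SM log. Below is a minimal Python sketch, assuming the message format matches the line quoted above and that the log lives at `/var/log/SMlog` (the usual location on XCP-ng hosts, but verify on yours); `count_lock_contention` and `scan_smlog` are hypothetical helper names. The message itself only says the first attempt was blocked, so a high count is a hint, not a diagnosis.

```python
import re
from collections import Counter

# Matches the message quoted above, e.g.
# "... SM: [9387] Failed to lock /var/lock/sm/.nil/lvm on first attempt, blocked by PID 9357"
LOCK_RE = re.compile(
    r"SM: \[(?P<pid>\d+)\] Failed to lock (?P<path>\S+) on first attempt, "
    r"blocked by PID (?P<blocker>\d+)"
)


def count_lock_contention(lines):
    """Count 'Failed to lock ... on first attempt' messages per lock path."""
    counts = Counter()
    for line in lines:
        m = LOCK_RE.search(line)
        if m:
            counts[m.group("path")] += 1
    return counts


def scan_smlog(path="/var/log/SMlog"):
    """Hypothetical helper: scan an SM log file (the default path is an assumption)."""
    with open(path, errors="replace") as f:
        return count_lock_contention(f)
```

On the host, something like `for lock_path, n in scan_smlog().most_common(): print(n, lock_path)` would list the most contended lock paths first.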

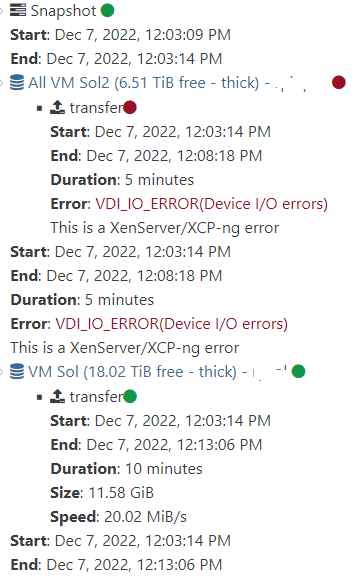

And the task always runs for about 5 minutes before failing. Could there be some hardcoded timer?

Start: Dec 16, 2022, 12:44:50 PM

End: Dec 16, 2022, 12:50:15 PM
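As a quick sanity check on the "always 5 min" observation, the elapsed time can be computed from the two task timestamps. This minimal Python sketch only uses the values shown above; it gives 5 min 25 s, which is consistent with (but does not prove) a fixed timeout somewhere, and says nothing about which component would own such a timer.

```python
from datetime import datetime

# Timestamps copied from the XO task above; format matches "Dec 16, 2022, 12:44:50 PM".
FMT = "%b %d, %Y, %I:%M:%S %p"
start = datetime.strptime("Dec 16, 2022, 12:44:50 PM", FMT)
end = datetime.strptime("Dec 16, 2022, 12:50:15 PM", FMT)

elapsed = end - start
print(elapsed)                  # 0:05:25
print(elapsed.total_seconds())  # 325.0
```

Running the same check on several failed tasks would show whether the duration is really constant or just close to 5 minutes.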

Part 1: https://pastebin.com/xZAXEiq1

Part 2: https://pastebin.com/Lmhermgx

-


-

@Tristis-Oris Hey man, were you able to solve this? I'm facing the same issue after a reinstall. Continuous Replication fails exactly at 5 minutes.

Thanks in advance.

-

@yomono Nope, still investigating. I got `SR_NOT_SUPPORTED` in the log.

-

-

Can you double-check that you are using a recent commit on `master`?

-

@olivierlambert In my case, I'm indeed using the latest commit. I played around with older commits yesterday (up to two or three months old) with the same result. Right now I'm using the latest one (committed an hour ago).

I can share my SMlogs if you want, but I'm also getting the `SR_NOT_SUPPORTED` error. I tried backing up different VMs from different source servers to different destination servers. My next attempt will be reinstalling XO.

-

Indeed, try wiping it entirely and rebuilding.

-

@olivierlambert that a old problem) but yes, usually about latest. Just repeated all tests on

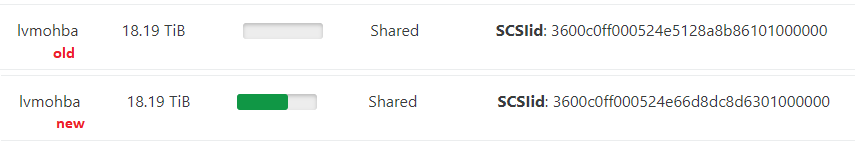

3c7d3.- CR stop working right after clean 8.2.1 installation. at same day.

- replaced FC to iscsi (only bcz i need iscsi here

), created new LUN > same story.

), created new LUN > same story. - 8.2.0 clean install, no updated - CR works.

- Haven't tried regular backups to this SR; I don't need them here.

- VM migration works in every setup.

The problem occurs with only one storage array: Dell EMC PowerVault ME4012. All the others (Huawei, iSCSI) work fine. But I'm not sure whether I have other clean 8.2.1 pools; maybe only some nodes.

Logs now. I'm doing 2 CR backups.

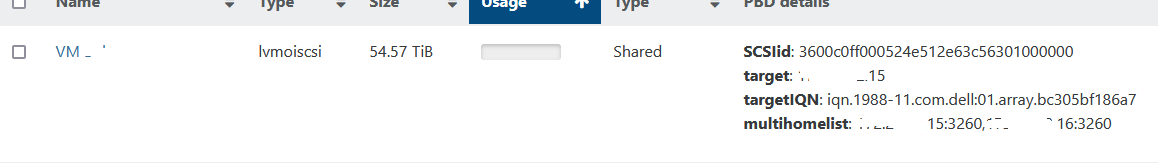

- XCP-ng 8.2.1, clean host, no VMs. 60 TB iSCSI LUN, but XCP-ng shows only 50 TB.

```
Jan 16 15:15:30 test SMGC: [10010] SR f3fd ('LUN') (2 VDIs in 2 VHD trees):
Jan 16 15:15:30 test SMGC: [10010] a459d14f[VHD](50.000G//50.105G|ao)
Jan 16 15:15:30 test SMGC: [10010] 1789f7a7[VHD](50.000G//50.105G|ao)
Jan 16 15:15:47 test SMGC: [10338] SR f3fd ('LUN') (1 VDIs in 1 VHD trees):
Jan 16 15:15:47 test SMGC: [10338] a459d14f[VHD](50.000G//50.105G|ao)
Jan 16 15:15:47 test SMGC: [10338]
```

Here it's 60 TB.

2 VMs, 2 errors: `SR_NOT_SUPPORTED`

```
Jan 16 15:10:43 test SM: [6596] result: {'params_nbd': 'nbd:unix:/run/blktap-control/nbd/f3fd46f7-5ce4-e5e0-53e9-059ce4775a7b/1789f7a7-05a0-411c-aa80-dcc659f8b45f', 'o_direct_reason': 'SR_NOT_SUPPORTED', 'params': '/dev/sm/backend/f3fd46f7-5ce4-e5e0-53e9-059ce4775a7b/1789f7a7-05a0-411c-aa80-dcc659f8b45f', 'o_direct': True, 'xenstore_data': {'scsi/0x12/0x80': 'AIAAEjE3ODlmN2E3LTA1YTAtNDEgIA==', 'scsi/0x12/0x83': 'AIMAMQIBAC1YRU5TUkMgIDE3ODlmN2E3LTA1YTAtNDExYy1hYTgwLWRjYzY1OWY4YjQ1ZiA=', 'vdi-uuid': '1789f7a7-05a0-411c-aa80-dcc659f8b45f', 'mem-pool': 'f3fd46f7-5ce4-e5e0-53e9-059ce4775a7b'}}
Jan 16 15:10:49 test SM: [6834] result: {'params_nbd': 'nbd:unix:/run/blktap-control/nbd/f3fd46f7-5ce4-e5e0-53e9-059ce4775a7b/a459d14f-ae92-4a77-8574-30442126624b', 'o_direct_reason': 'SR_NOT_SUPPORTED', 'params': '/dev/sm/backend/f3fd46f7-5ce4-e5e0-53e9-059ce4775a7b/a459d14f-ae92-4a77-8574-30442126624b', 'o_direct': True, 'xenstore_data': {'scsi/0x12/0x80': 'AIAAEmE0NTlkMTRmLWFlOTItNGEgIA==', 'scsi/0x12/0x83': 'AIMAMQIBAC1YRU5TUkMgIGE0NTlkMTRmLWFlOTItNGE3Ny04NTc0LTMwNDQyMTI2NjI0YiA=', 'vdi-uuid': 'a459d14f-ae92-4a77-8574-30442126624b', 'mem-pool': 'f3fd46f7-5ce4-e5e0-53e9-059ce4775a7b'}}
```

and some smaller ones like:

```
Jan 16 15:14:30 test SM: [9387] Failed to lock /var/lock/sm/.nil/lvm on first attempt, blocked by PID 9357
Jan 16 15:10:29 test SM: [6376] Failed to lock /var/lock/sm/.nil/lvm on first attempt, blocked by PID 6348
Jan 16 15:10:59 test SM: [7141] Failed to lock /var/lock/sm/.nil/lvm on first attempt, blocked by PID 7115
Jan 16 15:11:30 test SM: [7457] Failed to lock /var/lock/sm/.nil/lvm on first attempt, blocked by PID 7428
Jan 16 15:15:43 test SM: [10146] unlink of attach_info failed
```

Nothing else with an error status in the log.
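The two `result:` lines above are logged as Python dict literals, so when comparing hosts (for example a fresh 8.2.1 install against 8.2.0) it may help to pull out `o_direct` and `o_direct_reason` per VDI automatically. This is a minimal Python sketch, assuming each `result:` entry sits on one untruncated line; `o_direct_reasons` is a hypothetical helper name, and the code only extracts the fields without interpreting what the reason code means.

```python
import ast


def o_direct_reasons(lines):
    """Map VDI UUID -> (o_direct, o_direct_reason) from SM 'result: {...}' log lines."""
    marker = " result: "
    reasons = {}
    for line in lines:
        idx = line.find(marker)
        if idx == -1:
            continue
        payload = line[idx + len(marker):].strip()
        try:
            # literal_eval parses Python literals only; it never executes code.
            result = ast.literal_eval(payload)
        except (ValueError, SyntaxError):
            continue  # truncated or non-literal payload: skip it
        if isinstance(result, dict) and "o_direct_reason" in result:
            xs = result.get("xenstore_data") or {}
            vdi = xs.get("vdi-uuid", "?") if isinstance(xs, dict) else "?"
            reasons[vdi] = (result.get("o_direct"), result["o_direct_reason"])
    return reasons
```

Feeding it the SMlog lines from both an affected and a working host would show at a glance whether the working host reports a different `o_direct_reason` for the same SR.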

-

Because of the weird size, I tried 8.2.1 with a 20 TB iSCSI LUN.

Same result: `SR_NOT_SUPPORTED`.

-

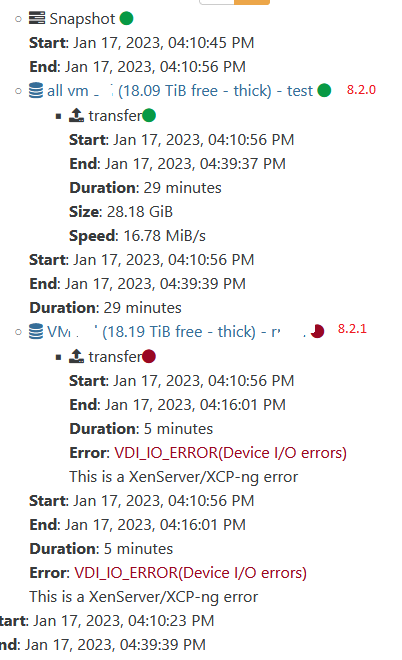

Now an 8.2.0 clean install, with no updates.

It works.

Here both hosts are connected to the same SR.

- 8.2.0 fully updated > 8.2.1 release/yangtze/master/58

CR still works.

4.1. Unmounted the LUN and mounted it again.

Still working.

-

I have no idea, sorry. So to recap:

- Doesn't work on a fresh 8.2.1 install with updates

- Works on the older 8.2.0

- Works on 8.2.0 updated to 8.2.1

It doesn't sound like an XO bug in your case.

-

@olivierlambert Yes. So what should I do next?

-

We're trying to figure out the setup so we can try to reproduce it, and also changing various things until a clear pattern emerges.

For example, can you try with an NFS share to see if you have the same issue? If it's iSCSI-related, that would help us investigate.

-

I can't; this is SAN-only storage.

Is there any point in testing on 8.3 alpha?

-

You can; it's another test that might help us pinpoint something.

-

@olivierlambert I'd like to add that after this recap I realized I also had to reinstall XCP, so in my case it's also a fresh 8.2.1 install! At least, knowing that, I can do an 8.2.0 + upgrade installation (that's what I used to have). I can also try 8.3 alpha; it's not like I have anything to lose at this point (that server only hosts XO, there is nothing else on it).

Anyway, the fresh 8.2.1 install is definitely the common factor here.

-

Also with iSCSI storage, right?

-

@olivierlambert Not really. This time it's just local ext storage on SATA drives.

-

In LVM or thin? It might be 2 different problems, so I'm trying to sort this out.

-

@olivierlambert Both! I have a mix of both in my servers, and I tested on both.