Lost access to all servers

-

/var/log/xensource.log

I am not sure what to look for, so I hope this is right.

-

Hope someone can help me understand what the issue is

-

Ronan is on vacation now, but he'll take a look when he's back (tomorrow maybe, Monday I'm pretty sure).

-

@olivierlambert Thank you very much for letting me know

-

@fred974 Hi, well first, how many hosts do you have?

We recommend using at least 3 hosts (4 is more robust). Also, what's the replication count on your LINSTOR SR?

I ask these questions because it's possible that a problem on one host caused reboots across the whole pool and, finally, the emergency state.

Now: can you share the kern.log files of each host? And please execute this command on each machine:

    drbdsetup status xcp-persistent-database

-

@ronan-a said in Lost access to all servers:

well first, how many hosts do you have?

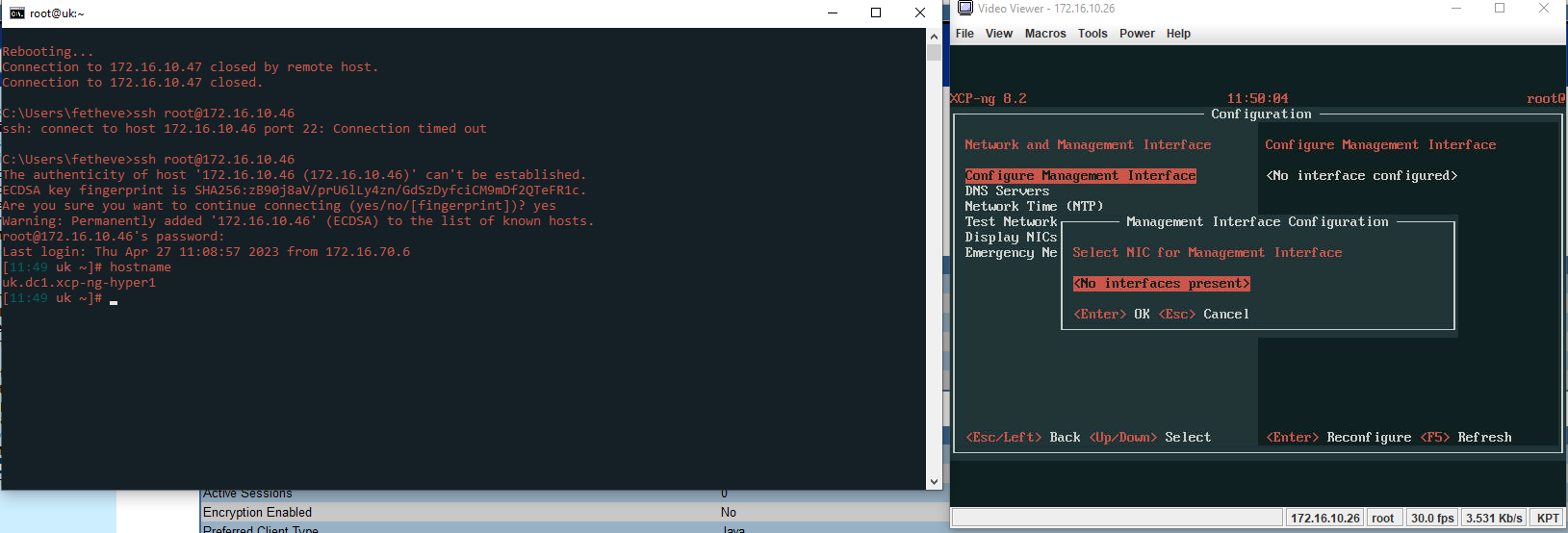

We have 4 hosts.

Host1 was the original master (host2 is the new master) and I think the DRBD replication count is 3 (how can I double check?)

Host1:

    [21:15 uk ~]# drbdsetup status xcp-persistent-database
    xcp-persistent-database role:Secondary
      disk:Diskless quorum:no
      uk.dc1.xcp-ng-hyper2 connection:Connecting
      uk.dc1.xcp-ng-hyper3 connection:Connecting
      uk.dc1.xcp-ng-hyper4 connection:Connecting

Hosts 2, 3 and 4 have:

    [21:18 uk ~]# drbdsetup status xcp-persistent-database
    # No currently configured DRBD found.
    xcp-persistent-database: No such resource

kern.log files host1: host1_kern.log.txt

kern.log files host2: host2_kern.log.txt

kern.log files host3: host3_kern.log.txt

kern.log files host4: host4_kern.log.txt

Our monitor reported the first VM being down at 11am, which is reflected in the log file. We also take hourly snapshots, so I was wondering if this could also be the reason why. I hope the files above can help us understand the issue. Also, should I put host1 back as master?

Thank you
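For anyone comparing `drbdsetup status` output across several hosts, the two warning signs in the host1 output above are `quorum:no` and peers stuck in a state other than `Connected`. As a rough sketch only (the function name `drbd_problems` is made up for illustration, and it assumes the usual text layout of `drbdsetup status`, with one peer per line), a small shell filter can pull out just those items:

```shell
#!/bin/sh
# Hypothetical helper (not part of DRBD or LINSTOR): reads the text output of
# `drbdsetup status <resource>` on stdin and prints only the warning signs.
drbd_problems() {
    awk '{
        for (i = 1; i <= NF; i++) {
            if ($i == "quorum:no") {
                print "no-quorum"
            } else if ($i ~ /^connection:/ && $i != "connection:Connected") {
                # $1 is the peer name on a peer line; drop the "connection:" prefix
                print "peer " $1 " " substr($i, 12)
            }
        }
    }'
}

# Example (run on each host):
#   drbdsetup status xcp-persistent-database | drbd_problems
```

An empty result only means that neither pattern was found in the text it was given; a host that reports "No such resource" produces no output here either, so the raw command output is still worth reading.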

-

@fred974 I'll take a look at the logs. Thanks. What's the output of lvs? If the database is not active, execute: vgchange -ay linstor_group.

-

@ronan-a said in Lost access to all servers:

Thanks. What's the output of lvs

host1

    [11:25 uk ~]# lvs
    Device read short 82432 bytes remaining
    Device read short 40960 bytes remaining
    Device read short 40960 bytes remaining
    Device read short 40960 bytes remaining
    Device read short 40960 bytes remaining
    Device read short 98304 bytes remaining

| LV | VG | Attr | LSize | Pool | Origin | Data% | Meta% | Move | Log | Cpy%Sync | Convert |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| MGT | VG_XenStorage-f7d16827-19e0-c57d-a720-c7fba180d4af | -wi------- | 4.00m | | | | | | | | |
| 28b8eb58-a6a2-c2fa-ad1e-b339b531330f | XSLocalEXT-28b8eb58-a6a2-c2fa-ad1e-b339b531330f | -wi-ao---- | <517.40g | | | | | | | | |
| thin_device | linstor_group | twi-aotz-- | 2.18t | | | 3.28 | 12.11 | | | | |
| xcp-persistent-ha-statefile_00000 | linstor_group | Vwi-a-tz-- | 8.00m | thin_device | | 50.00 | | | | | |
| xcp-persistent-redo-log_00000 | linstor_group | Vwi-a-tz-- | 260.00m | thin_device | | 2.31 | | | | | |
| xcp-volume-126b1370-6042-40ce-8184-22a771fbf1e4_00000 | linstor_group | Vwi-a-tz-- | <40.10g | thin_device | | 0.11 | | | | | |
| xcp-volume-24413a81-84b6-4242-a245-6076d5670bb4_00000 | linstor_group | Vwi-a-tz-- | 20.05g | thin_device | | 42.69 | | | | | |
| xcp-volume-3c45b809-33b7-40a3-a602-01e1511327e7_00000 | linstor_group | Vwi-a-tz-- | 10.03g | thin_device | | 45.23 | | | | | |
| xcp-volume-46caa8c3-2585-4296-a756-2d96cf2141df_00000 | linstor_group | Vwi-a-tz-- | <40.10g | thin_device | | 8.10 | | | | | |
| xcp-volume-6b125e04-7134-40e3-85ce-19417c186ac5_00000 | linstor_group | Vwi-a-tz-- | <50.12g | thin_device | | 54.75 | | | | | |
| xcp-volume-78744846-0432-44e6-a135-021f6b5dc072_00000 | linstor_group | Vwi-a-tz-- | 20.00m | thin_device | | 90.00 | | | | | |
| xcp-volume-7a83c50f-6bd5-4d7e-89a5-c3dee95bdd0b_00000 | linstor_group | Vwi-a-tz-- | <40.10g | thin_device | | 1.13 | | | | | |
| xcp-volume-91a4068e-73cc-402f-87eb-2f631e66d6e2_00000 | linstor_group | Vwi-a-tz-- | 20.05g | thin_device | | 64.23 | | | | | |
| xcp-volume-ac921e7e-71ab-4ee9-8d61-5a55fe5fc369_00000 | linstor_group | Vwi-a-tz-- | <40.10g | thin_device | | 0.11 | | | | | |
| xcp-volume-c1a113f6-9d1e-45f6-9b7d-656327523ce3_00000 | linstor_group | Vwi-a-tz-- | 20.00m | thin_device | | 90.00 | | | | | |
| xcp-volume-c31378ba-1ec6-4756-ab54-67c49b2ecd51_00000 | linstor_group | Vwi-a-tz-- | <40.10g | thin_device | | 17.40 | | | | | |
| xcp-volume-d017c7e9-c2bc-422e-a94c-580d7001f5d0_00000 | linstor_group | Vwi-a-tz-- | 20.00m | thin_device | | 90.00 | | | | | |
| xcp-volume-d0b249b1-fb43-4013-bd6e-67c5fbdcd9b5_00000 | linstor_group | Vwi-a-tz-- | 20.00m | thin_device | | 90.00 | | | | | |
| xcp-volume-d8d37107-bb28-4884-9fb2-e771b4df1c70_00000 | linstor_group | Vwi-a-tz-- | 10.03g | thin_device | | 45.33 | | | | | |
| xcp-volume-fb436790-958a-46a3-b38f-aaca7d6738c8_00000 | linstor_group | Vwi-a-tz-- | <50.12g | thin_device | | 0.11 | | | | | |
| xcp-volume-fec259ee-bee0-4118-b5ba-09035aad8ca2_00000 | linstor_group | Vwi-a-tz-- | 10.03g | thin_device | | 44.47 | | | | | |

host2

    [11:28 uk ~]# lvs
    Device read short 82432 bytes remaining
    Device read short 40960 bytes remaining
    Device read short 40960 bytes remaining
    Device read short 40960 bytes remaining
    Device read short 40960 bytes remaining
    Device read short 98304 bytes remaining

| LV | VG | Attr | LSize | Pool | Origin | Data% | Meta% | Move | Log | Cpy%Sync | Convert |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| MGT | VG_XenStorage-f7d16827-19e0-c57d-a720-c7fba180d4af | -wi-a----- | 4.00m | | | | | | | | |
| ae8a3b6f-b412-0294-43f6-6c11250c6927 | XSLocalEXT-ae8a3b6f-b412-0294-43f6-6c11250c6927 | -wi-ao---- | <517.40g | | | | | | | | |
| thin_device | linstor_group | twi-aotz-- | 2.18t | | | 3.49 | 12.21 | | | | |
| xcp-persistent-database_00000 | linstor_group | Vwi-a-tz-- | 1.00g | thin_device | | 6.03 | | | | | |
| xcp-persistent-ha-statefile_00000 | linstor_group | Vwi-a-tz-- | 8.00m | thin_device | | 50.00 | | | | | |
| xcp-persistent-redo-log_00000 | linstor_group | Vwi-a-tz-- | 260.00m | thin_device | | 2.31 | | | | | |
| xcp-volume-24413a81-84b6-4242-a245-6076d5670bb4_00000 | linstor_group | Vwi-a-tz-- | 20.05g | thin_device | | 42.69 | | | | | |
| xcp-volume-2ac918c0-1feb-4ad9-97d6-dcc561832b5d_00000 | linstor_group | Vwi-a-tz-- | 10.03g | thin_device | | 73.19 | | | | | |
| xcp-volume-3c45b809-33b7-40a3-a602-01e1511327e7_00000 | linstor_group | Vwi-a-tz-- | 10.03g | thin_device | | 45.23 | | | | | |
| xcp-volume-3e025e5e-c339-4e0c-b8ca-eb4e509ce24d_00000 | linstor_group | Vwi-a-tz-- | <4.02g | thin_device | | 51.99 | | | | | |
| xcp-volume-46caa8c3-2585-4296-a756-2d96cf2141df_00000 | linstor_group | Vwi-a-tz-- | <40.10g | thin_device | | 8.10 | | | | | |
| xcp-volume-6b125e04-7134-40e3-85ce-19417c186ac5_00000 | linstor_group | Vwi-a-tz-- | <50.12g | thin_device | | 54.75 | | | | | |
| xcp-volume-78744846-0432-44e6-a135-021f6b5dc072_00000 | linstor_group | Vwi-a-tz-- | 20.00m | thin_device | | 90.00 | | | | | |
| xcp-volume-7a83c50f-6bd5-4d7e-89a5-c3dee95bdd0b_00000 | linstor_group | Vwi-a-tz-- | <40.10g | thin_device | | 1.13 | | | | | |
| xcp-volume-9e32d56e-7c7d-443b-955a-57015b968375_00000 | linstor_group | Vwi-a-tz-- | 6.02g | thin_device | | 96.63 | | | | | |
| xcp-volume-ac921e7e-71ab-4ee9-8d61-5a55fe5fc369_00000 | linstor_group | Vwi-a-tz-- | <40.10g | thin_device | | 0.11 | | | | | |
| xcp-volume-c176df5f-5ef6-46b3-841e-93ab0b5af30e_00000 | linstor_group | Vwi-a-tz-- | <40.10g | thin_device | | 11.33 | | | | | |
| xcp-volume-c31378ba-1ec6-4756-ab54-67c49b2ecd51_00000 | linstor_group | Vwi-a-tz-- | <40.10g | thin_device | | 17.40 | | | | | |
| xcp-volume-d017c7e9-c2bc-422e-a94c-580d7001f5d0_00000 | linstor_group | Vwi-a-tz-- | 20.00m | thin_device | | 90.00 | | | | | |
| xcp-volume-f6901916-1ce0-4757-88b6-642b96c4ab80_00000 | linstor_group | Vwi-a-tz-- | <40.10g | thin_device | | 6.03 | | | | | |
| xcp-volume-fb436790-958a-46a3-b38f-aaca7d6738c8_00000 | linstor_group | Vwi-a-tz-- | <50.12g | thin_device | | 0.11 | | | | | |
| xcp-volume-fec259ee-bee0-4118-b5ba-09035aad8ca2_00000 | linstor_group | Vwi-a-tz-- | 10.03g | thin_device | | 44.47 | | | | | |

host3

    [11:25 uk ~]# lvs
    Device read short 82432 bytes remaining
    Device read short 40960 bytes remaining
    Device read short 40960 bytes remaining
    Device read short 40960 bytes remaining
    Device read short 40960 bytes remaining
    Device read short 98304 bytes remaining

| LV | VG | Attr | LSize | Pool | Origin | Data% | Meta% | Move | Log | Cpy%Sync | Convert |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| MGT | VG_XenStorage-f7d16827-19e0-c57d-a720-c7fba180d4af | -wi------- | 4.00m | | | | | | | | |
| 5792308f-7a3c-e62d-07c5-21ac24d3a56a | XSLocalEXT-5792308f-7a3c-e62d-07c5-21ac24d3a56a | -wi-ao---- | <517.40g | | | | | | | | |
| thin_device | linstor_group | twi-aotz-- | 2.18t | | | 3.54 | 12.23 | | | | |
| xcp-persistent-database_00000 | linstor_group | Vwi-a-tz-- | 1.00g | thin_device | | 6.03 | | | | | |
| xcp-persistent-ha-statefile_00000 | linstor_group | Vwi-a-tz-- | 8.00m | thin_device | | 50.00 | | | | | |
| xcp-persistent-redo-log_00000 | linstor_group | Vwi-a-tz-- | 260.00m | thin_device | | 2.31 | | | | | |
| xcp-volume-126b1370-6042-40ce-8184-22a771fbf1e4_00000 | linstor_group | Vwi-a-tz-- | <40.10g | thin_device | | 0.11 | | | | | |
| xcp-volume-24413a81-84b6-4242-a245-6076d5670bb4_00000 | linstor_group | Vwi-a-tz-- | 20.05g | thin_device | | 42.69 | | | | | |
| xcp-volume-2ac918c0-1feb-4ad9-97d6-dcc561832b5d_00000 | linstor_group | Vwi-a-tz-- | 10.03g | thin_device | | 73.19 | | | | | |
| xcp-volume-3e025e5e-c339-4e0c-b8ca-eb4e509ce24d_00000 | linstor_group | Vwi-a-tz-- | <4.02g | thin_device | | 51.99 | | | | | |
| xcp-volume-46caa8c3-2585-4296-a756-2d96cf2141df_00000 | linstor_group | Vwi-a-tz-- | <40.10g | thin_device | | 8.10 | | | | | |
| xcp-volume-6b125e04-7134-40e3-85ce-19417c186ac5_00000 | linstor_group | Vwi-a-tz-- | <50.12g | thin_device | | 54.75 | | | | | |
| xcp-volume-91a4068e-73cc-402f-87eb-2f631e66d6e2_00000 | linstor_group | Vwi-a-tz-- | 20.05g | thin_device | | 64.23 | | | | | |
| xcp-volume-9e32d56e-7c7d-443b-955a-57015b968375_00000 | linstor_group | Vwi-a-tz-- | 6.02g | thin_device | | 96.63 | | | | | |
| xcp-volume-c176df5f-5ef6-46b3-841e-93ab0b5af30e_00000 | linstor_group | Vwi-a-tz-- | <40.10g | thin_device | | 11.33 | | | | | |
| xcp-volume-c1a113f6-9d1e-45f6-9b7d-656327523ce3_00000 | linstor_group | Vwi-a-tz-- | 20.00m | thin_device | | 90.00 | | | | | |
| xcp-volume-d0b249b1-fb43-4013-bd6e-67c5fbdcd9b5_00000 | linstor_group | Vwi-a-tz-- | 20.00m | thin_device | | 90.00 | | | | | |
| xcp-volume-d8d37107-bb28-4884-9fb2-e771b4df1c70_00000 | linstor_group | Vwi-a-tz-- | 10.03g | thin_device | | 45.33 | | | | | |
| xcp-volume-f6901916-1ce0-4757-88b6-642b96c4ab80_00000 | linstor_group | Vwi-a-tz-- | <40.10g | thin_device | | 6.03 | | | | | |
| xcp-volume-fb436790-958a-46a3-b38f-aaca7d6738c8_00000 | linstor_group | Vwi-a-tz-- | <50.12g | thin_device | | 0.11 | | | | | |

host4

    [11:25 uk ~]# lvs
    Device read short 82432 bytes remaining
    Device read short 40960 bytes remaining
    Device read short 40960 bytes remaining
    Device read short 40960 bytes remaining
    Device read short 40960 bytes remaining
    Device read short 98304 bytes remaining

| LV | VG | Attr | LSize | Pool | Origin | Data% | Meta% | Move | Log | Cpy%Sync | Convert |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| MGT | VG_XenStorage-f7d16827-19e0-c57d-a720-c7fba180d4af | -wi------- | 4.00m | | | | | | | | |
| 3d07204c-eec9-caf1-f86a-fab419537889 | XSLocalEXT-3d07204c-eec9-caf1-f86a-fab419537889 | -wi-ao---- | <517.40g | | | | | | | | |
| thin_device | linstor_group | twi-aotz-- | 2.18t | | | 2.52 | 11.73 | | | | |
| xcp-persistent-database_00000 | linstor_group | Vwi-a-tz-- | 1.00g | thin_device | | 6.03 | | | | | |
| xcp-volume-126b1370-6042-40ce-8184-22a771fbf1e4_00000 | linstor_group | Vwi-a-tz-- | <40.10g | thin_device | | 0.11 | | | | | |
| xcp-volume-2ac918c0-1feb-4ad9-97d6-dcc561832b5d_00000 | linstor_group | Vwi-a-tz-- | 10.03g | thin_device | | 73.19 | | | | | |
| xcp-volume-3c45b809-33b7-40a3-a602-01e1511327e7_00000 | linstor_group | Vwi-a-tz-- | 10.03g | thin_device | | 45.23 | | | | | |
| xcp-volume-3e025e5e-c339-4e0c-b8ca-eb4e509ce24d_00000 | linstor_group | Vwi-a-tz-- | <4.02g | thin_device | | 51.99 | | | | | |
| xcp-volume-78744846-0432-44e6-a135-021f6b5dc072_00000 | linstor_group | Vwi-a-tz-- | 20.00m | thin_device | | 90.00 | | | | | |
| xcp-volume-7a83c50f-6bd5-4d7e-89a5-c3dee95bdd0b_00000 | linstor_group | Vwi-a-tz-- | <40.10g | thin_device | | 1.13 | | | | | |
| xcp-volume-91a4068e-73cc-402f-87eb-2f631e66d6e2_00000 | linstor_group | Vwi-a-tz-- | 20.05g | thin_device | | 64.23 | | | | | |
| xcp-volume-9e32d56e-7c7d-443b-955a-57015b968375_00000 | linstor_group | Vwi-a-tz-- | 6.02g | thin_device | | 96.63 | | | | | |
| xcp-volume-ac921e7e-71ab-4ee9-8d61-5a55fe5fc369_00000 | linstor_group | Vwi-a-tz-- | <40.10g | thin_device | | 0.11 | | | | | |
| xcp-volume-c176df5f-5ef6-46b3-841e-93ab0b5af30e_00000 | linstor_group | Vwi-a-tz-- | <40.10g | thin_device | | 11.33 | | | | | |
| xcp-volume-c1a113f6-9d1e-45f6-9b7d-656327523ce3_00000 | linstor_group | Vwi-a-tz-- | 20.00m | thin_device | | 90.00 | | | | | |
| xcp-volume-c31378ba-1ec6-4756-ab54-67c49b2ecd51_00000 | linstor_group | Vwi-a-tz-- | <40.10g | thin_device | | 17.40 | | | | | |
| xcp-volume-d017c7e9-c2bc-422e-a94c-580d7001f5d0_00000 | linstor_group | Vwi-a-tz-- | 20.00m | thin_device | | 90.00 | | | | | |
| xcp-volume-d0b249b1-fb43-4013-bd6e-67c5fbdcd9b5_00000 | linstor_group | Vwi-a-tz-- | 20.00m | thin_device | | 90.00 | | | | | |
| xcp-volume-d8d37107-bb28-4884-9fb2-e771b4df1c70_00000 | linstor_group | Vwi-a-tz-- | 10.03g | thin_device | | 45.33 | | | | | |
| xcp-volume-f6901916-1ce0-4757-88b6-642b96c4ab80_00000 | linstor_group | Vwi-a-tz-- | <40.10g | thin_device | | 6.03 | | | | | |
| xcp-volume-fec259ee-bee0-4118-b5ba-09035aad8ca2_00000 | linstor_group | Vwi-a-tz-- | 10.03g | thin_device | | 44.47 | | | | | |

@ronan-a said in Lost access to all servers:

If the database is not active, execute: vgchange -ay linstor_group.

How do I know if the database is active or not?
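One way to answer this, offered as a sketch rather than official guidance: in `lvs` output, the fifth character of the Attr column is `a` when a logical volume is active (for example `Vwi-a-tz--`) and `-` when it is not (for example `-wi-------`). The helper below uses a hypothetical name, `lv_active_report`; it only reads the two-column text produced by `lvs --noheadings -o lv_name,lv_attr` and labels each volume.

```shell
#!/bin/sh
# Hypothetical helper (not part of LVM): reads "<lv_name> <lv_attr>" lines on
# stdin and prints each volume name followed by "active" or "inactive".
lv_active_report() {
    awk 'NF >= 2 {
        # The 5th character of lv_attr is the state flag; "a" means active.
        state = (substr($2, 5, 1) == "a") ? "active" : "inactive"
        print $1, state
    }'
}

# Example (run on each host):
#   lvs --noheadings -o lv_name,lv_attr linstor_group | lv_active_report
```

A volume that is missing from the listing altogether, as xcp-persistent-database_00000 is in the host1 output above, will not show up in this report at all, so it helps to look for the expected name as well as its state.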

-

@ronan-a did you get a chance to review the log? Did you see anything that can help me move forward?

Thank you

-

@fred974 I was a little bit busy; I can take a look at your problems tomorrow.

In the worst case, do you have a way to open an SSH connection to your servers?

-

@ronan-a thank you very much. Do you want me to open a tunnel via Xen Orchestra?

-

@fred974 If you can, yes.

Send me the code using the chat.

-

@ronan-a Thank you very much for helping to fix my pool

-

@fred974

It would be great if you could write a few lines about the issue and how it was fixed.

-

@KPS The DRBD volume of the LINSTOR database was not created by the driver. We just restarted a few services and the hosts to fix that. Unfortunately, we have no explanation for what could have happened, so I don't have much more useful information to give. However, if someone runs into this situation again, I can help them and see whether we can obtain more useful logs.

-

@ronan-a I will let you and the community know if I run into this problem again. I just found out that one of our NFS servers kept rebooting around the same time, so I do wonder whether it could have contributed to the issue if the hosts couldn't connect to it. I am not advanced enough to know if there is any correlation.

-

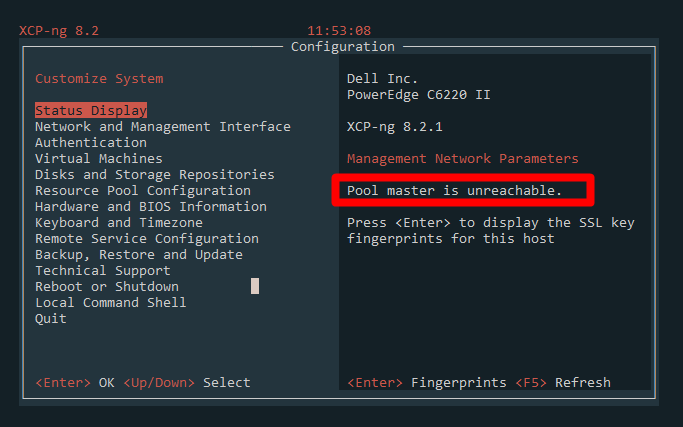

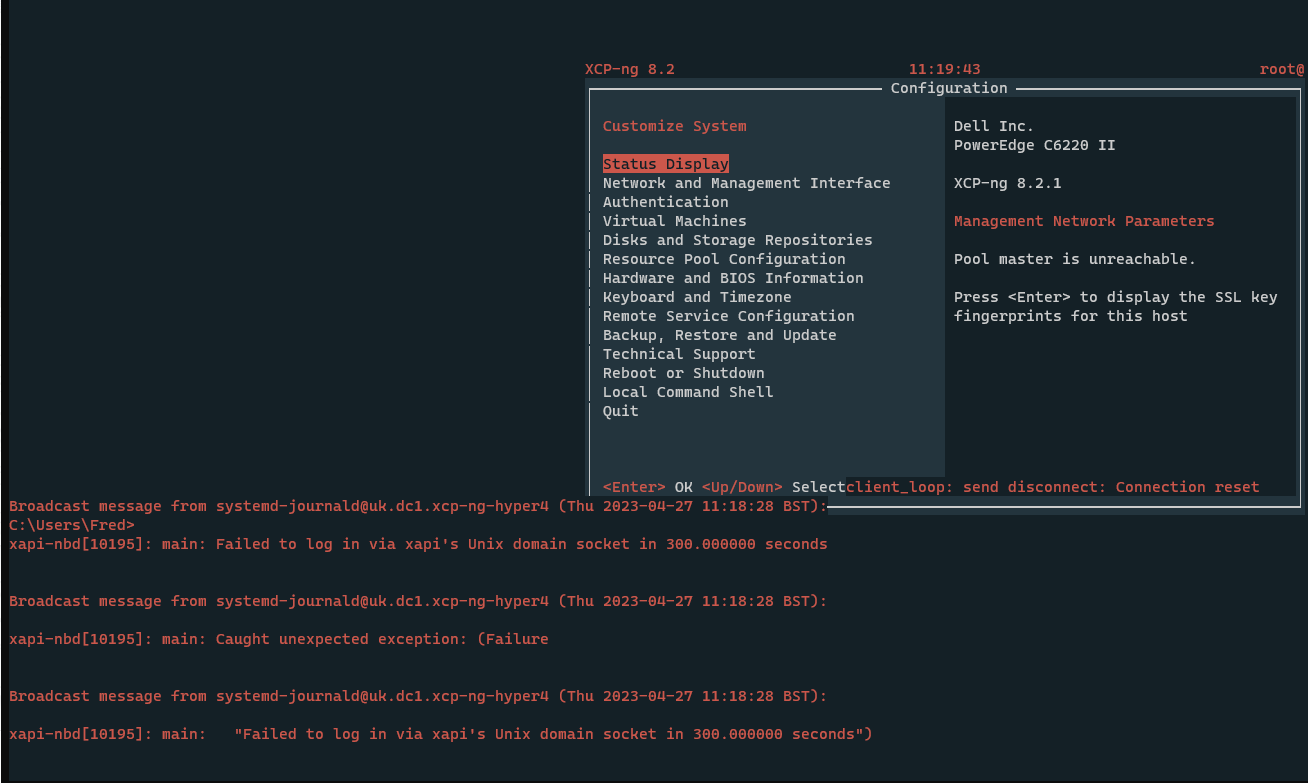

I may be having a similar issue to the one you helped @fred974 with last month. One (xcp-ng3) of the three hosts in my lab environment dropped out of the SR in the past day or so. I believe it may have coincided with a failed live migration to that host and/or a forced reboot of the host after that failed migration. Here's what I am seeing on the affected host:

    [09:14 xcp-ng3 ~]# linstor node list
    Error: Unable to connect to linstor://localhost:3370: [Errno 99] Cannot assign requested address
    [09:14 xcp-ng3 ~]# drbdadm status
    xcp-persistent-database role:Secondary
      disk:UpToDate quorum:no
      xcp-ng1 connection:StandAlone
      xcp-ng2 connection:StandAlone

The other two hosts, xcp-ng1 and xcp-ng2, are still operating without issue. XO sees xcp-ng3 and does not throw any errors unless I attempt any action that utilizes the SR (makes sense). It seems apparent that the linstor controller is not running, as any linstor command results in the connection error above. Thoughts? Any other logs you need?

FWIW, I'd normally just wipe the host and reinstall, but I wanted to bring it to your attention in case there's any value to the project.

-

I think that might be interesting for @ronan-a indeed