Suggestions for new servers

-

I'm planning to buy new servers with NVMe (U.2) based local SR (and install XCP-ng 8.3).

I have some questions:

- Can I use a single software (mdadm) RAID1 or RAID10 volume for both the OS and the SR?

- Is it better to use two distinct (mdadm) volumes for the OS (2 x SSD RAID1) and the SR (2 x NVMe RAID1)?

- Is VROC an option (I think not, because it is software RAID)?

- Is a hardware controller an option worth evaluating?

- Is there any size limit (I'm thinking of using 2 x 7.68 TB U.2 NVMe Samsung PM9A3 DC Series)?

- I will opt for a CPU (2 x Intel Gold 5317) with good single-thread performance to maximize tapdisk throughput. Is that a good idea?

- Other parts: Intel X550T2 2 x 10 GbE, 256 GB DDR4-3200 RAM, redundant power supply

Any other suggestions about storage for a new server?

Thanks

-

Short answer: pretty good choice.

No VROC, mdadm is OK. In general I prefer to split between system disks and storage disks, because it means you have a nice flexibility for storage without any interference on the system part (RAID1 for the system is perfect, storage depends on what you want to achieve). But it's more convenience related than anything else. Intel NIC: it's OK.

-

I'll try to answer with my opinions in order:

- Yes, you can, but IMO this should be avoided. It's not super risky or anything, but it's nice to have things separate

- I think so yes

- As @olivierlambert mentioned, no on VROC

- All my XCP-ng installs in prod use a hardware RAID controller instead of software RAID. It's generally preferred for production use cases (in fact, I think the Vates team told me that in a ticket). Not that software RAID can't work, it's what I use in my homelab with no issues, but in production it's nice to have something I am more confident in

- In terms of disks, I don't believe so. In terms of VHDs the limit is 2 TiB, just FYI

- Gold 5317 should be solid. I'd also mention that if you are looking for solid pre-made servers, 45Drives makes some great options, like their C8. The only issue with it is PCIe airflow: the fans for that are connected to the disk backplane, so they run at the lowest possible speed and you can't change it. That can be fixed pretty easily by moving the fans to the motherboard fan headers for higher speed, though

- All looks good. X550T2s are fantastic and should be reliable. Like I said above, though, be sure you have good PCIe airflow
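To put the 2 TiB VHD limit next to the disk size asked about, here is a rough Python sketch of the arithmetic. It assumes the limit is exactly 2 TiB, that a RAID1 pair exposes the capacity of one disk, and it ignores filesystem and SR overhead; the function name `vhds_needed` is made up for illustration.

```python
import math

TIB = 2**40   # bytes in one tebibyte (binary unit, used for the VHD limit)
TB = 10**12   # bytes in one terabyte (decimal unit, used by disk vendors)


def vhds_needed(disk_tb: float, vhd_limit_tib: float = 2.0) -> int:
    """Number of maximum-size VHDs it would take to span disk_tb of capacity."""
    disk_bytes = disk_tb * TB
    return math.ceil(disk_bytes / (vhd_limit_tib * TIB))


# A 2 x 7.68 TB RAID1 mirror exposes roughly one disk's worth of space.
usable_tib = 7.68 * TB / TIB
print(f"{usable_tib:.2f} TiB usable on the mirror")
print(vhds_needed(7.68), "VHDs at the 2 TiB limit would be needed to fill it")
```

So the SR itself can be far larger than any single virtual disk; the limit only matters for a VM that needs one disk bigger than 2 TiB.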

-

Thanks a lot @olivierlambert and @planedrop for your answers.

IMHO with an NVMe (PCIe devices) RAID1 / RAID10 configuration the mdadm overhead on the CPU should be negligible, and reliability should also be very good.

Anyway, I'll investigate the cost of a hardware RAID controller.

-

@Paolo You're not wrong here, it should be reliable and CPU cost should be pretty small, but I still personally always go with hardware RAID for production (unless I'm using a NAS that needs native access to the disks).

-

My new servers will be available next week. The full specs are:

- 2 x CPU Xeon Gold 5317

- 384 GB DDR4-3200 RAM

- 2 x 10Gb Intel X550 cards

- Adaptec SmartRAID 3102E-81

- 2 x 480 GB SSD

- 2 x 12.8 TB NVMe

Planned setup (KISS):

- XCP-ng installed on 2 x 480 GB SSD in RAID1 with the HW controller

- SR on local storage using NVMe units with SW RAID1

- no HA

- single-host pools with VM replication between hosts/pools

- backup on external NFS file server

- networking 10 GbE (XCP-ng hosts, backup file server)

Questions / your opinion about:

- Is version 8.3 suitable for production use?

- How can I download the beta2 ISO?

- I'm thinking of using ZFS/RAID1 instead of mdadm/EXT4 for local storage (with at least 16 GB for Dom0). Is it a good choice?

- Are bonded links (2 x 10Gb) between the XCP-ng server, the switch, and the backup file server useful, or does the max. backup speed remain 10 Gb?

- any other suggestion?

Thanks

-

Why not use HW RAID for both sets of disks? I suspect that this card would support multiple disk arrays. Also, you may want to rethink the use of ZFS. I don't have any experience with it; however, I've read that it can lead to data loss if not set up correctly.

-

I concur with @Danp here, I think you should use the hardware RAID card for both sets of disks. ZFS is great and I'm quite experienced with it overall (though not with XCP-ng specifically), but hardware RAID makes more sense for a hypervisor host IMO.

-

I gave up on the hardware controller because in case of failure I need to have one in reserve for data recovery.

-

@dariosplit While that is true, it's still safer to do that than software RAID, at least in this setup. In my experience hardware RAID controller failures are super rare, I've literally never come across a failed one that wasn't like 10+ years old.

-

@planedrop I also don't see the point of XCP-ng installed on SSD in RAID1 with a HW controller. When and if it breaks, install it again.

I also prefer SR on local storage using one NVMe unit and continuous replication to another.

-

@dariosplit I mean, it would still be best to avoid breaking it in the first place. It's not always going to be as simple as reinstalling and you're good to go; things can get damaged if an SSD dies during use, and you could end up needing a restore from backup. I'd just avoid that in production.

-

I have to say, the recommendation to use hardware-based RAID is somewhat shocking. You're throwing away massive amounts of performance by funneling potentially hundreds of lanes' worth of NVMe disks into a single controller with a measly 16 lanes. SDS is the future, whether that be through something like md or ZFS.

-

@xxbiohazrdxx Sure, but why do you need a massive amount of storage on a local hypervisor? That's when it's time for a SAN.

-

@planedrop Well, more importantly, with hardware RAID you often get blind-swap and hot-swap capabilities.

With software RAID, you need to prepare the system by telling md to eject a disk and then replace said disk in the array with its replacement.

Software RAID is very good, but has its drawbacks.
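For anyone unfamiliar with the md steps mentioned above, here is a minimal Python sketch that only prints the usual mdadm manage-mode commands (mark the member failed, remove it, add the replacement) without running anything. The array and device names are placeholders, not taken from any server in this thread, and the helper name `md_replace_commands` is made up.

```python
import shlex
from typing import List


def md_replace_commands(array: str, failed: str, replacement: str) -> List[List[str]]:
    """Build (but do not run) the mdadm commands to swap one array member."""
    return [
        ["mdadm", array, "--fail", failed],      # mark the old member as faulty
        ["mdadm", array, "--remove", failed],    # detach it from the array
        ["mdadm", array, "--add", replacement],  # add the new disk; resync starts
    ]


# Placeholder names: adjust to the real array and partitions before use.
for cmd in md_replace_commands("/dev/md0", "/dev/nvme0n1p1", "/dev/nvme2n1p1"):
    print(shlex.join(cmd))
```

Printing rather than executing is deliberate: the commands need root and the correct device names, and the physical swap happens between the remove and add steps.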

-

@DustinB This is also a good point, they have their ups and downs. I do think eventually hardware RAID will completely die, but that's still a ways off. Software is certainly what I would call overall superior, but hardware RAID still has good use cases for the time being.

-

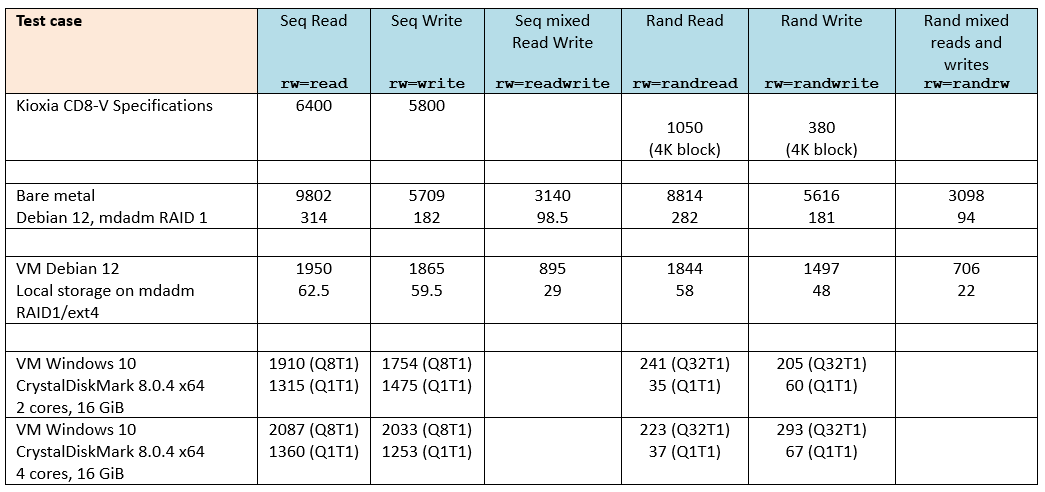

I've done some benchmarks with my new servers and want to share results with you.

Server:

- CPU: 2 x Intel Gold 5317

- RAM: 512GB DDR4-3200

- XCP-ng: 8.3-beta2

fio parameters common to all tests:

--direct=1 --rw=randwrite --filename=/mnt/md0/test.io --size=50G --ioengine=libaio --iodepth=64 --time_based --numjobs=4 --bs=32K --runtime=60 --eta-newline=10

VM Debian: 4 vCPU, 4 GB Memory, tools installed

VM Windows: 2/4 vCPU, 4 GB Memory, tools installed

Results:

- First line: Throughput/Bandwidth (MiB/s)

- Second line: IOPS in thousands (KIOPS, Linux only)

Considerations:

- On bare metal I get full disk performance: approx. double read speed due to RAID1.

- On VM the bandwidth and IOPS are approx 20% of bare metal values

- On VM the bottleneck is the tapdisk process (CPU at 100%), which can handle approx. 1900 MB/s
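As a rough cross-check between the bandwidth and IOPS lines, the two are tied together by the block size. The Python sketch below converts the approximate tapdisk ceiling into IOPS at the 32K block size used in the tests; it assumes MB means 10^6 bytes and 32K means 32 KiB, and the function name is only illustrative.

```python
def iops_from_bandwidth(bytes_per_second: float, block_size_bytes: int) -> float:
    """IOPS implied by a sustained bandwidth at a fixed block size."""
    return bytes_per_second / block_size_bytes


BLOCK_SIZE = 32 * 1024           # --bs=32K, taken as 32 KiB
TAPDISK_CEILING = 1900 * 10**6   # approx. 1900 MB/s, taken as decimal megabytes

iops = iops_from_bandwidth(TAPDISK_CEILING, BLOCK_SIZE)
print(f"about {iops / 1000:.0f} KIOPS at 32K blocks")
```

If the bandwidth figure is read as MiB/s instead, the result is a few percent higher, so treat the number as an order-of-magnitude check only.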

-

Just wanted to say thank you for this thread. I am in the midst of evaluating ESXi alternatives and trying Proxmox as well as XCP-ng.

I just installed XCP-ng this morning and have been trying to figure out if I should be doing hardware RAID vs ZFS RAID. This thread provided the answer I needed. Hardware RAID on my Dell PowerEdge servers is easy and reliable.

Thank you again!
