Epyc VM to VM networking slow

-

Please note that we are actively investigating it, and believe me, it's costly (25k€ already invested to track this down). So as you can see, it's a priority and something we are actively working on. AMD is also aware of it.

-

@bleader Had some time to test this on an AMD system (HP T740 Thin Client). Hopefully it helps a bit, even if it is not an EPYC CPU.

Host:

- CPU: AMD Ryzen Embedded V1756B

- Number of sockets: 1

- CPU pinning: no

- XCP-NG version: 8.3 beta 1 (updated with `yum update` as of today)

- Output of `xl info -n`:

```
[22:05 hpt740 ~]# xl info -n
host                   : hpt740
release                : 4.19.0+1
version                : #1 SMP Wed Jan 24 17:19:11 CET 2024
machine                : x86_64
nr_cpus                : 8
max_cpu_id             : 15
nr_nodes               : 1
cores_per_socket       : 4
threads_per_core       : 2
cpu_mhz                : 3244.038
hw_caps                : 178bf3ff:7ed8320b:2e500800:244033ff:0000000f:209c01a9:00000000:00000500
virt_caps              : pv hvm hvm_directio pv_directio hap shadow
total_memory           : 30636
free_memory            : 17610
sharing_freed_memory   : 0
sharing_used_memory    : 0
outstanding_claims     : 0
free_cpus              : 0
cpu_topology           :
cpu:    core    socket     node
  0:       0        0        0
  1:       0        0        0
  2:       1        0        0
  3:       1        0        0
  4:       2        0        0
  5:       2        0        0
  6:       3        0        0
  7:       3        0        0
device topology        :
device           node
No device topology data available
numa_info              :
node:    memsize    memfree    distances
   0:      34803      17610      10
xen_major              : 4
xen_minor              : 13
xen_extra              : .5-10.58
xen_version            : 4.13.5-10.58
xen_caps               : xen-3.0-x86_64 hvm-3.0-x86_32 hvm-3.0-x86_32p hvm-3.0-x86_64
xen_scheduler          : credit
xen_pagesize           : 4096
platform_params        : virt_start=0xffff800000000000
xen_changeset          : 708e83f0e7d1, pq 8e58b4872724
xen_commandline        : watchdog ucode=scan dom0_max_vcpus=1-8 crashkernel=256M,below=4G console=vga vga=mode-0x0311 dom0_mem=4294967296B,max:4294967296B
cc_compiler            : gcc (GCC) 11.2.1 20210728 (Red Hat 11.2.1-1)
cc_compile_by          : mockbuild
cc_compile_domain      : [unknown]
cc_compile_date        : Thu Jan 25 10:20:16 CET 2024
build_id               : ae0904d024e04d4daf2ecdfddc37ea146f48d7e1
xend_config_format     : 4
```

Server and client VMs are both Debian 12 (Linux 6.1.0-18-amd64) with 4 cores and 4G RAM.

| Test | xentop (client/server/dom0) | Throughput |
|---|---|---|
| V2V 1T | 90/140/210 | 5.1 Gbits/sec |
| V2V 4T | 150/220/260 | 8.1 Gbits/sec |
| H2V 1T | 0/170/210 | 10.2 Gbits/sec\* |
| H2V 4T | 0/310/340 | 11.9 Gbits/sec\* |

\*: with some spread of CPU utilization and throughput.

Minimum of three runs per test scenario.
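As a rough cross-check on these results, the Python sketch below divides the summed xentop figures (client + server + dom0) by the measured throughput for each scenario. It assumes the three xentop values are CPU percentages (xentop's CPU(%) is summed over a domain's vCPUs, which is why values above 100 are possible) and reads V2V/H2V as VM-to-VM and host-to-VM. The "CPU % per Gbit/s" metric and the helper name `cpu_per_gbit` are hypothetical, for illustration only, and were not measured in the post.

```python
# Hypothetical back-of-envelope metric: total xentop CPU % spent per Gbit/s.
# Values are copied from the results reported above.
RESULTS = {
    # scenario: ((client %, server %, dom0 %), throughput in Gbit/s)
    "V2V 1T": ((90, 140, 210), 5.1),
    "V2V 4T": ((150, 220, 260), 8.1),
    "H2V 1T": ((0, 170, 210), 10.2),
    "H2V 4T": ((0, 310, 340), 11.9),
}


def cpu_per_gbit(cpu: tuple[int, int, int], gbit_s: float) -> float:
    """Summed xentop CPU % divided by throughput (lower = cheaper per bit)."""
    return sum(cpu) / gbit_s


if __name__ == "__main__":
    for name, (cpu, gbit_s) in RESULTS.items():
        print(f"{name}: {sum(cpu)} % total CPU, "
              f"{cpu_per_gbit(cpu, gbit_s):.1f} % per Gbit/s")
```

Taken at face value, the VM-to-VM runs cost about 78 to 86 % CPU per Gbit/s on this Ryzen box, versus about 37 to 55 % for host-to-VM.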

-

We ran tests on Ryzen CPUs and couldn't really reproduce the problem: it seems to be EPYC-specific.

-

@olivierlambert We did talk in DM before; I told him any data is always welcome, especially as I didn't even know this range of CPUs.

-

@gskger It does seem quite a bit lower than the 5950X and the 7600 we tested, but:

- it is a Zen 1, if I'm not mistaken

- in the 4-thread case, with 8 threads on the physical CPU, the VMs and dom0 are actually sharing resources

- for the single-thread case, I guess the CPU generation and memory speed could explain the difference.
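The resource-sharing point in the 4-thread case can be made concrete with a little arithmetic. This Python sketch assumes every vCPU of both 4-vCPU VMs is busy during the test (a simplification) and treats dom0's vCPU count as an unknown: the `dom0_max_vcpus=1-8` option in the `xl info` output only bounds it. The host has 8 hardware threads (`nr_cpus : 8`). The function name `oversubscription` is hypothetical.

```python
# Hypothetical helper: how many possibly-busy vCPUs compete for each hardware thread.
def oversubscription(vm_vcpus: list[int], dom0_vcpus: int, host_threads: int) -> float:
    """Ratio of vCPUs to hardware threads; anything above 1.0 means sharing."""
    return (sum(vm_vcpus) + dom0_vcpus) / host_threads


if __name__ == "__main__":
    # Client and server VMs have 4 vCPUs each; dom0's count is an assumption.
    for dom0_vcpus in (1, 4, 8):
        ratio = oversubscription([4, 4], dom0_vcpus, 8)
        print(f"dom0 with {dom0_vcpus} vCPU(s): {ratio:.3f} vCPUs per hardware thread")
```

Even in the most favourable case (dom0 with a single vCPU) the ratio is above 1, so the guests and dom0 must share hardware threads.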

I would say that this confirms these Ryzen CPUs are not really impacted either.

Thanks for sharing, I'll update the table tomorrow.

-

@bleader you are correct: the V1756B is a low-power (45W TDP) desktop CPU of the AMD Ryzen Embedded V1000 series, based on the Zen microarchitecture, with 4 cores and 8 threads. It operates at a base frequency of 3.25 GHz and a boost frequency of up to 3.6 GHz. The HP T740 thin client is a capable low-power, low-noise computer for running XCP-ng in a homelab, but it's not a real match for serious AMD desktop or server CPUs.

-

@gskger Returning to this:

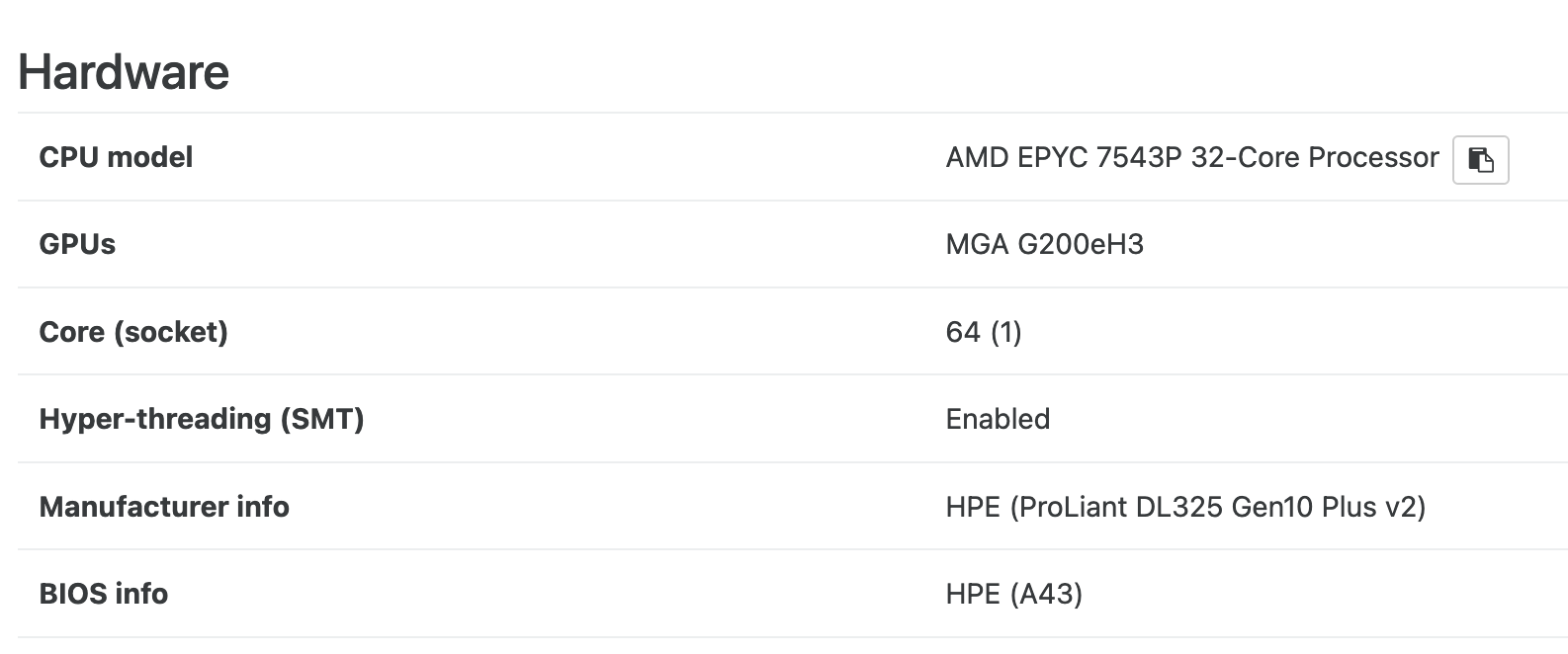

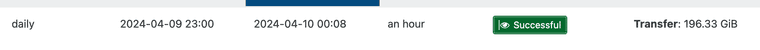

On our business deployment (2 HPE ProLiant DL325 Gen10 Plus v2 servers with AMD EPYC 7543P 32-Core Processors, connected via redundant 10G NICs and switched to 10G NAS units (QNAP, Synology) for storage and backups), we get backup speeds of 80-90 MiB/s at most with NBD.

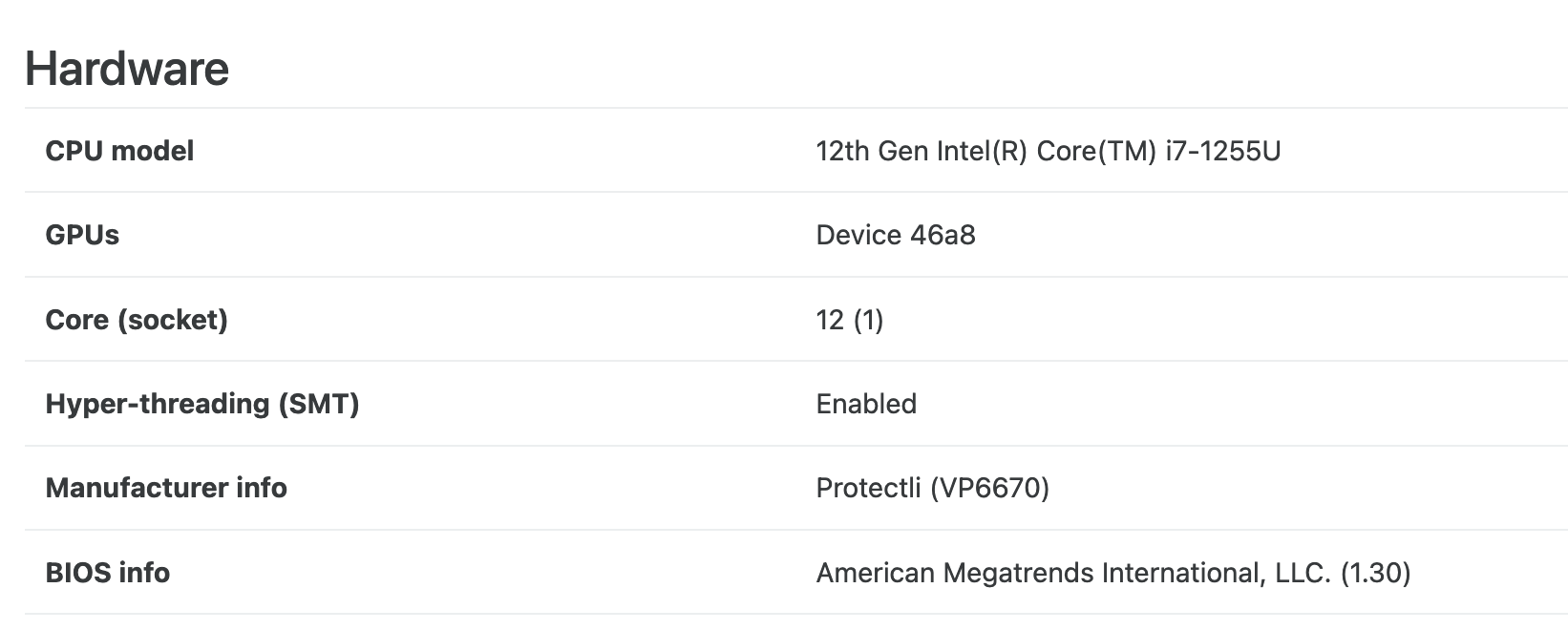

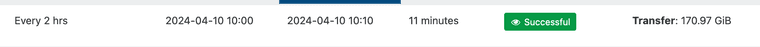

On my homelab, with a Protectli mini PC also connected via 10G to a 10G QNAP, I get 250-300 MiB/s!!!
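The backup figures are quoted in MiB/s, while the iperf results earlier in the thread are in Gbit/s. A small Python sketch to put them on the same scale, assuming binary mebibytes (1 MiB = 1,048,576 bytes), decimal gigabits, and ignoring NFS/TCP protocol overhead; the helper names are hypothetical.

```python
MIB = 1024 * 1024  # bytes in one MiB


def mib_s_to_gbit_s(mib_s: float) -> float:
    """Convert MiB/s (binary megabytes) to Gbit/s (decimal gigabits)."""
    return mib_s * MIB * 8 / 1e9


def link_share(mib_s: float, link_gbit_s: float = 10.0) -> float:
    """Fraction of a nominal link rate in use (protocol overhead ignored)."""
    return mib_s_to_gbit_s(mib_s) / link_gbit_s


if __name__ == "__main__":
    for label, rate in [("EPYC deployment, best case", 90), ("homelab, best case", 300)]:
        print(f"{label}: {rate} MiB/s = {mib_s_to_gbit_s(rate):.2f} Gbit/s "
              f"({link_share(rate):.0%} of 10G)")
```

Under these assumptions, 80-90 MiB/s is roughly 0.67-0.75 Gbit/s (under 8 % of a 10G link), while 250-300 MiB/s is roughly 2.1-2.5 Gbit/s.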

This has really been a problem for us since we started with XCP-ng 1 year ago. Slow backup/restore speeds are a hindrance to our backup strategy.

Now that I have switched my homelab from Proxmox (which I had used for 3 years) to XCP-ng, I stumbled upon this speed difference, and it is incredible.

I wonder whether it is also related to this EPYC networking issue.

P.S.: I have opened a ticket, BUT I wanted to share this here too.

-

@manilx hi, I am working on the backup side; that is a very interesting finding. I have some questions to rule out some hypotheses:

What storage do you use on both sides? iSCSI / NFS?

Is XO running on the master?

-

@bleader do you remember if we also had slower network speeds between a VM and the Dom0, or only between 2 regular guests?

-

Not necessarily. XOA is a VM, but it's communicating with the Dom0, which is indeed a VM, but not a regular one. It would have been interesting to check the result with the XOA VM sitting on a different host from the one it's backing up.

-

@olivierlambert VM to host is impacted too, although less so, reaching over 10 Gbps on a Zen 2 EPYC.

-

@florent Hi,

Both storages are NFS, all connections 10G.

In both cases XO/XOA is running on the master.

-

@olivierlambert I have tested this already. It doesn't matter if the XOA VM is on the master or on another host. Backup speeds are "the same".

-

@olivierlambert As @bleader mentioned, all testing shows that it affects any VM networking at all: VM to VM, VM to host, and VM to external appliances are all equally affected. It's just that the VM-to-VM case gets half the bandwidth of all the other use cases, since it has to handle traffic for both VMs, and as such it gets noticed sooner. But no matter how the VM communicates, there is an upper bandwidth ceiling that is VERY low.

-

@Seneram As explained, we have been living with this for 1 year now, but at the time Vates told us that all was OK and that backup speeds of 80-90 MiB/s were normal.

It was only NOW, with XCP-ng running in my homelab on crap Intel PCs, that I saw that HUGE speed difference. And this thread has opened my eyes as well...

-

While I'm very happy to see this getting some attention now, I am a bit disappointed that this has been reported for so long (easily two years or more) and is only now getting serious attention. Hopefully it will be resolved fairly soon.

That said... if you need high-speed networking in EPYC VMs now, SR-IOV can be your friend. Using ConnectX-4 25Gb cards, I can hit 22-23 Gb/s with guest VMs. Obviously SR-IOV brings along a whole other set of issues, but it's a way to get fast networking today.

-

@JamesG This bug has not been reported for two years. This thread is 6 months old, and our big report has been open for about the same amount of time.

It has had excellent attention since the day we reported it.

-

@Seneram If you search the forum, you'll find other topics that discuss this. In January/February 2023 I reported it myself, because I was trying to build a cluster that needed high-performance networking and found that the VMs couldn't deliver it. While researching the issue then, I seem to recall seeing other topics from a year or so before that.

Just because this one thread isn't two years old doesn't mean this is the only topic reporting the issue.

-

@JamesG As of now, we have already spent roughly 50k€ on this issue (in time and expenses), so your impression that it is not being taken seriously is a bit wrong. If you want us to speed up, I'll be happy to get even more budget.

Chasing these very low-level CPU architecture issues is really costly.