Benchmarks between XCP & TrueNAS

-

@olivierlambert we ran some of the tests twice and noted slightly slower speeds, but overall nothing too noticeable.

The question then is: is it industry practice to use NFS for VHD storage? Given where we are right now this is a bit of a hindsight question, but is what we're seeing above "normal"? How much would a benchmark like this reflect on running VMs? We've got a small handful of VMs in our testbench. I am at least optimistic that the speed difference between 1 concurrent test and 10 concurrent tests indicates that the speed was not capped at, for example, 5 MB/s.

-

Let me rephrase: it's a given that NFS would be slower than local storage. NFS is obviously widely used and I am sure you guys use something similar internally, so have you noticed any critical performance drops which may lead to sluggish general-use VMs over NFS storage?

-

@mauzilla said in Benchmarks between XCP & TrueNAS:

Let me rephrase: it's a given that NFS would be slower than local storage. NFS is obviously widely used and I am sure you guys use something similar internally, so have you noticed any critical performance drops which may lead to sluggish general-use VMs over NFS storage?

Yes, it is a lot slower, but you get thin provisioning and a much simpler network configuration compared to iSCSI or Fibre Channel.

We recently did a benchmark between XCP-ng and VMware, since I had suggested we migrate everything over to XCP-ng due to the licensing changes at VMware, but we changed our minds after the tests.

We even mounted an iSCSI LUN directly in the VM and the performance difference was huge!

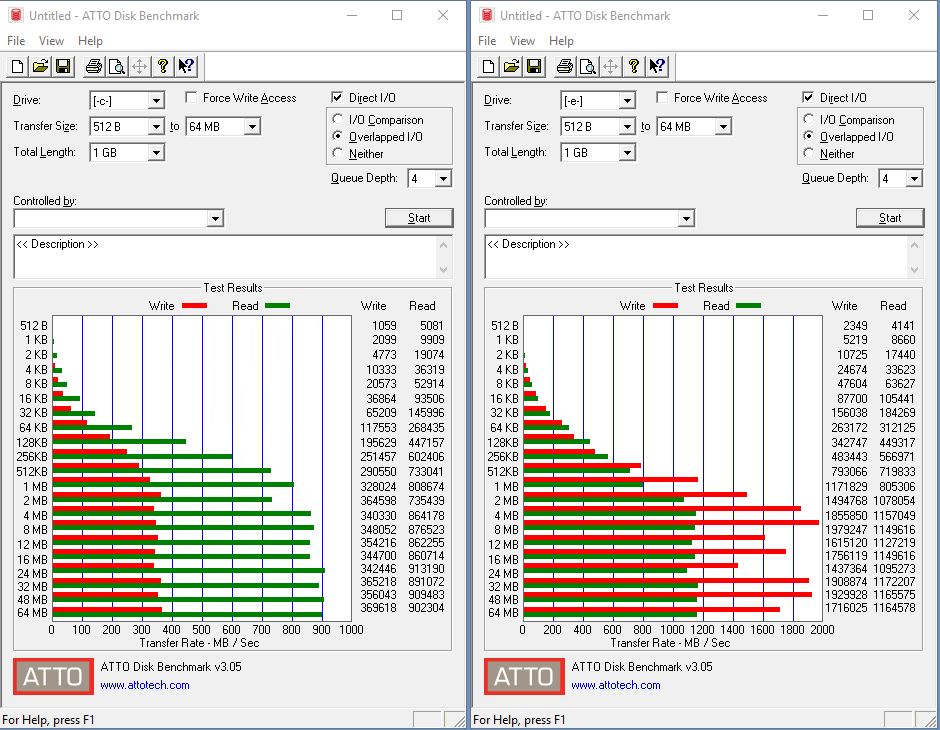

On the left: The VM has a VDI which resides on an NFS share presented by a Dell PowerStore 1000T. Networking on all hosts is 2x10G with MTU 9000.

On the right: The VM has an iSCSI LUN mapped from the same Dell PowerStore 1000T. Network setup is still the same on the hosts (2x10G with MTU 9000).

I think that SMAPIv1 is to blame here, and Vates are working on SMAPIv3, which will probably make a big difference when it comes to disk performance.

We used to use iSCSI, but because we wanted thin provisioning we actually migrated over to NFS even though the performance is not as good.

-

@nikade thank you for your feedback, we're actually kickstarting our migration to thin-provisioned NFS next week (we're currently using local storage, which is as fast as it gets but with obvious redundancy issues) - iSCSI was on the table (we purchased an EqualLogic) but after realizing that thin provisioning won't happen there, NFS seems like the most obvious option.

I guess for running VMs the biggest drawback would be slower boot times perhaps? Once the VM is booted, have you noticed any sluggish performance? It's a bit late in the discussions as our migration starts next week, so we're putting our money on NFS right now.

-

@mauzilla said in Benchmarks between XCP & TrueNAS:

@nikade thank you for your feedback, we're actually kickstarting our migration to thin-provisioned NFS next week (we're currently using local storage, which is as fast as it gets but with obvious redundancy issues) - iSCSI was on the table (we purchased an EqualLogic) but after realizing that thin provisioning won't happen there, NFS seems like the most obvious option.

I guess for running VMs the biggest drawback would be slower boot times perhaps? Once the VM is booted, have you noticed any sluggish performance? It's a bit late in the discussions as our migration starts next week, so we're putting our money on NFS right now.

To be honest, you won't notice under a "normal workload" and I don't think any of our users notice any difference.

It's when you start running disk-intensive stuff that you'll notice it, like database servers or file servers with a lot of activity. For a long time we kept 1 iSCSI LUN per pool for our SQL servers, but we decided to give them a lot of RAM instead and switch over to NFS for those.

-

@nikade thank you so much, this one would have given me a fair amount of sleepless nights for the next week

I knew NFS would in most cases be the most viable solution, but as my experience has mostly been with local storage, running some benchmarks had me a bit worried.

From where we are now (local storage), the jump to network storage is the obvious choice (redundancy, replication, can run shared pools instead of independent hosts, etc.). Fortunately we don't have too many high-intensity workloads, and we are spreading the load across different TrueNAS servers instead of a single pool (not that it would probably make much of a difference, as it's not the disks that are being throttled but rather the connection to the disks).

Just something worth noting with regards to SMAPIv1: we ran a test directly on one of the hosts against an NFS share and the benchmark was about the same, so I am not sure whether SMAPIv3 would improve this. Maybe something @olivierlambert and team can confirm?

Long story short, I would rather offer a slower but more reliable solution than something that is fast but has a longer recovery time when there's trouble.

-

Performance is linked to the datapath, not the volume format & management (VHD or something else). SMAPIv3 decouples the datapath from the volume management. So we could have a SMAPIv3 driver for NFS using the same format and datapath as v1, and that wouldn't change anything at all. Obviously, we want something better.

-

It also depends on whether you are using NFS 3, NFS 4, NFS 4.1 or even NFS 4.2, as well as whether pNFS (Parallel Network File System) can be leveraged and whether it is leveraged fully.

pNFS is available from NFS version 4.1 onwards (including NFS version 4.2), and these versions offer improved performance over NFS 4.0 and earlier, so throughput between XCP-ng and TrueNAS should improve.

This applies especially when using TrueNAS Scale 23.10, though once it is production ready it would be better to aim for TrueNAS Scale 24.04.1, as it really increases performance and security over the earlier releases of TrueNAS Scale.

https://www.truenas.com/blog/truenas-dragonfish-performance-breathes-fire/
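Since the NFS version matters here, it can be worth checking which version a host actually negotiated. Below is a minimal sketch, assuming a Linux host where NFS mounts appear in `/proc/mounts` with a `vers=` (or `nfsvers=`) mount option. The function name `nfs_versions` is made up for illustration and is not part of any XCP-ng or TrueNAS tooling.

```python
def nfs_versions(mounts_text):
    """Map each NFS mount point to the protocol version in its mount options.

    `mounts_text` is expected to look like the contents of /proc/mounts:
    device, mount point, filesystem type, comma-separated options, ...
    """
    versions = {}
    for line in mounts_text.splitlines():
        fields = line.split()
        # Only consider NFS client mounts (type "nfs" or "nfs4").
        if len(fields) < 4 or fields[2] not in ("nfs", "nfs4"):
            continue
        mount_point = fields[1]
        version = "unknown"
        for option in fields[3].split(","):
            if option.startswith(("vers=", "nfsvers=")):
                version = option.split("=", 1)[1]
        versions[mount_point] = version
    return versions


if __name__ == "__main__":
    # Reads the live mount table; only meaningful on a Linux host.
    with open("/proc/mounts") as mounts_file:
        for mount_point, version in nfs_versions(mounts_file.read()).items():
            print(f"{mount_point}: NFS version {version}")
```

If a mount reports version 3 or 4.0, the pNFS-related improvements mentioned above would not apply to it.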

-

@john-c good info, I didn't know this.

We're using TrueNAS for our backup NFS, which is the target for our XOA backups.

-

@john-c thank you for this info, I will investigate immediately tonight.

Not wanting to misuse the XO forum for TrueNAS questions, but we're moving 4x TrueNAS servers into production next week. We have a couple of TrueNAS Core servers already and have been very happy with their reliability, and we opted to install TrueNAS Core on the new servers simply on the basis of "what you know is what you know". However, it has also come to my attention that TrueNAS Core is effectively "end of life".

Based on what you are sharing now, it would be a stupid idea for us to continue using Core if Scale is expected to perform better for our use case. We don't use the TrueNAS servers for anything other than NFS shares (couldn't care less about the rest of the apps / plugins etc).

Are you perhaps using Scale for NFS VM sharing? And if so, would you recommend we onboard ourselves with Scale rather than Core?

-

@mauzilla I would definitely check out TrueNAS Scale - it's their "new" thing and it's been stable for a while now.

The big difference is that it's based on Linux and not FreeBSD, which the old FreeNAS/TrueNAS was.

-

@nikade we'll be doing it right away, and given that I now know there are potential performance challenges I can address, I'd rather give it a go. Thank you all for your feedback so far, a huge relief!

-

@mauzilla Happy to help, good luck with your project!

-

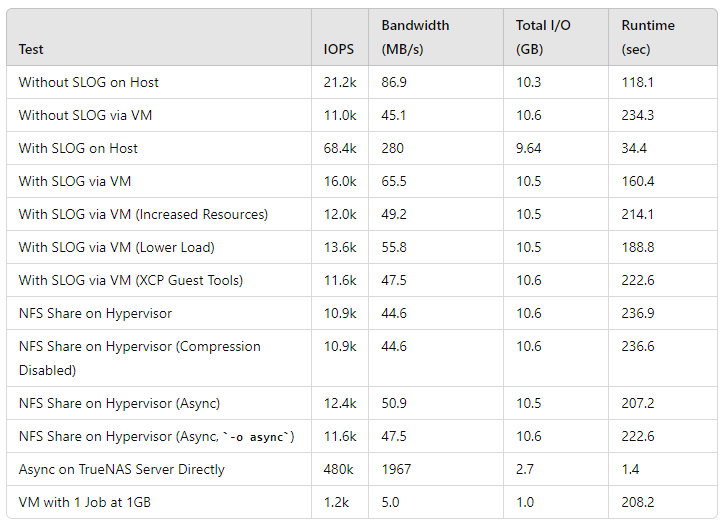

Considering the feedback we got yesterday (and not previously knowing that TrueNAS supports iSCSI), we took a chance this morning and set up an iSCSI connection:

- Created a "file" iSCSI connection on the same pool with the SLOG

- Set it up in XCP-ng / XO

- Migrated the VM to the iSCSI SR

And wow, what a difference: throughput jumped from approximately 44.6 MB/s to 76 MB/s, and IOPS from 10.9k to 17.8k.
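To put those numbers in perspective, here is a minimal sketch of the arithmetic. It assumes "MB" means 10^6 bytes and that both IOPS figures are in thousands, neither of which is confirmed by the benchmark output; the helper names are made up for illustration.

```python
def percent_gain(before, after):
    """Relative improvement from `before` to `after`, in percent."""
    return (after - before) / before * 100.0


def bytes_per_io(mb_per_s, kiops):
    """Average bytes moved per I/O operation, given MB/s and thousands of IOPS."""
    return (mb_per_s * 1_000_000) / (kiops * 1_000)


# Figures quoted above: NFS first, iSCSI second.
nfs_mb_s, iscsi_mb_s = 44.6, 76.0
nfs_kiops, iscsi_kiops = 10.9, 17.8

print(f"Throughput gain: {percent_gain(nfs_mb_s, iscsi_mb_s):.1f}%")
print(f"IOPS gain:       {percent_gain(nfs_kiops, iscsi_kiops):.1f}%")
print(f"Avg I/O size on NFS:   {bytes_per_io(nfs_mb_s, nfs_kiops):.0f} bytes")
print(f"Avg I/O size on iSCSI: {bytes_per_io(iscsi_mb_s, iscsi_kiops):.0f} bytes")
```

Under those assumptions the gain works out to roughly 70% in throughput and 63% in IOPS, and the average I/O size comes out at around 4 KB in both cases, which would be consistent with a small-block test rather than a sequential throughput test.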

This leads me to believe that if we designate a subsection of the pool in TrueNAS for iSCSI, we will be able to reap the benefits of both: leave "data" disks on NFS (so that we can benefit from thin provisioning) and put other disks on the iSCSI SR (understanding that those VDIs will be fully allocated).

Questions:

- With regards to backups / delta replication, can I mix and match backups where some disks are VHD (thin) and some are full LVM? I assume that the first backup will always be the longest (as it will need to back up the full, say, 50GB of the disk instead of the thin file) - will this impact delta replication / backups?

- Any risks with the intended setup? I can only assume that other people might be doing something similar?

I was really amazed by the simplicity of the iSCSI setup between TrueNAS and XCP-ng - we have an EqualLogic and I attempted to set up iSCSI on it, but it was extremely difficult if you don't understand the semantics involved.

-

@mauzilla sounds like we are doing similar things! I gather you're now running TrueNAS Scale as we are? We decided to go with RC2 of Electric Eel since there have been some big architecture changes between 24.04 and 24.10.

We are just running it through testing and have run into performance issues for backups to an NFS target, so I was interested to read about the huge gain in speed you got from moving to iSCSI.

I was thinking of seeing if we can back up to an iSCSI target while keeping many of the VMs on NFS. Have you tried this and had any success?

-

@andrewperry - we started our migration from local storage to TrueNAS-based NAS (NFS) over the last couple of weeks, and ironically ran into our first "confirmed" issue on Friday. For most of the transferred VMs we had few "direct" issues, but we can definitely see a major issue with VMs that are reliant on heavy databases (and call recordings).

We will be investigating options this week and will keep everyone posted. Right now we don't know where the issue is (or what a reasonable benchmark is), but with 4 x TrueNAS, 2 pools per TrueNAS with enterprise SSDs, a SLOG for each pool and a dual 10Gb fibre connection to Arista, having only 2-3 VMs per pool is giving mixed results.

We will first try to rule out other hardware, but will update the thread here as we think it will be of value for others, and maybe we'll find a solution. Right now I am of the opinion that it's NFS, and I'm hoping we can do some tweaks, as the prospect of running only iSCSI is concerning since thin provisioning is then no longer possible.

-

@mauzilla we ran iSCSI-based backups on our previous 'bare metal' Debian Xen system, thanks to rdiff-backup. It worked really well for our purposes and I am still a bit uncomfortable with XO / XCP-ng backing up over a 1Gbps link. Hopefully a 10Gbps link will resolve that.

Have you considered using ZFS replication with TrueNAS as part of your backup strategy?

-

@andrewperry yes, we replicate TrueNAS to a "standby" TrueNAS using zpool / TrueNAS replication. Our current policy is hourly. We then plan to do incremental replication of all VMs to a standby TrueNAS over weekends, giving us 3 possible methods for recovery.

Currently we're running into a major drawback with VMs on TrueNAS over NFS (specifically VMs that rely on fast storage, such as databases and recordings). We did not anticipate such a huge drop in performance from having VHD files on NFS. We're reaching out to the XCP-ng team to ask for advice, as part of it can likely be addressed by customizations on our side.