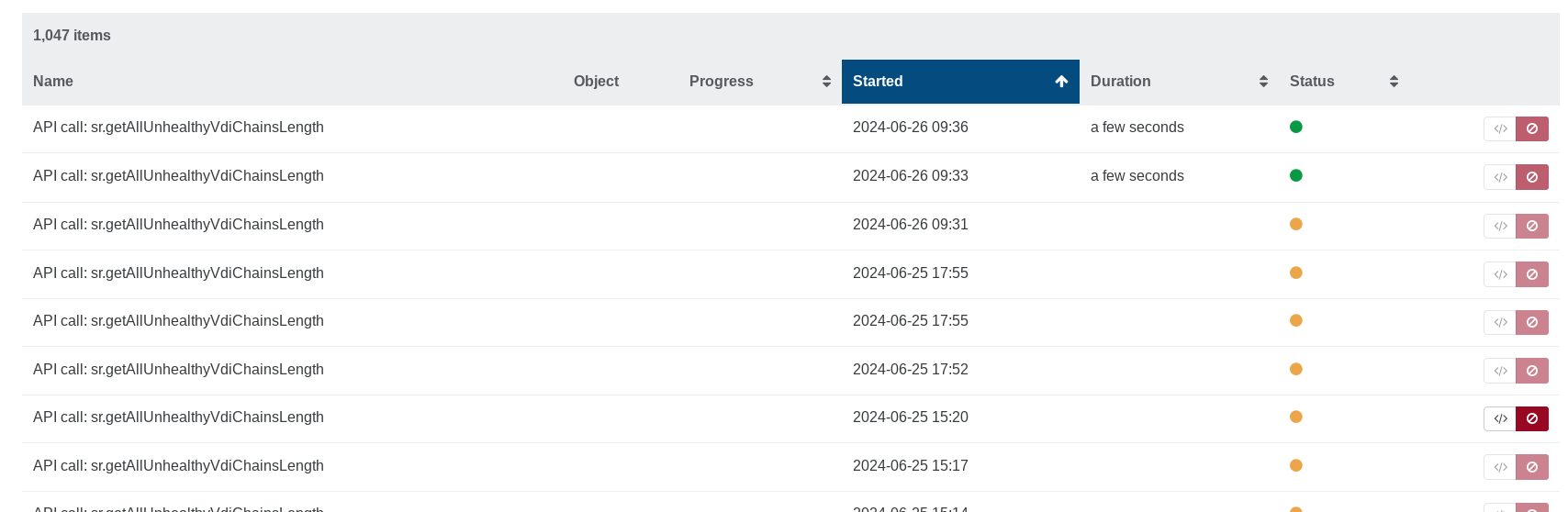

huge number of api call "sr.getAllUnhealthyVdiChainsLength" in tasks

-

Hi, I have this situation in Tasks.

What's this task?

Now I have ~50 of these calls.

@robyt I have the same here. Started a while ago.

In addition, I have a VM which doesn't coalesce (dashboard-health shows 'VDIs with invalid parent VHD'). I already made a clone, but after a while (backups) I get the same again. I also tried the solution in https://docs.xenserver.com/en-us/xenserver/8/storage/manage.html#reclaim-space-by-using-the-offline-coalesce-tool but that resulted in 'VM has no leaf-coalesceable VDIs'. It might all be related.

-

My home install of XO from the sources is also doing this.

-

Ping @julien-f

-

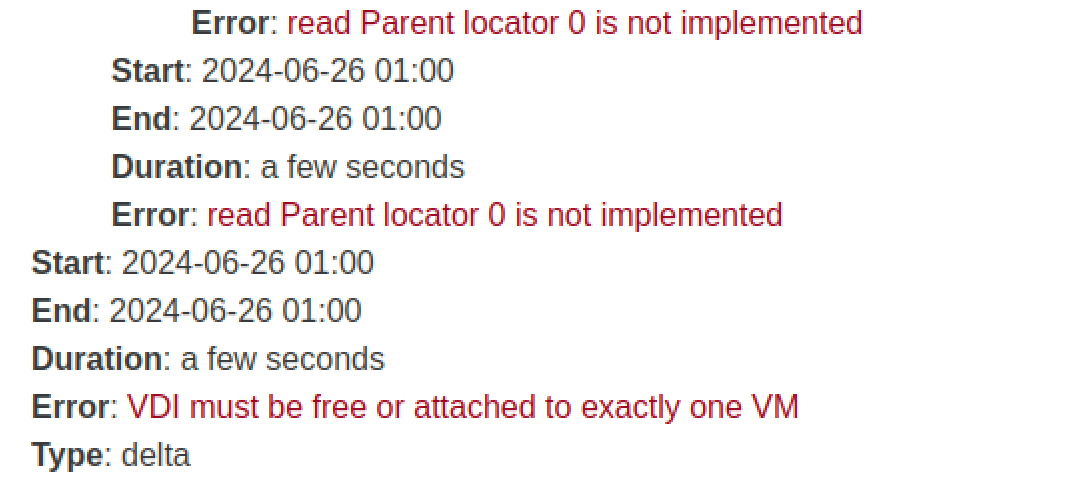

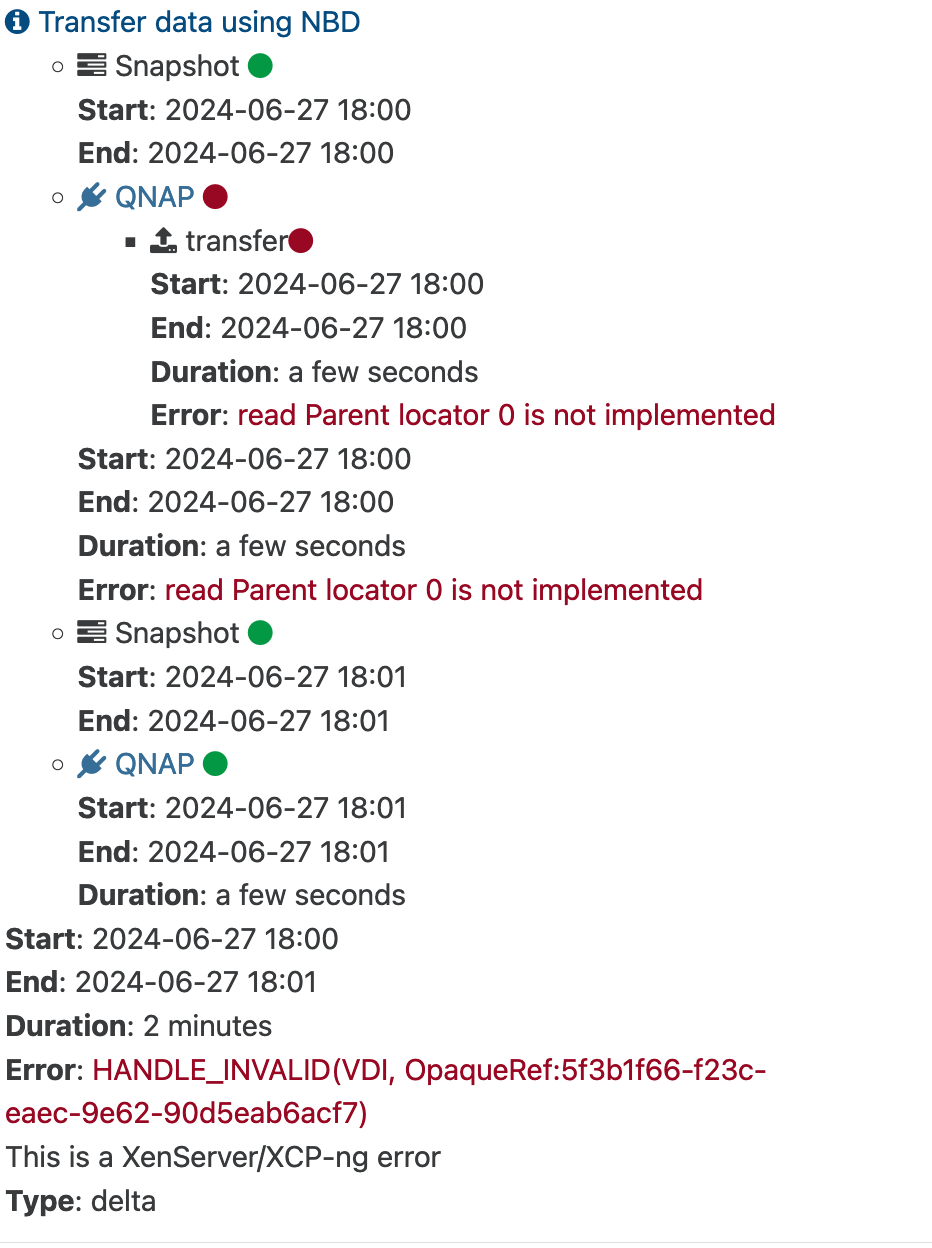

I updated to the latest commit (93833) and it's still happening. Backups are also no longer functioning for me as seen below:

It seems editing the backup job and disabling NBD/CBT in advanced settings gets backups back up and running again; however, the health check shows all my VDIs as unhealthy and needing to coalesce.

As a test I tried creating an entirely new job with CBT enabled. The job now finishes, but the task lingers under Tasks and never goes away until you restart the toolstack of the host.

Edit: Rolling back to commit 3c13e and removing all .cbtlog files from my SR got backups back up and running and made the sr.getAllUnhealthyVdiChainsLength loop end. I'm guessing there's been a lot of change to enable CBT and something is misbehaving somewhere.

-

Hi,

We added 2 fixes in the latest commit on master. Please restart your XAPI (to clear all tasks) and try again with your updated XO.

-

@olivierlambert Still broken backups

-

@flakpyro said in huge number of api call "sr.getAllUnhealthyVdiChainsLength" in tasks:

removing all .cbtlog files from my SR got backups back up and running

What did you do exactly to remove the .cbtlog files? Where are they located?

I find that after backups with the "broken" versions (also the latest with the 2 fixes) my coalescing stops. Checking the logs (cat /var/log/SMlog | grep -i coalesce), I have entries like

['/usr/sbin/cbt-util', 'coalesce', '-p', '/var/run/sr-mount/ea3c92b7-0e82-5726-502b-482b40a8b097/0cc54304-4961-482f-a1b7-a8222dd143a1.cbtlog', '-c', '/var/run/sr-mount/ea3c92b7-0e82-5726-502b-482b40a8b097/fe11c7f1-7331-427e-8fa9-76412a2cbb75.cbtlog

And those correspond to the hung coalesces...

When I reboot the host they disappear but on next delta backup I have the issue again.
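To see at a glance which .cbtlog files those coalesce entries point at, something like the following might help. It is only a sketch: the function name `list_cbt_coalesce_logs` is made up for illustration, and it assumes the SMlog lines look like the entry quoted above (paths in single quotes on a line that mentions both cbt-util and coalesce).

```shell
#!/bin/sh
# Hypothetical helper (not part of XCP-ng): print each unique .cbtlog path
# that appears on a cbt-util coalesce line in an SMlog-style file.
# Assumption: the line format matches the entry quoted in this thread.
list_cbt_coalesce_logs() {
    logfile="${1:-/var/log/SMlog}"
    grep -F 'cbt-util' "$logfile" \
        | grep -F 'coalesce' \
        | grep -o "/[^' ]*\.cbtlog" \
        | sort -u
}
```

Calling `list_cbt_coalesce_logs` with no argument reads /var/log/SMlog; pass another path to check a rotated or copied log. It only reads the log and changes nothing on the host.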

-

@manilx the .cbtlog files are located on your SR (/run/sr-mount/UUID). I don't know for sure whether removing them is what fixed things for me, but once backups started failing my goal was to "revert" everything back to a known good working state. Doing this, reverting XOA, and restarting the toolstack on the host got things back to how they were.
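For anyone who wants to check what is on their SR before touching anything, here is a rough sketch that only lists the .cbtlog files. The function name `list_cbtlog_files` is hypothetical, and it assumes the files sit directly in the SR mount directory, as the SMlog paths quoted earlier in the thread suggest for this kind of SR.

```shell
#!/bin/sh
# Hypothetical helper: list (never delete) the .cbtlog files at the top
# level of one SR mount point, e.g. /run/sr-mount/<SR UUID>.
# Assumption: the files live directly in that directory.
list_cbtlog_files() {
    sr_dir="$1"
    if [ ! -d "$sr_dir" ]; then
        echo "not a directory: $sr_dir" >&2
        return 1
    fi
    find "$sr_dir" -maxdepth 1 -type f -name '*.cbtlog' | sort
}
```

Usage would be `list_cbtlog_files /run/sr-mount/UUID` with your own SR UUID. It deliberately stops at listing, since it is not confirmed in this thread that removing the files is what fixed things.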

I'm very much looking forward to CBT backups though, as I've been spoiled by Veeam / ESX CBT backups for a lot of years!

-

@olivierlambert I'm not sure that I am doing it correctly. XOA is up to date (Master, commit 6ee7a) and I went through and rescanned the storage repositories to get snapshots to coalesce. Backups work properly now.

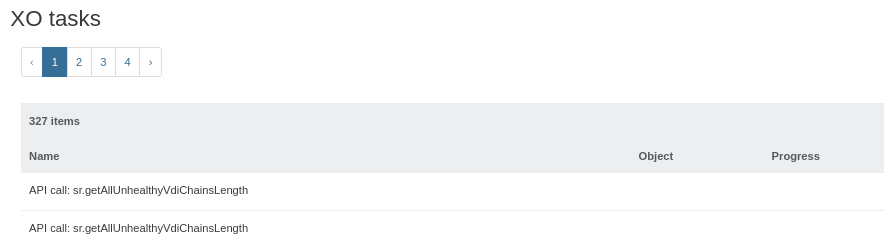

I have 50 or so instances of the following in XO tasks

- API call: sr.getAllUnhealthyVdiChainsLength

- API call: sr.getVdiChainsInfo

- API call: sr.reclaimSpace

- API call: proxy.getAll

- API call: session.getUser

- API call: pool.listMissingPatches

I've rebooted the host and run 'xe-toolstack-restart' but I'm getting the same results. I must be missing something.

Thanks for your help.

-

Hi,

XO tasks aren't related to XAPI tasks, so it's normal to see those. They were hidden before; now they're shown, which is helpful for seeing when we have so many calls.

-

FYI, CBT was reverted, we'll continue to fix bugs on it in a dedicated branch for people to test (with a community and Vates joint test campaign).

-

@olivierlambert I applaud this decision. Running stable for many, many months and then suddenly having all these issues, without really knowing what caused them or how to resolve them, has been a nightmare for me.

I'm all in for those new features (!), but it's not good if updating to a new build to get fixes results in those issues.

I've spent many hours lately and rebooted the hosts more times than in the last 5 years to get backups/coalesce working again, and I'm still not sure if everything is back to normal....

So, yes, this is a good decision.

-

This thread will be used to centralize all backup issues related to CBT: https://xcp-ng.org/forum/topic/9268/cbt-the-thread-to-centralize-your-feedback

-

After I saw the CBT stuff was reverted on GitHub, I updated to the latest commit on my home server (253aa). I can report my backups are now working as they should and coalesce runs without issues, leaving a clean health check dashboard again. :) Glad to see this has been held back on XOA as well, as I was planning to stay on 5.95.1 otherwise! Looking forward to CBT eventually all the same!

-

@flakpyro Just updated, ran my delta backups and all is fine.

-

Same problem here, 300+ sessions and counting.

XAPI restart did not solve the issue...

Doesn't seem to be respecting [NOBAK] anymore for storage repos. Tried '[NOSNAP][NOBAK] StorageName' and it still grabs it.