CBT: the thread to centralize your feedback

-

@manilx Have you enabled CBT+data removal? Otherwise you'll continue to see snapshots (but yeah, the old ones should be removed at some point)

-

@olivierlambert No, I haven't. Will do now.

-

@manilx P.S. It wasn't clear to me that I had to turn this on. I just left everything as it was after updating XO..... (I was always using NBD before):

-

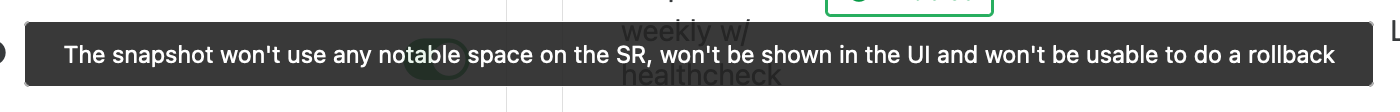

@olivierlambert The next backup has run and now there are no snapshots at all (even the last one I left was removed). Is this how it is supposed to be? Totally confused.

I get this:


OK. But if I want the "old" way, keeping the snapshots, they accumulate as we have seen...... ??

-

- That's thanks to CBT

- The old way can be used again, just disable the data removal toggle. And yes, it shouldn't accumulate more than 1 snap per schedule.
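
As a rough illustration of why CBT makes it possible to drop the snapshot data: once the changed blocks since the last backup are tracked, the next delta can be built from that list rather than from a full snapshot kept on the SR. The toy model below is only a sketch under that assumption; `ToyDisk` and its methods are hypothetical names and are not XO or XAPI code.

```python
from __future__ import annotations


class ToyDisk:
    """Toy disk that tracks changed blocks since the last export (hypothetical, not XAPI)."""

    def __init__(self, block_count: int) -> None:
        self.blocks = [b""] * block_count
        self.changed: set[int] = set()  # stands in for the CBT bitmap

    def write(self, index: int, data: bytes) -> None:
        self.blocks[index] = data
        self.changed.add(index)

    def export_delta(self) -> dict[int, bytes]:
        """Return only the blocks written since the previous export, then reset tracking."""
        delta = {i: self.blocks[i] for i in sorted(self.changed)}
        self.changed.clear()
        return delta


disk = ToyDisk(block_count=8)
disk.write(1, b"a")
disk.write(5, b"b")
first = disk.export_delta()   # blocks 1 and 5
disk.write(5, b"c")
second = disk.export_delta()  # only block 5
```

In this model nothing but the set of changed block indexes has to survive between two backups, which is the intuition behind seeing no visible snapshots when the data removal toggle is on.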

-

@olivierlambert Understood. Then it's up to @florent to fix this snapshot accumulation.

Thx

-

Only if we can reproduce the issue, because for now I do not have the problem here. Double-check that you are on the latest commit (or on XOA latest, fully up to date).

-

@olivierlambert Positive. Will disable it again and see if it happens again.

-

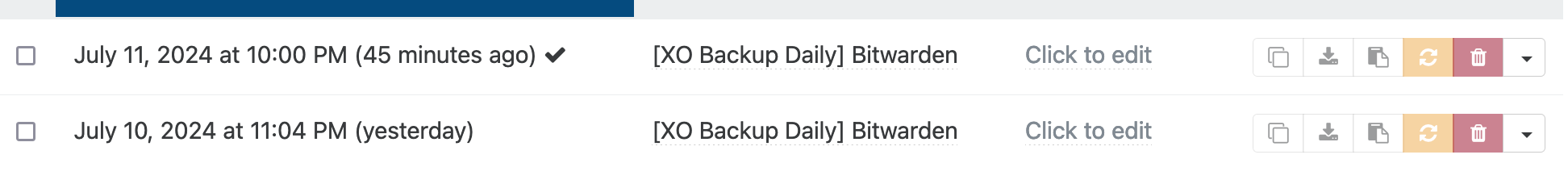

Seeing a nice decrease in space used.

I'm keeping the snapshot around in case I need it, though I doubt that I will.

-

@olivierlambert Disabled the data removal option again.

1st backup run: snapshot created

2nd one: still just the one snapshot. Seems like enabling the option, backing up, then disabling it cleared this up.

Will monitor.

-

We have had this error, "stream has ended with not enough data (actual: 446, expected: 512)", on multiple VMs in the last few days. Is anyone else seeing this issue?
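
For readers unfamiliar with this kind of message: it reads like a strict fixed-size read that hit the end of the stream early (446 bytes arrived where 512 were required). The sketch below reproduces that behaviour on an in-memory stream. `read_exact` is a hypothetical helper written for illustration only; it is not the XO function that produced the error.

```python
import io
from typing import BinaryIO


def read_exact(stream: BinaryIO, size: int) -> bytes:
    """Read exactly `size` bytes, or raise if the stream ends first."""
    chunks = []
    remaining = size
    while remaining > 0:
        chunk = stream.read(remaining)
        if not chunk:  # end of stream reached
            break
        chunks.append(chunk)
        remaining -= len(chunk)
    data = b"".join(chunks)
    if len(data) != size:
        raise EOFError(
            f"stream has ended with not enough data (actual: {len(data)}, expected: {size})"
        )
    return data


# A complete 512-byte read succeeds; a source cut off at 446 bytes would raise.
header = read_exact(io.BytesIO(b"\x00" * 512), 512)
```

Seen this way, the error points at the data source ending prematurely (for example a dropped connection) rather than at the content of the backup itself, though that is an interpretation and not something confirmed in this thread.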

-

Is this VM also using an NBD-enabled network? In our prod, I also have this issue on every second backup for one specific VM that is not hosted in the same pool as the rest, without any NBD-configured network.

-

@olivierlambert yes both NBD, we have them in the same pool and NBD network is enabled.

-

@olivierlambert, we haven't encountered this error very often, but it seems to occur mainly when the backup load is higher. With it being Windows Update week, some jobs are taking longer than usual, leading to overlaps. The issues seem to arise particularly during these overlaps. I've opened a GitHub issue for this problem as well as for the VDI in-use issue. Hopefully, Florent will have time today to look into it more deeply.

-

@rtjdamen said in CBT: the thread to centralize your feedback:

We have had this error, "stream has ended with not enough data (actual: 446, expected: 512)", on multiple VMs in the last few days. Is anyone else seeing this issue?

the same for me.

The backup took far too long (the delta ran for 18 hours; before NBD+CBT it took less than an hour... it was a full, not a delta), and at the end there were many, many errors:

{ "data": { "mode": "delta", "reportWhen": "failure" }, "id": "1720616400003", "jobId": "30159f11-3b54-48d1-ab8b-d32858991349", "jobName": "Delta FtPA", "message": "backup", "scheduleId": "b94e6227-c7b8-4a39-9bf1-b881422971df", "start": 1720616400003, "status": "failure", "infos": [ { "data": { "vms": [ "be0a9812-fd14-be75-e2fa-40c31ce8875c" ] }, "message": "vms" } ], "tasks": [ { "data": { "type": "VM", "id": "be0a9812-fd14-be75-e2fa-40c31ce8875c", "name_label": "FtPA" }, "id": "1720616402582", "message": "backup VM", "start": 1720616402582, "status": "failure", "tasks": [ { "id": "1720616402661", "message": "clean-vm", "start": 1720616402661, "status": "success", "warnings": [ { "data": { "path": "/xo-vm-backups/be0a9812-fd14-be75-e2fa-40c31ce8875c/20240703T070203Z.json", "actual": 356950134784, "expected": 356950138368 }, "message": "cleanVm: incorrect backup size in metadata" } ], "end": 1720616408054, "result": { "merge": false } }, { "id": "1720616410224", "message": "snapshot", "start": 1720616410224, "status": "failure", "end": 1720616668284, "result": { "code": "HANDLE_INVALID", "params": [ "VBD", "OpaqueRef:27874b37-4e3b-4d33-9a68-0d3dbaae7664" ], "task": { "uuid": "1823000a-df0a-970e-db2b-c12be53943fc", "name_label": "Async.VM.snapshot", "name_description": "", "allowed_operations": [], "current_operations": {}, "created": "20240710T13:00:29Z", "finished": "20240710T13:04:28Z", "status": "failure", "resident_on": "OpaqueRef:4706cbe1-12ab-45d9-9001-cbe6ec1270ce", "progress": 1, "type": "<none/>", "result": "", "error_info": [ "HANDLE_INVALID", "VBD", "OpaqueRef:27874b37-4e3b-4d33-9a68-0d3dbaae7664" ], "other_config": {}, "subtask_of": "OpaqueRef:NULL", "subtasks": [], "backtrace": "(((process xapi)(filename ocaml/xapi-client/client.ml)(line 7))((process xapi)(filename ocaml/xapi-client/client.ml)(line 19))((process xapi)(filename 
ocaml/xapi-client/client.ml)(line 6016))((process xapi)(filename lib/xapi-stdext-pervasives/pervasiveext.ml)(line 24))((process xapi)(filename lib/xapi-stdext-pervasives/pervasiveext.ml)(line 35))((process xapi)(filename ocaml/xapi/message_forwarding.ml)(line 134))((process xapi)(filename lib/xapi-stdext-pervasives/pervasiveext.ml)(line 24))((process xapi)(filename ocaml/xapi/rbac.ml)(line 205))((process xapi)(filename ocaml/xapi/server_helpers.ml)(line 95)))" }, "message": "HANDLE_INVALID(VBD, OpaqueRef:27874b37-4e3b-4d33-9a68-0d3dbaae7664)", "name": "XapiError", "stack": "XapiError: HANDLE_INVALID(VBD, OpaqueRef:27874b37-4e3b-4d33-9a68-0d3dbaae7664)\n at XapiError.wrap (file:///usr/local/lib/node_modules/xo-server/node_modules/xen-api/_XapiError.mjs:16:12)\n at default (file:///usr/local/lib/node_modules/xo-server/node_modules/xen-api/_getTaskResult.mjs:13:29)\n at Xapi._addRecordToCache (file:///usr/local/lib/node_modules/xo-server/node_modules/xen-api/index.mjs:1033:24)\n at file:///usr/local/lib/node_modules/xo-server/node_modules/xen-api/index.mjs:1067:14\n at Array.forEach (<anonymous>)\n at Xapi._processEvents (file:///usr/local/lib/node_modules/xo-server/node_modules/xen-api/index.mjs:1057:12)\n at Xapi._watchEvents (file:///usr/local/lib/node_modules/xo-server/node_modules/xen-api/index.mjs:1230:14)\n at process.processTicksAndRejections (node:internal/process/task_queues:95:5)" } }, { "id": "1720616668336", "message": "clean-vm", "start": 1720616668336, "status": "success", "end": 1720616674074, "result": { "merge": false } }, { "id": "1720616674189", "message": "clean-vm", "start": 1720616674189, "status": "success", "end": 1720616679904, "result": { "merge": false } }, { "id": "1720616681937", "message": "snapshot", "start": 1720616681937, "status": "success", "end": 1720616891256, "result": "4f5c9b99-d96e-4e1f-5f2e-0b9e7fa28952" }, { "data": { "id": "601f8729-7602-4d6f-a018-d4cc525ca371", "isFull": false, "type": "remote" }, "id": "1720616891257", 
"message": "export", "start": 1720616891257, "status": "success", "tasks": [ { "id": "1720617038363", "message": "clean-vm", "start": 1720617038363, "status": "success", "end": 1720617044510, "result": { "merge": true } } ], "end": 1720617044698 }, { "id": "1720617044736", "message": "clean-vm", "start": 1720617044736, "status": "failure", "tasks": [ { "id": "1720617048047", "message": "merge", "start": 1720617048047, "status": "failure", "end": 1720617656522, "result": { "errno": -2, "code": "ENOENT", "syscall": "open", "path": "/run/xo-server/mounts/601f8729-7602-4d6f-a018-d4cc525ca371/xo-vm-backups/be0a9812-fd14-be75-e2fa-40c31ce8875c/vdis/30159f11-3b54-48d1-ab8b-d32858991349/1f3803c8-0335-4470-8c26-297f98af442c/20240703T070203Z.vhd", "message": "ENOENT: no such file or directory, open '/run/xo-server/mounts/601f8729-7602-4d6f-a018-d4cc525ca371/xo-vm-backups/be0a9812-fd14-be75-e2fa-40c31ce8875c/vdis/30159f11-3b54-48d1-ab8b-d32858991349/1f3803c8-0335-4470-8c26-297f98af442c/20240703T070203Z.vhd'", "name": "Error", "stack": "Error: ENOENT: no such file or directory, open '/run/xo-server/mounts/601f8729-7602-4d6f-a018-d4cc525ca371/xo-vm-backups/be0a9812-fd14-be75-e2fa-40c31ce8875c/vdis/30159f11-3b54-48d1-ab8b-d32858991349/1f3803c8-0335-4470-8c26-297f98af442c/20240703T070203Z.vhd'\nFrom:\n at NfsHandler.addSyncStackTrace (/usr/local/lib/node_modules/xo-server/node_modules/@xen-orchestra/fs/dist/local.js:21:26)\n at NfsHandler._openFile (/usr/local/lib/node_modules/xo-server/node_modules/@xen-orchestra/fs/dist/local.js:154:35)\n at NfsHandler.__openFile (/usr/local/lib/node_modules/xo-server/node_modules/@xen-orchestra/fs/dist/abstract.js:448:51)\n at NfsHandler.openFile (/usr/local/lib/node_modules/xo-server/node_modules/limit-concurrency-decorator/index.js:97:24)\n at VhdFile.open (/usr/local/lib/node_modules/xo-server/node_modules/vhd-lib/Vhd/VhdFile.js:86:30)\n at openVhd (/usr/local/lib/node_modules/xo-server/node_modules/vhd-lib/openVhd.js:15:28)\n at async 
#openVhds (/usr/local/lib/node_modules/xo-server/node_modules/vhd-lib/merge.js:118:23)\n at async Disposable.<anonymous> (/usr/local/lib/node_modules/xo-server/node_modules/vhd-lib/merge.js:164:39)" } } ], "end": 1720617656522, "result": { "errno": -2, "code": "ENOENT", "syscall": "open", "path": "/run/xo-server/mounts/601f8729-7602-4d6f-a018-d4cc525ca371/xo-vm-backups/be0a9812-fd14-be75-e2fa-40c31ce8875c/vdis/30159f11-3b54-48d1-ab8b-d32858991349/1f3803c8-0335-4470-8c26-297f98af442c/20240703T070203Z.vhd", "message": "ENOENT: no such file or directory, open '/run/xo-server/mounts/601f8729-7602-4d6f-a018-d4cc525ca371/xo-vm-backups/be0a9812-fd14-be75-e2fa-40c31ce8875c/vdis/30159f11-3b54-48d1-ab8b-d32858991349/1f3803c8-0335-4470-8c26-297f98af442c/20240703T070203Z.vhd'", "name": "Error", "stack": "Error: ENOENT: no such file or directory, open '/run/xo-server/mounts/601f8729-7602-4d6f-a018-d4cc525ca371/xo-vm-backups/be0a9812-fd14-be75-e2fa-40c31ce8875c/vdis/30159f11-3b54-48d1-ab8b-d32858991349/1f3803c8-0335-4470-8c26-297f98af442c/20240703T070203Z.vhd'\nFrom:\n at NfsHandler.addSyncStackTrace (/usr/local/lib/node_modules/xo-server/node_modules/@xen-orchestra/fs/dist/local.js:21:26)\n at NfsHandler._openFile (/usr/local/lib/node_modules/xo-server/node_modules/@xen-orchestra/fs/dist/local.js:154:35)\n at NfsHandler.__openFile (/usr/local/lib/node_modules/xo-server/node_modules/@xen-orchestra/fs/dist/abstract.js:448:51)\n at NfsHandler.openFile (/usr/local/lib/node_modules/xo-server/node_modules/limit-concurrency-decorator/index.js:97:24)\n at VhdFile.open (/usr/local/lib/node_modules/xo-server/node_modules/vhd-lib/Vhd/VhdFile.js:86:30)\n at openVhd (/usr/local/lib/node_modules/xo-server/node_modules/vhd-lib/openVhd.js:15:28)\n at async #openVhds (/usr/local/lib/node_modules/xo-server/node_modules/vhd-lib/merge.js:118:23)\n at async Disposable.<anonymous> (/usr/local/lib/node_modules/xo-server/node_modules/vhd-lib/merge.js:164:39)" } }, { "id": "1720617656648", "message": 
"snapshot", "start": 1720617656648, "status": "success", "end": 1720618073493, "result": "b5ae2b32-ef3c-aa99-4e1f-07d42835746a" }, { "data": { "id": "601f8729-7602-4d6f-a018-d4cc525ca371", "isFull": true, "type": "remote" }, "id": "1720618073494", "message": "export", "start": 1720618073494, "status": "success", "tasks": [ { "id": "1720618090517", "message": "transfer", "start": 1720618090517, "status": "success", "end": 1720680654839, "result": { "size": 2496704791552 } }, { "id": "1720680657579", "message": "clean-vm", "start": 1720680657579, "status": "success", "warnings": [ { "data": { "mergeStatePath": "/xo-vm-backups/be0a9812-fd14-be75-e2fa-40c31ce8875c/vdis/30159f11-3b54-48d1-ab8b-d32858991349/9becf507-abfc-47b4-9091-803ef2a1b47c/.20240703T070203Z.vhd.merge.json", "missingVhdPath": "/xo-vm-backups/be0a9812-fd14-be75-e2fa-40c31ce8875c/vdis/30159f11-3b54-48d1-ab8b-d32858991349/9becf507-abfc-47b4-9091-803ef2a1b47c/20240703T070203Z.vhd" }, "message": "orphan merge state" }, { "data": { "path": "/xo-vm-backups/be0a9812-fd14-be75-e2fa-40c31ce8875c/20240703T090201Z.json", "actual": 83366506496, "expected": 360746910208 }, "message": "cleanVm: incorrect backup size in metadata" } ], "end": 1720680685535, "result": { "merge": false } } ], "end": 1720680685538 } ], "warnings": [ { "data": { "attempt": 1, "error": "HANDLE_INVALID(VBD, OpaqueRef:27874b37-4e3b-4d33-9a68-0d3dbaae7664)" }, "message": "Retry the VM backup due to an error" }, { "data": { "error": { "code": "VDI_IN_USE", "params": [ "OpaqueRef:7462ea3f-8b99-444e-9007-07529868daf2", "data_destroy" ], "call": { "method": "VDI.data_destroy", "params": [ "OpaqueRef:7462ea3f-8b99-444e-9007-07529868daf2" ] } }, "vdiRef": "OpaqueRef:7462ea3f-8b99-444e-9007-07529868daf2" }, "message": "Couldn't deleted snapshot data" }, { "data": { "error": { "code": "VDI_IN_USE", "params": [ "OpaqueRef:1dfc4766-a3b1-4540-ba6a-8c0eab4dbaca", "data_destroy" ], "call": { "method": "VDI.data_destroy", "params": [ 
"OpaqueRef:1dfc4766-a3b1-4540-ba6a-8c0eab4dbaca" ] } }, "vdiRef": "OpaqueRef:1dfc4766-a3b1-4540-ba6a-8c0eab4dbaca" }, "message": "Couldn't deleted snapshot data" }, { "data": { "attempt": 2, "error": "stream has ended with not enough data (actual: 446, expected: 512)" }, "message": "Retry the VM backup due to an error" } ], "infos": [ { "message": "will delete snapshot data" }, { "data": { "vdiRef": "OpaqueRef:18043fd4-1a85-495a-b011-18ce047e46de" }, "message": "Snapshot data has been deleted" }, { "data": { "vdiRef": "OpaqueRef:e4f89ea5-626d-4131-a9ce-ed330d3b2aec" }, "message": "Snapshot data has been deleted" }, { "data": { "vdiRef": "OpaqueRef:1b61a681-598b-4d6e-92c3-05c384fa0164" }, "message": "Snapshot data has been deleted" }, { "data": { "vdiRef": "OpaqueRef:ec381ed4-9d2d-407a-b56b-203c8029fcee" }, "message": "Snapshot data has been deleted" }, { "data": { "vdiRef": "OpaqueRef:abc11faa-73b0-46c3-b3dc-ffe8752671a7" }, "message": "Snapshot data has been deleted" }, { "data": { "vdiRef": "OpaqueRef:a387b947-47bf-4339-92c8-0d749803115f" }, "message": "Snapshot data has been deleted" }, { "data": { "vdiRef": "OpaqueRef:2f054a5c-f1f6-4b1d-9d54-f2e8d74b5757" }, "message": "Snapshot data has been deleted" }, { "message": "Transfer data using NBD" } ], "end": 1720680685540, "result": { "generatedMessage": false, "code": "ERR_ASSERTION", "actual": true, "expected": false, "operator": "strictEqual", "message": "Disk is still attached to DOM0 VM", "name": "AssertionError", "stack": "AssertionError [ERR_ASSERTION]: Disk is still attached to DOM0 VM\n at Array.<anonymous> (file:///usr/local/lib/node_modules/xo-server/node_modules/@xen-orchestra/backups/_runners/_vmRunners/_AbstractXapi.mjs:244:20)\n at Function.from (<anonymous>)\n at asyncMap (/usr/local/lib/node_modules/xo-server/node_modules/@xen-orchestra/async-map/index.js:21:28)\n at Array.<anonymous> 
(file:///usr/local/lib/node_modules/xo-server/node_modules/@xen-orchestra/backups/_runners/_vmRunners/_AbstractXapi.mjs:233:13)\n at Function.from (<anonymous>)\n at asyncMap (/usr/local/lib/node_modules/xo-server/node_modules/@xen-orchestra/async-map/index.js:21:28)\n at IncrementalXapiVmBackupRunner._removeUnusedSnapshots (file:///usr/local/lib/node_modules/xo-server/node_modules/@xen-orchestra/backups/_runners/_vmRunners/_AbstractXapi.mjs:219:11)\n at IncrementalXapiVmBackupRunner.run (file:///usr/local/lib/node_modules/xo-server/node_modules/@xen-orchestra/backups/_runners/_vmRunners/_AbstractXapi.mjs:375:18)\n at process.processTicksAndRejections (node:internal/process/task_queues:95:5)\n at async file:///usr/local/lib/node_modules/xo-server/node_modules/@xen-orchestra/backups/_runners/VmsXapi.mjs:166:38" } } ], "end": 1720680685540 }

-
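
A log of this size is hard to read by eye. The sketch below walks a backup log shaped like the one above (nested `tasks`, each with `status`, `message`, an optional `result` and optional `warnings`) and lists every failed task and warning. The `iter_problems` helper is hypothetical, and `sample` is a heavily trimmed stand-in built from a few entries of the posted log; the structure is assumed from that log only, not from any XO documentation.

```python
from __future__ import annotations

import json
from typing import Any, Iterator


def iter_problems(
    task: dict[str, Any], path: tuple[str, ...] = ()
) -> Iterator[tuple[str, str, str]]:
    """Yield (kind, task path, message) for each failed task and each warning."""
    here = path + (str(task.get("message", "?")),)
    label = " > ".join(here)
    if task.get("status") == "failure":
        result = task.get("result")
        detail = result.get("message", "") if isinstance(result, dict) else ""
        yield ("failure", label, detail)
    for warning in task.get("warnings", []):
        yield ("warning", label, warning.get("message", ""))
    for sub in task.get("tasks", []):
        yield from iter_problems(sub, here)


# Trimmed stand-in for the posted log; save the real one to a file and json.load() it.
sample = json.loads("""
{
  "message": "backup", "status": "failure",
  "tasks": [{
    "message": "backup VM", "status": "failure",
    "tasks": [
      {"message": "snapshot", "status": "failure",
       "result": {"message": "HANDLE_INVALID(VBD, OpaqueRef:27874b37-4e3b-4d33-9a68-0d3dbaae7664)"}},
      {"message": "clean-vm", "status": "success",
       "warnings": [{"message": "cleanVm: incorrect backup size in metadata"}]}
    ],
    "warnings": [{"message": "Couldn't deleted snapshot data"}],
    "result": {"message": "Disk is still attached to DOM0 VM"}
  }]
}
""")

for kind, where, message in iter_problems(sample):
    print(f"{kind:8} {where}: {message}")
```

Run against the full log, this kind of summary makes the sequence easier to follow: a failed snapshot (HANDLE_INVALID), a failed merge (ENOENT), two VDI_IN_USE warnings on `VDI.data_destroy`, the stream error on the second attempt, and finally the "Disk is still attached to DOM0 VM" assertion.

-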

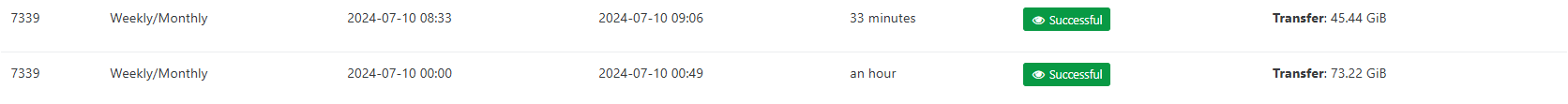

@olivierlambert Problem is back after one day:

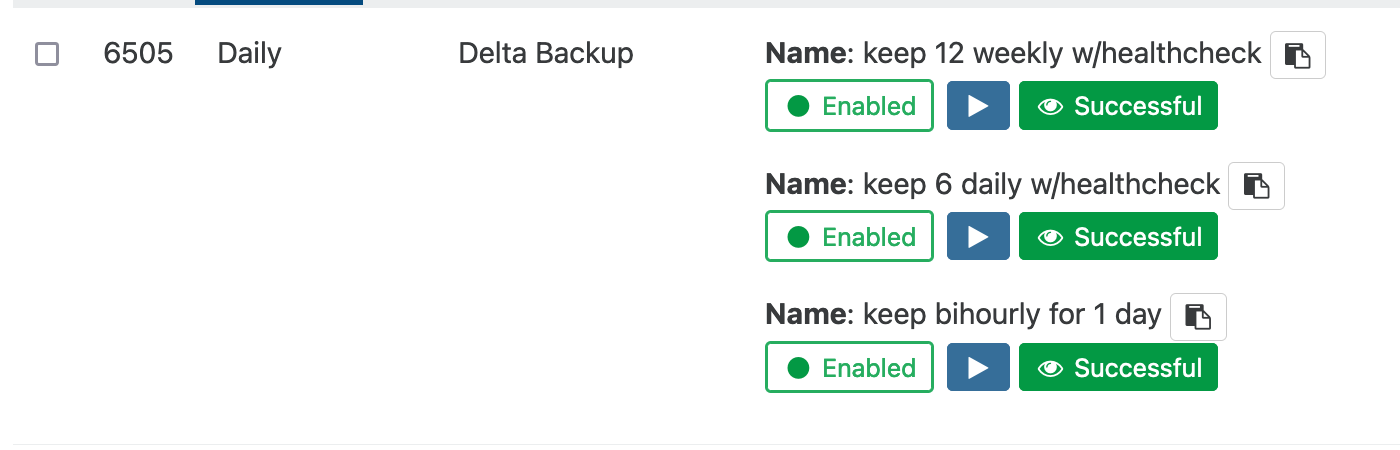

The last snapshot of the previous day is left over. Of the ones from today, only the last one is left. The backup job has 3 schedules. This might be the problem:

6 bihourly from 6 to 22 every 2hrs mo-sat

6 daily at 23:00 mo-sat

12 weekly on Sundays

-

Florent provided us with a fix for the stream error; so far I have not seen it on new runs, and we are keeping an eye on the running jobs. The remaining issues are the VDI_IN_USE error and the proxy sometimes losing its connection to the XOA master server. In that case the job shows as interrupted, but in reality it is still running and goes on to finish.

-

@olivierlambert It looks like, unlike in previous versions, the backup now keeps one snapshot for each schedule. It was just one for all three before.

Does this seem OK?

-

I don't think it was ever possible to keep one snapshot for several schedules. A snapshot is the point of reference, so if your schedules aren't aligned in terms of start date, we must have multiple snapshots (regardless of whether we keep them visible or use CBT to remove them).
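
To make that reasoning concrete: if each schedule computes its delta against the snapshot left by its own previous run, a job with several schedules carries one reference snapshot per schedule. The sketch below is a toy model under that assumption; the `run` helper, the schedule names and the timestamps are hypothetical, and this is not how XO stores its state.

```python
from __future__ import annotations

# Toy model: one reference snapshot per schedule (hypothetical, not XO code).
references: dict[str, str] = {}


def run(schedule: str, timestamp: str) -> str | None:
    """Take a new snapshot for `schedule` and return the one it replaces, if any."""
    previous = references.get(schedule)
    references[schedule] = f"{schedule}@{timestamp}"
    # The delta is computed against `previous`, which can then be removed.
    return previous


run("bihourly", "mon 20:00")
run("bihourly", "mon 22:00")
run("daily", "mon 23:00")
run("bihourly", "tue 06:00")
```

After these four runs the model holds two snapshots: the latest bihourly one and the daily one from the previous evening. That matches the pattern reported earlier in the thread, where the last snapshot of the previous day stays around while today's runs keep only their most recent one.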

-

@olivierlambert This would be OK with me. Just that it wasn't like that (pre-CBT) before. I only had one snapshot for the backup job......