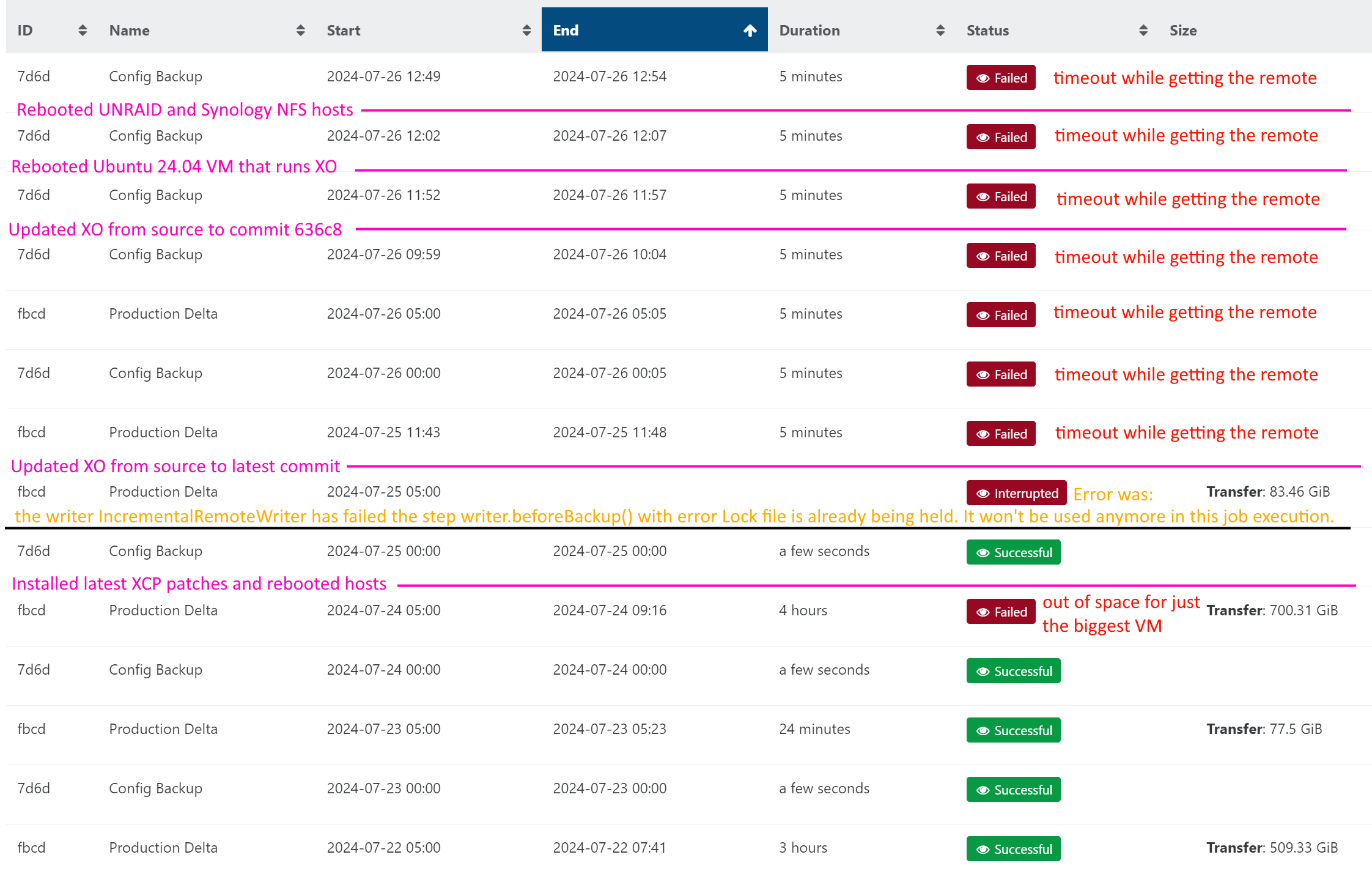

All NFS remotes started to timeout during backup but worked fine a few days ago

-

@CodeMercenary First off, sorry to hear about your personal issues. They need to take precedence.

If there is network contention, it's important that things not be impacted on backups because they utilize a lot of resources: network, memory, and CPU load.

That's why we always ran them over isolated network connections and at times of the day when VM activity was generally at a minimum. Make sure you have adequate CPU and memory on your hosts (run top or xtop). Also, iostat (I suggest adding the -x flag) can be very helpful in seeing if other resources are getting maxed out.

-

Since the nfs shares can be mounted on other hosts, I'd guess a fsid/clientid mismatch.

In the share, always specify the `fsid` export option. If you do not use it, the nfs server tries to determine a suitable id from the underlying mounts. It may not always be reliable, for example after an upgrade or other changes. Now, if you combine this with a client that uses the `hard` mount option and the fsid changes, it will not be possible to recover the mount as the client keeps asking for the old id.

Nfs3 uses rpcbind and nfs4 doesn't, though this shouldn't matter if your nfs server supports both protocols. With nfs4 you should not export the same directory twice. That is, do not export the root directory `/mnt/datavol` if `/mnt/datavol/dir1` and `/mnt/datavol/dir2` are exported.

So to fix this, you can adjust your exports (fsid, nesting) and the nfs mount option (to `soft`), reboot the nfs server and client and see if it works.
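To check an export list for the nesting problem described above, here is a minimal sketch. The function name `find_nested_exports` is hypothetical, and the paths are just the example paths from this post; on a real server the list would have to be taken from your own exports.

```shell
#!/usr/bin/env bash
# Sketch: flag export paths that sit inside another exported path.
# find_nested_exports is a made-up helper, not part of nfs-utils.
find_nested_exports() {
    local parent child
    for parent in "$@"; do
        for child in "$@"; do
            # A child is nested when it starts with "<parent>/".
            if [ "$child" != "$parent" ] && [ "${child#"${parent%/}"/}" != "$child" ]; then
                echo "nested: $child is inside $parent"
            fi
        done
    done
}

# Example paths from the post above.
find_nested_exports /mnt/datavol /mnt/datavol/dir1 /mnt/datavol/dir2
```

If the function prints nothing, none of the given paths is nested inside another.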

-

@Forza That all is good advice. Again, the showmount command is a good utility that can show you right away if you can see/mount the storage device from your host.

-

@Forza Thank you. I'll go learn about fsid and implement that. I'm very new to NFS so I appreciate the input.

I do not export it more than once, no nested exports.

I was out of the office yesterday but since making those changes the other day, the backups to UNRAID are working but the backups to the Synology array are failing with `EIO: error close` and `EIO: i/o error, unlink '/run/xo-server/mounts/<guid>/xo-vm-backups/<guid>/vdis/<guid>/<guid>/.20240802T110016Z.vhd'`

Going to investigate that today too. That's a brand-new side quest in this adventure.

I also have a couple VMs that are failing with `VDI must be free or attached to exactly one VM` but I suspect that's due to me having to reboot the server to get the prior backup unstuck.

-

@tjkreidl said in All NFS remotes started to timeout during backup but worked fine a few days ago:

> @Forza That all is good advice. Again, the showmount command is a good utility that can show you right away if you can see/mount the storage device from your host.

I do not think `showmount` lists nfs4 only clients. At least not on my system. For nfs4 I can see connected clients via `/proc/fs/nfsd/clients/`

Debug logging on a Linux nfs server can be controlled with `rpcdebug`. To enable all debug output for the nfsd you can use `rpcdebug -m nfsd all`. Though on the Unraid/Synology it might be different.

In dmesg it would look like this:

    [465664.478823] __find_in_sessionid_hashtbl: 1722337149:795964100:1:0
    [465664.478829] nfsd4_sequence: slotid 0
    [465664.478831] check_slot_seqid enter. seqid 4745 slot_seqid 4744
    [465664.478928] found domain 10.5.1.1
    [465664.478937] found fsidtype 7
    [465664.478941] found fsid length 24
    [465664.478944] Path seems to be </mnt/6TB/volume/haiku>
    [465664.478946] Found the path /mnt/6TB/volume/haiku
    [465664.478994] --> nfsd4_store_cache_entry slot 00000000e3c4c7e5

-

I used `exportfs -v` on UNRAID to look at the share options, without changing anything first, and they were set to:

`(async,wdelay,hide,no_subtree_check,fsid=101,sec=sys,rw,secure,root_squash,no_all_squash)`

So, `fsid` was already being used.

The UNRAID remote has worked the last couple days, since I set `soft,timeo=15,retrans=4`. I'm curious to see how it does over the weekend.

I'm having trouble getting the VDIs detached from dom0.
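The same check of the export options can be done with a small helper instead of reading the string by eye. This is only a sketch: `check_export_opts` is a made-up name, and it expects the parenthesised option string from `exportfs -v` to be pasted in by hand rather than parsing the command's output itself.

```shell
#!/usr/bin/env bash
# Sketch: report the fsid and the sync/async setting from an export
# option string. check_export_opts is a hypothetical helper.
check_export_opts() {
    # Strip the surrounding parentheses, then split on commas.
    local opts="${1#"("}"
    opts="${opts%")"}"
    local fsid=""
    local mode=""
    local opt
    local IFS=','
    for opt in $opts; do
        case "$opt" in
            fsid=*) fsid="${opt#fsid=}" ;;
            sync|async) mode="$opt" ;;
        esac
    done
    echo "fsid: ${fsid:-not set}"
    echo "write mode: ${mode:-not listed}"
}

# Option string as reported for the UNRAID share above.
check_export_opts "(async,wdelay,hide,no_subtree_check,fsid=101,sec=sys,rw,secure,root_squash,no_all_squash)"
```

For the UNRAID string this reports `fsid: 101` and `write mode: async`.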

-

@CodeMercenary said in All NFS remotes started to timeout during backup but worked fine a few days ago:

> I used `exportfs -v` on UNRAID to look at the share options, without changing anything first, and they were set to:
>
> `(async,wdelay,hide,no_subtree_check,fsid=101,sec=sys,rw,secure,root_squash,no_all_squash)`
>
> So, `fsid` was already being used.

This is good.

> I'm having trouble getting the VDIs detached from dom0.

I was thinking the issue was using the nfs as a Remote (for storing backups), not as an SR (storage repository for VM/VDI). Those are very different things with different needs. Using `soft` on a SR can cause corruption in your VDI. I would also pay attention to the `async` export option as this can lead to corruption if your nfs server crashes or loses power (unless you have battery backups, etc).

-

@Forza Seems you are correct about

showmount. On UNRAID running v4showmountsays there are no clients connected. I previously assumed that meant XO only connected during the backup. When I look at/proc/fs/nfsd/clientsI see the connections.On Synology, running v3,

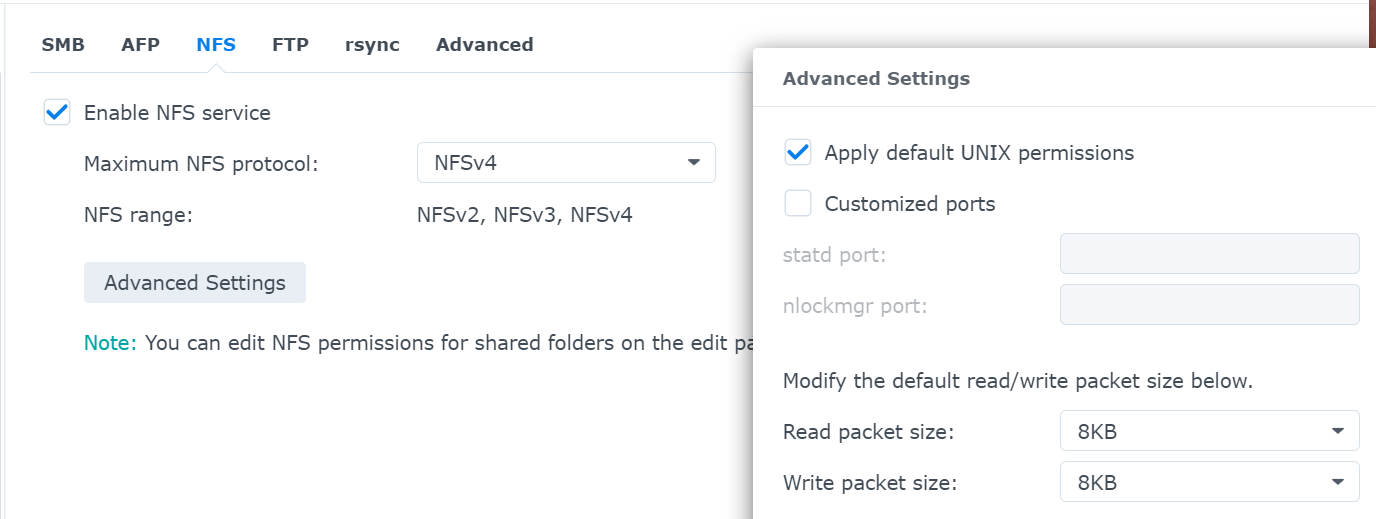

showmountdoes show the XO IP connected. Synology is supposed to support v4 and I have the share set to allow v4 but XO had trouble connecting that way. Synology is pretty limited in what options it lets me set for NFS shares.
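The `/proc/fs/nfsd/clients` check can be scripted. A minimal sketch, assuming a Linux NFS server whose kernel exposes one subdirectory per client with an `info` file inside; that layout is an assumption and may not hold on UNRAID or Synology. The function name `list_nfs4_clients` is made up for illustration, and reading these files normally needs root.

```shell
#!/usr/bin/env bash
# Sketch: print the info file of every NFSv4 client the server knows.
# list_nfs4_clients is a hypothetical helper; the per-client "info"
# file is an assumption about the kernel's nfsd interface.
list_nfs4_clients() {
    # The directory is a parameter; the default is the path from this thread.
    local dir="${1:-/proc/fs/nfsd/clients}"
    local info
    local found=0
    for info in "$dir"/*/info; do
        # Skip the unexpanded glob and files we cannot read.
        [ -r "$info" ] || continue
        found=1
        echo "== ${info%/info}"
        cat "$info"
    done
    [ "$found" -eq 1 ] || echo "no NFSv4 clients found under $dir"
}

list_nfs4_clients
```

Run on the NFS server itself, this lists connected v4 clients even when `showmount` reports none.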

Synology doesn't have `rpcdebug` available. I'll see if I can figure out how to get more logging info about NFS.

-

@Forza Sorry, you were correct, I just mixed in another new issue. NFS is currently used only for backups. All my SRs are in local storage. I now have backups failing not only because of the NFS issue but also because of the VDI issue. I think the VDI issue is a side effect of the NFS problem: the backup got interrupted, so the VDI is stuck attached to dom0. I should have made that clearer or never mentioned the VDI issue at all.

-

@Forza said in All NFS remotes started to timeout during backup but worked fine a few days ago:

> I would also pay attention to the async export option as this can lead to corruption if your nfs server crashes or loses power (unless you have battery backups, etc).

I do have battery backup for both NFS hosts. `async` must be a default since I didn't set it. I have no problem disabling that if it will improve reliability. If it makes a difference, both systems are sharing folders on HDD, not SSDs.

Seems the Synology does support v4. The other day I think I tried v4.2 and it failed so I dropped back to v3 and that worked. I hadn't tried v4.1 and v4. Maybe it'll work better with v4 than it has with v3.

-

@CodeMercenary That's OK. I just didn't want to confuse things and give you bad advice.

-

I got the same thing two nights ago.

"timeout while getting the remote 0d2da3ba-fde1-blah-blah-blah"

I rebooted the NFS server and everything worked fine last night.

-

So far it seems like there were two things that fixed this.

Setting this in the XO NFS options for the two remotes: `soft,timeo=15,retrans=4`

Adding `vers=4` to the XO NFS option for the Synology connection, as opposed to `vers=4.2` or `vers=3`. It seems the Synology supports version 2, 3 and 4 but not 4.1 or 4.2. So far, version 4 seems to be working better than 3 did.

-

A bit after this I started having trouble again. I scrubbed my delta backup sets, switched to using SMB to access the same share and so far I'm a week in with no trouble at all. Remains to be seen if the strange failures will crop up again but so far I think this is the longest I've gone with the delta backups not having some random failure. Usually, it was a backup getting stuck in Started status for a day or two then switching to Interrupted because I'd reboot the XO VM to unstick it, then one of the VM backups will fail because a VDI is attached to DOM0. I know theoretically NFS is better than SMB but for now the one that breaks least often is the better option. Of course, maybe my issue had nothing to do with NFS but for now it's felt like SMB is more reliable.
