Live Migration Fails: MIRROR_FAILED

-

Hi,

I'm trying to live migrate a large VM from one host to another. XO (built from source, latest commit a7d7c) estimates that the migration will take over 32 hours. However, after 20+ hours, the live migration fails at about 48% complete.

XO shows the following error under Settings > Logs:

vm.migrate { "vm": "3797df5e-695a-00be-86bd-318a7c48860f", "mapVifsNetworks": { "de9a4140-0b67-314b-6fed-0bbd00d4cf74": "eefd492c-2bef-502e-79e7-f5123209d887", "cbea6ad6-cb84-5536-e021-d2bdabda6348": "34f336c5-b05d-4258-20d0-984523113b85" }, "migrationNetwork": "02bfbb0b-5f1a-e47e-d50b-28f0f7c50b11", "sr": "8da3d03e-4d2c-bab2-cd94-0d15168a58f3", "targetHost": "2d926060-41bf-4e17-ba76-bac9b1112257" } { "code": "MIRROR_FAILED", "params": [ "OpaqueRef:7069c225-0bf2-433c-a255-0fab035ea70b" ], "task": { "uuid": "f31eafed-faea-2eb4-b9e9-d45cc7967498", "name_label": "Async.VM.migrate_send", "name_description": "", "allowed_operations": [], "current_operations": { "DummyRef:|e8163dcb-8c54-4907-a0de-97d36cf2127e|task.cancel": "cancel" }, "created": "20241014T04:40:24Z", "finished": "20241015T00:09:27Z", "status": "failure", "resident_on": "OpaqueRef:d3f118d9-3aef-4d16-94d5-6d6fa22f84b9", "progress": 1, "type": "<none/>", "result": "", "error_info": [ "MIRROR_FAILED", "OpaqueRef:7069c225-0bf2-433c-a255-0fab035ea70b" ], "other_config": { "mirror_failed": "b607eebc-49ad-4fc0-ad4a-b605fedfc51e" }, "subtask_of": "OpaqueRef:NULL", "subtasks": [], "backtrace": "(((process xapi)(filename ocaml/xapi/xapi_vm_migrate.ml)(line 1556))((process xapi)(filename lib/xapi-stdext-pervasives/pervasiveext.ml)(line 24))((process xapi)(filename lib/xapi-stdext-pervasives/pervasiveext.ml)(line 35))((process xapi)(filename ocaml/xapi/message_forwarding.ml)(line 131))((process xapi)(filename lib/xapi-stdext-pervasives/pervasiveext.ml)(line 24))((process xapi)(filename ocaml/xapi/rbac.ml)(line 205))((process xapi)(filename ocaml/xapi/server_helpers.ml)(line 95)))" }, "message": "MIRROR_FAILED(OpaqueRef:7069c225-0bf2-433c-a255-0fab035ea70b)", "name": "XapiError", "stack": "XapiError: MIRROR_FAILED(OpaqueRef:7069c225-0bf2-433c-a255-0fab035ea70b) at Function.wrap (file:///opt/xen-orchestra/packages/xen-api/_XapiError.mjs:16:12) at default (file:///opt/xen-orchestra/packages/xen-api/_getTaskResult.mjs:13:29) at Xapi._addRecordToCache (file:///opt/xen-orchestra/packages/xen-api/index.mjs:1041:24) at file:///opt/xen-orchestra/packages/xen-api/index.mjs:1075:14 at Array.forEach (<anonymous>) at Xapi._processEvents (file:///opt/xen-orchestra/packages/xen-api/index.mjs:1065:12) at Xapi._watchEvents (file:///opt/xen-orchestra/packages/xen-api/index.mjs:1238:14)" }

On the source host, /var/log/xensource.log shows the following when the error occurs:

And on the target host, /var/log/xensource.log shows the following:

I'd appreciate any help identifying why the live migration is failing.

Thank you,

SW

-

Hi,

This is not an XO issue, but a "normal" problem when you try to move a big VM: a live storage migration means the blocks have to be replicated to the other side while the VM is running. If the VM writes faster than the mirror can keep up, the migration will fail. For such large VMs, I would go for warm migration.
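To make that reasoning concrete, here is a deliberately simplified sketch. It is a toy model, not how XAPI or tapdisk actually schedules the mirror: it assumes a constant mirror throughput and a constant guest write rate, and the function name and all numbers are hypothetical.

```python
def mirror_catch_up_hours(disk_gib, mirror_mib_s, guest_write_mib_s):
    """Toy model: hours until the mirror catches up, or None if it never does.

    Assumes (hypothetically) that every MiB the guest writes is extra work
    for the mirror, so only the difference between the two rates makes
    progress on the initial copy.
    """
    net_mib_s = mirror_mib_s - guest_write_mib_s
    if net_mib_s <= 0:
        # The guest dirties data at least as fast as the mirror replicates it.
        return None
    return disk_gib * 1024 / net_mib_s / 3600


# Hypothetical figures: a 4 TiB disk over a link that sustains 60 MiB/s.
print(mirror_catch_up_hours(4096, 60, 10))  # finite estimate in hours
print(mirror_catch_up_hours(4096, 60, 80))  # None: the mirror never catches up
```

Under this model, the closer the guest write rate gets to the mirror throughput, the longer the estimate grows, which is consistent with the advice to prefer warm migration for very large, busy VMs.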

-

In the log of the source host I see:

Oct 14 20:09:06 XCP25 xapi: [error||3 ||storage_migrate] Tapdisk mirroring has failed

Can you also provide the part of /var/log/SMlog that corresponds to the migration time? As it was yesterday, you may need to look in /var/log/SMlog.1.
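One possible way to pull out only the relevant part of the log is sketched below. It assumes SMlog lines begin with the same "Oct 14 20:09:06" style timestamp as the xensource.log line quoted in this thread; the helper names are hypothetical.

```python
from datetime import datetime, timedelta

STAMP_FORMAT = "%b %d %H:%M:%S"
STAMP_LEN = len("Oct 14 20:09:06")


def parse_stamp(line):
    """Return the leading timestamp of a log line, or None if it has none."""
    try:
        return datetime.strptime(line[:STAMP_LEN], STAMP_FORMAT)
    except ValueError:
        return None


def lines_near(lines, when, minutes=5):
    """Keep lines whose timestamp is within `minutes` of `when`.

    The year is absent from the log format, so both sides default to the
    same placeholder year and the comparison only holds within one year.
    """
    center = datetime.strptime(when, STAMP_FORMAT)
    margin = timedelta(minutes=minutes)
    kept = []
    for line in lines:
        stamp = parse_stamp(line)
        if stamp is not None and abs(stamp - center) <= margin:
            kept.append(line)
    return kept
```

For example, `lines_near(open("/var/log/SMlog.1"), "Oct 14 20:09:06")` would return the lines logged within five minutes of the mirror failure, assuming the rotated file covers that time.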

Thx

-

@olivierlambert Thank you! Is it possible to simply attach the shared from the source pool to the new pool without having to do a live/warm migration?

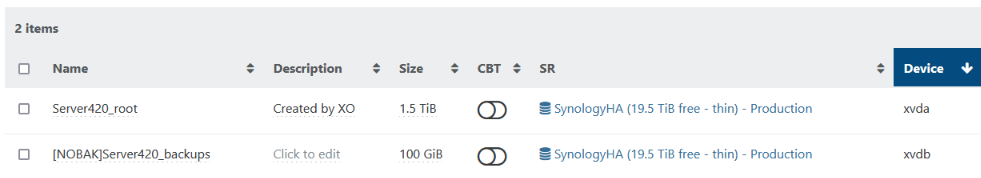

We are only moving this VM from one pool to a new pool but the VM disks are going to remain on the shared storage (NFS):

The reason I'm moving pools is that we have newer servers.

Thank you,

SW

-

XCP-ng doesn't know it's the same SR. So either you add the new host to the existing pool and do a regular live migration without any data move (and then eject the old host), or, if the hardware is very different (different CPU vendor, or NIC counts too different), warm migration is likely the easiest way.

Alternatively, if there's no complex VDI chain, you could move the VHD file to the right folder. But this can be tricky and dangerous if you don't know exactly what you are doing.

-

@stevewest15 Thx for the SMlog. I don't see anything suspicious.