GPU passthrough on ASRock Rack B650D4U3-2L2Q will not work

-

@Teddy-Astie It's an ASUS Prime H370F with an older 8-core Intel Core i7-9700K.

-

I have GPU PCIe passthrough working on ASRock Rack WRX80D8-2T (Threadripper) and X470D4U2 (Ryzen) boards, passing through multiple Radeon Pro 7000 series GPUs.

Rodney

-

@ravenet Do you remember whether you only enabled SVM and IOMMU? Or did you enable the whole bunch: SR-IOV, ACS, AER, ARI support, and Resizable BAR support?

-

@steff22

As far as I know, SVM, IOMMU, SR-IOV and POSSIBLY Resizable BAR were enabled.

I can't check the Threadripper system until the weekend, as it runs production loads. I'll reboot one of the Ryzen boxes tonight to verify what I enabled there, but it was basically defaults other than making sure the 3 or 4 items above were enabled.

-

@ravenet OK, thanks.

It didn't work here.

But there may be a bug in the BIOS, since it is a beta and the first BIOS that supports the AMD Ryzen 9000 series. The strange thing, though, is that it works stably with Proxmox without any errors of any kind.

-

It's not strange: those are two VERY different systems in how they work.

-

@olivierlambert There is a slight software difference, yes, but both are hypervisors.

So the fact that one of them works means that it should be possible to make it happen with my hardware.

There isn't much you do with Proxmox other than enabling IOMMU in GRUB, blacklisting the NVIDIA drivers, and setting disable_vga for the GPU card.

Is it possible to blacklist the NVIDIA drivers on XCP-ng?

And is there no difference between enabling PCI passthrough in Xen Orchestra and doing it from the command line? I think I have tried both ways, but I'm not sure whether I had enabled everything in the BIOS at that time.
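For reference, the command-line route described in the XCP-ng documentation roughly comes down to hiding the device from Dom0 with `xen-cmdline`, rebooting, checking `xl pci-assignable-list`, and then attaching the device to the VM through `other-config:pci`. The sketch below only prints those commands (a dry run) for a list of PCI addresses; the function names and the `VM_UUID` placeholder are hypothetical, and the addresses are the GTX 1070 Ti functions reported by this host's `xl pci-assignable-list`.

```shell
#!/bin/sh
# Dry-run sketch: print (never execute) the XCP-ng CLI steps for passing
# PCI devices to a VM. Function names and VM_UUID are hypothetical.

# Build "xen-pciback.hide=(BDF1)(BDF2)..." from full BDF addresses.
pciback_hide_arg() {
  arg="xen-pciback.hide="
  for bdf in "$@"; do
    arg="${arg}(${bdf})"
  done
  printf '%s\n' "$arg"
}

# Build the other-config:pci value "0/BDF1,0/BDF2,..."; the "0/" prefix
# follows the examples in the XCP-ng docs.
pci_other_config() {
  value=""
  for bdf in "$@"; do
    if [ -n "$value" ]; then value="${value},"; fi
    value="${value}0/${bdf}"
  done
  printf '%s\n' "$value"
}

# Print the command sequence for one VM and a set of devices.
print_passthrough_steps() {
  vm_uuid=$1; shift
  echo "/opt/xensource/libexec/xen-cmdline --set-dom0 \"$(pciback_hide_arg "$@")\""
  echo "reboot"
  echo "xl pci-assignable-list"
  echo "xe vm-param-set other-config:pci=$(pci_other_config "$@") uuid=$vm_uuid"
}

print_passthrough_steps VM_UUID 0000:01:00.0 0000:01:00.1
```

Printing instead of executing keeps the sketch safe to try anywhere; on a real host each line would be run by hand, with the reboot in between.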

-

No, it's not a slight difference: it's a completely different design. In XCP-ng, you first boot Xen, a kind of microkernel. Only then do you boot a specific VM, the Dom0, which is a PV guest. From this guest, you get the API, etc. But even though Dom0 has access to the hardware for I/O (NICs, disks, GPUs…), it's still a VM, and it doesn't have access to all the CPUs and memory of the physical machine.

This means Xen is always "in the middle", controlling who gets what (cores and RAM). This is a great design from a security perspective, because there is always a small piece of software (Xen) controlling what's going on.
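One way to see this on a running host is the Xen boot command line printed by `xl dmesg`, where Dom0's memory and vCPU count are capped explicitly. The sketch below extracts `dom0_mem` and `dom0_max_vcpus` from such a line; `xen_param` is a hypothetical helper, and the sample string is the Xen command line from this host's `xl dmesg` output.

```shell
#!/bin/sh
# Sketch: read Dom0 resource caps out of a Xen command line string.
# xen_param is a hypothetical helper, not an XCP-ng tool.
set -f  # disable globbing while word-splitting the command line

# Print VALUE for the first KEY=VALUE word in CMDLINE; fail if absent.
xen_param() {
  key=$1
  cmdline=$2
  for word in $cmdline; do
    case $word in
      "$key"=*) printf '%s\n' "${word#*=}"; return 0 ;;
    esac
  done
  return 1
}

XEN_CMDLINE='dom0_mem=2624M,max:2624M watchdog ucode=scan dom0_max_vcpus=1-8 crashkernel=256M,below=4G console=vga vga=mode-0x0311'

echo "Dom0 memory: $(xen_param dom0_mem "$XEN_CMDLINE")"
echo "Dom0 vCPUs:  $(xen_param dom0_max_vcpus "$XEN_CMDLINE")"
```

With the same log reporting 32 CPUs and 31861 MB of system RAM, these values show that Dom0 here gets at most 8 vCPUs and 2624 MB, with Xen holding the rest.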

With KVM, it's vastly different. You boot a full Linux kernel, and then you load a small module (KVM). The "host" really is the host, with access to all the memory and CPUs. There's a lot less isolation (and therefore less security), but the upside is that there's nobody in the middle to deal with.

I can assure you that PCI passthrough is really complex and depends on many factors, even on how the BIOS is configured and how much is handled by the motherboard manufacturer.

So knowing it works with Proxmox is like saying "it works on Windows"; it's that different. It doesn't mean it's impossible with Xen, but if it can be done, the way it's done will be vastly different.

-

@olivierlambert OK, I didn't realize there was such a big difference. I actually like everything better with XCP-ng and Xen Orchestra.

All testing has been done with UEFI. Is it just a waste of time to try legacy BIOS on XCP-ng and in the VM OS?

Does it still make sense to blacklist the NVIDIA drivers in the XCP-ng Dom0 to isolate the GPU even more?

But is XCP-ng more like VMware ESXi in the way everything is handled? It doesn't work with VMware ESXi either.

-

Yes, you could say XCP-ng is a lot closer to VMware than to KVM (even if VMware is more advanced in some aspects, the architecture is roughly similar).

Anyway, that doesn't mean it will never work; however, it might be less straightforward than with KVM until we find exactly what's causing the problem.

-

@steff22 Assuming `xen-pciback.hide` was previously set, could you try this workaround (no guarantee it'll work, since every motherboard and BIOS has its quirks):

```
/opt/xensource/libexec/xen-cmdline --set-dom0 pci=realloc
reboot
```

-
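After the reboot, a quick sanity check is to confirm that both parameters actually reached the Dom0 kernel command line (on a live Dom0, the contents of `/proc/cmdline`). The sketch below tests a command line string for exact parameters; `has_param` is a hypothetical helper, and the sample is the Dom0 kernel command line recorded in this host's `kern.log`.

```shell
#!/bin/sh
# Sketch: check that exact parameters are present on a kernel command line.
# has_param is a hypothetical helper; on a live Dom0 you could pass
# "$(cat /proc/cmdline)" instead of the sample string.
set -f  # disable globbing while word-splitting the command line

# Succeed if PARAM appears as a whole word in CMDLINE.
has_param() {
  param=$1
  cmdline=$2
  for word in $cmdline; do
    [ "$word" = "$param" ] && return 0
  done
  return 1
}

DOM0_CMDLINE='root=LABEL=root-zcnzyl ro nolvm hpet=disable console=hvc0 console=tty0 quiet vga=785 splash plymouth.ignore-serial-consoles xen-pciback.hide=(0000:01:00.0)(0000:01:00.1) pci=realloc'

for p in 'pci=realloc' 'xen-pciback.hide=(0000:01:00.0)(0000:01:00.1)'; do
  if has_param "$p" "$DOM0_CMDLINE"; then
    echo "present: $p"
  else
    echo "MISSING: $p"
  fi
done
```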

@tuxen Unfortunately, it didn't work. I used Xen Orchestra to set pciback.hide the last time, but I tried the old-fashioned way first, with the command line.

-

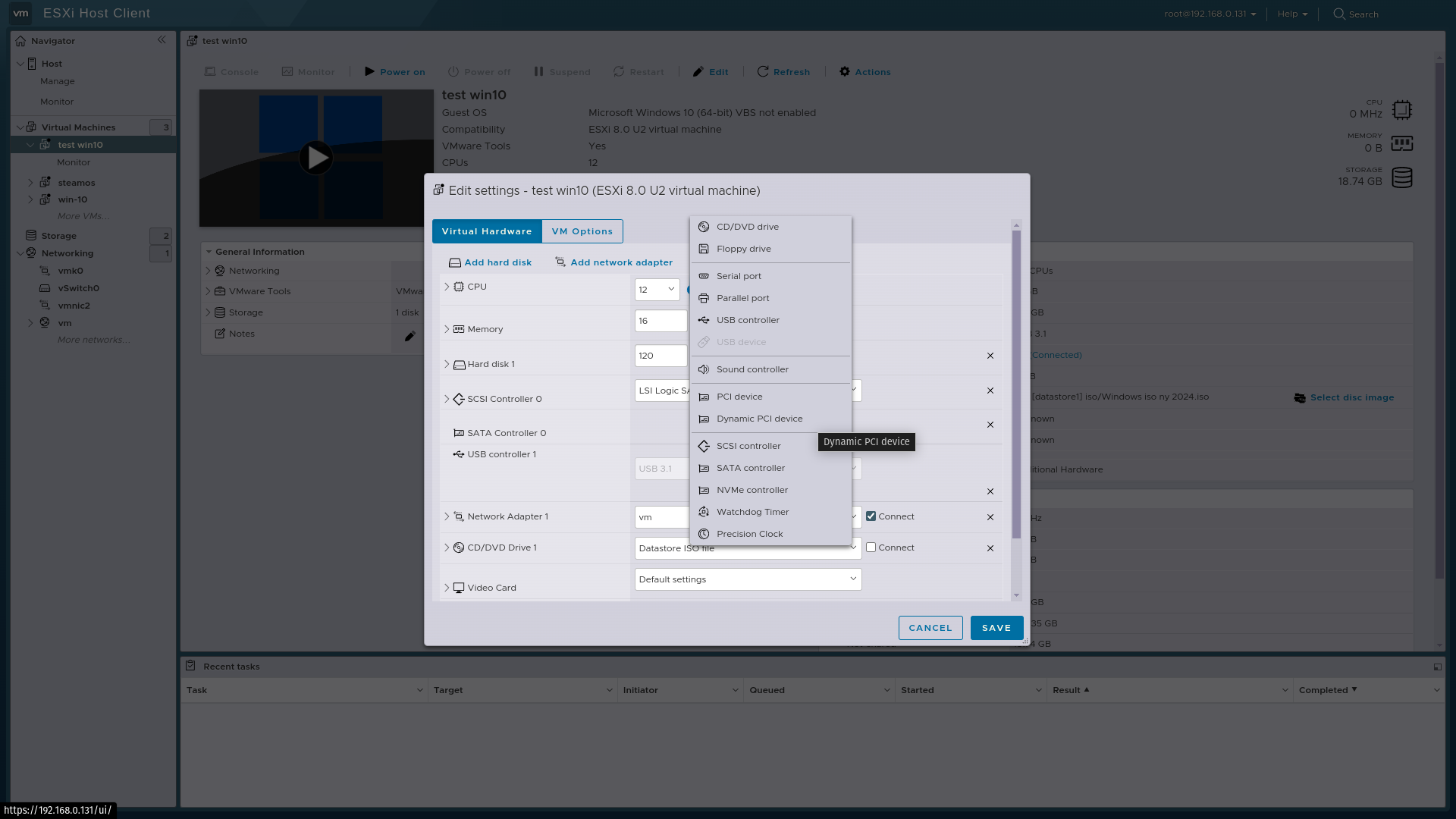

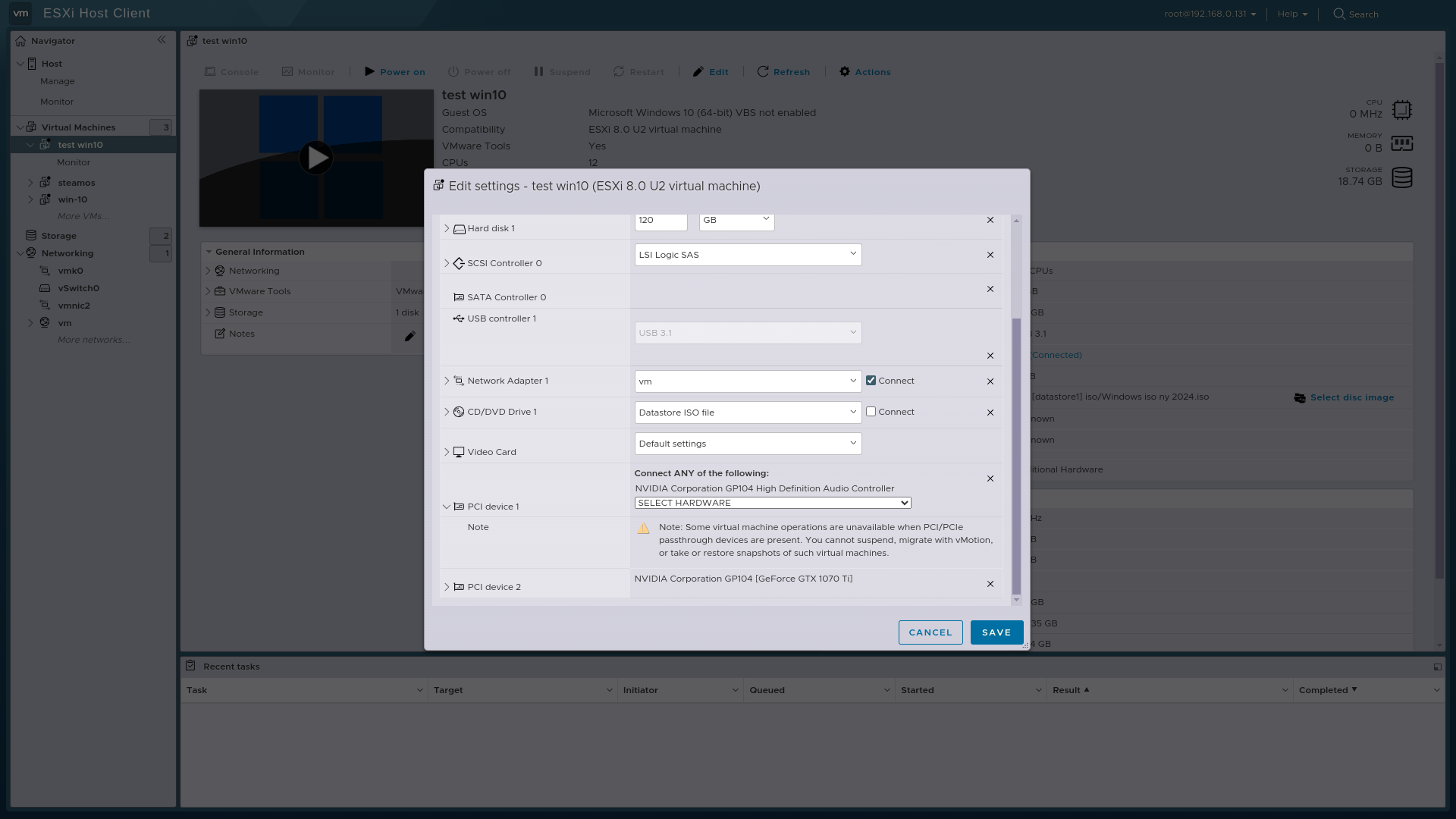

I've now got GPU passthrough working with VMware ESXi 8.0.3.

There was an option to attach it as a dynamic PCI device.

Isn't this an indication that the problem lies in how XCP-ng handles PCI passthrough?

Both VMware ESXi 8.0.3 and Proxmox have two different ways to attach PCI devices to a VM. Only one of them works; the other results in error 43 in Windows.

But as I said, I don't know anything about what the different choices do.

-

Do you have more information on that VMware option?

-

@olivierlambert No, unfortunately, nothing but this picture.

-

@Teddy-Astie Do you have any idea what it could be, and why it makes the device work?

-

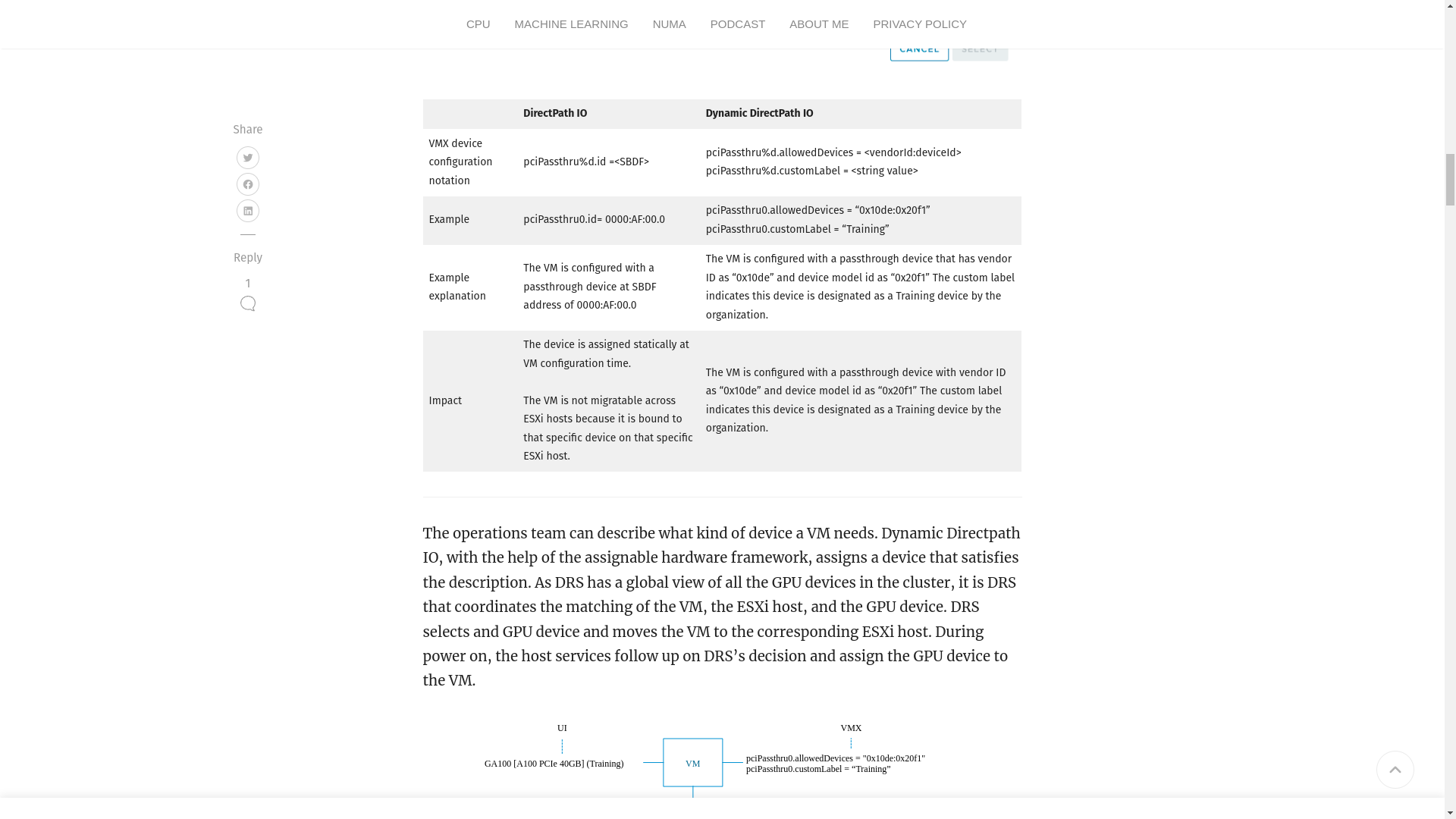

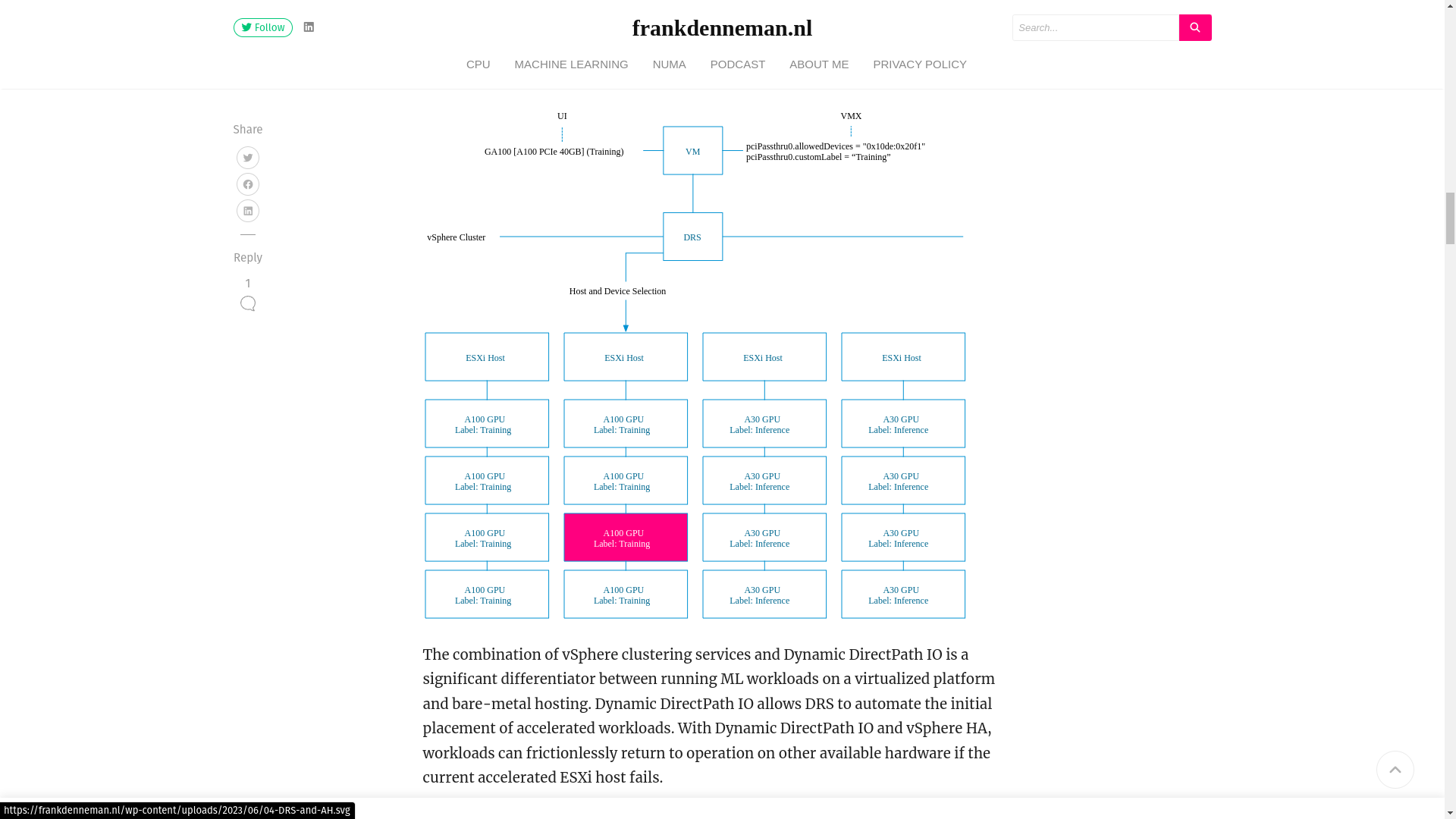

@olivierlambert I found this article, which explains a bit about dynamic PCI devices. From what I understood, dynamic PCI devices are newer and more accurate, and they use DRS.

-

@steff22 What's the output of `lspci -k` and `xl pci-assignable-list`? Also, the system log output regarding GPU and IOMMU initialization would be very useful:

```
egrep -i '(nvidia|vga|video|pciback)' /var/log/kern.log
xl dmesg
```

Tux
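When reading that log output, two details are worth pulling out: which device the kernel picked as the boot VGA device, and which devices `pciback` seized. The sketch below extracts both with `sed` from log text on stdin; the function names are hypothetical, and the sample lines are copied from the `kern.log` output posted in this thread.

```shell
#!/bin/sh
# Sketch: summarise vgaarb/pciback lines from a kernel log read on stdin.
# boot_vga and seized_devices are hypothetical helpers.

# Print the PCI address the kernel marked as the boot VGA device.
boot_vga() {
  sed -n 's/.*pci \([0-9a-f:.]*\): vgaarb: setting as boot VGA device.*/\1/p'
}

# Print each PCI address that pciback reports as "seizing device", once.
seized_devices() {
  sed -n 's/.*pciback \([0-9a-f:.]*\): seizing device.*/\1/p' | sort -u
}

SAMPLE='Nov 8 17:20:20 xcp-ng-Asrock kernel: [ 0.407742] pci 0000:09:00.0: vgaarb: setting as boot VGA device
Nov 8 17:20:20 xcp-ng-Asrock kernel: [ 0.422576] pciback 0000:01:00.0: seizing device
Nov 8 17:20:20 xcp-ng-Asrock kernel: [ 0.422576] pciback 0000:01:00.1: seizing device'

printf '%s\n' "$SAMPLE" | boot_vga
printf '%s\n' "$SAMPLE" | seized_devices
```

On this host, that identifies the onboard ASPEED controller (09:00.0) as the boot VGA device, while the GTX 1070 Ti functions (01:00.0 and 01:00.1) are held by pciback.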

-

@tuxen said in GPU passthrough on ASRock Rack B650D4U3-2L2Q will not work:

> lspci -k and xl pci-assignable-list

```
xcp-ng-Asrock ~]# lspci -k
00:00.0 Host bridge: Advanced Micro Devices, Inc. [AMD] Device 14d8
	Subsystem: Advanced Micro Devices, Inc. [AMD] Device 14d8
00:00.2 IOMMU: Advanced Micro Devices, Inc. [AMD] Device 14d9
	Subsystem: Advanced Micro Devices, Inc. [AMD] Device 14d9
00:01.0 Host bridge: Advanced Micro Devices, Inc. [AMD] Device 14da
00:01.1 PCI bridge: Advanced Micro Devices, Inc. [AMD] Device 14db
	Kernel driver in use: pcieport
00:01.2 PCI bridge: Advanced Micro Devices, Inc. [AMD] Device 14db
	Kernel driver in use: pcieport
00:02.0 Host bridge: Advanced Micro Devices, Inc. [AMD] Device 14da
00:02.1 PCI bridge: Advanced Micro Devices, Inc. [AMD] Device 14db
	Kernel driver in use: pcieport
00:02.2 PCI bridge: Advanced Micro Devices, Inc. [AMD] Device 14db
	Kernel driver in use: pcieport
00:03.0 Host bridge: Advanced Micro Devices, Inc. [AMD] Device 14da
00:04.0 Host bridge: Advanced Micro Devices, Inc. [AMD] Device 14da
00:08.0 Host bridge: Advanced Micro Devices, Inc. [AMD] Device 14da
00:08.1 PCI bridge: Advanced Micro Devices, Inc. [AMD] Device 14dd
	Kernel driver in use: pcieport
00:08.3 PCI bridge: Advanced Micro Devices, Inc. [AMD] Device 14dd
	Kernel driver in use: pcieport
00:14.0 SMBus: Advanced Micro Devices, Inc. [AMD] FCH SMBus Controller (rev 71)
	Subsystem: Advanced Micro Devices, Inc. [AMD] FCH SMBus Controller
	Kernel driver in use: piix4_smbus
	Kernel modules: i2c_piix4
00:14.3 ISA bridge: Advanced Micro Devices, Inc. [AMD] FCH LPC Bridge (rev 51)
	Subsystem: Advanced Micro Devices, Inc. [AMD] FCH LPC Bridge
00:18.0 Host bridge: Advanced Micro Devices, Inc. [AMD] Device 14e0
00:18.1 Host bridge: Advanced Micro Devices, Inc. [AMD] Device 14e1
00:18.2 Host bridge: Advanced Micro Devices, Inc. [AMD] Device 14e2
00:18.3 Host bridge: Advanced Micro Devices, Inc. [AMD] Device 14e3
00:18.4 Host bridge: Advanced Micro Devices, Inc. [AMD] Device 14e4
00:18.5 Host bridge: Advanced Micro Devices, Inc. [AMD] Device 14e5
00:18.6 Host bridge: Advanced Micro Devices, Inc. [AMD] Device 14e6
00:18.7 Host bridge: Advanced Micro Devices, Inc. [AMD] Device 14e7
01:00.0 VGA compatible controller: NVIDIA Corporation GP104 [GeForce GTX 1070 Ti] (rev a1)
	Subsystem: ZOTAC International (MCO) Ltd. Device 2445
	Kernel driver in use: pciback
01:00.1 Audio device: NVIDIA Corporation GP104 High Definition Audio Controller (rev a1)
	Subsystem: ZOTAC International (MCO) Ltd. Device 2445
	Kernel driver in use: pciback
02:00.0 Ethernet controller: Broadcom Inc. and subsidiaries BCM57502 NetXtreme-E 10Gb/25Gb/40Gb/50Gb Ethernet (rev 12)
	Subsystem: ASRock Incorporation Device 1752
	Kernel driver in use: bnxt_en
	Kernel modules: bnxt_en
02:00.1 Ethernet controller: Broadcom Inc. and subsidiaries BCM57502 NetXtreme-E 10Gb/25Gb/40Gb/50Gb Ethernet (rev 12)
	Subsystem: ASRock Incorporation Device 1752
	Kernel driver in use: bnxt_en
	Kernel modules: bnxt_en
03:00.0 PCI bridge: Advanced Micro Devices, Inc. [AMD] 600 Series Chipset PCIe Switch Upstream Port (rev 01)
	Kernel driver in use: pcieport
04:00.0 PCI bridge: Advanced Micro Devices, Inc. [AMD] 600 Series Chipset PCIe Switch Downstream Port (rev 01)
	Kernel driver in use: pcieport
04:01.0 PCI bridge: Advanced Micro Devices, Inc. [AMD] 600 Series Chipset PCIe Switch Downstream Port (rev 01)
	Kernel driver in use: pcieport
04:02.0 PCI bridge: Advanced Micro Devices, Inc. [AMD] 600 Series Chipset PCIe Switch Downstream Port (rev 01)
	Kernel driver in use: pcieport
04:03.0 PCI bridge: Advanced Micro Devices, Inc. [AMD] 600 Series Chipset PCIe Switch Downstream Port (rev 01)
	Kernel driver in use: pcieport
04:04.0 PCI bridge: Advanced Micro Devices, Inc. [AMD] 600 Series Chipset PCIe Switch Downstream Port (rev 01)
	Kernel driver in use: pcieport
04:08.0 PCI bridge: Advanced Micro Devices, Inc. [AMD] 600 Series Chipset PCIe Switch Downstream Port (rev 01)
	Kernel driver in use: pcieport
04:0c.0 PCI bridge: Advanced Micro Devices, Inc. [AMD] 600 Series Chipset PCIe Switch Downstream Port (rev 01)
	Kernel driver in use: pcieport
04:0d.0 PCI bridge: Advanced Micro Devices, Inc. [AMD] 600 Series Chipset PCIe Switch Downstream Port (rev 01)
	Kernel driver in use: pcieport
05:00.0 USB controller: Renesas Technology Corp. uPD720201 USB 3.0 Host Controller (rev 03)
	Subsystem: Renesas Technology Corp. uPD720201 USB 3.0 Host Controller
	Kernel driver in use: xhci_hcd
	Kernel modules: xhci_pci
06:00.0 Ethernet controller: Intel Corporation I210 Gigabit Network Connection (rev 03)
	Subsystem: ASRock Incorporation Device 1533
	Kernel driver in use: igb
	Kernel modules: igb
07:00.0 Ethernet controller: Intel Corporation I210 Gigabit Network Connection (rev 03)
	Subsystem: ASRock Incorporation Device 1533
	Kernel driver in use: igb
	Kernel modules: igb
08:00.0 PCI bridge: ASRock Incorporation Device 1150 (rev 06)
09:00.0 VGA compatible controller: ASPEED Technology, Inc. ASPEED Graphics Family (rev 52)
	Subsystem: ASRock Incorporation Device 2000
0c:00.0 USB controller: Advanced Micro Devices, Inc. [AMD] 600 Series Chipset USB 3.2 Controller (rev 01)
	Subsystem: ASMedia Technology Inc. Device 1142
	Kernel driver in use: xhci_hcd
	Kernel modules: xhci_pci
0d:00.0 SATA controller: Advanced Micro Devices, Inc. [AMD] 600 Series Chipset SATA Controller (rev 01)
	Subsystem: ASMedia Technology Inc. Device 1062
	Kernel driver in use: ahci
	Kernel modules: ahci
0e:00.0 Non-Volatile memory controller: Kingston Technology Company, Inc. FURY Renegade NVMe SSD with heatsink (rev 01)
	Subsystem: Kingston Technology Company, Inc. FURY Renegade NVMe SSD with heatsink
	Kernel driver in use: nvme
	Kernel modules: nvme
0f:00.0 VGA compatible controller: Advanced Micro Devices, Inc. [AMD/ATI] Device 13c0 (rev c1)
	Subsystem: Advanced Micro Devices, Inc. [AMD/ATI] Device 13c0
0f:00.1 Audio device: Advanced Micro Devices, Inc. [AMD/ATI] Rembrandt Radeon High Definition Audio Controller
	Subsystem: Advanced Micro Devices, Inc. [AMD/ATI] Rembrandt Radeon High Definition Audio Controller
0f:00.2 Encryption controller: Advanced Micro Devices, Inc. [AMD] Family 19h PSP/CCP
	Subsystem: Advanced Micro Devices, Inc. [AMD] Family 19h PSP/CCP
0f:00.3 USB controller: Advanced Micro Devices, Inc. [AMD] Device 15b6
	Subsystem: Advanced Micro Devices, Inc. [AMD] Device 15b6
	Kernel driver in use: xhci_hcd
	Kernel modules: xhci_pci
0f:00.4 USB controller: Advanced Micro Devices, Inc. [AMD] Device 15b7
	Subsystem: Advanced Micro Devices, Inc. [AMD] Device 15b6
	Kernel driver in use: xhci_hcd
	Kernel modules: xhci_pci
0f:00.5 Multimedia controller: Advanced Micro Devices, Inc. [AMD] ACP/ACP3X/ACP6x Audio Coprocessor (rev 62)
	Subsystem: Advanced Micro Devices, Inc. [AMD] ACP/ACP3X/ACP6x Audio Coprocessor
0f:00.6 Audio device: Advanced Micro Devices, Inc. [AMD] Family 17h/19h HD Audio Controller
	Subsystem: Advanced Micro Devices, Inc. [AMD] Device d601
10:00.0 USB controller: Advanced Micro Devices, Inc. [AMD] Device 15b8
	Subsystem: Advanced Micro Devices, Inc. [AMD] Device 15b6
	Kernel driver in use: xhci_hcd
	Kernel modules: xhci_pci
[17:29 xcp-ng-Asrock ~]# xl pci-assignable-list
0000:01:00.0
0000:01:00.1
[17:29 xcp-ng-Asrock ~]#
```

-
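To cross-check the `lspci -k` and `xl pci-assignable-list` outputs, one can list every function whose "Kernel driver in use" is `pciback` and compare it with the assignable list. The sketch below does this on captured text; `pciback_bdfs` and the sample variables are hypothetical, the sample lines are excerpts from the output in this thread, and it assumes PCI domain 0000 because `lspci` omits the domain by default.

```shell
#!/bin/sh
# Sketch: compare devices bound to pciback (from `lspci -k`) with
# `xl pci-assignable-list`. Helper and variable names are hypothetical.

# Read `lspci -k` text on stdin; print "0000:<bdf>" for each function bound
# to pciback (assumes PCI domain 0000, which lspci omits without -D).
pciback_bdfs() {
  awk '
    /^[0-9a-f][0-9a-f]:[0-9a-f][0-9a-f]\.[0-7] / { bdf = $1 }  # device header line
    /Kernel driver in use: pciback/ { print "0000:" bdf }      # indented driver line
  '
}

LSPCI_K='01:00.0 VGA compatible controller: NVIDIA Corporation GP104 [GeForce GTX 1070 Ti] (rev a1)
	Subsystem: ZOTAC International (MCO) Ltd. Device 2445
	Kernel driver in use: pciback
01:00.1 Audio device: NVIDIA Corporation GP104 High Definition Audio Controller (rev a1)
	Subsystem: ZOTAC International (MCO) Ltd. Device 2445
	Kernel driver in use: pciback
09:00.0 VGA compatible controller: ASPEED Technology, Inc. ASPEED Graphics Family (rev 52)
	Subsystem: ASRock Incorporation Device 2000'

ASSIGNABLE='0000:01:00.0
0000:01:00.1'

bound=$(printf '%s\n' "$LSPCI_K" | pciback_bdfs)
if [ "$bound" = "$ASSIGNABLE" ]; then
  echo "pciback-bound devices match xl pci-assignable-list"
else
  echo "mismatch:"; echo "$bound"; echo "---"; echo "$ASSIGNABLE"
fi
```

For this host both lists agree, so the hiding step itself appears to have worked; the failure would then lie later, when the device is handed to the guest.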

```
[17:34 xcp-ng-Asrock ~]# egrep -i '(nvidia|vga|video|pciback)' /var/log/kern.log
Nov 6 09:23:35 xcp-ng-Asrock kernel: [ 0.000000] Command line: root=LABEL=root-zcnzyl ro nolvm hpet=disable console=hvc0 console=tty0 quiet vga=785 splash plymouth.ignore-serial-consoles xen-pciback.hide=(0000:01:00.0)(0000:01:00.1) pci=realloc
Nov 6 09:23:35 xcp-ng-Asrock kernel: [ 0.300513] Kernel command line: root=LABEL=root-zcnzyl ro nolvm hpet=disable console=hvc0 console=tty0 quiet vga=785 splash plymouth.ignore-serial-consoles xen-pciback.hide=(0000:01:00.0)(0000:01:00.1) pci=realloc
Nov 6 09:23:35 xcp-ng-Asrock kernel: [ 0.372191] ACPI: Added _OSI(Linux-Dell-Video)
Nov 6 09:23:35 xcp-ng-Asrock kernel: [ 0.413827] pci 0000:01:00.0: vgaarb: VGA device added: decodes=io+mem,owns=none,locks=none
Nov 6 09:23:35 xcp-ng-Asrock kernel: [ 0.413827] pci 0000:09:00.0: vgaarb: setting as boot VGA device
Nov 6 09:23:35 xcp-ng-Asrock kernel: [ 0.413827] pci 0000:09:00.0: vgaarb: VGA device added: decodes=io+mem,owns=io+mem,locks=none
Nov 6 09:23:35 xcp-ng-Asrock kernel: [ 0.413827] pci 0000:0f:00.0: vgaarb: VGA device added: decodes=io+mem,owns=none,locks=none
Nov 6 09:23:35 xcp-ng-Asrock kernel: [ 0.413827] pci 0000:0f:00.0: vgaarb: bridge control possible
Nov 6 09:23:35 xcp-ng-Asrock kernel: [ 0.413827] pci 0000:09:00.0: vgaarb: bridge control possible
Nov 6 09:23:35 xcp-ng-Asrock kernel: [ 0.413827] pci 0000:01:00.0: vgaarb: bridge control possible
Nov 6 09:23:35 xcp-ng-Asrock kernel: [ 0.413827] vgaarb: loaded
Nov 6 09:23:35 xcp-ng-Asrock kernel: [ 0.433564] pciback 0000:01:00.0: seizing device
Nov 6 09:23:35 xcp-ng-Asrock kernel: [ 0.433567] pciback 0000:01:00.1: seizing device
Nov 6 09:23:35 xcp-ng-Asrock kernel: [ 0.435408] pciback 0000:01:00.1: Linked as a consumer to 0000:01:00.0
Nov 6 09:23:35 xcp-ng-Asrock kernel: [ 0.435773] pci 0000:09:00.0: Video device with shadowed ROM at [mem 0x000c0000-0x000dffff]
Nov 6 09:23:35 xcp-ng-Asrock kernel: [ 0.486767] fb0: EFI VGA frame buffer device
Nov 6 09:23:35 xcp-ng-Asrock kernel: [ 0.488017] pciback 0000:01:00.1: enabling device (0000 -> 0002)
Nov 6 09:23:35 xcp-ng-Asrock kernel: [ 1.324232] xen_pciback: backend is vpci
Nov 6 09:23:35 xcp-ng-Asrock kernel: [ 4.540246] ACPI: Video Device [VGA] (multi-head: yes rom: no post: no)
Nov 6 09:23:35 xcp-ng-Asrock kernel: [ 4.541145] input: Video Bus as /devices/LNXSYSTM:00/LNXSYBUS:00/PNP0A08:00/device:20/LNXVIDEO:00/input/input4
Nov 6 10:40:48 xcp-ng-Asrock kernel: [ 0.000000] Command line: root=LABEL=root-zcnzyl ro nolvm hpet=disable console=hvc0 console=tty0 quiet vga=785 splash plymouth.ignore-serial-consoles xen-pciback.hide=(0000:01:00.0)(0000:01:00.1) pci=realloc
Nov 6 10:40:48 xcp-ng-Asrock kernel: [ 0.404516] Kernel command line: root=LABEL=root-zcnzyl ro nolvm hpet=disable console=hvc0 console=tty0 quiet vga=785 splash plymouth.ignore-serial-consoles xen-pciback.hide=(0000:01:00.0)(0000:01:00.1) pci=realloc
Nov 6 10:40:48 xcp-ng-Asrock kernel: [ 0.475292] ACPI: Added _OSI(Linux-Dell-Video)
Nov 6 10:40:48 xcp-ng-Asrock kernel: [ 0.521409] pci 0000:01:00.0: vgaarb: VGA device added: decodes=io+mem,owns=none,locks=none
Nov 6 10:40:48 xcp-ng-Asrock kernel: [ 0.521409] pci 0000:09:00.0: vgaarb: setting as boot VGA device
Nov 6 10:40:48 xcp-ng-Asrock kernel: [ 0.521409] pci 0000:09:00.0: vgaarb: VGA device added: decodes=io+mem,owns=io+mem,locks=none
Nov 6 10:40:48 xcp-ng-Asrock kernel: [ 0.521409] pci 0000:0f:00.0: vgaarb: VGA device added: decodes=io+mem,owns=none,locks=none
Nov 6 10:40:48 xcp-ng-Asrock kernel: [ 0.521409] pci 0000:0f:00.0: vgaarb: bridge control possible
Nov 6 10:40:48 xcp-ng-Asrock kernel: [ 0.521409] pci 0000:09:00.0: vgaarb: bridge control possible
Nov 6 10:40:48 xcp-ng-Asrock kernel: [ 0.521409] pci 0000:01:00.0: vgaarb: bridge control possible
Nov 6 10:40:48 xcp-ng-Asrock kernel: [ 0.521409] vgaarb: loaded
Nov 6 10:40:48 xcp-ng-Asrock kernel: [ 0.545092] pciback 0000:01:00.0: seizing device
Nov 6 10:40:48 xcp-ng-Asrock kernel: [ 0.545097] pciback 0000:01:00.1: seizing device
Nov 6 10:40:48 xcp-ng-Asrock kernel: [ 0.546907] pciback 0000:01:00.1: Linked as a consumer to 0000:01:00.0
Nov 6 10:40:48 xcp-ng-Asrock kernel: [ 0.547268] pci 0000:09:00.0: Video device with shadowed ROM at [mem 0x000c0000-0x000dffff]
Nov 6 10:40:48 xcp-ng-Asrock kernel: [ 0.599648] fb0: EFI VGA frame buffer device
Nov 6 10:40:48 xcp-ng-Asrock kernel: [ 0.600798] pciback 0000:01:00.1: enabling device (0000 -> 0002)
Nov 6 10:40:48 xcp-ng-Asrock kernel: [ 1.431777] xen_pciback: backend is vpci
Nov 6 10:40:48 xcp-ng-Asrock kernel: [ 4.649186] ACPI: Video Device [VGA] (multi-head: yes rom: no post: no)
Nov 6 10:40:48 xcp-ng-Asrock kernel: [ 4.649539] input: Video Bus as /devices/LNXSYSTM:00/LNXSYBUS:00/PNP0A08:00/device:20/LNXVIDEO:00/input/input7
Nov 8 17:20:20 xcp-ng-Asrock kernel: [ 0.000000] Command line: root=LABEL=root-zcnzyl ro nolvm hpet=disable console=hvc0 console=tty0 quiet vga=785 splash plymouth.ignore-serial-consoles xen-pciback.hide=(0000:01:00.0)(0000:01:00.1) pci=realloc
Nov 8 17:20:20 xcp-ng-Asrock kernel: [ 0.294998] Kernel command line: root=LABEL=root-zcnzyl ro nolvm hpet=disable console=hvc0 console=tty0 quiet vga=785 splash plymouth.ignore-serial-consoles xen-pciback.hide=(0000:01:00.0)(0000:01:00.1) pci=realloc
Nov 8 17:20:20 xcp-ng-Asrock kernel: [ 0.365459] ACPI: Added _OSI(Linux-Dell-Video)
Nov 8 17:20:20 xcp-ng-Asrock kernel: [ 0.407742] pci 0000:01:00.0: vgaarb: VGA device added: decodes=io+mem,owns=none,locks=none
Nov 8 17:20:20 xcp-ng-Asrock kernel: [ 0.407742] pci 0000:09:00.0: vgaarb: setting as boot VGA device
Nov 8 17:20:20 xcp-ng-Asrock kernel: [ 0.407742] pci 0000:09:00.0: vgaarb: VGA device added: decodes=io+mem,owns=io+mem,locks=none
Nov 8 17:20:20 xcp-ng-Asrock kernel: [ 0.407742] pci 0000:0f:00.0: vgaarb: VGA device added: decodes=io+mem,owns=none,locks=none
Nov 8 17:20:20 xcp-ng-Asrock kernel: [ 0.407742] pci 0000:0f:00.0: vgaarb: bridge control possible
Nov 8 17:20:20 xcp-ng-Asrock kernel: [ 0.407742] pci 0000:09:00.0: vgaarb: bridge control possible
Nov 8 17:20:20 xcp-ng-Asrock kernel: [ 0.407742] pci 0000:01:00.0: vgaarb: bridge control possible
Nov 8 17:20:20 xcp-ng-Asrock kernel: [ 0.407742] vgaarb: loaded
Nov 8 17:20:20 xcp-ng-Asrock kernel: [ 0.422576] pciback 0000:01:00.0: seizing device
Nov 8 17:20:20 xcp-ng-Asrock kernel: [ 0.422576] pciback 0000:01:00.1: seizing device
Nov 8 17:20:20 xcp-ng-Asrock kernel: [ 0.425790] pciback 0000:01:00.1: Linked as a consumer to 0000:01:00.0
Nov 8 17:20:20 xcp-ng-Asrock kernel: [ 0.426118] pci 0000:09:00.0: Video device with shadowed ROM at [mem 0x000c0000-0x000dffff]
Nov 8 17:20:20 xcp-ng-Asrock kernel: [ 0.483172] fb0: EFI VGA frame buffer device
Nov 8 17:20:20 xcp-ng-Asrock kernel: [ 0.484591] pciback 0000:01:00.1: enabling device (0000 -> 0002)
Nov 8 17:20:20 xcp-ng-Asrock kernel: [ 1.313911] xen_pciback: backend is vpci
Nov 8 17:20:20 xcp-ng-Asrock kernel: [ 4.917967] ACPI: Video Device [VGA] (multi-head: yes rom: no post: no)
Nov 8 17:20:20 xcp-ng-Asrock kernel: [ 4.918453] input: Video Bus as /devices/LNXSYSTM:00/LNXSYBUS:00/PNP0A08:00/device:20/LNXVIDEO:00/input/input13
[17:37 xcp-ng-Asrock ~]#
```

```
17:37 xcp-ng-Asrock ~]# xl dmesg
(XEN) [0000004806bfa1ae] Xen version 4.17.5-3 (mockbuild@[unknown]) (gcc (GCC) 11.2.1 20210728 (Red Hat 11.2.1-1)) debug=n Wed Oct 2 16:21:17 CEST 2024
(XEN) [0000004806bfb27a] Latest ChangeSet: 430ce6cd9365, pq 326ba7419f1d
(XEN) [0000004806bfc54a] build-id: 8dbf3a0a4e64db53bf7d322a2fde56a7a922f5e0
(XEN) [0000004806bfcb56] Bootloader: GRUB 2.06
(XEN) [0000004806bfd10c] Command line: dom0_mem=2624M,max:2624M watchdog ucode=scan dom0_max_vcpus=1-8 crashkernel=256M,below=4G console=vga vga=mode-0x0311
(XEN) [0000004806bfda1e] Xen image load base address: 0x86e00000
(XEN) [0000004806bfddfb] Video information:
(XEN) [0000004806bfe738] VGA is graphics mode 3840x2160, 32 bpp
(XEN) [0000004806bfeb6b] Disc information:
(XEN) [0000004806bff177] Found 0 MBR signatures
(XEN) [0000004806bff5d5] Found 3 EDD information structures
(XEN) [0000004806c00e30] CPU Vendor: AMD, Family 26 (0x1a), Model 68 (0x44), Stepping 0 (raw 00b40f40)
(XEN) [0000004806c0222d] Enabling Supervisor Shadow Stacks
(XEN) [0000004806c065de] EFI RAM map:
(XEN) [0000004806c06fc7] [0000000000000000, 000000000009ffff] (usable)
(XEN) [0000004806c07700] [00000000000a0000, 00000000000fffff] (reserved)
(XEN) [0000004806c07d37] [0000000000100000, 0000000009afefff] (usable)
(XEN) [0000004806c081eb] [0000000009aff000, 0000000009ffffff] (reserved)
(XEN) [0000004806c0861e] [000000000a000000, 000000000a1fffff] (usable)
(XEN) [0000004806c08a7c] [000000000a200000, 000000000a21dfff] (ACPI NVS)
(XEN) [0000004806c08f5b] [000000000a21e000, 000000000affffff] (usable)
(XEN) [0000004806c0938e] [000000000b000000, 000000000b020fff] (reserved)
(XEN) [0000004806c09817] [000000000b021000, 000000008857efff] (usable)
(XEN) [0000004806c09cf6] [000000008857f000, 000000008e57efff] (reserved)
(XEN) [0000004806c0a1d5] [000000008e57f000, 000000008e67efff] (ACPI data)
(XEN) [0000004806c0a735] [000000008e67f000, 000000009067efff] (ACPI NVS)
(XEN) [0000004806c0ab93] [000000009067f000, 00000000987fefff] (reserved)
(XEN) [0000004806c0aff1] [00000000987ff000, 0000000099ff6fff] (usable)
(XEN) [0000004806c0b44f] [0000000099ff7000, 0000000099ffbfff] (reserved)
(XEN) [0000004806c0b882] [0000000099ffc000, 0000000099ffffff] (usable)
(XEN) [0000004806c0bcb5] [000000009a000000, 000000009bffffff] (reserved)
(XEN) [0000004806c0c113] [000000009d7f3000, 000000009fffffff] (reserved)
(XEN) [0000004806c0c51b] [00000000e0000000, 00000000efffffff] (reserved)
(XEN) [0000004806c0c979] [00000000fd000000, 00000000ffffffff] (reserved)
(XEN) [0000004806c0ceae] [0000000100000000, 000000083dd7ffff] (usable)
(XEN) [0000004806c0d3b8] [000000083edc0000, 00000008801fffff] (reserved)
(XEN) [0000004806c0d918] [000000fd00000000, 000000ffffffffff] (reserved)
(XEN) [00000048098db966] Kdump: 256MB (262144kB) at 0x77000000
(XEN) [0000004809907701] ACPI: RSDP 90665014, 0024 (r2 ALASKA)
(XEN) [0000004809909f26] ACPI: XSDT 90664728, 00EC (r1 ALASKA A M I 1072009 AMI 1000013)
(XEN) [000000480990c012] ACPI: FACP 8E673000, 0114 (r6 ALASKA A M I 1072009 AMI 10013)
(XEN) [000000480990dccb] ACPI: DSDT 8E5F6000, 7329C (r208 ALASKA A M I 1072009 INTL 20230331)
(XEN) [000000480990eb12] ACPI: FACS 90662000, 0040
(XEN) [000000480990f903] ACPI: SSDT 8E676000, 816C (r2 AMD Splinter 2 MSFT 5000000)
(XEN) [0000004809910877] ACPI: SPMI 8E675000, 0041 (r5 ALASKA A M I 0 AMI. 0)
(XEN) [0000004809911510] ACPI: SSDT 8E674000, 03F1 (r2 ALASKA CPUSSDT 1072009 AMI 1072009)
(XEN) [0000004809912026] ACPI: FIDT 8E66D000, 009C (r1 ALASKA A M I 1072009 AMI 10013)
(XEN) [0000004809912b3c] ACPI: MCFG 8E66C000, 003C (r1 ALASKA A M I 1072009 MSFT 10013)
(XEN) [0000004809913550] ACPI: HPET 8E66B000, 0038 (r1 ALASKA A M I 1072009 AMI 5)
(XEN) [0000004809913f39] ACPI: FPDT 8E66A000, 0044 (r1 ALASKA A M I 1072009 AMI 1000013)
(XEN) [0000004809914dd6] ACPI: VFCT 8E5EB000, AE84 (r1 ALASKA A M I 1 AMD 33504F47)
(XEN) [00000048099158c1] ACPI: SPCR 8E671000, 0050 (r2 ALASKA A M I 1072009 AMI 50023)
(XEN) [0000004809916300] ACPI: SSDT 8E5E1000, 9BAE (r2 AMD AMD CPU 1 AMD 1)
(XEN) [0000004809916d6a] ACPI: BGRT 8E672000, 0038 (r1 ALASKA A M I 1072009 AMI 10013)
(XEN) [0000004809917c32] ACPI: TPM2 8E670000, 004C (r4 ALASKA A M I 1 AMI 0)
(XEN) [000000480991861b] ACPI: SSDT 8E66F000, 06D4 (r2 AMD CPMWLRC 1 INTL 20230331)
(XEN) [00000048099190b0] ACPI: SSDT 8E5DF000, 169E (r2 AMD CPMDFIG2 1 INTL 20230331)
(XEN) [0000004809919ac4] ACPI: SSDT 8E5DC000, 2AA6 (r2 AMD CDFAAIG2 1 INTL 20230331)
(XEN) [000000480991a4d8] ACPI: SSDT 8E66E000, 078F (r2 AMD CPMDFDG1 1 INTL 20230331)
(XEN) [000000480991aeec] ACPI: SSDT 8E5D2000, 9A9E (r2 AMD CPMCMN 1 INTL 20230331)
(XEN) [000000480991b92b] ACPI: SSDT 8E5CF000, 26F3 (r2 AMD AOD 1 INTL 20230331)
(XEN) [000000480991c33f] ACPI: WSMT 8E5CE000, 0028 (r1 ALASKA A M I 1072009 AMI 10013)
(XEN) [000000480991d0af] ACPI: APIC 8E5CD000, 015E (r6 ALASKA A M I 1072009 AMI 10013)
(XEN) [000000480991dac3] ACPI: IVRS 8E5CC000, 00C8 (r2 AMD AmdTable 1 AMD 1)
(XEN) [000000480991e52d] ACPI: SSDT 8E5CB000, 0500 (r2 AMD MEMTOOL0 2 INTL 20230331)
(XEN) [000000480991ef41] ACPI: SSDT 8E5CA000, 047C (r2 AMD AMDWOV 1 INTL 20230331)
(XEN) [000000480991f955] ACPI: SSDT 8E5C9000, 0460 (r2 AMD AmdTable 1 INTL 20230331)
(XEN) [0000004809955ce4] System RAM: 31861MB (32626028kB)
(XEN) [000000480b6579c1] No NUMA configuration found
(XEN) [000000480b658150] Faking a node at 0000000000000000-000000083dd80000
(XEN) [000000480ffe3f8e] Domain heap initialised
(XEN) [00000048132910c9] vesafb: framebuffer at 0x000000fcc0000000, mapped to 0xffff82c000201000, using 34560k, total 34560k
(XEN) [0000004813291ab2] vesafb: mode is 3840x2160x32, linelength=16384, font 8x16
(XEN) [0000004813292674] vesafb: Truecolor: size=8:8:8:8, shift=24:16:8:0
(XEN) [0000004814df211f] SMBIOS 3.7 present.
(XEN) [0000004814e12b2b] XSM Framework v1.0.1 initialized
(XEN) [0000004814e3246b] Initialising XSM SILO mode
(XEN) [0000004814ea6b87] Using APIC driver default
(XEN) [0000004814ee23e9] ACPI: PM-Timer IO Port: 0x808 (32 bits)
(XEN) [0000004814f0579d] ACPI: v5 SLEEP INFO: control[0:0], status[0:0]
(XEN) [0000004814f2c81f] ACPI: SLEEP INFO: pm1x_cnt[1:804,1:0], pm1x_evt[1:800,1:0]
(XEN) [0000004814f57269] ACPI: 32/64X FACS address mismatch in FADT - 90662000/0000000000000000, using 32
(XEN) [0000004814f89751] ACPI: wakeup_vec[9066200c], vec_size[20]
(XEN) [0000004815138b23] Overriding APIC driver with bigsmp
(XEN) [0000004815185ada] ACPI: IOAPIC (id[0x20] address[0xfec00000] gsi_base[0])
(XEN) [00000048151b5bfb] IOAPIC[0]: apic_id 32, version 33, address 0xfec00000, GSI 0-23
(XEN) [00000048151e1767] ACPI: IOAPIC (id[0x21] address[0xfec01000] gsi_base[24])
(XEN) [000000481520ad33] IOAPIC[1]: apic_id 33, version 33, address 0xfec01000, GSI 24-55
(XEN) [0000004815263787] ACPI: INT_SRC_OVR (bus 0 bus_irq 0 global_irq 2 dfl dfl)
(XEN) [000000481528d309] ACPI: INT_SRC_OVR (bus 0 bus_irq 9 global_irq 9 low level)
(XEN) [00000048152e921b] ACPI: HPET id: 0x10228201 base: 0xfed00000
(XEN) [000000481531ba8a] PCI: MCFG configuration 0: base e0000000 segment 0000 buses 00 - ff
(XEN) [000000481534891c] PCI: MCFG area at e0000000 reserved in E820
(XEN) [000000481536faa0] PCI: Using MCFG for segment 0000 bus 00-ff
(XEN) [000000481539a08c] ACPI: BGRT: invalidating v1 image at 0x82368018
(XEN) [00000048153bfc65] Using ACPI (MADT) for SMP configuration information
(XEN) [00000048153e642b] SMP: Allowing 32 CPUs (0 hotplug CPUs)
(XEN) [0000004815417eff] IRQ limits: 56 GSI, 6600 MSI/MSI-X
(XEN) [00000048155cb020] BSP microcode revision: 0x0b404022
(XEN) [00000048155f22fc] xstate: size: 0x988 and states: 0x2e7
(XEN) [000000481566082b] CPU0: AMD Fam1ah machine check reporting enabled
(XEN) [000000481568c1e9] Speculative mitigation facilities:
(XEN) [00000048156acb49] Hardware hints: STIBP_ALWAYS IBRS_FAST IBRS_SAME_MODE BTC_NO IBPB_RET IBPB_BRTYPE
(XEN) [00000048156df1df] Hardware features: IBPB IBRS STIBP SSBD PSFD L1D_FLUSH SBPB
(XEN) [00000048157092c1] Compiled-in support: INDIRECT_THUNK HARDEN_ARRAY HARDEN_BRANCH HARDEN_GUEST_ACCESS HARDEN_LOCK
(XEN) [0000004815740cfd] Xen settings: BTI-Thunk: JMP, SPEC_CTRL: IBRS+ STIBP+ SSBD- PSFD-, Other: IBPB-ctxt BRANCH_HARDEN
(XEN) [00000048157791ce] Support for HVM VMs: MSR_SPEC_CTRL MSR_VIRT_SPEC_CTRL RSB IBPB-entry
(XEN) [00000048157a6972] Support for PV VMs: None
(XEN) [00000048157c3ce7] XPTI (64-bit PV only): Dom0 disabled, DomU disabled (without PCID)
(XEN) [00000048157f081d] PV L1TF shadowing: Dom0 disabled, DomU disabled
(XEN) [0000004815817ace] Using scheduler: SMP Credit Scheduler (credit)
(XEN) [0000004822505125] Platform timer is 14.318MHz HPET
(XEN) [ 0.107811] Detected 4291.916 MHz processor.
(XEN) [ 0.110336] EFI memory map:
(XEN) [ 0.110358] 0000000000000-0000000003fff type=2 attr=000000000000000f
(XEN) [ 0.110395] 0000000004000-000000008efff type=7 attr=000000000000000f
(XEN) [ 0.110432] 000000008f000-000000009efff type=2 attr=000000000000000f
(XEN) [ 0.110469] 000000009f000-000000009ffff type=4 attr=000000000000000f
(XEN) [ 0.110506] 0000000100000-00000039e4fff type=2 attr=000000000000000f
(XEN) [ 0.110543] 00000039e5000-0000009afefff type=7 attr=000000000000000f
(XEN) [ 0.110580] 0000009aff000-0000009ffffff type=0 attr=000000000000000f
(XEN) [ 0.110616] 000000a000000-000000a1fffff type=7 attr=000000000000000f
(XEN) [ 0.110653] 000000a200000-000000a21dfff type=10 attr=000000000000000f
(XEN) [ 0.110691] Setting RUNTIME attribute for 000000a200000-000000a21dfff
(XEN) [ 0.110728] 000000a21e000-000000a29dfff type=4 attr=000000000000000f
(XEN) [ 0.110765] 000000a29e000-000000affffff type=7 attr=000000000000000f
(XEN) [ 0.110802] 000000b000000-000000b020fff type=0 attr=000000000000000f
(XEN) [ 0.110839] 000000b021000-000005d7d5fff type=7 attr=000000000000000f
(XEN) [ 0.110876] 000005d7d6000-000005f77dfff type=4 attr=000000000000000f
(XEN) [ 0.110913] 000005f77e000-000007fa9efff type=1 attr=000000000000000f
(XEN) [ 0.110950] 000007fa9f000-000007faa0fff type=4 attr=000000000000000f
(XEN) [ 0.110987] 000007faa1000-000007fad7fff type=7 attr=000000000000000f
(XEN) [ 0.111023] 000007fad8000-000007fae7fff type=4 attr=000000000000000f
(XEN) [ 0.111060] 000007fae8000-000007faf1fff type=7 attr=000000000000000f
(XEN) [ 0.111097] 000007faf2000-000007faf2fff type=4 attr=000000000000000f
(XEN) [ 0.111134] 000007faf3000-000007faf4fff type=7 attr=000000000000000f
(XEN) [ 0.111171] 000007faf5000-000007fb11fff type=4 attr=000000000000000f
(XEN) [ 0.111208] 000007fb12000-000007fb15fff type=7 attr=000000000000000f
(XEN) [ 0.111244] 000007fb16000-000007fb2efff type=4 attr=000000000000000f
(XEN) [ 0.111281] 000007fb2f000-000007fcaefff type=7 attr=000000000000000f
(XEN) [ 0.111318] 000007fcaf000-000007fdc2fff type=1 attr=000000000000000f
(XEN) [ 0.111355] 000007fdc3000-00000800c6fff type=7 attr=000000000000000f
(XEN) [ 0.111392] 00000800c7000-00000800c7fff type=4 attr=000000000000000f
(XEN) [ 0.111429] 00000800c8000-00000800c8fff type=7 attr=000000000000000f
(XEN) [ 0.111466] 00000800c9000-00000800d4fff type=4 attr=000000000000000f
(XEN) [ 0.111503] 00000800d5000-000008016bfff type=7 attr=000000000000000f
(XEN) [ 0.111539] 000008016c000-0000080188fff type=4 attr=000000000000000f
(XEN) [ 0.111576] 0000080189000-00000801a5fff type=7 attr=000000000000000f
(XEN) [ 0.111613] 00000801a6000-00000801a6fff type=4 attr=000000000000000f
(XEN) [ 0.111650] 00000801a7000-00000801a7fff type=7 attr=000000000000000f
(XEN) [ 0.111687] 00000801a8000-00000801a8fff type=4 attr=000000000000000f
(XEN) [ 0.111724] 00000801a9000-00000801b1fff type=7 attr=000000000000000f
(XEN) [ 0.111761] 00000801b2000-00000801b3fff type=4 attr=000000000000000f
(XEN) [ 0.111798] 00000801b4000-00000801befff type=7 attr=000000000000000f
(XEN) [ 0.111834] 00000801bf000-00000801c3fff type=4 attr=000000000000000f
(XEN) [ 0.111871] 00000801c4000-00000801c4fff type=7 attr=000000000000000f
(XEN) [ 0.111908] 00000801c5000-00000801c5fff type=4 attr=000000000000000f
(XEN) [ 0.111945] 00000801c6000-00000801cbfff type=7 attr=000000000000000f
(XEN) [ 0.111982] 00000801cc000-00000801ccfff type=4 attr=000000000000000f
(XEN) [ 0.112019] 00000801cd000-00000801d0fff type=7 attr=000000000000000f
(XEN) [ 0.112056] 00000801d1000-00000801d1fff type=2 attr=000000000000000f
(XEN) [ 0.112092] 00000801d2000-0000082160fff type=4 attr=000000000000000f
(XEN) [ 0.112129] 0000082161000-0000082161fff type=7 attr=000000000000000f
(XEN) [ 0.112166] 0000082162000-000008216afff type=4 attr=000000000000000f
(XEN) [ 0.112203] 000008216b000-000008216dfff type=7 attr=000000000000000f
(XEN) [ 0.112240] 000008216e000-000008216efff type=4 attr=000000000000000f
(XEN) [ 0.112277] 000008216f000-0000082171fff type=7 attr=000000000000000f
(XEN) [ 0.112314] 0000082172000-0000082172fff type=4 attr=000000000000000f
(XEN) [ 0.112350] 0000082173000-0000082175fff type=7 attr=000000000000000f
(XEN) [ 0.112387] 0000082176000-0000082183fff type=4 attr=000000000000000f
(XEN) [ 0.112424] 0000082184000-000008218dfff type=7 attr=000000000000000f
(XEN) [ 0.112461] 000008218e000-0000082570fff type=4 attr=000000000000000f
(XEN) [ 0.112498] 0000082571000-0000082572fff type=7 attr=000000000000000f
(XEN) [ 0.112535] 0000082573000-00000825a0fff type=4 attr=000000000000000f
(XEN) [ 0.112571] 00000825a1000-00000825a8fff type=7 attr=000000000000000f
(XEN) [ 0.112608] 00000825a9000-00000825a9fff type=4 attr=000000000000000f
(XEN) [ 0.112645] 00000825aa000-00000825acfff type=7 attr=000000000000000f
(XEN) [ 0.112682] 00000825ad000-00000826defff type=4 attr=000000000000000f
(XEN) [ 0.112719] 00000826df000-00000826e1fff type=7 attr=000000000000000f
(XEN) [ 0.112756] 00000826e2000-00000828e8fff type=4 attr=000000000000000f
(XEN) [ 0.112793] 00000828e9000-00000828f8fff type=7 attr=000000000000000f
(XEN) [ 0.112830] 00000828f9000-0000082902fff type=4 attr=000000000000000f
(XEN) [ 0.112866] 0000082903000-0000082905fff type=7 attr=000000000000000f
(XEN) [ 0.112903] 0000082906000-0000082ab1fff type=4 attr=000000000000000f
(XEN) [ 0.112940] 0000082ab2000-0000082ab2fff type=7 attr=000000000000000f
(XEN) [ 0.112977] 0000082ab3000-000008657efff type=4 attr=000000000000000f
(XEN) [ 0.113014] 000008657f000-0000086ffffff type=7 attr=000000000000000f
(XEN) [ 0.113051] 0000087000000-00000879fffff type=2 attr=000000000000000f
(XEN) [ 0.113088] 0000087a00000-0000087a40fff type=7 attr=000000000000000f
(XEN) [ 0.113124] 0000087a41000-000008857efff type=3 attr=000000000000000f
(XEN) [ 0.113161] 000008857f000-000008e57efff type=0 attr=000000000000000f
(XEN) [ 0.113198] 000008e57f000-000008e67efff type=9 attr=000000000000000f
(XEN) [ 0.113235] 000008e67f000-000009067efff type=10 attr=000000000000000f
(XEN) [ 0.113272] Setting RUNTIME attribute for 000008e67f000-000009067efff
(XEN) [ 0.113318] 000009067f000-000009867efff type=6 attr=800000000000000f
(XEN) [ 0.113362] 000009867f000-00000987fefff type=5 attr=800000000000000f
(XEN) [ 0.113404] 00000987ff000-0000098dfffff type=4 attr=000000000000000f
(XEN) [ 0.113441] 0000098e00000-0000098f0cfff type=7 attr=000000000000000f
(XEN) [ 0.113478] 0000098f0d000-000009900cfff type=4 attr=000000000000000f
(XEN) [ 0.113515] 000009900d000-0000099031fff type=3 attr=000000000000000f
(XEN) [ 0.113552] 0000099032000-0000099066fff type=4 attr=000000000000000f
(XEN) [ 0.113589] 0000099067000-0000099098fff type=3 attr=000000000000000f
(XEN) [ 0.113625] 0000099099000-00000990b1fff type=4 attr=000000000000000f
(XEN) [ 0.113662] 00000990b2000-00000990c9fff type=3 attr=000000000000000f
(XEN) [ 0.113699] 00000990ca000-0000099e5cfff type=4 attr=000000000000000f
(XEN) [ 0.113736] 0000099e5d000-0000099e60fff type=3 attr=000000000000000f
(XEN) [ 0.113773] 0000099e61000-0000099e74fff type=4 attr=000000000000000f
(XEN) [ 0.113810] 0000099e75000-0000099e76fff type=3 attr=000000000000000f
(XEN) [ 0.113847] 0000099e77000-0000099e87fff type=4 attr=000000000000000f
(XEN) [ 0.115762] 0000099e88000-0000099eacfff type=3 attr=000000000000000f
(XEN) [ 0.117680] 0000099ead000-0000099eaefff type=4 attr=000000000000000f
(XEN) [ 0.119603] 0000099eaf000-0000099eaffff type=7 attr=000000000000000f
(XEN) [ 0.121530] 0000099eb0000-0000099ed3fff type=4 attr=000000000000000f
(XEN) [ 0.123459] 0000099ed4000-0000099ed4fff type=7 attr=000000000000000f
(XEN) [ 0.125390] 0000099ed5000-0000099ed5fff type=3 attr=000000000000000f
(XEN) [ 0.127319] 0000099ed6000-0000099fe7fff type=4 attr=000000000000000f
(XEN) [ 0.129244] 0000099fe8000-0000099feefff type=3 attr=000000000000000f
(XEN) [ 0.131164] 0000099fef000-0000099ff6fff type=4 attr=000000000000000f
(XEN) [ 0.133085] 0000099ff7000-0000099ffbfff type=6 attr=800000000000000f
(XEN) [ 0.135005] 0000099ffc000-0000099ffffff type=4 attr=000000000000000f
(XEN) [ 0.136923] 0000100000000-000083dd7ffff type=7 attr=000000000000000f
(XEN) [ 0.138839] 00000000a0000-00000000fffff type=0 attr=000000000000000f
(XEN) [ 0.140754] 000009a000000-000009bffffff type=0 attr=000000000000000f
(XEN) [ 0.142666] 000009d7f3000-000009fffffff type=0 attr=000000000000000f
(XEN) [ 0.144577] 00000e0000000-00000efffffff type=11 attr=800000000000100d
(XEN) [ 0.146490] 00000fd000000-00000fedfffff type=11 attr=800000000000100d
(XEN) [ 0.148400] 00000fee00000-00000fee00fff type=11 attr=8000000000000001
(XEN) [ 0.150307] 00000fee01000-00000ffffffff type=11 attr=800000000000100d
(XEN) [ 0.152223] 000083edc0000-000085fffffff type=0 attr=000000000000000f
(XEN) [ 0.154133] 0000860000000-00008801fffff type=11 attr=800000000000100d
(XEN) [ 0.156048] 000fd00000000-000ffffffffff type=0 attr=0000000000000001
(XEN) [ 0.157962] alt table ffff82d0406a36b8 -> ffff82d0406b2e58
(XEN) [ 0.169837] AMD-Vi: IOMMU Extended Features:
(XEN) [ 0.171744] - Peripheral Page Service Request
(XEN) [ 0.173648] - NX bit
(XEN) [ 0.175542] - Guest APIC Physical Processor
```
Interrupt (XEN) [ 0.177444] - Invalidate All Command (XEN) [ 0.179332] - Guest APIC (XEN) [ 0.181205] - Performance Counters (XEN) [ 0.183068] - Host Address Translation Size: 0x2 (XEN) [ 0.184923] - Guest Address Translation Size: 0 (XEN) [ 0.186767] - Guest CR3 Root Table Level: 0x1 (XEN) [ 0.188602] - Maximum PASID: 0xf (XEN) [ 0.190426] - SMI Filter Register: 0x1 (XEN) [ 0.192244] - SMI Filter Register Count: 0x1 (XEN) [ 0.194061] - Guest Virtual APIC Modes: 0x1 (XEN) [ 0.195874] - Dual PPR Log: 0x2 (XEN) [ 0.197682] - Dual Event Log: 0x2 (XEN) [ 0.199488] - Secure ATS (XEN) [ 0.201285] - User / Supervisor Page Protection (XEN) [ 0.203086] - Device Table Segmentation: 0x3 (XEN) [ 0.204883] - PPR Log Overflow Early Warning (XEN) [ 0.206675] - PPR Automatic Response (XEN) [ 0.208459] - Memory Access Routing and Control: 0x1 (XEN) [ 0.210247] - Block StopMark Message (XEN) [ 0.212026] - Performance Optimization (XEN) [ 0.213800] - MSI Capability MMIO Access (XEN) [ 0.215569] - Guest I/O Protection (XEN) [ 0.217331] - Enhanced PPR Handling (XEN) [ 0.219087] - Invalidate IOTLB Type (XEN) [ 0.220837] - VM Table Size: 0x2 (XEN) [ 0.222581] - Guest Access Bit Update Disable (XEN) [ 0.232769] AMD-Vi: IOMMU 0 Enabled. (XEN) [ 0.234701] I/O virtualisation enabled (XEN) [ 0.236442] - Dom0 mode: Relaxed (XEN) [ 0.238174] Interrupt remapping enabled (XEN) [ 0.239897] Enabled directed EOI with ioapic_ack_old on! (XEN) [ 0.241622] Enabling APIC mode. Using 2 I/O APICs (XEN) [ 0.244172] ENABLING IO-APIC IRQs (XEN) [ 0.245883] -> Using old ACK method (XEN) [ 0.247826] ..TIMER: vector=0xF0 apic1=0 pin1=2 apic2=-1 pin2=-1 (XEN) [ 0.432812] Defaulting to alternative key handling; send 'A' to switch to normal mode. (XEN) [ 0.434545] Allocated console ring of 128 KiB. (XEN) [ 0.436265] HVM: ASIDs enabled. 
(XEN) [ 0.437971] SVM: Supported advanced features:
(XEN) [ 0.439678] - Nested Page Tables (NPT)
(XEN) [ 0.441378] - Last Branch Record (LBR) Virtualisation
(XEN) [ 0.443081] - Next-RIP Saved on #VMEXIT
(XEN) [ 0.444776] - VMCB Clean Bits
(XEN) [ 0.446463] - DecodeAssists
(XEN) [ 0.448143] - Virtual VMLOAD/VMSAVE
(XEN) [ 0.449819] - Virtual GIF
(XEN) [ 0.451486] - Pause-Intercept Filter
(XEN) [ 0.453151] - Pause-Intercept Filter Threshold
(XEN) [ 0.454816] - TSC Rate MSR
(XEN) [ 0.456470] - NPT Supervisor Shadow Stack
(XEN) [ 0.458124] - MSR_SPEC_CTRL virtualisation
(XEN) [ 0.459774] HVM: SVM enabled
(XEN) [ 0.461415] HVM: Hardware Assisted Paging (HAP) detected
(XEN) [ 0.463063] HVM: HAP page sizes: 4kB, 2MB, 1GB
(XEN) [ 0.464733] alt table ffff82d0406a36b8 -> ffff82d0406b2e58
(XEN) [ 1.097888] Brought up 32 CPUs
(XEN) [ 1.101853] Testing NMI watchdog on all CPUs:ok
(XEN) [ 1.123224] Scheduling granularity: cpu, 1 CPU per sched-resource
(XEN) [ 1.125738] mcheck_poll: Machine check polling timer started.
(XEN) [ 1.148253] NX (Execute Disable) protection active
(XEN) [ 1.149893] d0 has maximum 1304 PIRQs
(XEN) [ 1.151542] csched_alloc_domdata: setting dom 0 as the privileged domain
(XEN) [ 1.153201] *** Building a PV Dom0 ***
(XEN) [ 1.271900] Xen kernel: 64-bit, lsb
(XEN) [ 1.273526] Dom0 kernel: 64-bit, PAE, lsb, paddr 0x1000000 -> 0x302c000
(XEN) [ 1.275332] PHYSICAL MEMORY ARRANGEMENT:
(XEN) [ 1.276959] Dom0 alloc.: 0000000820000000->0000000824000000 (642480 pages to be allocated)
(XEN) [ 1.278636] Init. ramdisk: 000000083a9b0000->000000083dbffa00
(XEN) [ 1.280288] VIRTUAL MEMORY ARRANGEMENT:
(XEN) [ 1.281918] Loaded kernel: ffffffff81000000->ffffffff8302c000
(XEN) [ 1.283556] Phys-Mach map: 0000008000000000->0000008000520000
(XEN) [ 1.285191] Start info: ffffffff8302c000->ffffffff8302c4b8
(XEN) [ 1.286826] Page tables: ffffffff8302d000->ffffffff8304a000
(XEN) [ 1.288460] Boot stack: ffffffff8304a000->ffffffff8304b000
(XEN) [ 1.290092] TOTAL: ffffffff80000000->ffffffff83400000
(XEN) [ 1.291723] ENTRY ADDRESS: ffffffff8242b180
(XEN) [ 1.293606] Dom0 has maximum 8 VCPUs
(XEN) [ 1.304745] Initial low memory virq threshold set at 0x4000 pages.
(XEN) [ 1.306379] Scrubbing Free RAM in background
(XEN) [ 1.307999] Std. Loglevel: Errors, warnings and info
(XEN) [ 1.309628] Guest Loglevel: Nothing (Rate-limited: Errors and warnings)
(XEN) [ 1.311275] Xen is relinquishing VGA console.
(XEN) [ 1.341402] *** Serial input to DOM0 (type 'CTRL-a' three times to switch input)
(XEN) [ 1.341546] Freed 2048kB init memory
(XEN) [ 1022.240112] d1v2 VIRIDIAN GUEST_CRASH: 0x116 0xffffdb8ffaf76010 0xfffff8077938e9f0 0xffffffffc0000001 0x4
[17:38 xcp-ng-Asrock ~]#
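For anyone reading this log: the IOMMU side looks fine here ("AMD-Vi: IOMMU 0 Enabled.", "I/O virtualisation enabled", "Interrupt remapping enabled"). The interesting line is the last one, where the guest in domain 1 reports a Windows bug check through Xen's Viridian (Hyper-V) crash interface, about 1022 s after boot. Bug check 0x116 is VIDEO_TDR_FAILURE, meaning Windows tried to reset the display driver after a timeout and the recovery failed. Per Microsoft's bug check reference, parameter 3 of 0x116 is the error code of the last failed operation, and 0xC0000001 in its low 32 bits is STATUS_UNSUCCESSFUL. Below is a rough Python sketch for scanning `xl dmesg` output for both things. It is only an illustration: the marker strings are copied from this log and may be worded differently on other Xen versions, the function names are made up, and the bug check table only lists two GPU-related codes.

```python
import re

# Assumption: marker strings copied verbatim from this thread's log; other
# Xen versions may word them differently. This is a presence check only and
# says nothing about whether a particular GPU can actually be passed through.
IOMMU_MARKERS = (
    "AMD-Vi: IOMMU 0 Enabled.",
    "I/O virtualisation enabled",
    "Interrupt remapping enabled",
)

# Partial table of Windows bug check codes related to display drivers.
BUGCHECK_NAMES = {
    0x116: "VIDEO_TDR_FAILURE",
    0x117: "VIDEO_TDR_TIMEOUT_DETECTED",
}

# Xen prints the guest's crash report as a bug check code followed by
# four parameters, all in hex, e.g. "d1v2 VIRIDIAN GUEST_CRASH: 0x116 ...".
CRASH_RE = re.compile(
    r"d(?P<dom>\d+)v(?P<vcpu>\d+) VIRIDIAN GUEST_CRASH:"
    r"(?P<args>(?:\s+0x[0-9a-fA-F]+)+)"
)


def check_iommu(log):
    """Return {marker: True/False} for each IOMMU marker found in the log text."""
    return {marker: marker in log for marker in IOMMU_MARKERS}


def decode_guest_crashes(log):
    """Extract every VIRIDIAN GUEST_CRASH report from the log text."""
    crashes = []
    for m in CRASH_RE.finditer(log):
        # int(..., 16) accepts the "0x" prefix Xen prints.
        code, *params = (int(tok, 16) for tok in m.group("args").split())
        crashes.append({
            "domain": int(m.group("dom")),
            "vcpu": int(m.group("vcpu")),
            "code": code,
            "name": BUGCHECK_NAMES.get(code, "unknown"),
            "params": params,
        })
    return crashes


if __name__ == "__main__":
    line = ("(XEN) [ 1022.240112] d1v2 VIRIDIAN GUEST_CRASH: 0x116 "
            "0xffffdb8ffaf76010 0xfffff8077938e9f0 0xffffffffc0000001 0x4")
    for crash in decode_guest_crashes(line):
        # Parameter 3 of 0x116 is an NTSTATUS; keep only its low 32 bits.
        status = crash["params"][2] & 0xFFFFFFFF
        print(f"dom{crash['domain']} vcpu{crash['vcpu']}: "
              f"{crash['name']} (0x{crash['code']:X}), status 0x{status:08X}")
```

Run on the crash line from this log, it prints `dom1 vcpu2: VIDEO_TDR_FAILURE (0x116), status 0xC0000001`. In practice you would feed it the whole output of `xl dmesg` instead of a single hard-coded line.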