Speed Limit: overall, per VM or per NBD connection?

-

Hi,

We have a 1GiB backhaul connection between our DC and our office, which is where our backup server (running XCP-ng 8.3) is located.

So that we don't flood our available bandwidth, I've enabled a 'Speed limit' of 800MiB/s, which should be well below our 1000MiB/s backhaul. However, our connection is at capacity, and our provider reports significant packet loss on the backhaul.

This is how I've configured the backup schedule:

- Concurrency: 6
- NBD connections: 4
- Purge snapshot data when using CBT: Enabled
- Full backup interval: 8
- Speed limit: 800 MiB/s

So my question is: is the 'Speed limit' intended to apply overall, per VM or per NBD connection?

-

Let me ask the XO team about it.

-

Thanks. The other question I have is about the "Full backup interval" option.

The documentation suggests forcing a full backup periodically (e.g. an interval of 20) in case the backup delta itself gets corrupted.

I currently have 168 servers totalling ~65 TB of storage. I'm using the [NOBAK] option on OS and local backups, which reduces this to ~22 TB that I'm using Continuous Replication on.

I'm trying to figure out the best way to stagger this so that only, say, 10 servers get a fresh full backup each time, on a rotating basis.
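One way to think about the rotation is as a round-robin over fixed-size groups. The sketch below is a hypothetical planner, not a Xen Orchestra feature: `rotation_groups`, `full_backup_group` and the server names are made up for illustration, and it assumes you could map each group onto its own job or schedule. With 168 servers and 10 per run it yields 17 groups, so each server would get a full backup roughly every 17 runs, close to the interval of 20 mentioned in the documentation.

```python
# Hypothetical rotation planner: splits a server list into fixed-size groups
# and picks one group per backup run, round-robin. It does not talk to
# Xen Orchestra; mapping groups onto jobs/schedules is up to you.


def rotation_groups(servers: list[str], per_run: int) -> list[list[str]]:
    """Split servers into consecutive groups of at most `per_run` items."""
    if per_run <= 0:
        raise ValueError("per_run must be positive")
    return [servers[i:i + per_run] for i in range(0, len(servers), per_run)]


def full_backup_group(run_index: int, groups: list[list[str]]) -> list[str]:
    """Return the group whose servers would get a full backup on this run."""
    return groups[run_index % len(groups)]


if __name__ == "__main__":
    # Placeholder names standing in for the 168 servers in the post.
    servers = [f"server-{n:03d}" for n in range(1, 169)]
    groups = rotation_groups(servers, per_run=10)
    print(len(groups))                       # 17 groups: 16 of 10, one of 8
    print(full_backup_group(0, groups)[0])   # server-001
    print(full_backup_group(17, groups)[0])  # server-001 again, cycle restarts
```

Grouping by count ignores disk size; if the ~22 TB is spread unevenly, balancing the groups by size instead would even out the data volume of each night's full backups.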

Open to ideas?

Thanks

Kent.

-

Another issue I have is two VMs that fail to back up despite multiple 'full' attempts. After a couple of failed attempts, I deleted the leftover snapshot and the backup VM that was created (basically anything with the VM name in it other than the original machine itself) and tried again (twice).

The other VMs in this schedule all backed up fine. What should I be looking for to resolve this issue?

UUID: ccb53826-eb4a-4b42-b397-2882ad92e17f

Start time: Monday, January 6th 2025, 9:35:17 pm

End time: Monday, January 6th 2025, 10:33:56 pm

Duration: an hour

Transfer data using NBD

Transfer data using NBD

Error: VDI_IN_USE(OpaqueRef:90cc2a1e-4480-318c-a791-32536f9aad02, destroy)

UUID: a826a1d8-9bc1-87a0-0225-f5e7c27c4d84

Start time: Monday, January 6th 2025, 9:40:13 pm

End time: Monday, January 6th 2025, 10:48:00 pm

Duration: an hour

Transfer data using NBD

Transfer data using NBD

Error: VDI_IN_USE(OpaqueRef:61fc3b2b-fe6b-a6e0-a9ed-5f1f18676b98, destroy)

Transfer data using NBD

Snapshot

Start: 2025-01-06 21:35

End: 2025-01-06 21:35

Local storage (179.85 TiB free - thin) - MHP101

transfer

Start: 2025-01-06 21:36

End: 2025-01-06 22:14

Duration: 38 minutes

Size: 74 GiB

Speed: 32.92 MiB/s

Start: 2025-01-06 21:35

End: 2025-01-06 22:14

Duration: 39 minutes

Start: 2025-01-06 21:35

End: 2025-01-06 22:33

Duration: an hour

Error: VDI_IN_USE(OpaqueRef:90cc2a1e-4480-318c-a791-32536f9aad02, destroy)

This is a XenServer/XCP-ng error

Type: full

-

VDI_IN_USE is something else: it means the VDI is still attached somewhere. It's very hard to tell you exactly where; it may be stuck attached to Dom0.
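To narrow down where a VDI is held, one option is to ask XAPI directly which VBDs still have it attached. This is a minimal sketch, assuming the XenAPI Python binding (`XenAPI.Session`, `login_with_password`, `VBD.get_all_records`, `VM.get_all_records`) and a connection to the master of the pool that reported the error; `vdi_ref_from_error`, `find_attachments` and `main` are hypothetical helpers. It only sees attachments XAPI records as VBDs, so a VDI held open some other way would not show up.

```python
import re
from typing import Optional

# Matches the VDI reference in an error such as
# "VDI_IN_USE(OpaqueRef:90cc2a1e-..., destroy)".
VDI_IN_USE_RE = re.compile(r"VDI_IN_USE\((OpaqueRef:[0-9a-f-]+),")


def vdi_ref_from_error(message: str) -> Optional[str]:
    """Extract the VDI OpaqueRef from a VDI_IN_USE error string."""
    m = VDI_IN_USE_RE.search(message)
    return m.group(1) if m else None


def find_attachments(vdi_ref, vbd_records, vm_records):
    """Return (vm_name, is_control_domain) for each VBD currently attaching
    `vdi_ref`. Records are dicts shaped like XenAPI get_all_records() output."""
    hits = []
    for vbd in vbd_records.values():
        if vbd["VDI"] == vdi_ref and vbd["currently_attached"]:
            vm = vm_records[vbd["VM"]]
            hits.append((vm["name_label"], vm["is_control_domain"]))
    return hits


def main(url, user, password, error_message):
    import XenAPI  # XenAPI Python binding; assumed to be installed

    session = XenAPI.Session(url)
    session.xenapi.login_with_password(user, password, "1.0", "vdi-in-use-sketch")
    try:
        ref = vdi_ref_from_error(error_message)
        hits = find_attachments(
            ref,
            session.xenapi.VBD.get_all_records(),
            session.xenapi.VM.get_all_records(),
        )
        for name, is_dom0 in hits:
            print(name + (" (control domain)" if is_dom0 else ""))
    finally:
        session.xenapi.session.logout()
```

A hit flagged as a control domain corresponds to the "stuck attached to Dom0" case.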

For the other question: the limit should be per job in total, regardless of whether NBD or VHD is used.

-

> VDI in use is something else, it means the VDI is still mounted somewhere. It's very hard to tell you exactly where, maybe stuck attached to the Dom0.

I tracked these down. In one case, the same VDI was attached to three different hosts. Restarting the toolstack on the master and on these hosts didn't release the VDI; in the end, the only way I could release it was to restart the physical host. I ended up having to do this on 7 of my 12 hosts to release all the VMs that were in this state.

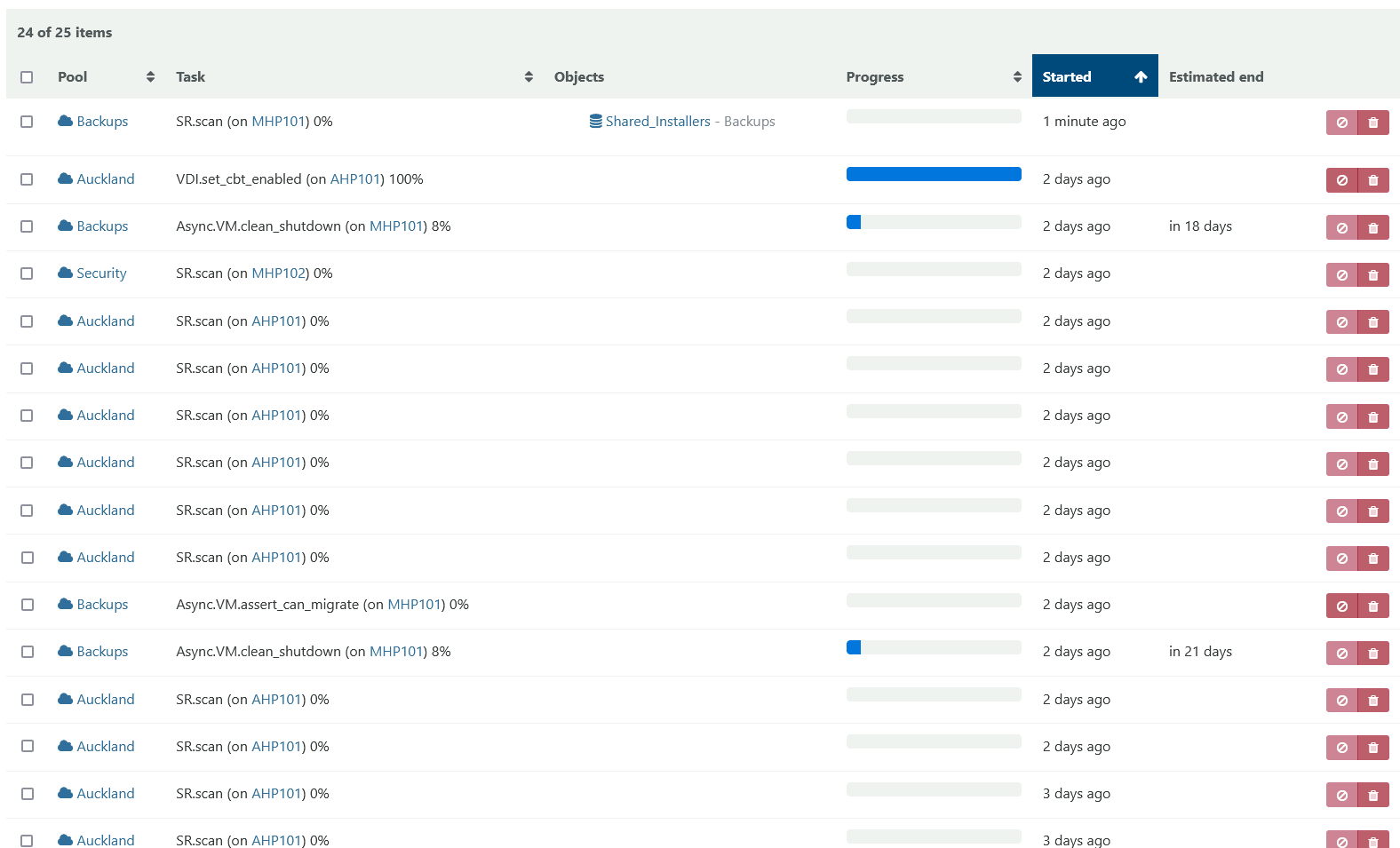

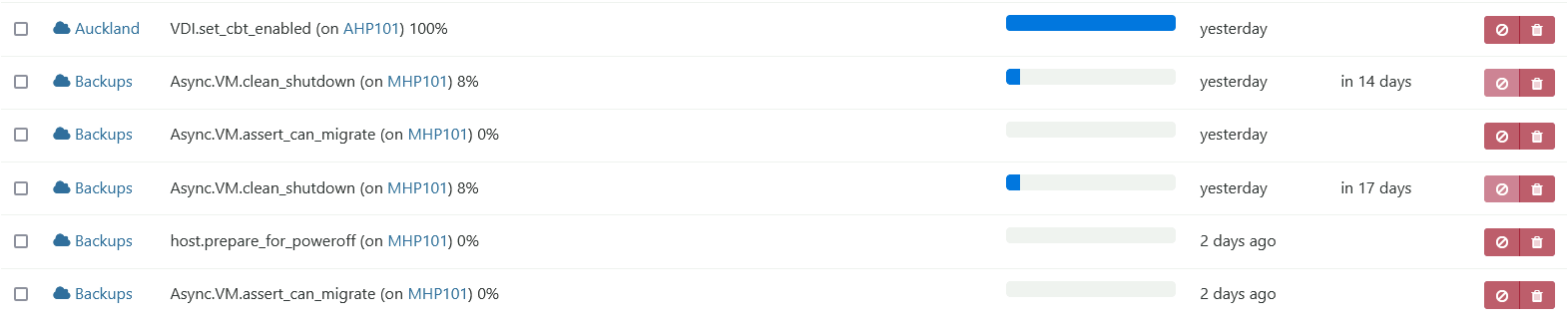

The latest issue I'm having is that I can't migrate VMs between different pools since I enabled backups (at least, I believe it started when I enabled them). I do note that there are jobs still running after 2+ days that say they have days left to run. Again, I've restarted the toolstack and the physical host of MHP101 (at least twice), but the tasks keep coming back after each restart. It's been over a day since I last restarted, and they appear stuck.
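To list which tasks have been pending for days, a sketch along these lines could query XAPI. It assumes the stuck entries are XAPI tasks (Xen Orchestra can also show its own tasks), that the XenAPI binding's `task.get_all_records()` returns records with `name_label`, `status`, `progress` and `created`, and that `created` looks like `20250106T21:35:17Z`. `parse_xapi_time`, `long_running_tasks` and `main` are hypothetical helpers, and the script only lists tasks; it does not cancel anything.

```python
from datetime import datetime, timedelta, timezone


def parse_xapi_time(value) -> datetime:
    """Convert a XAPI timestamp such as '20250106T21:35:17Z' (assumed format)
    into an aware UTC datetime. str() of an xmlrpc DateTime gives the raw text."""
    text = str(value).rstrip("Z")
    return datetime.strptime(text, "%Y%m%dT%H:%M:%S").replace(tzinfo=timezone.utc)


def long_running_tasks(task_records, now, older_than):
    """Return (name, progress, age) for pending tasks older than `older_than`,
    oldest first. `created` must already be an aware datetime."""
    stale = []
    for rec in task_records.values():
        age = now - rec["created"]
        if rec["status"] == "pending" and age > older_than:
            stale.append((rec["name_label"], rec["progress"], age))
    return sorted(stale, key=lambda t: t[2], reverse=True)


def main(url, user, password, hours=12):
    import XenAPI  # XenAPI Python binding; assumed to be installed

    session = XenAPI.Session(url)
    session.xenapi.login_with_password(user, password, "1.0", "stale-task-sketch")
    try:
        records = session.xenapi.task.get_all_records()
        for rec in records.values():
            rec["created"] = parse_xapi_time(rec["created"])
        now = datetime.now(timezone.utc)
        for name, progress, age in long_running_tasks(records, now, timedelta(hours=hours)):
            print(f"{age}  {progress:.0%}  {name}")
    finally:
        session.xenapi.session.logout()
```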

What's going on here, and how do I get rid of these tasks?

Cheers

Kent.

-

Check in the Health view whether you have VDIs attached to the control domain.

Regarding the other question: the speed limit is in MiB/s, so it's bytes, not bits.

-

D'oh! Thanks for clarifying the speed limit. I've adjusted it to 100 MiB/s, which should use about 80% of my available bandwidth.
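The bytes-versus-bits difference is what made 800 MiB/s far too high for this link. A minimal sketch of the conversion, assuming the backhaul is 1 Gbit/s (1000 decimal Mbit/s) and ignoring protocol overhead; the function names are illustrative only:

```python
MIB = 1024 * 1024  # bytes in one mebibyte


def mib_per_s_to_mbit_per_s(mib_per_s: float) -> float:
    """Convert a rate in MiB/s (bytes) to decimal Mbit/s (bits)."""
    return mib_per_s * MIB * 8 / 1_000_000


def link_utilisation(mib_per_s: float, link_mbit_per_s: float) -> float:
    """Fraction of a link consumed by a transfer running at `mib_per_s`."""
    return mib_per_s_to_mbit_per_s(mib_per_s) / link_mbit_per_s


if __name__ == "__main__":
    for limit in (800, 100):
        mbit = mib_per_s_to_mbit_per_s(limit)
        share = link_utilisation(limit, 1000)  # assumes a 1 Gbit/s link
        print(f"{limit} MiB/s ~ {mbit:,.0f} Mbit/s = {share:.0%} of 1 Gbit/s")
```

This prints roughly 6,711 Mbit/s (671% of the link) for the original 800 MiB/s setting and 839 Mbit/s (84%) for 100 MiB/s, consistent with the "about 80%" estimate.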

Resolving the VDIs appears to have worked, as backups are going through now.

I just have these long-running tasks that have been going for multiple days with no end in sight and that I can't resolve. I suspect they may be why I can't migrate VMs between hosts now.

Cheers

Kent.