VDI_COPY_FAILED: Failed to find parent

-

Good day, folks. Does anybody have any insights into my question above?

-

Hi,

Have you tried with `xe vdi-copy`?

-

@olivierlambert No sir, I have not. I'll try that and report back shortly.

-

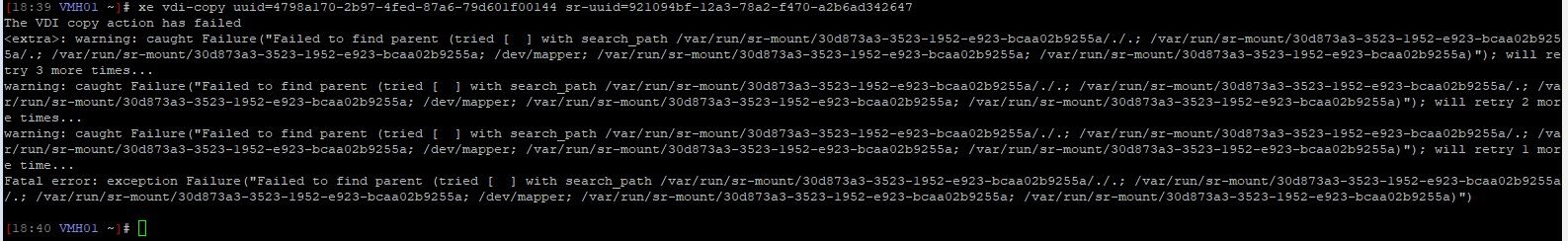

@olivierlambert I just tried the operation via the `xe vdi-copy` command on the pool master (VMH01), and it failed with the same error (see screenshot).

I restarted the toolstack and tried again, with the same result. I even tried with XCP-ng Center (vNext) and got the same error.
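For reference, the CLI attempt described in this post can be sketched as a small Python wrapper. This is only an illustration under stated assumptions: `build_vdi_copy_cmd` and `copy_vdi` are hypothetical helper names, the UUIDs are placeholders to be filled in, and `xe` is assumed to be on the PATH of the host where the script runs.

```python
import subprocess


def build_vdi_copy_cmd(vdi_uuid, sr_uuid):
    """Return the argv list for copying a VDI to another SR with xe."""
    return ["xe", "vdi-copy", f"uuid={vdi_uuid}", f"sr-uuid={sr_uuid}"]


def copy_vdi(vdi_uuid, sr_uuid):
    """Run the copy and return (exit_code, stdout, stderr) for inspection."""
    result = subprocess.run(
        build_vdi_copy_cmd(vdi_uuid, sr_uuid),
        stdout=subprocess.PIPE,
        stderr=subprocess.PIPE,
        universal_newlines=True,
    )
    return result.returncode, result.stdout.strip(), result.stderr.strip()
```

Calling `copy_vdi("<vdi-uuid>", "<destination-sr-uuid>")` on the pool master would return the exit code together with whatever `xe` printed, which makes it easier to paste the exact error text into a ticket.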

-


So it's not an XO issue, but something related to the storage stack. Can you do a `ls -latrh` in the SR folder to see what's inside?

-

@olivierlambert As requested, here's the output of `ls -latrh` for both the source SR and the destination SR:

SOURCE SR:

[14:02 VMH01 ~]# ls -latrh /run/sr-mount/30d873a3-3523-1952-e923-bcaa02b9255a
total 170G
-rw-r--r-- 1 root root 1 Nov 1 23:37 sm-hardlink.conf
-rw-r--r-- 1 root root 314K Nov 8 19:38 12d41988-8c76-40b0-8073-467c673e9c47.vhd
-rw-r--r-- 1 root root 314K Nov 8 19:40 106c139a-a961-4e7a-b322-df1e7f81f87d.vhd
-rw-r--r-- 1 root root 770M Nov 8 19:40 05628260-c1ec-4446-908e-1024535b8e02.vhd
-rw-r--r-- 1 root root 598M Nov 8 19:48 8e64b5cf-c880-4677-b8de-7c37ac806582.vhd
-rw-r--r-- 1 root root 314K Nov 8 20:16 4794c6a5-3062-46f9-a7c8-27fe2c46a36e.vhd
-rw-r--r-- 1 root root 314K Nov 13 20:49 4798a170-2b97-4fed-87a6-79d601f00144.vhd
-rw-r--r-- 1 root root 1.8G Nov 13 20:49 5dd4a829-6eed-458f-8bca-99ff44347f22.vhd
-rw-r--r-- 1 root root 2.0G Nov 22 13:39 f1f0c837-8460-4abb-bbbd-5a1827d09d04.vhd
-rw-r--r-- 1 root root 2.2G Nov 27 16:55 6b9299ae-64c4-4719-b866-c504dd46f0f6.vhd
-rw-r--r-- 1 root root 1.7G Nov 27 18:35 564d8df9-735c-4781-9f82-481a6e9f4e6b.vhd
-rw-r--r-- 1 root root 314K Nov 27 18:35 3a4c3c5e-5424-4a6b-b9c2-7db80695c211.vhd
-rw-r--r-- 1 root root 103M Nov 27 18:35 152bdb03-1559-492e-af08-b7dff77878c0.vhd
-rw-r--r-- 1 root root 314K Dec 16 22:10 OLD_26c304ef-36d2-42ca-9930-9aa76ba933e8.vhd
-rw-r--r-- 1 root root 11M Dec 17 20:17 73e3cb5e-c936-4eb4-b15c-65987880f7e5.vhd
-rw-r--r-- 1 root root 3.7G Dec 17 20:17 6c8efff9-1033-4622-9d40-deab6b250a80.vhd
-rw-r--r-- 1 root root 438M Dec 17 20:26 9139a04d-6138-4c88-adeb-80d6ff8bc79f.vhd
-rw-r--r-- 1 root root 279M Dec 17 20:27 e07e6db7-00e0-4be8-ac6d-8d5175e18058.vhd
-rw-r--r-- 1 root root 2.0G Dec 17 20:55 771286d5-cb8d-4a99-a04d-258c221cff0b.vhd
-rw-r--r-- 1 root root 656M Dec 17 20:57 e0fb4664-c11a-4b15-85a5-6a6a5f54c349.vhd
-rw-r--r-- 1 root root 2.1G Dec 17 20:59 e838f601-4cec-43cf-afce-7927324ae10b.vhd
-rw-r--r-- 1 root root 3.7G Dec 18 09:44 eaaf71a8-622e-41e8-b277-8322a7962cc4.vhd
-rw-r--r-- 1 root root 74G Dec 18 16:21 b90664ff-507f-4399-9920-79497458d1b2.vhd
-rw-r--r-- 1 root root 5.1G Dec 19 00:52 76f3d5bc-e4de-40ae-9958-71e6becb1b83.vhd
-rw-r--r-- 1 root root 3.9G Dec 19 00:52 1bee7e69-1e9f-42e4-aaee-396a90bd6745.vhd
-rw-r--r-- 1 root root 14G Dec 19 00:53 46006a84-c4d6-4939-b0b1-305760e50d9d.vhd
drwx------ 9 root root 200 Jan 3 07:53 ..
-rw-r--r-- 1 root root 9.3G Jan 3 08:41 c5801736-90a6-4ddd-961f-e453ce43c772.vhd
-rw-r--r-- 1 root root 13M Jan 3 08:41 6561bbbd-d1e4-483d-bace-15d0264d814b.vhd
-rw-r--r-- 1 root root 21K Jan 3 08:41 filelog.txt
drwxr-xr-x 2 root root 16K Jan 3 08:41 .
-rw-r--r-- 1 root root 38G Jan 15 2025 8e26a3b8-0bcf-4db8-b558-75611d952dc1.vhd
-rw-r--r-- 1 root root 1.3G Jan 15 2025 c6967c64-01a3-4910-9783-78c36629c080.vhd
-rw-r--r-- 1 root root 4.4G Jan 15 2025 e780b1e4-e800-4d2c-b3d1-5bfa31693ba9.vhd
[14:03 VMH01 ~]#

DESTINATION SR:

[14:03 VMH01 ~]# ls -latrh /run/sr-mount/921094bf-12a3-78a2-f470-a2b6ad342647/
total 88G
-rw-r--r-- 1 root root 1 Dec 12 20:07 sm-hardlink.conf
-rw-r--r-- 1 root root 312K Dec 16 21:18 02472306-fe53-43e9-8871-6f90b49c3457.vhd
-rw-r--r-- 1 root root 21G Dec 16 21:59 4930f19a-e540-40d5-8dc7-8d1f103c5ed9.vhd
-rw-r--r-- 1 root root 314K Dec 16 22:08 305dc785-f819-4a2d-b071-dc6d1f5b7020.vhd
-rw-r--r-- 1 root root 51G Dec 16 22:10 7f8c18e5-4e7f-4742-8b5c-cf3d92080c51.vhd
-rw-r--r-- 1 root root 314K Dec 16 22:31 f9a568e9-3df6-4d45-99cd-1b2f2305d4a6.vhd
-rw-r--r-- 1 root root 19G Dec 16 22:31 b0c7bda2-4b42-4106-b5ef-5618541caeb4.vhd
-rw-r--r-- 1 root root 2.4G Dec 17 11:12 41dd5596-5945-45a4-8b28-dbe4b8ac69c6.vhd
-rw-r--r-- 1 root root 3.6G Dec 19 00:23 b324640b-7ff9-4df4-b57f-895b9a24c85e.vhd
-rw-r--r-- 1 root root 101M Dec 19 01:38 b3bd2577-9eda-41d1-9f39-663651435474.vhd
-rw-r--r-- 1 root root 99M Dec 19 01:38 c95d9bf8-316d-482b-b03f-027b3bce8127.vhd
-rw-r--r-- 1 root root 99M Dec 19 01:38 3bfdf9ce-56ea-4bf2-83dc-5e2f8544ec3c.vhd
-rw-r--r-- 1 root root 2.3G Dec 19 01:38 014116e0-c75d-4e2d-a3ca-59e72ae9db08.vhd
-rw-r--r-- 1 root root 1.8G Dec 19 21:34 8f7d755f-ebfc-4321-9bab-30aedc13787b.vhd
-rw-r--r-- 1 root root 13M Dec 20 10:49 1b3d22fe-ea47-4915-9587-d6e4aac4d1bc.vhd
-rw-r--r-- 1 root root 13M Dec 20 10:49 cb8dbecd-102a-4e73-ba3c-2b32ccfded6d.vhd
-rw-r--r-- 1 root root 13M Dec 20 10:49 291e296f-d0e7-4382-9ae3-9835db15d974.vhd
-rw-r--r-- 1 root root 3.3G Dec 20 10:49 8b2a6ef1-2f42-44e8-9af4-8961bc96200e.vhd
-rw-r--r-- 1 root root 108K Dec 20 12:15 23bd22eb-fc83-4f2c-95f7-9baa55db32a0.vhd
-rw-r--r-- 1 root root 63M Dec 20 12:15 9a7e2b37-dd8f-4e95-ba84-fb3e26f179b1.vhd
-rw-r--r-- 1 root root 108K Dec 20 12:15 65e77f16-bd71-45d6-99ec-5d6e195f526b.vhd
-rw-r--r-- 1 root root 8.2M Dec 20 12:15 249b7b7c-de9d-46dc-b5dc-3e5582227cfa.vhd
-rw-r--r-- 1 root root 108K Dec 20 12:15 198b0bc1-33ac-4b2c-8e1d-f1eb84542e71.vhd
-rw-r--r-- 1 root root 8.2M Dec 20 12:15 376a5f9c-84e0-41b8-b830-b42e0074c11d.vhd
-rw-r--r-- 1 root root 314K Dec 20 12:15 af668755-61d6-42b0-ad3b-23f6d86379d9.vhd
-rw-r--r-- 1 root root 5.7G Dec 20 12:15 7e1ab2c4-c9c3-4be6-9aef-6839147bc92d.vhd
-rw-r--r-- 1 root root 6.5G Dec 20 14:40 8838ebef-2efc-4835-8b09-fc4d4c719286.vhd
-rw-r--r-- 1 root root 314K Dec 20 15:16 f4b28604-3142-4030-b3cb-1191de0b84fa.vhd
-rw-r--r-- 1 root root 241M Dec 20 15:16 6a3848ca-ec63-4cb5-87bc-4c36c3e07974.vhd
-rw-r--r-- 1 root root 1.1G Jan 1 13:51 3473eec2-72e8-420a-8360-ec7998bace1f.vhd
drwx------ 9 root root 200 Jan 3 07:53 ..
-rw-r--r-- 1 root root 5.2M Jan 3 07:54 8bee2082-fa8f-4849-a3ec-a3aeb2330ac5.vhd
-rw-r--r-- 1 root root 5.2M Jan 3 07:54 368454d1-0e36-4852-aa01-f89cae6289e2.vhd
-rw-r--r-- 1 root root 312K Jan 3 08:41 14a3a217-47fa-4db3-9513-25c6933eb0c5.vhd
-rw-r--r-- 1 root root 15G Jan 13 08:51 d7556d20-823b-4df6-bec3-f2ec5106d7b3.vhd
-rw-r--r-- 1 root root 314K Jan 14 19:43 27d179cb-2849-4c4f-9060-3c324258b1c7.vhd
-rw-r--r-- 1 root root 13K Jan 14 19:43 filelog.txt
drwxr-xr-x 2 root root 8.0K Jan 14 21:10 .
-rw-r--r-- 1 root root 103M Jan 14 21:27 261bdfa3-ab41-4093-b6ba-325abc2a1186.vhd
-rw-r--r-- 1 root root 141M Jan 15 14:00 ecc11478-a0bd-4c64-810c-1fc19dd28a62.vhd
-rw-r--r-- 1 root root 8.7G Jan 15 2025 a30dfb0c-da63-4f96-a5dd-2d22a41cdb64.vhd
-rw-r--r-- 1 root root 15G Jan 15 2025 759c7422-6531-4cbb-ba33-bfb044f549cd.vhd
-rw-r--r-- 1 root root 5.0G Jan 15 2025 f526778e-be83-4f89-b6da-8dc1b144cdbd.vhd
[14:03 VMH01 ~]#

-

So, before anything, I would double-check that you have a backup of all your VMs on the source SR.

Then, I would try to move `OLD_26c304ef-36d2-42ca-9930-9aa76ba933e8.vhd` to another folder (like `/root`) and rescan the SR, then try again.

-

@olivierlambert I have not been able to create a successful backup yet, so backups are a moot point right now. I already have a support ticket open (#7732723).

All hosts are shut down now so I can run the memory tests. Once they complete on all three hosts, I'll boot them up, do what you've suggested, and report back.

Now, for my own edification, what is the significance of this file, `OLD_26c304ef-36d2-42ca-9930-9aa76ba933e8.vhd`, and why do you think moving it will have a positive effect?

-

Because it seems to be a leftover from a failed coalesce; at the very least, it seems you have a problem with a chain. Maybe it's this chain, and moving the file (not removing it, just in case) will make it right. The best solution would be to check which chain the UUID reported in the error belongs to, then remove that broken chain and rescan the SR.
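As a rough illustration of how such a leftover could be spotted programmatically, the sketch below flags entries in an SR folder that do not follow the usual naming. It rests on two assumptions that are not confirmed anywhere in this thread: that regular VDI files on a file-based SR are named `<uuid>.vhd`, and that `sm-hardlink.conf` and `filelog.txt` (which appear in both listings earlier in the thread) are normal housekeeping files. The function name `unexpected_entries` is hypothetical.

```python
import re

# Assumption: regular VDI files are named "<uuid>.vhd".
UUID_VHD = re.compile(
    r"^[0-9a-f]{8}-[0-9a-f]{4}-[0-9a-f]{4}-[0-9a-f]{4}-[0-9a-f]{12}\.vhd$"
)

# Assumption: these entries are expected in a healthy SR folder.
EXPECTED = {".", "..", "sm-hardlink.conf", "filelog.txt"}


def unexpected_entries(names):
    """Return entries that are neither '<uuid>.vhd' nor known housekeeping files."""
    return [n for n in names if n not in EXPECTED and not UUID_VHD.match(n)]
```

On the host, something like `unexpected_entries(os.listdir("/run/sr-mount/<sr-uuid>"))` (after `import os`) would list candidates worth a closer look. It only checks file names, so it says nothing about whether a chain is actually broken.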

-

@olivierlambert Roger that. The memtest is still running, and I've left the office to go get my kids from school. I will be returning to the lab in a couple of hours, and if the memtest is done on host1, I'll bring up the pool, move `OLD_26c304ef-36d2-42ca-9930-9aa76ba933e8.vhd`, and report back.

-

@olivierlambert As requested, I moved the VDI named `OLD_26c304ef-36d2-42ca-9930-9aa76ba933e8.vhd` out of the source SR `30d873a3-3523-1952-e923-bcaa02b9255a` and then rescanned the SR. I then retried the copy, both from the XOA web UI and from the master host (VMH01) using `xe vdi-copy`. The result was the same failure: the parent couldn't be found.

Is there a place I can find out what the UUID is of the parent VDI that seems to be missing? Maybe it's still on the NFS server but has somehow gotten corrupted?

Also, I'd like to point out that I have an unresolved issue with that particular SR, where some of the VDIs keep disappearing and reappearing (Ticket#7729741). Perhaps the two could be related?
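The move-and-rescan step described in this post could be scripted roughly as follows. Treat it as a sketch only: `quarantine_file`, `build_sr_scan_cmd` and `rescan_sr` are hypothetical helper names, `/root` is simply the destination suggested earlier in the thread, and `xe` is assumed to be available on the host.

```python
import os
import shutil
import subprocess


def quarantine_file(sr_mount, filename, dest_dir="/root"):
    """Move (not delete) a file out of the SR mount so it can be put back later."""
    src = os.path.join(sr_mount, filename)
    dst = os.path.join(dest_dir, filename)
    if os.path.exists(dst):
        # Refuse to overwrite an earlier quarantined copy.
        raise FileExistsError(dst)
    shutil.move(src, dst)
    return dst


def build_sr_scan_cmd(sr_uuid):
    """Return the argv list that asks XAPI to rescan the SR."""
    return ["xe", "sr-scan", f"uuid={sr_uuid}"]


def rescan_sr(sr_uuid):
    """Run the rescan and raise if xe reports a failure."""
    subprocess.run(build_sr_scan_cmd(sr_uuid), check=True)
```

Moving rather than deleting keeps the option of copying the file back into the SR folder and rescanning again if the change makes things worse.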

-

So now you have to figure out the chain to find which VDI has a parent pointing to this missing VDI. It's hard to tell if it's related, but you clearly have an issue on this storage (why is not easy to answer).

@Danp I think I remember a tool we could use to display the chain?
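One way to approach this by hand from the host's CLI, sketched here under assumptions: each `.vhd` file is asked for its parent with `vhd-util query -n <file> -p`, and any child whose parent file is absent from the SR folder is reported. The helper names are hypothetical, and the exact output format of `vhd-util` (a parent path, or a line containing "has no parent" for a base image) is assumed rather than taken from this thread.

```python
import glob
import os
import subprocess


def query_parent(vhd_path):
    """Return the parent's file name for one VHD, or None for a base image."""
    proc = subprocess.run(
        ["vhd-util", "query", "-n", vhd_path, "-p"],
        stdout=subprocess.PIPE,
        stderr=subprocess.STDOUT,
        universal_newlines=True,
    )
    out = proc.stdout.strip()
    if "has no parent" in out:  # assumed message for a base image
        return None
    if proc.returncode != 0:
        raise RuntimeError(f"vhd-util failed for {vhd_path}: {out}")
    return os.path.basename(out)


def build_parent_map(sr_mount, query=query_parent):
    """Map every '*.vhd' file name in the SR folder to its parent (or None)."""
    paths = sorted(glob.glob(os.path.join(sr_mount, "*.vhd")))
    return {os.path.basename(p): query(p) for p in paths}


def missing_parents(parent_map):
    """Return {child: parent} where the parent file is not in the SR folder."""
    return {
        child: parent
        for child, parent in parent_map.items()
        if parent is not None and parent not in parent_map
    }


def chain_of(vhd, parent_map):
    """Walk from a VHD towards its base image, stopping at a missing parent."""
    chain, seen = [vhd], {vhd}
    while parent_map.get(chain[-1]) is not None:
        nxt = parent_map[chain[-1]]
        if nxt in seen:  # guard against a corrupted, cyclic chain
            break
        chain.append(nxt)
        seen.add(nxt)
    return chain
```

Running `missing_parents(build_parent_map("/run/sr-mount/<sr-uuid>"))` on the host would then name the children that point at a file the SR no longer contains, and `chain_of` shows where a given chain stops.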

-

@olivierlambert Yes, the tool is called `xapi-explore-sr`. Installation and usage details can be found at https://github.com/vatesfr/xen-orchestra/tree/master/packages/xapi-explore-sr.

-

> So now you have to figure out the chain to find which VDI has a parent pointing to this missing VDI.

@olivierlambert How do I figure out the chain of a VDI? I've looked over the entire XOA UI to see if there's a way of seeing who the parent of any VDI is, but I don't see anything obvious. Must this be done via CLI? If so, how?

Also, I don't know enough about XCP-ng or XO to confidently agree that it's the storage, but I can certainly see why you think so. That said, I have another SR - targeting the same NFS server - where I'm not having these issues.

I want to take advantage of this situation to help you figure out if there is a problem with the underlying code. I could very easily blow away the SR I'm having issues with, but that will rob us of this opportunity.

-

@Danp and @olivierlambert

Haha, looks like we were both typing at the same time. I'll check out the link provided and educate myself on how to use the tool. Thanks again.

-

One problem: I'm in an air-gapped environment, so I cannot pull packages from the internet directly. How can I get this utility installed?

Also, I figure that this utility needs to be run on XOA, since I don't see NPM installed on the hosts?

-

In theory, it could be installed on any machine. Is there a way to install it on a laptop from the Internet and then plug that laptop into your air-gapped network?

-

@olivierlambert Technically, yes, I suppose I could do that. However, that would not mimic what a SysAdmin would have to do, should they run into this trouble in production.

Is there no other alternative to get this utility, perhaps as a .deb package that I can manually install on XOA?

Lastly, I performed the following testing just now:

- Created a new VDI on a known working VM and put the VDI on the problematic SR (let's call it NFS-DS1).
- I then attempted to migrate the newly created VDI to the known good SR (NFS-DS2); it failed with error `VM_LACKS_FEATURE`.
- So I created another VDI, attached to the same VM, but this time I put it on SR NFS-DS2 (the good one) and then attempted to migrate it to SR NFS-DS1; IT WORKED.
- So I retried migrating it back to NFS-DS2, and it worked as well.
- Finally, I went back to the original new VDI I started with, and attempted to migrate it from SR NFS-DS1 to NFS-DS2; it worked!

So, it seems the issue remains with the VDIs that appear to have lost their parent.

P.S. I haven't noticed the VDIs disappearing from SR NFS-DS1 for over an hour now.

-
