Internal error: Not_found after Vinchin backup

-

@olivierlambert Sorry, do you mean the "Destroy VDI" button? Will that cause any issues with the actual VM?

-

I don't think so, it's just a snapshot.

-

@olivierlambert Is there anything scheduled by default in XCP-ng on Sundays? Or how often does it coalesce? I'm just trying to figure out why these issues happen every Sunday.

-

There's no schedule in XCP-ng. Coalesce happens when you remove snapshots (without CBT). You can check coalesce activity in /var/log/SMlog and watch what's going on on Sunday.
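To see whether coalesce activity actually lines up with Sundays, the lines in SMlog that mention coalesce can be bucketed by date and weekday. A minimal sketch, assuming SMlog lines start with a syslog-style timestamp such as `Jul 8 14:59:34` and that you supply the year yourself, since SMlog doesn't record it. `coalesce_lines_per_day` and `report` are hypothetical helper names, and month/weekday names assume an English (C) locale.

```python
import re
from collections import Counter
from datetime import datetime

# Syslog-style prefix, e.g. "Jul 8 14:59:34 host SMGC: ..." (one or more spaces after the month).
PREFIX = re.compile(r"^(?P<mon>[A-Z][a-z]{2})\s+(?P<day>\d{1,2})\s+\d{2}:\d{2}:\d{2}\s")


def coalesce_lines_per_day(lines, year):
    """Count lines mentioning 'coalesce' (case-insensitive, like grep -i),
    keyed by (ISO date, weekday name). The year is an assumption supplied
    by the caller because SMlog timestamps don't include it."""
    counts = Counter()
    for line in lines:
        if "coalesce" not in line.lower():
            continue
        m = PREFIX.match(line)
        if not m:
            continue  # no recognizable timestamp at the start of the line
        stamp = datetime.strptime(f"{year} {m['mon']} {m['day']}", "%Y %b %d")
        counts[(stamp.date().isoformat(), stamp.strftime("%A"))] += 1
    return counts


def report(path="/var/log/SMlog", year=None):
    """Print one line per day: date, weekday, number of coalesce-related lines."""
    year = year or datetime.now().year
    with open(path, errors="replace") as f:
        counts = coalesce_lines_per_day(f, year)
    for (day, weekday), n in sorted(counts.items()):
        print(f"{day} {weekday:<9} {n}")
```

Calling `report()` on the host (or on a copy of the log, passing the path and year) prints one row per day; a spike on Sunday rows would point at coalesce work clustering there. Logs that span a year boundary would need the year handled per line.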

-

@olivierlambert Ok. Why does XOA show some with CBT and some without? How does that get set? I apologize, I am just trying to narrow this down. I appreciate all the help.

-

I suppose those snapshots were made by Vinchin, so I can't tell. You can remove all snapshots to get back to a clean state before trying anything else.

-

@olivierlambert Ok, will do. Synology says that XCP-ng is sending LUN disconnects to the LUN, based on the dmesg output on the Synology:

```
iSCSI:target_core_tmr.c:629:core_tmr_lun_reset LUN_RESET: TMR starting for [fileio/Synology-VM-LUN/9c706a83-1e77-47a8-a5c5-18cfe815459d], tas: 1
```
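A LUN reset is a SCSI task-management request sent by the initiator, so counting these messages per backstore shows how often, and on which LUN, the target saw them. A minimal sketch, assuming the dmesg wording stays exactly as quoted above (`LUN_RESET: TMR starting for [...]`) and that you feed it dmesg output saved from the Synology; `lun_resets` is a hypothetical helper name.

```python
import re
from collections import Counter
from typing import Iterable

# Matches the LIO target message quoted from the Synology dmesg, e.g.
# "... LUN_RESET: TMR starting for [fileio/Synology-VM-LUN/<uuid>], tas: 1"
LUN_RESET = re.compile(r"LUN_RESET: TMR starting for \[(?P<backstore>[^\]]+)\]")


def lun_resets(lines: Iterable[str]) -> Counter:
    """Count LUN_RESET task-management requests per backstore path."""
    counts = Counter()
    for line in lines:
        m = LUN_RESET.search(line)
        if m:
            counts[m["backstore"]] += 1
    return counts
```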

-

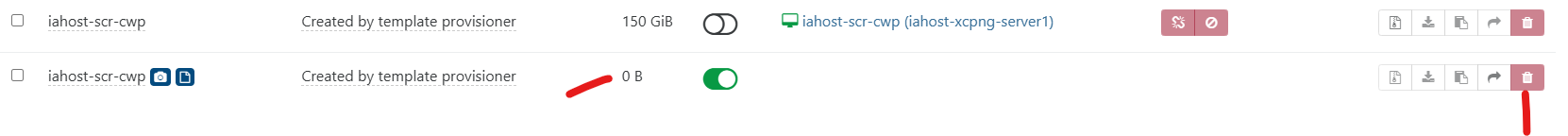

So I just click "Destroy VDI" on the snapshot?

-

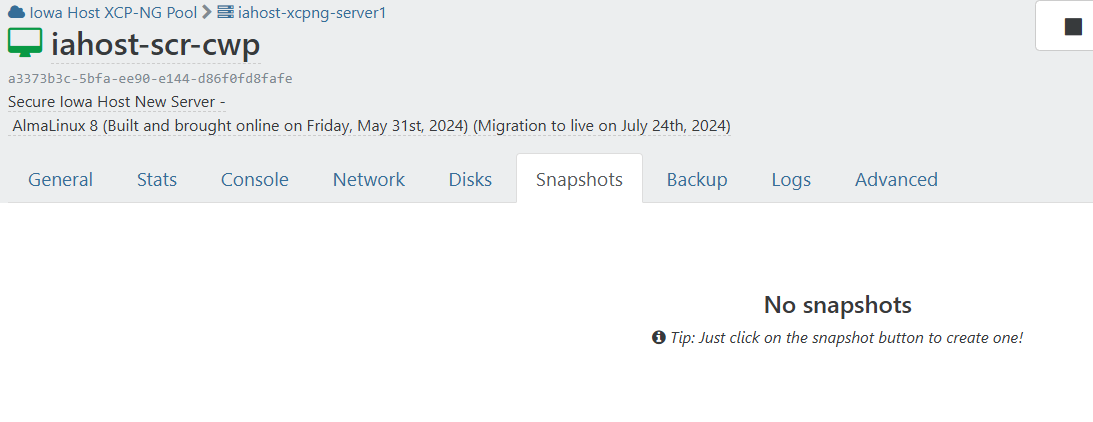

Sorry, I'm just a bit confused because the VM view shows no snapshots.

-

Yes, destroy those CBT snapshots. They're not visible in VM/Snapshots because they are CBT snapshots, not "real" VM snapshots that you can roll back to.
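To make the distinction concrete from the host side rather than XO, a minimal sketch, assuming that the snapshots XO labels "CBT" are XAPI VDIs of type `cbt_metadata` (snapshots whose data was destroyed, leaving only the changed-block-tracking metadata). It works on plain dicts shaped like the output of XenAPI's `VDI.get_all_records()`, so it can be tried without a host; `classify_snapshots` is a hypothetical name and the sample records in the test are made up.

```python
from typing import Dict, List


def classify_snapshots(vdi_records: Dict[str, dict]) -> Dict[str, List[str]]:
    """Split snapshot VDIs into CBT metadata-only snapshots and regular ones.

    vdi_records maps an opaque ref to a VDI record dict; only the
    'is_a_snapshot', 'type' and 'uuid' fields are read.
    """
    result: Dict[str, List[str]] = {"cbt_metadata": [], "regular": []}
    for record in vdi_records.values():
        if not record.get("is_a_snapshot"):
            continue  # live disks are not snapshots; skip them
        # Assumption: a snapshot with only CBT metadata left has type "cbt_metadata".
        key = "cbt_metadata" if record.get("type") == "cbt_metadata" else "regular"
        result[key].append(record["uuid"])
    return result
```

On a real host the records would come from the XenAPI Python bindings (`session.xenapi.VDI.get_all_records()` after logging in); that part is left out so the sketch stays self-contained.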

-

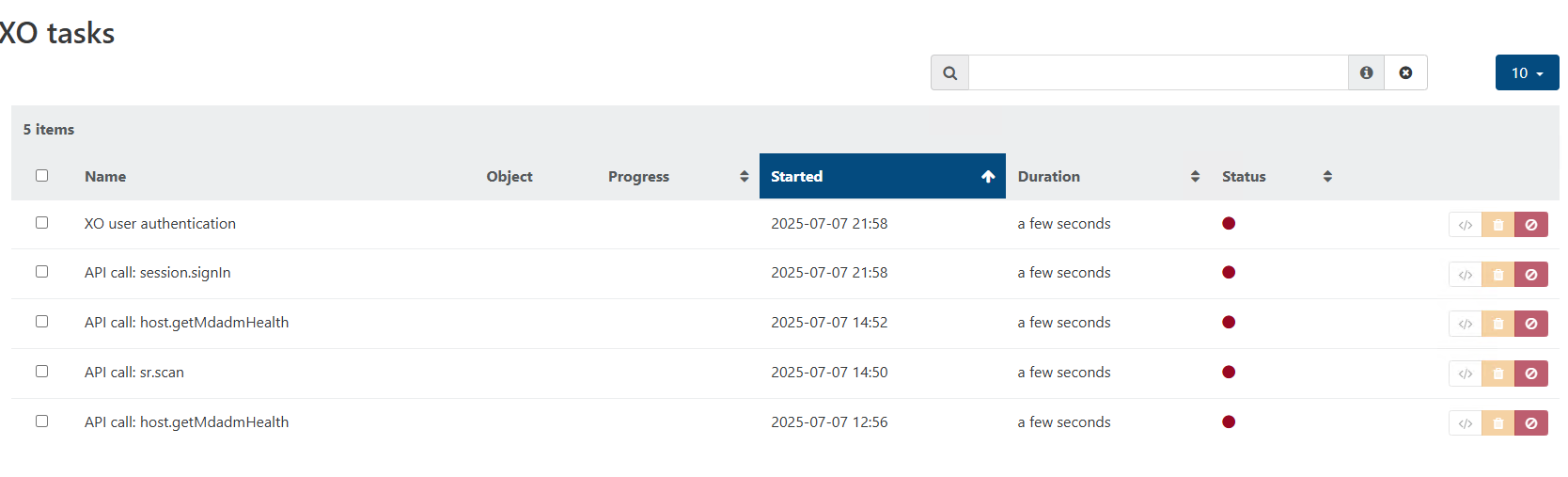

@olivierlambert I did that on one and noticed a task for the API call `sr.scan`. I opened the raw log and I'm seeing errors. Attached: SR Scan Errors.txt

-

Is that due to the master being disconnected from the SR? That's the host I was referring to in my previous topic, "Pool Master".

-

It just keeps bouncing over and over.

-

So even taking Vinchin out of the picture for now, I'm still worried that XCP-ng will run garbage collection and disconnect the SR, bringing the VM down again.

-

Ignore the XO Tasks panel; it's not important. As for the rest, please use it and report back if you continue to have issues.

-

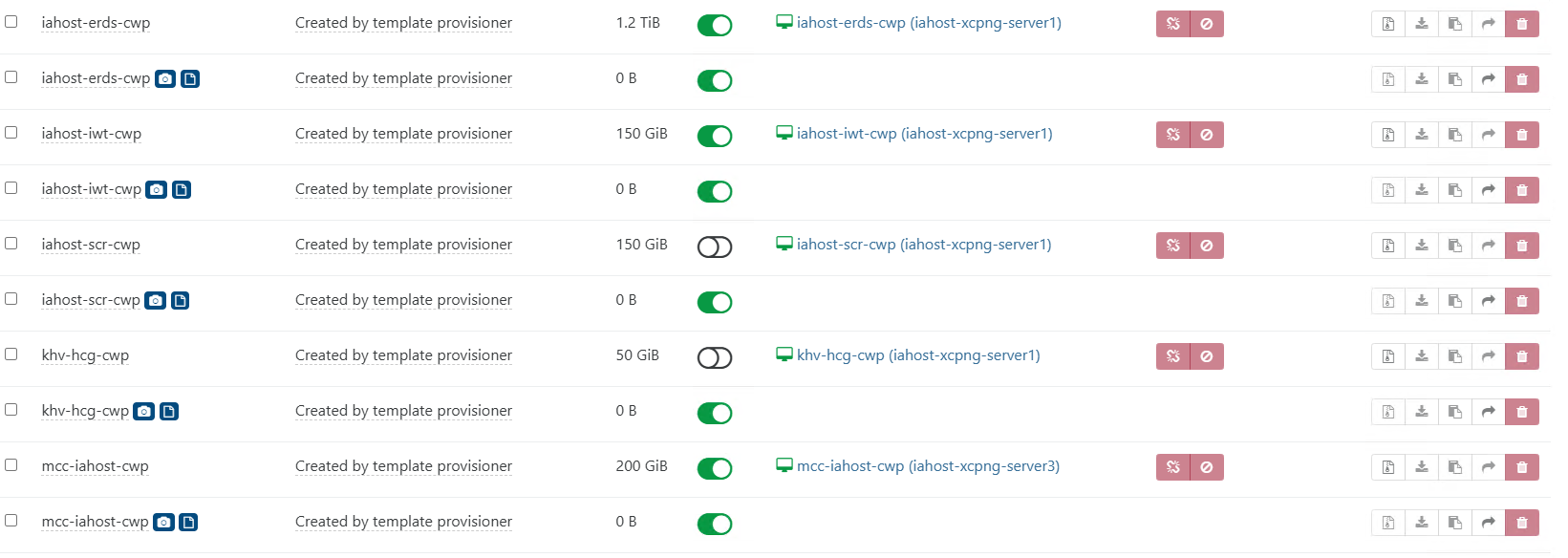

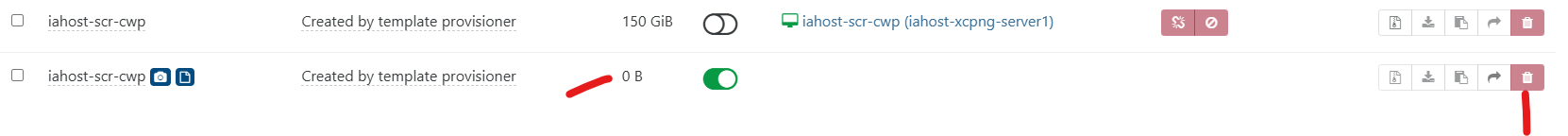

I deleted the snapshot from this one VM.

However, it still appears in this list:

-

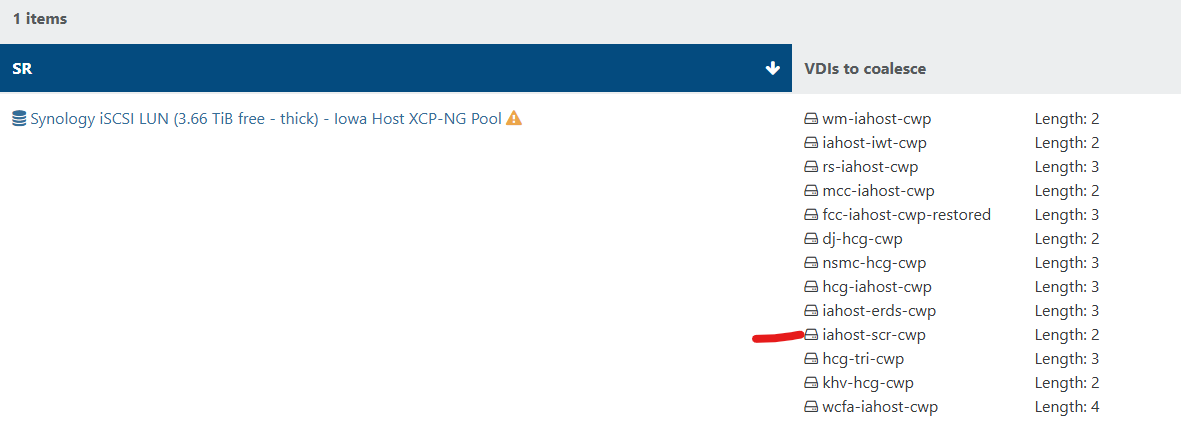

You can check whether coalesce is running by looking for planned garbage collection in the XO SR view.

-

What does this mean exactly? When will this happen? I'm sorry, I'm very nervous.

-

I don't know if this helps, but here's what I see in the SMlog:

```
[15:18 iahost-xcpng-server2 ~]# grep -i "coalesce" /var/log/SMlog
Jul 8 14:32:53 iahost-xcpng-server2 SM: [31275] Aborting GC/coalesce
Jul 8 14:33:00 iahost-xcpng-server2 SM: [31789] Entering doesFileHaveOpenHandles with file: /dev/mapper/VG_XenStorage--88d7607c--f807--3b06--6f70--2dcb319d97ea-coalesce_8241ba22--3125--4f45--b3b1--254792a525c7_1
Jul 8 14:33:00 iahost-xcpng-server2 SM: [31789] Entering findRunningProcessOrOpenFile with params: ['/dev/mapper/VG_XenStorage--88d7607c--f807--3b06--6f70--2dcb319d97ea-coalesce_8241ba22--3125--4f45--b3b1--254792a525c7_1', False]
Jul 8 14:33:00 iahost-xcpng-server2 SM: [31789] ['/sbin/dmsetup', 'remove', '/dev/mapper/VG_XenStorage--88d7607c--f807--3b06--6f70--2dcb319d97ea-coalesce_8241ba22--3125--4f45--b3b1--254792a525c7_1']
Jul 8 14:59:34 iahost-xcpng-server2 SMGC: [20458] Coalesced size = 316.035G
Jul 8 14:59:34 iahost-xcpng-server2 SMGC: [20458] Coalesce candidate: *8241ba22[VHD](600.000G//88.477G|n) (tree height 5)
Jul 8 14:59:35 iahost-xcpng-server2 SMGC: [20458] Coalesced size = 316.035G
Jul 8 14:59:35 iahost-xcpng-server2 SMGC: [20458] Coalesce candidate: *8241ba22[VHD](600.000G//88.477G|a) (tree height 5)
Jul 8 14:59:35 iahost-xcpng-server2 SM: [20458] ['/sbin/lvremove', '-f', '/dev/VG_XenStorage-88d7607c-f807-3b06-6f70-2dcb319d97ea/coalesce_8241ba22-3125-4f45-b3b1-254792a525c7_1']
Jul 8 14:59:35 iahost-xcpng-server2 SM: [20458] ['/sbin/dmsetup', 'status', 'VG_XenStorage--88d7607c--f807--3b06--6f70--2dcb319d97ea-coalesce_8241ba22--3125--4f45--b3b1--254792a525c7_1']
Jul 8 14:59:36 iahost-xcpng-server2 SMGC: [20458] Coalesced size = 316.035G
Jul 8 14:59:36 iahost-xcpng-server2 SMGC: [20458] Coalesce candidate: *8241ba22[VHD](600.000G//88.477G|a) (tree height 5)
Jul 8 14:59:36 iahost-xcpng-server2 SM: [20458] ['/sbin/lvcreate', '-n', 'coalesce_8241ba22-3125-4f45-b3b1-254792a525c7_1', '-L', '4', 'VG_XenStorage-88d7607c-f807-3b06-6f70-2dcb319d97ea', '--addtag', 'journaler', '-W', 'n']
Jul 8 15:01:41 iahost-xcpng-server2 SMGC: [20458] Coalesced size = 316.035G
Jul 8 15:02:11 iahost-xcpng-server2 SMGC: [20458] Running VHD coalesce on *8241ba22[VHD](600.000G//88.477G|a)
Jul 8 15:02:11 iahost-xcpng-server2 SM: [22617] ['/usr/bin/vhd-util', 'coalesce', '--debug', '-n', '/dev/VG_XenStorage-88d7607c-f807-3b06-6f70-2dcb319d97ea/VHD-8241ba22-3125-4f45-b3b1-254792a525c7']
```

-

You shouldn't be nervous about a home lab.

You have backups, right?

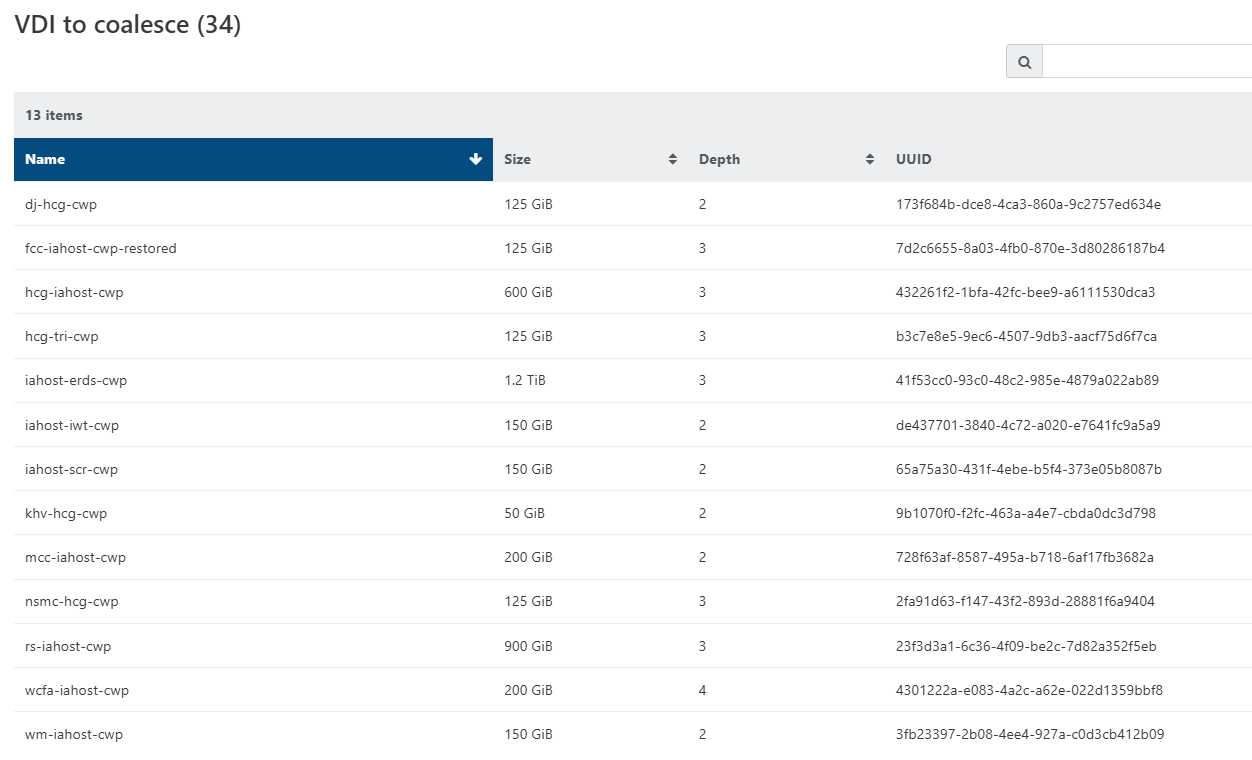

It means there are 34 VDIs that will be coalesced in the future. You can check whether coalesce is working by watching that number (34) and seeing whether it goes down.

If it doesn't, check the SM log to understand what's going on. Also, does an SR scan work?
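When reading the SM log, it can help to pull out just the GC milestones. A minimal sketch, assuming the message formats seen in the SMlog excerpt posted earlier in this thread (`Aborting GC/coalesce`, `Coalesce candidate: *<id>[VHD](...) (tree height N)`, `Running VHD coalesce on *<id>[...]`); other SM versions may word these differently. `gc_events` and `GCEvent` are hypothetical names.

```python
import re
from typing import Iterable, List, NamedTuple, Optional


class GCEvent(NamedTuple):
    time: str                    # "Mon D HH:MM:SS" as written in SMlog (no year)
    pid: int                     # process id in brackets after the SM/SMGC tag
    kind: str                    # "abort", "candidate" or "running"
    vdi: Optional[str]           # short VDI id printed by SMGC, e.g. "8241ba22"
    tree_height: Optional[int]   # only reported on "candidate" lines


LINE = re.compile(
    r"^(?P<time>[A-Z][a-z]{2}\s+\d{1,2}\s+\d{2}:\d{2}:\d{2})\s+\S+\s+"
    r"(?:SM|SMGC):\s+\[(?P<pid>\d+)\]\s+(?P<msg>.*)$"
)
CANDIDATE = re.compile(r"Coalesce candidate: \*(?P<vdi>[0-9a-f]+)\[.*\(tree height (?P<h>\d+)\)")
RUNNING = re.compile(r"Running VHD coalesce on \*(?P<vdi>[0-9a-f]+)\[")


def gc_events(lines: Iterable[str]) -> List[GCEvent]:
    """Extract abort / candidate / running GC events, in log order."""
    events = []
    for line in lines:
        m = LINE.match(line.rstrip("\n"))
        if not m:
            continue
        time, pid, msg = m["time"], int(m["pid"]), m["msg"]
        if "Aborting GC/coalesce" in msg:
            events.append(GCEvent(time, pid, "abort", None, None))
            continue
        c = CANDIDATE.search(msg)
        if c:
            events.append(GCEvent(time, pid, "candidate", c["vdi"], int(c["h"])))
            continue
        r = RUNNING.search(msg)
        if r:
            events.append(GCEvent(time, pid, "running", r["vdi"], None))
    return events
```

Reading the events in order (for example an `abort` followed later by a `running` event, as in the excerpt above) makes it easier to tell whether the GC resumed and which VDI chain it is working on.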