Internal error: Not_found after Vinchin backup

-

@olivierlambert I did that on one and noticed a task API call: sr.scan. I opened the raw log and am seeing errors. Attached: SR Scan Errors.txt

-

Is that due to the Master being disconnected from the SR? That is the one I was referring to in my previous topic post, "Pool Master".

-

Just keeps bouncing over and over

-

So even taking Vinchin out of the picture for now, I am still worried that xcp-ng will run the Garbage Collection and disconnect the SR, bringing the VM down again.

-

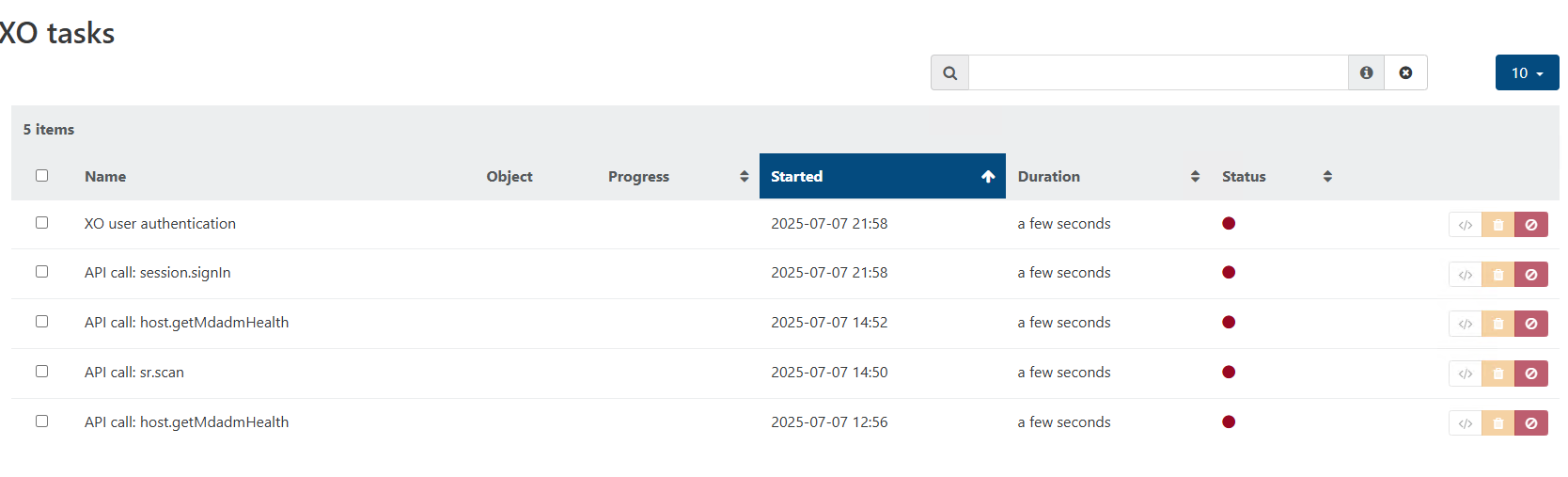

Ignore the XO tasks panel, it's not important. About the rest, please use it and report if you continue to have issues.

-

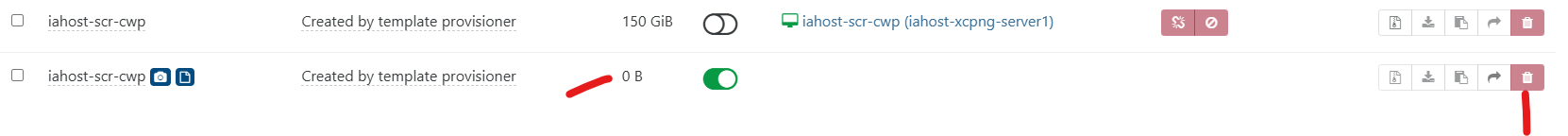

I deleted the snapshot from this one vm.

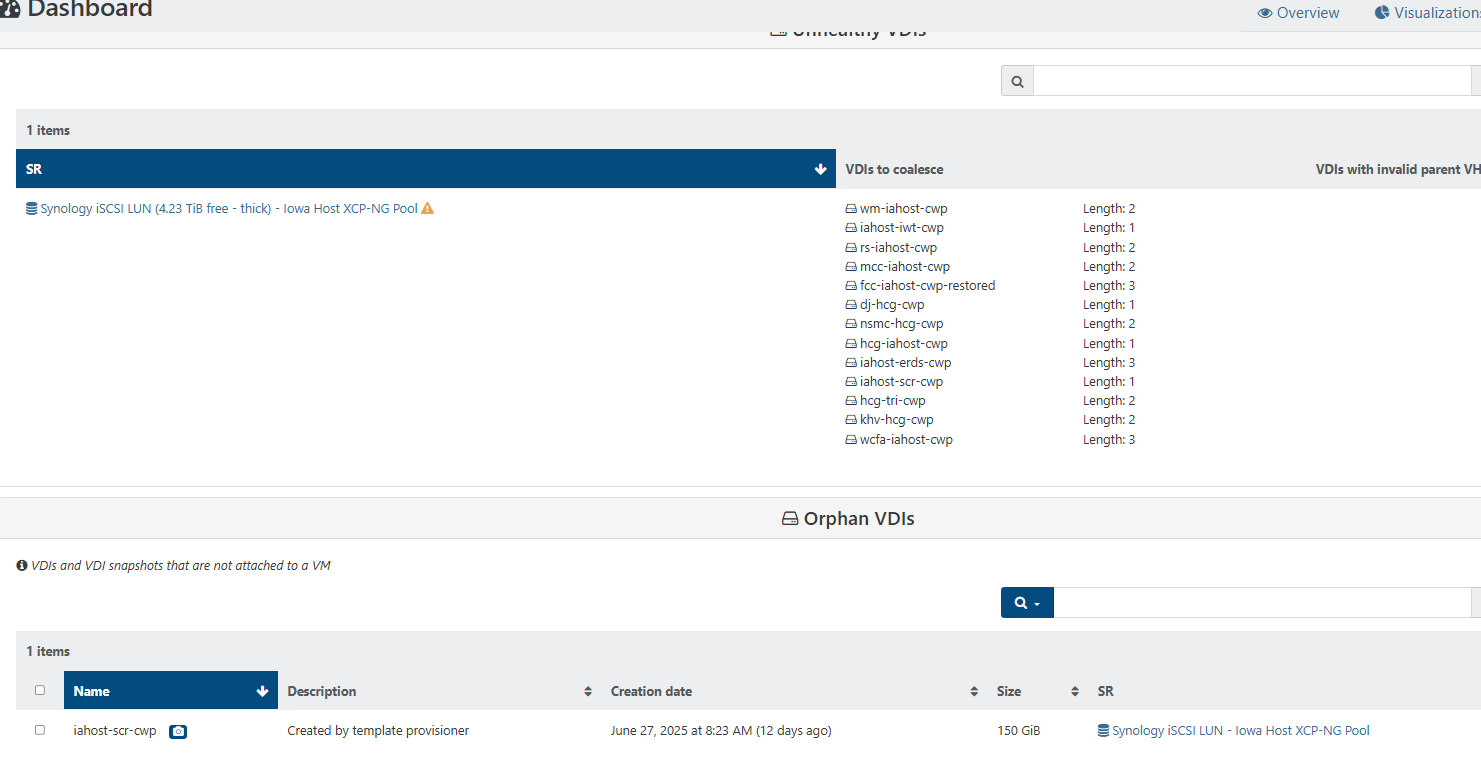

However, that one remains in the list on here:

-

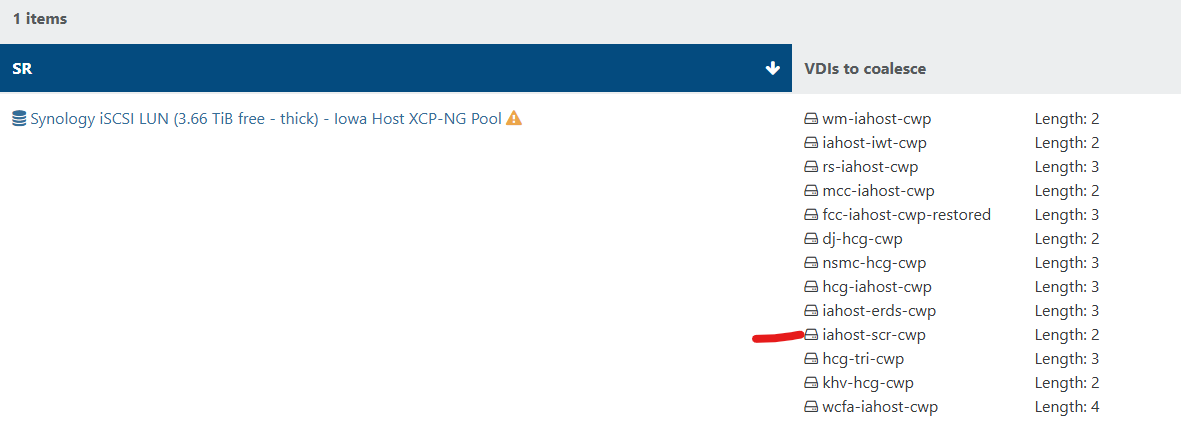

You can tell that a coalesce is pending or running when the SR view in XO shows that garbage collection is planned.

-

What does this mean exactly? When will this happen? I'm sorry I am very nervous.

-

I don't know if this helps from the SMlog

    [15:18 iahost-xcpng-server2 ~]# grep -i "coalesce" /var/log/SMlog
    Jul 8 14:32:53 iahost-xcpng-server2 SM: [31275] Aborting GC/coalesce
    Jul 8 14:33:00 iahost-xcpng-server2 SM: [31789] Entering doesFileHaveOpenHandles with file: /dev/mapper/VG_XenStorage--88d7607c--f807--3b06--6f70--2dcb319d97ea-coalesce_8241ba22--3125--4f45--b3b1--254792a525c7_1
    Jul 8 14:33:00 iahost-xcpng-server2 SM: [31789] Entering findRunningProcessOrOpenFile with params: ['/dev/mapper/VG_XenStorage--88d7607c--f807--3b06--6f70--2dcb319d97ea-coalesce_8241ba22--3125--4f45--b3b1--254792a525c7_1', False]
    Jul 8 14:33:00 iahost-xcpng-server2 SM: [31789] ['/sbin/dmsetup', 'remove', '/dev/mapper/VG_XenStorage--88d7607c--f807--3b06--6f70--2dcb319d97ea-coalesce_8241ba22--3125--4f45--b3b1--254792a525c7_1']
    Jul 8 14:59:34 iahost-xcpng-server2 SMGC: [20458] Coalesced size = 316.035G
    Jul 8 14:59:34 iahost-xcpng-server2 SMGC: [20458] Coalesce candidate: *8241ba22[VHD](600.000G//88.477G|n) (tree height 5)
    Jul 8 14:59:35 iahost-xcpng-server2 SMGC: [20458] Coalesced size = 316.035G
    Jul 8 14:59:35 iahost-xcpng-server2 SMGC: [20458] Coalesce candidate: *8241ba22[VHD](600.000G//88.477G|a) (tree height 5)
    Jul 8 14:59:35 iahost-xcpng-server2 SM: [20458] ['/sbin/lvremove', '-f', '/dev/VG_XenStorage-88d7607c-f807-3b06-6f70-2dcb319d97ea/coalesce_8241ba22-3125-4f45-b3b1-254792a525c7_1']
    Jul 8 14:59:35 iahost-xcpng-server2 SM: [20458] ['/sbin/dmsetup', 'status', 'VG_XenStorage--88d7607c--f807--3b06--6f70--2dcb319d97ea-coalesce_8241ba22--3125--4f45--b3b1--254792a525c7_1']
    Jul 8 14:59:36 iahost-xcpng-server2 SMGC: [20458] Coalesced size = 316.035G
    Jul 8 14:59:36 iahost-xcpng-server2 SMGC: [20458] Coalesce candidate: *8241ba22[VHD](600.000G//88.477G|a) (tree height 5)
    Jul 8 14:59:36 iahost-xcpng-server2 SM: [20458] ['/sbin/lvcreate', '-n', 'coalesce_8241ba22-3125-4f45-b3b1-254792a525c7_1', '-L', '4', 'VG_XenStorage-88d7607c-f807-3b06-6f70-2dcb319d97ea', '--addtag', 'journaler', '-W', 'n']
    Jul 8 15:01:41 iahost-xcpng-server2 SMGC: [20458] Coalesced size = 316.035G
    Jul 8 15:02:11 iahost-xcpng-server2 SMGC: [20458] Running VHD coalesce on *8241ba22[VHD](600.000G//88.477G|a)
    Jul 8 15:02:11 iahost-xcpng-server2 SM: [22617] ['/usr/bin/vhd-util', 'coalesce', '--debug', '-n', '/dev/VG_XenStorage-88d7607c-f807-3b06-6f70-2dcb319d97ea/VHD-8241ba22-3125-4f45-b3b1-254792a525c7']

-

You shouldn't be nervous for a home lab

You have backups right?

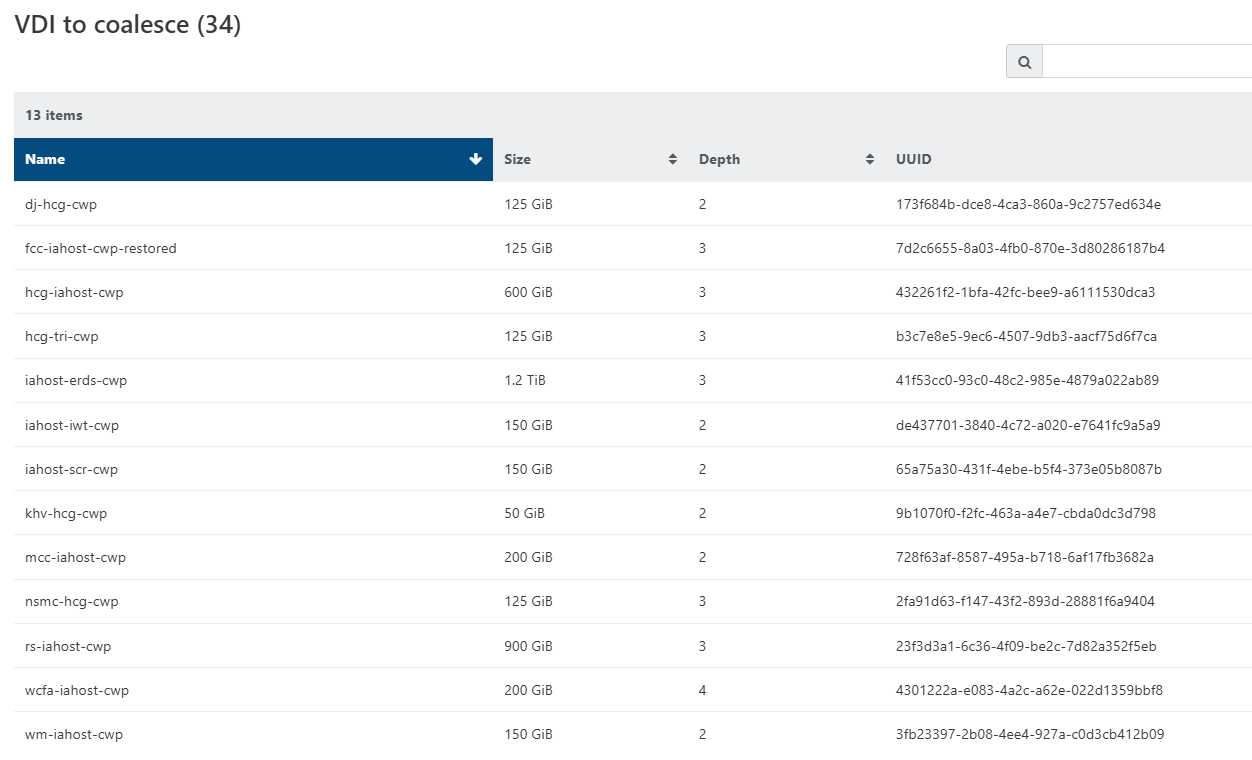

It means there are 34 VDIs that will be coalesced in the future. You can check whether coalesce is working by watching that number (34) and checking that it goes down.

If it doesn't, check the SM log to understand what's going on. Also, does an SR scan work?

-

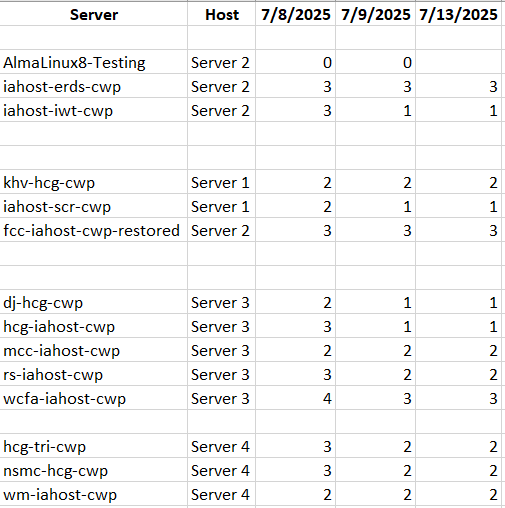

@olivierlambert So the count has gone down to 25. The host that all of these servers were on of course disconnected from the SR again. Is there a way to run the Garbage Collection and/or Coalesce on one host only? I was thinking if I move the VMs one at a time over to a host that has nothing else on it I could run that against a powered off VM to clean it up. Then move it to another host and power it back on. Then to the next and next until it's all cleaned up. Does that make sense?

-

The number is going down: excellent news! Just be patient now

I would advise just letting it run; trying to outsmart the storage stack almost never works.

-

@olivierlambert What entry would I look for to see a successful and/or failed Coalesce? I'm looking at the SMlog.

-

Usually "grep -i exception" on /var/log/SMlog will report failures. But as long as you see the number going down, it's OK.

-
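
For anyone following along, the exception check above could be wrapped in a small helper so it is easy to re-run. This is only a sketch: `smlog_exceptions` is a made-up name (not part of XCP-ng), the default path is the one mentioned in this thread, and the line limit of 20 is an arbitrary choice.

```shell
#!/bin/sh
# Sketch only: smlog_exceptions is a hypothetical helper, not an XCP-ng tool.
# Usage: smlog_exceptions [logfile] [max_lines]
smlog_exceptions() {
    log="${1:-/var/log/SMlog}"   # default path as mentioned in the thread
    max="${2:-20}"               # assumed default: show the last 20 matches
    # -i makes the match case-insensitive, so "Exception" and "EXCEPTION" both count
    grep -i "exception" "$log" | tail -n "$max"
}
```

Running `smlog_exceptions` with no arguments on the host prints the most recent matching lines from the current log file. No output means no exception lines were found in that file, which says nothing about older, rotated logs.

-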

@olivierlambert Thank you. It's just that the host that some of these VMs are on is disconnecting the SR from the host. So I have been shutting them down and moving them to another host and powering back on. I was just hoping I could finish the coalesce for them manually to prevent unknown downtimes.

-

@olivierlambert Should I not worry about deleting any more of the extra 0B disks listed on the SR page's Disks tab and just watch the number on this page?

-

You have a rather long chain now, but it should coalesce anyway

-

Well I have not had any issues since the 9th which is when I disabled Vinchin Backups. However, my count did not go down since then either.

-

So you have to dig in the SMlog to check what's going on
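
As a starting point, something like the following could give a quick overview of coalesce activity in the log. It is a rough sketch, not an official tool: `coalesce_summary` is a made-up name, and the three message strings are simply the ones visible in the SMlog excerpt posted earlier in this thread, so they may differ on other versions.

```shell
#!/bin/sh
# Sketch only: coalesce_summary is a hypothetical helper, not an XCP-ng tool.
# It counts lines containing message strings seen in the SMlog excerpt above.
# Usage: coalesce_summary [logfile]
coalesce_summary() {
    log="${1:-/var/log/SMlog}"   # default path as mentioned in the thread
    for pattern in "Coalesce candidate" "Running VHD coalesce" "Aborting GC/coalesce"; do
        # -c counts matching lines, -F treats the pattern as a fixed string;
        # "|| true" keeps going when grep finds nothing (it still prints 0)
        count=$(grep -cF -- "$pattern" "$log" || true)
        printf '%s: %s\n' "$pattern" "$count"
    done
}
```

The counts only cover the file passed in, so older, rotated logs would need to be checked separately. Candidates that keep appearing without a matching "Running VHD coalesce" line, or repeated aborts, are the entries worth reading in full.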